batch normalization - layer normalization - instance normalization - group normalization


feature normalization (batch normalization - layer normalization - instance normalization - group normalization)

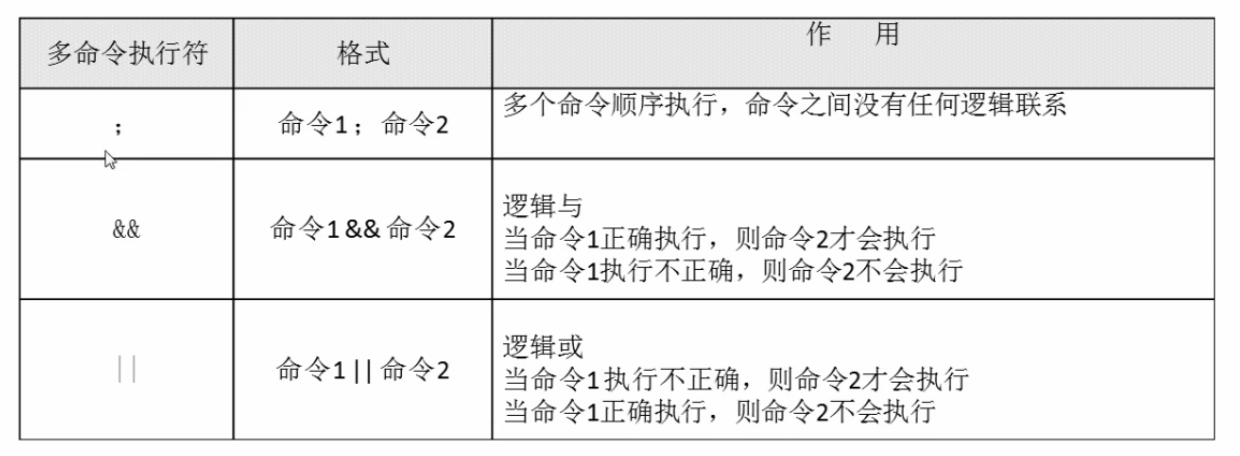

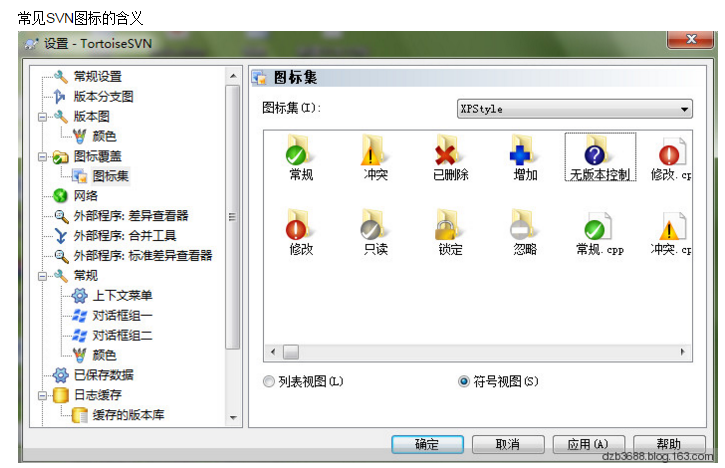

1. 4D tensor ($N \times C \times H \times W$)

The size of the feature maps is $N \times C \times H \times W$ ($N = 4$ in this example). The four axes of the tensor indicate the number of samples, the number of channels, and the height and width of a channel, respectively.

Different normalizers estimate statistics along different axes.

[Differentiable Learning-to-Normalize via Switchable Normalization]

2. batch normalization

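Batch normalization normalizes along the batch direction: for each channel, the mean and variance are computed over the $N \times H \times W$ values. It is sensitive to the batch size, because the mean and variance are computed on a single batch each time. If the batch size is too small, the computed mean and variance are not representative of the whole data distribution (they do not reflect the global statistics).

Normalization is applied to the same feature dimension across all samples in a batch.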
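The batch-size sensitivity described above can be illustrated numerically. The following is a minimal sketch, not a benchmark: it assumes a single feature drawn from a standard normal distribution (true mean 0), and the helper name `batch_mean_spread` and the trial count are illustrative choices. It measures how much the per-batch mean fluctuates from batch to batch for a small and a large batch size.

```python
import numpy as np

rng = np.random.default_rng(0)

def batch_mean_spread(batch_size, trials=1000):
    """Standard deviation of the per-batch mean across many random batches."""
    # Each row is one batch of `batch_size` samples of a single feature,
    # drawn from N(0, 1), so the global mean is 0.
    batches = rng.normal(loc=0.0, scale=1.0, size=(trials, batch_size))
    return batches.mean(axis=1).std()

spread_small = batch_mean_spread(batch_size=2)
spread_large = batch_mean_spread(batch_size=64)

print(f"batch size 2:  spread of batch means = {spread_small:.3f}")
print(f"batch size 64: spread of batch means = {spread_large:.3f}")
```

The spread for batch size 2 should come out several times larger than for batch size 64, which is the sense in which small-batch statistics fail to reflect the global statistics.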

https://www.zhihu.com/


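Batch normalization computes the mean and variance over [batch, height, width], so the statistics have shape [channels]. The $\beta$ and $\gamma$ parameters applied afterwards, which preserve the layer's representational capacity, also have shape [channels].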
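The reduction over [batch, height, width] can be sketched in NumPy. This is a minimal sketch under stated assumptions: it uses the $N \times C \times H \times W$ layout from section 1, so the reduced axes are `(0, 2, 3)`; the function name `batch_norm` and the value of `eps` are illustrative; and it covers training-mode statistics only, leaving out the running averages that are typically used at inference time.

```python
import numpy as np

def batch_norm(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization for an (N, C, H, W) array."""
    # Reduce over batch and spatial axes: one mean/variance per channel.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)  # shape (1, C, 1, 1)
    var = x.var(axis=(0, 2, 3), keepdims=True)    # shape (1, C, 1, 1)
    x_hat = (x - mean) / np.sqrt(var + eps)
    # gamma and beta have shape (C,); reshape so they broadcast per channel.
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(4, 3, 5, 5))  # N=4, C=3, H=W=5
gamma = np.ones(3)   # scale, shape [channels]
beta = np.zeros(3)   # shift, shape [channels]

y = batch_norm(x, gamma, beta)
print(y.mean(axis=(0, 2, 3)))  # per-channel means, close to 0
print(y.std(axis=(0, 2, 3)))   # per-channel standard deviations, close to 1
```

With `gamma` set to ones and `beta` to zeros, each channel of the output has approximately zero mean and unit variance; learned values of $\gamma$ and $\beta$ let the layer undo or rescale the normalization when that helps.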

3. layer normalization

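Layer normalization computes the mean and variance over [height, width, channels], so the statistics have shape [batch]. The $\beta$ and $\gamma$ parameters applied afterwards, which preserve the layer's representational capacity, have shape [channels].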
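A corresponding NumPy sketch for layer normalization follows. It assumes the $N \times C \times H \times W$ layout from section 1, so the reduced axes are `(1, 2, 3)`, and it keeps $\gamma$ and $\beta$ at shape [channels] as stated above (some libraries use a different parameter shape). The function name `layer_norm` and the value of `eps` are illustrative. The example also checks that a sample's output does not depend on the other samples in the batch.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    """Layer normalization for an (N, C, H, W) array."""
    # Reduce over channel and spatial axes: one mean/variance per sample.
    mean = x.mean(axis=(1, 2, 3), keepdims=True)  # shape (N, 1, 1, 1)
    var = x.var(axis=(1, 2, 3), keepdims=True)    # shape (N, 1, 1, 1)
    x_hat = (x - mean) / np.sqrt(var + eps)
    # gamma and beta have shape (C,); reshape so they broadcast per channel.
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(4, 3, 5, 5))  # N=4, C=3, H=W=5
gamma = np.ones(3)
beta = np.zeros(3)

full = layer_norm(x, gamma, beta)         # normalize the whole batch
single = layer_norm(x[:1], gamma, beta)   # normalize the first sample alone

# The first sample is normalized identically in both cases.
print(np.allclose(full[:1], single))
```

Because the statistics are computed per sample, the result is independent of the batch size, unlike batch normalization.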

4. batch normalization - layer normalization

https://www.zhihu.com/

5. instance normalization

6. group normalization

7. batch normalization - layer normalization - instance normalization - group normalization

Each subplot shows a feature map tensor, with $N$ as the batch axis, $C$ as the channel axis, and $(H, W)$ as the spatial axes. The pixels in blue are normalized by the same mean and variance, computed by aggregating the values of these pixels.

[Group Normalization]
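The four schemes in the figure differ only in which axes share a mean and variance. The sketch below makes that concrete in NumPy for an $N \times C \times H \times W$ array. It is a simplified illustration, not a reference implementation: the helper names `normalize` and `group_norm`, the group count, and the value of `eps` are assumptions, and the learnable $\gamma$ and $\beta$ are left out.

```python
import numpy as np

def normalize(x, axes, eps=1e-5):
    """Normalize x with statistics shared over `axes`; also return the means."""
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps), mean

def group_norm(x, num_groups, eps=1e-5):
    """Group normalization: split C into groups, normalize each group per sample."""
    n, c, h, w = x.shape
    assert c % num_groups == 0, "C must be divisible by num_groups"
    grouped = x.reshape(n, num_groups, c // num_groups, h, w)
    x_hat, mean = normalize(grouped, axes=(2, 3, 4), eps=eps)
    return x_hat.reshape(n, c, h, w), mean

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 6, 5, 5))  # N=4, C=6, H=W=5

bn_out, bn_mean = normalize(x, axes=(0, 2, 3))  # batch norm: per channel
ln_out, ln_mean = normalize(x, axes=(1, 2, 3))  # layer norm: per sample
in_out, in_mean = normalize(x, axes=(2, 3))     # instance norm: per sample and channel
gn_out, gn_mean = group_norm(x, num_groups=2)   # group norm: per sample and group

# Number of distinct means each scheme computes: C, N, N*C, N*G.
print(bn_mean.size, ln_mean.size, in_mean.size, gn_mean.size)  # 6 4 24 8
```

Under these definitions, group normalization with a single group coincides with layer normalization, and with one group per channel it coincides with instance normalization.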
