cuda数据复制与计算重叠

数据复制与计算的重叠

深入学习:

CUDA流:最佳实践和常见陷阱

非默认流中执行主机到设备和设备到主机的内存传输。

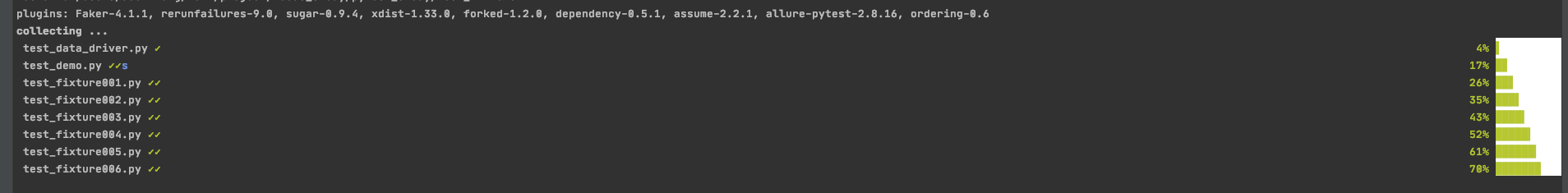

复制与计算重叠的代码示例

第一个示例适用于数据的条目数能被流的数量整除的情况,第二个示例则是不能整除的情况。

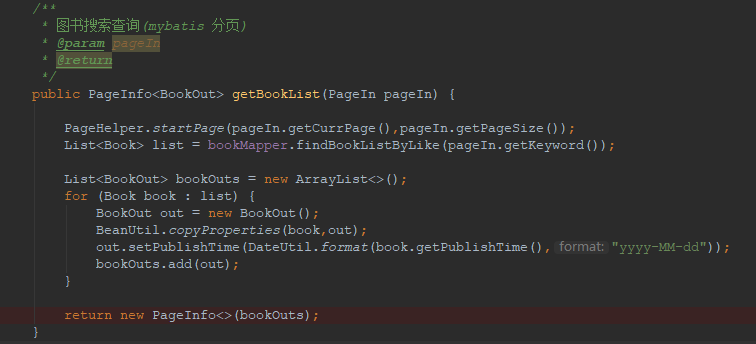

N可被流的数量整除

// "Simple" version where number of entries is evenly divisible by number of streams.// Set to a ridiculously low value to clarify mechanisms of the technique.const uint64_t num_entries = 10;const uint64_t num_iters = 1UL << 10;// Allocate memory for all data entries. Make sure to pin host memory.cudaMallocHost(&data_cpu, sizeof(uint64_t)*num_entries);cudaMalloc (&data_gpu, sizeof(uint64_t)*num_entries);// Set the number of streams.const uint64_t num_streams = 2;// Create an array of streams containing number of streamscudaStream_t streams[num_streams];for (uint64_t stream = 0; stream < num_streams; stream++)cudaStreamCreate(&streams[stream]);// Set number of entries for each "chunk". Assumes `num_entires % num_streams == 0`.const uint64_t chunk_size = num_entries / num_streams;// For each stream, calculate indices for its chunk of full dataset and then, HtoD copy, compute, DtoH copy.for (uint64_t stream = 0; stream < num_streams; stream++) {// Get start index in full dataset for this stream's work.const uint64_t lower = chunk_size*stream;// Stream-indexed (`data+lower`) and chunk-sized HtoD copy in the non-default stream// `streams[stream]`.cudaMemcpyAsync(data_gpu+lower, data_cpu+lower,sizeof(uint64_t)*chunk_size, cudaMemcpyHostToDevice,streams[stream]);// Stream-indexed (`data_gpu+lower`) and chunk-sized compute in the non-default stream// `streams[stream]`.decrypt_gpu<<<80*32, 64, 0, streams[stream]>>>(data_gpu+lower, chunk_size, num_iters);// Stream-indexed (`data+lower`) and chunk-sized DtoH copy in the non-default stream// `streams[stream]`.cudaMemcpyAsync(data_cpu+lower, data_gpu+lower,sizeof(uint64_t)*chunk_size, cudaMemcpyDeviceToHost,streams[stream]);}// Destroy streams.for (uint64_t stream = 0; stream < num_streams; stream++)cudaStreamDestroy(streams[stream]);

N不可被流的数量整除

// Able to handle when `num_entries % num_streams != 0`.const uint64_t num_entries = 10;const uint64_t num_iters = 1UL << 10;cudaMallocHost(&data_cpu, sizeof(uint64_t)*num_entries);cudaMalloc (&data_gpu, sizeof(uint64_t)*num_entries);// Set the number of streams to not evenly divide num_entries.const uint64_t num_streams = 3;cudaStream_t streams[num_streams];for (uint64_t stream = 0; stream < num_streams; stream++)cudaStreamCreate(&streams[stream]);// Use round-up division (`sdiv`, defined in helper.cu) so `num_streams*chunk_size`// is never less than `num_entries`.// This can result in `num_streams*chunk_size` being greater than `num_entries`, meaning// we will need to guard against out-of-range errors in the final "tail" stream (see below).const uint64_t chunk_size = sdiv(num_entries, num_streams);for (uint64_t stream = 0; stream < num_streams; stream++) {const uint64_t lower = chunk_size*stream;// For tail stream `lower+chunk_size` could be out of range, so here we guard against that.const uint64_t upper = min(lower+chunk_size, num_entries);// Since the tail stream width may not be `chunk_size`,// we need to calculate a separate `width` value.const uint64_t width = upper-lower;// Use `width` instead of `chunk_size`.cudaMemcpyAsync(data_gpu+lower, data_cpu+lower,sizeof(uint64_t)*width, cudaMemcpyHostToDevice,streams[stream]);// Use `width` instead of `chunk_size`.decrypt_gpu<<<80*32, 64, 0, streams[stream]>>>(data_gpu+lower, width, num_iters);// Use `width` instead of `chunk_size`.cudaMemcpyAsync(data_cpu+lower, data_gpu+lower,sizeof(uint64_t)*width, cudaMemcpyDeviceToHost,streams[stream]);}// Destroy streams.for (uint64_t stream = 0; stream < num_streams; stream++)cudaStreamDestroy(streams[stream]);

例子:

#include <cstdint>#include <iostream>#include "helpers.cuh"#include "encryption.cuh"void encrypt_cpu(uint64_t * data, uint64_t num_entries,uint64_t num_iters, bool parallel=true) {#pragma omp parallel for if (parallel)for (uint64_t entry = 0; entry < num_entries; entry++)data[entry] = permute64(entry, num_iters);}__global__void decrypt_gpu(uint64_t * data, uint64_t num_entries,uint64_t num_iters) {const uint64_t thrdID = blockIdx.x*blockDim.x+threadIdx.x;const uint64_t stride = blockDim.x*gridDim.x;for (uint64_t entry = thrdID; entry < num_entries; entry += stride)data[entry] = unpermute64(data[entry], num_iters);}bool check_result_cpu(uint64_t * data, uint64_t num_entries,bool parallel=true) {uint64_t counter = 0;#pragma omp parallel for reduction(+: counter) if (parallel)for (uint64_t entry = 0; entry < num_entries; entry++)counter += data[entry] == entry;return counter == num_entries;}int main (int argc, char * argv[]) {Timer timer;Timer overall;const uint64_t num_entries = 1UL << 26;const uint64_t num_iters = 1UL << 10;const bool openmp = true;const uint64_t num_streams = 32;timer.start();uint64_t * data_cpu, * data_gpu;cudaMallocHost(&data_cpu, sizeof(uint64_t)*num_entries);cudaMalloc (&data_gpu, sizeof(uint64_t)*num_entries);timer.stop("allocate memory");check_last_error();encrypt_cpu(data_cpu, num_entries, num_iters, openmp);timer.start();cudaStream_t streams[num_streams];for (uint64_t stream = 0; stream < num_streams; stream++)cudaStreamCreate(&streams[stream]);const uint64_t chunk_size = sdiv(num_entries, num_streams);for (uint64_t stream = 0; stream < num_streams; stream++) {const uint64_t lower = chunk_size*stream;const uint64_t upper = min(lower+chunk_size, num_entries);const uint64_t width = upper-lower;cudaMemcpyAsync(data_gpu+lower, data_cpu+lower,sizeof(uint64_t)*width, cudaMemcpyHostToDevice,streams[stream]);decrypt_gpu<<<80*32, 64, 0, streams[stream]>>>(data_gpu+lower, width, num_iters);cudaMemcpyAsync(data_cpu+lower, data_gpu+lower,sizeof(uint64_t)*width, cudaMemcpyDeviceToHost,streams[stream]);}for (uint64_t stream = 0; stream < num_streams; stream++)cudaStreamSynchronize(streams[stream]);for (uint64_t stream = 0; stream < num_streams; stream++)cudaStreamDestroy(streams[stream]);timer.stop("encrypt data on CPU");overall.start();timer.start();//cudaMemcpy(data_gpu, data_cpu,// sizeof(uint64_t)*num_entries, cudaMemcpyHostToDevice);timer.stop("copy data from CPU to GPU");check_last_error();timer.start();// decrypt_gpu<<<80*32, 64>>>(data_gpu, num_entries, num_iters);timer.stop("decrypt data on GPU");check_last_error();timer.start();//cudaMemcpy(data_cpu, data_gpu,// sizeof(uint64_t)*num_entries, cudaMemcpyDeviceToHost);timer.stop("copy data from GPU to CPU");overall.stop("total time on GPU");check_last_error();timer.start();const bool success = check_result_cpu(data_cpu, num_entries, openmp);std::cout << "STATUS: test "<< ( success ? "passed" : "failed")<< std::endl;timer.stop("checking result on CPU");timer.start();cudaFreeHost(data_cpu);cudaFree (data_gpu);timer.stop("free memory");check_last_error();}

还没有评论,来说两句吧...