Building a CDH 6.3 Big Data Platform

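Contents

- I. CDH Overview
- II. Pre-installation Preparation
  - 2.1 Environment
  - 2.2 Pre-installation Steps
    - 2.2.1 Hostname Configuration (All Nodes)
    - 2.2.2 Firewall and SELinux Configuration (All Nodes)
    - 2.2.3 NTP Service Configuration (All Nodes)
    - 2.2.4 Install Python (All Nodes)
    - 2.2.5 Database Requirements (Master Node)
    - 2.2.6 Install the JDK (All Nodes)
    - 2.2.7 Download the Installation Packages (All Nodes)
    - 2.2.8 Install the MySQL JDBC Driver (Master Node)
    - 2.2.9 Create the CDH Metadata Database, Users, and the amon Service Database (Master Node)
    - 2.2.10 Change the Linux swappiness Parameter (All Nodes)
    - 2.2.11 Disable Transparent Huge Pages (All Nodes)
- III. CDH Deployment
  - 3.1 Offline Deployment of the CM Server and Agents
    - 3.1.1 Create the Software Directory and Extract the Software (All Nodes)
    - 3.1.2 Deploy the CM Server on hp1, the Master Node (Master Node)
    - 3.1.3 Deploy the CM Agent (All Nodes)
    - 3.1.4 Point the Agent Configuration at the Server Node hp1 (All Nodes)
    - 3.1.5 Server Configuration (Master Node)
  - 3.2 Deploy the Offline Parcel Repository on the Master Node (Master Node)
    - 3.2.1 Install httpd
    - 3.2.2 Deploy the Offline Parcel Repository (Master Node)
    - 3.2.3 Access the Page
  - 3.3 Start the Server on the Master Node (Master Node)
  - 3.4 Start the Agent on All Nodes (All Nodes)
  - 3.5 Web UI Steps
    - 3.5.1 Log In on Port 7180 of the Master Node
    - 3.5.2 Choose the Free Edition
    - 3.5.3 Create the Cluster
- FAQ
  - 1. CDH File Permission Issue
  - 2. CDH YARN Resource Package Issue
  - 3. Hue Load Balancer Fails to Start
- References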

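I. CDH Overview

CDH (Cloudera's Distribution Including Apache Hadoop) is Cloudera's Hadoop distribution. It provides a web-based user interface and supports most Hadoop components, including HDFS, MapReduce, Hive, Pig, HBase, ZooKeeper, and Sqoop, which makes a big data platform considerably easier to install and use.

Because its component set is complete and it is easy to install and maintain, many companies in China have deployed CDH. For this walkthrough I chose CDH 6.3.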

II. Pre-installation Preparation

2.1 Environment

Hosts:

| IP | Hostname |
|---|---|
| 10.31.1.123 | hp1 |
| 10.31.1.124 | hp2 |
| 10.31.1.125 | hp3 |
| 10.31.1.126 | hp4 |

Hardware:

Each host has a 4-core CPU, 8 GB of memory, and a 500 GB disk.

Software versions:

| Name | Version |
|---|---|
| Operating system | CentOS release 7.8 (Final), 64-bit |
| JDK | 1.8 |
| Database | MySQL 5.6.49 |
| JDBC | MySQL Connector Java 5.1.38 |
| Cloudera Manager | 6.3.1 |
| CDH | 6.3.1 |

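2.2 Pre-installation Steps

2.2.1 Hostname Configuration (All Nodes)

Set the hostname on each host by running the matching command on that host:

    hostnamectl set-hostname hp1
    hostnamectl set-hostname hp2
    hostnamectl set-hostname hp3
    hostnamectl set-hostname hp4

Configure /etc/hosts on all four machines:

    vi /etc/hosts

    127.0.0.1 localhost
    10.31.1.123 hp1
    10.31.1.124 hp2
    10.31.1.125 hp3
    10.31.1.126 hp4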

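Configure /etc/sysconfig/network on all four machines. The example below is from 10.31.1.123; the other three hosts are configured the same way with their own hostnames:

    [root@10-31-1-123 ~]# more /etc/sysconfig/network
    # Created by anaconda
    HOSTNAME=hp1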
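Editing /etc/hosts by hand on four machines is easy to get wrong. As a minimal sketch (the helper name `add_hosts_entries` is hypothetical and not part of the original steps), the entries above could be appended idempotently, skipping any mapping that is already present:

```shell
# Hypothetical helper: append the cluster's host entries to a hosts file,
# skipping any IP/hostname mapping the file already contains.
add_hosts_entries() {
    hosts_file="$1"
    while read -r ip name; do
        # awk exits 0 only if some line starts with this IP and lists this name.
        if ! awk -v ip="$ip" -v name="$name" \
            '$1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
             END { exit !found }' "$hosts_file"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done <<'EOF'
10.31.1.123 hp1
10.31.1.124 hp2
10.31.1.125 hp3
10.31.1.126 hp4
EOF
}

# Usage (as root, on every node):
#   add_hosts_entries /etc/hosts
```

Because existing mappings are detected first, running the function a second time should leave the file unchanged.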

2.2.2 Firewall and SELinux Configuration (All Nodes)

Disable the firewall:

    systemctl disable firewalld
    systemctl stop firewalld

Configure SELinux by editing /etc/selinux/config:

    vi /etc/selinux/config
    # change SELINUX=enforcing to SELINUX=permissive
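A non-interactive equivalent of the edit above, sketched under the assumption that GNU sed is available (as it is on CentOS 7). `set_selinux_permissive` is a hypothetical helper name. The config file is only read at boot, so `setenforce 0` is commonly run alongside it to switch the running system to permissive mode right away.

```shell
# Hypothetical helper: set SELINUX=permissive in an SELinux config file.
# Only the active "SELINUX=" line is rewritten; comment lines and the
# SELINUXTYPE= line do not match the pattern and are left alone.
set_selinux_permissive() {
    sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$1"
}

# Usage (as root, on every node):
#   set_selinux_permissive /etc/selinux/config
#   setenforce 0      # apply to the running system until the next reboot
#   getenforce        # should now print "Permissive"
```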

2.2.3 NTP Service Configuration (All Nodes)

    yum install ntp
    systemctl start ntpd
    systemctl enable ntpd

hp1 acts as the NTP server; the other three hosts synchronize from 10.31.1.123.

    vi /etc/ntp.conf

    restrict 10.31.1.0 mask 255.255.255.0
    server 10.31.1.123

    systemctl restart ntpd
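To confirm that a node has actually synchronized with hp1, the peer list printed by `ntpq -pn` can be inspected: the line whose first character is `*` is the peer currently selected for synchronization. A small sketch (the function name `synced_peer` is made up for illustration) that extracts that peer from the command's output:

```shell
# Hypothetical helper: read `ntpq -pn` output on stdin and print the address
# of the peer marked with a leading "*". Returns non-zero if no peer is
# selected yet.
synced_peer() {
    awk '/^\*/ { print substr($1, 2); found = 1 } END { exit !found }'
}

# Usage (on hp2, hp3, hp4):
#   ntpq -pn | synced_peer
```

On the three client nodes this would be expected to print 10.31.1.123 once synchronization is established, which can take a few minutes after ntpd restarts.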

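2.2.4 Install Python (All Nodes)

CDH requires Python 2.7. It ships with the operating system here, so this step is skipped.

2.2.5 Database Requirements (Master Node)

MySQL 5.6 is installed here; the installation steps are omitted.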

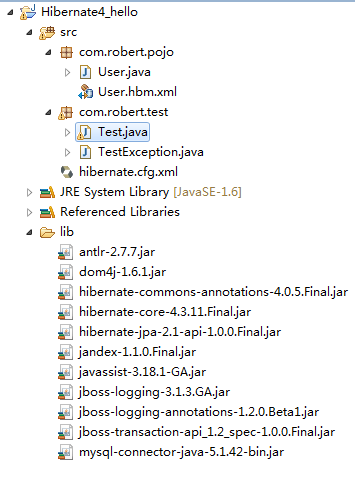

2.2.6 Install the JDK (All Nodes)

JDK 1.8 is installed here:

    cd /usr/
    mkdir java
    cd java
    wget http://download.oracle.com/otn-pub/java/jdk/8u181-b13/96a7b8442fe848ef90c96a2fad6ed6d1/jdk-8u181-linux-x64.tar.gzAuthParam=1534129356_6b3ac55c6a38ba5a54c912855deb6a22
    mv jdk-8u181-linux-x64.tar.gzAuthParam\=1534129356_6b3ac55c6a38ba5a54c912855deb6a22 jdk-8u181-linux-x64.tar.gz
    tar -zxvf jdk-8u181-linux-x64.tar.gz
    vi /etc/profile

    #java
    export JAVA_HOME=/usr/java/jdk1.8.0_181
    export PATH=$JAVA_HOME/bin:$PATH
    export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib

The following output shows that Java was installed successfully:

    [root@10-31-1-123 java]# java -version
    java version "1.8.0_181"
    Java(TM) SE Runtime Environment (build 1.8.0_181-b13)
    Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

2.2.7 Download the Installation Packages (All Nodes)

CM: CM 6.3.1

Link: https://archive.cloudera.com/cm6/6.3.1/repo-as-tarball/cm6.3.1-redhat7.tar.gz

Parcel:

https://archive.cloudera.com/cdh6/6.3.1/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel

https://archive.cloudera.com/cdh6/6.3.1/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha1

https://archive.cloudera.com/cdh6/6.3.1/parcels/manifest.json

The software above has also been packaged onto a network drive and can be downloaded from there:

Link: https://pan.baidu.com/s/1UH50Uweyi7yg6bV7dl02mQ

Extraction code: nx7p

2.2.8 Install the MySQL JDBC Driver (Master Node)

    [root@10-31-1-123 mysql]# mkdir -p /usr/share/java
    [root@10-31-1-123 mysql]# cd /usr/share/java
    [root@10-31-1-123 java]# ll
    total 832
    -rw-r--r--. 1 root root 848067 Jan 15 2014 mysql-connector-java-commercial-5.1.25-bin.jar
    [root@10-31-1-123 java]# mv mysql-connector-java-commercial-5.1.25-bin.jar mysql-connector-java.jar
    [root@10-31-1-123 java]# ll
    total 832
    -rw-r--r--. 1 root root 848067 Jan 15 2014 mysql-connector-java.jar

2.2.9 Create the CDH Metadata Database, Users, and the amon Service Database (Master Node)

    create database cmf DEFAULT CHARACTER SET utf8;
    create database amon DEFAULT CHARACTER SET utf8;
    grant all on cmf.* TO 'cmf'@'%' IDENTIFIED BY 'www.research.com';
    grant all on amon.* TO 'amon'@'%' IDENTIFIED BY 'www.research.com';
    flush privileges;
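The same create/grant pair is repeated for every service database: cmf and amon here, and hive, oozie, and hue later in this article. As an illustrative sketch, a small shell function can generate the statements. `db_setup_sql` is a hypothetical name, and the function does no escaping, so it assumes database names, user names, and passwords that contain no quote characters:

```shell
# Hypothetical helper: print the create/grant statements for one service
# database, in the same form as the statements used in this article.
# Arguments: database name, user name, password (no escaping is performed).
db_setup_sql() {
    db="$1"
    user="$2"
    password="$3"
    printf "create database %s DEFAULT CHARACTER SET utf8;\n" "$db"
    printf "grant all on %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$db" "$user" "$password"
}

# Usage (on the master node; review the output before piping it to mysql):
#   { db_setup_sql cmf cmf 'www.research.com'
#     db_setup_sql amon amon 'www.research.com'
#     echo 'flush privileges;'; } | mysql -u root -p
```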

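2.2.10 Change the Linux swappiness Parameter (All Nodes)

To keep the servers from swapping, which hurts performance, vm.swappiness is usually set to 0 (Cloudera recommends a value of 10 or lower).

    [root@hp1 mysql]# cd /usr/lib/tuned/
    [root@hp1 tuned]# grep "vm.swappiness" * -R
    latency-performance/tuned.conf:vm.swappiness=10
    throughput-performance/tuned.conf:vm.swappiness=10
    virtual-guest/tuned.conf:vm.swappiness = 30

Then change the setting to 0 in each of these files, and sync the modified files to the other machines.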
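The three files can be edited in one pass instead of by hand. A minimal sketch, assuming GNU sed; `set_swappiness` is a hypothetical helper that handles both the `vm.swappiness=10` and the `vm.swappiness = 30` spellings seen in the grep output above:

```shell
# Hypothetical helper: set vm.swappiness to the given value in each listed
# tuned.conf file, with or without spaces around the "=" sign.
# Arguments: value, then one or more files.
set_swappiness() {
    value="$1"
    shift
    for conf in "$@"; do
        sed -i "s/^vm\.swappiness[[:space:]]*=.*/vm.swappiness=${value}/" "$conf"
    done
}

# Usage (as root):
#   set_swappiness 0 $(grep -Rl "vm.swappiness" /usr/lib/tuned/)
#   grep -R "vm.swappiness" /usr/lib/tuned/    # verify the result
```

The value the running kernel is using can be read with `sysctl vm.swappiness`.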

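2.2.11 Disable Transparent Huge Pages (All Nodes)

    [root@hp1 ~]# vim /etc/rc.local

Add the following lines to the file:

    echo never > /sys/kernel/mm/transparent_hugepage/defrag
    echo never > /sys/kernel/mm/transparent_hugepage/enabled

Then sync the file to the other machines and restart all servers.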
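After the reboot it is worth checking that the setting took effect. The kernel marks the active choice in these files with square brackets, for example `always madvise [never]`. A minimal sketch (`thp_is_disabled` is a hypothetical helper name):

```shell
# Hypothetical helper: succeed if the given transparent_hugepage control file
# shows "never" as the active (bracketed) choice.
thp_is_disabled() {
    grep -q '\[never\]' "$1"
}

# Usage (on every node):
#   thp_is_disabled /sys/kernel/mm/transparent_hugepage/enabled && echo "enabled: off"
#   thp_is_disabled /sys/kernel/mm/transparent_hugepage/defrag  && echo "defrag: off"
```

If the check fails after a reboot on CentOS 7, one thing to look at is whether /etc/rc.d/rc.local is executable (`chmod +x /etc/rc.d/rc.local`), since the script is not run at boot otherwise.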

III. CDH Deployment

3.1 Offline Deployment of the CM Server and Agents

3.1.1 Create the Software Directory and Extract the Software (All Nodes)

    [root@10-31-1-123 cdh]# mkdir -p /opt/cloudera-manager
    [root@10-31-1-123 cloudera-manager]# cd /usr/local/cdh/
    [root@10-31-1-123 cdh]# ls -lrth
    total 3.3G
    -rw-r--r--. 1 root root  34K Nov 13 15:46 manifest.json
    -rw-r--r--. 1 root root 1.4G Nov 13 16:10 cm6.3.1-redhat7.tar.gz
    -rw-r--r--. 1 root root   40 Nov 13 16:10 CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha1
    -rw-r--r--. 1 root root 2.0G Nov 13 16:37 CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel
    [root@10-31-1-123 cdh]# tar -zxf cm6.3.1-redhat7.tar.gz -C /opt/cloudera-manager

3.1.2 Deploy the CM Server on hp1, the Master Node (Master Node)

    cd /opt/cloudera-manager/cm6.3.1/RPMS/x86_64/
    rpm -ivh cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm --nodeps --force
    rpm -ivh cloudera-manager-server-6.3.1-1466458.el7.x86_64.rpm --nodeps --force

Installation log:

    warning: cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID b0b19c9f: NOKEY
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:cloudera-manager-daemons-6.3.1-14################################# [100%]
    [root@10-31-1-123 x86_64]# rpm -ivh cloudera-manager-server-6.3.1-1466458.el7.x86_64.rpm --nodeps --force
    warning: cloudera-manager-server-6.3.1-1466458.el7.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID b0b19c9f: NOKEY
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:cloudera-manager-server-6.3.1-146################################# [100%]
    Created symlink from /etc/systemd

3.1.3 Deploy the CM Agent (All Nodes)

    cd /opt/cloudera-manager/cm6.3.1/RPMS/x86_64
    rpm -ivh cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm --nodeps --force
    rpm -ivh cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm --nodeps --force

3.1.4 Point the Agent Configuration at the Server Node hp1 (All Nodes)

sed -i "s/server_host=localhost/server_host=10.31.1.123/g" /etc/cloudera-scm-agent/config.ini
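The sed command above only matches while the value is still `localhost`. A slightly more defensive sketch, assuming GNU sed, replaces whatever value is currently set and reads it back for verification; both function names are hypothetical:

```shell
# Hypothetical helpers for the agent's config.ini.
# set_agent_server_host FILE HOST: rewrite the server_host line, whatever its
# current value is.
set_agent_server_host() {
    sed -i "s/^server_host=.*/server_host=$2/" "$1"
}

# get_agent_server_host FILE: print the configured server_host value.
get_agent_server_host() {
    sed -n 's/^server_host=//p' "$1"
}

# Usage (as root, on every node):
#   set_agent_server_host /etc/cloudera-scm-agent/config.ini 10.31.1.123
#   get_agent_server_host /etc/cloudera-scm-agent/config.ini
```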

3.1.5 Server Configuration (Master Node)

    vim /etc/cloudera-scm-server/db.properties

    com.cloudera.cmf.db.type=mysql
    com.cloudera.cmf.db.host=10.31.1.123
    com.cloudera.cmf.db.name=cmf
    com.cloudera.cmf.db.user=cmf
    com.cloudera.cmf.db.password=www.research.com
    com.cloudera.cmf.db.setupType=EXTERNAL

3.2 Deploy the Offline Parcel Repository on the Master Node (Master Node)

3.2.1 Install httpd

yum install -y httpd

3.2.2 Deploy the Offline Parcel Repository (Master Node)

    mkdir -p /var/www/html/cdh6_parcel
    cp /usr/local/cdh/CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel /var/www/html/cdh6_parcel/
    mv /usr/local/cdh/CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha1 /var/www/html/cdh6_parcel/CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha
    mv /usr/local/cdh/manifest.json /var/www/html/cdh6_parcel/
    systemctl start httpd
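Since the `.sha` file carries the parcel's SHA-1 hash, the 2 GB download can be checked before it is served to the cluster. A minimal sketch, assuming the hash file holds the 40-character hex digest as its first field (`verify_parcel` is a hypothetical helper):

```shell
# Hypothetical helper: compare a parcel's SHA-1 digest with the digest stored
# in its .sha file. Succeeds only when the two match.
# Arguments: parcel file, hash file.
verify_parcel() {
    expected=$(awk '{ print $1; exit }' "$2")
    actual=$(sha1sum "$1" | awk '{ print $1 }')
    [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Usage (on the master node):
#   cd /var/www/html/cdh6_parcel
#   verify_parcel CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel \
#                 CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha && echo "parcel OK"
```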

3.2.3 Access the Page

http://10.31.1.123/cdh6_parcel/

3.3 Start the Server on the Master Node (Master Node)

    [root@10-31-1-123 x86_64]# systemctl start cloudera-scm-server
    [root@10-31-1-123 x86_64]# ll /var/log/cloudera-scm-server/
    total 20
    -rw-r-----. 1 cloudera-scm cloudera-scm 19610 Nov 13 17:29 cloudera-scm-server.log
    -rw-r-----. 1 cloudera-scm cloudera-scm     0 Nov 13 17:29 cmf-server-nio.log
    -rw-r-----. 1 cloudera-scm cloudera-scm     0 Nov 13 17:29 cmf-server-perf.log
    [root@10-31-1-123 x86_64]# tail /var/log/cloudera-scm-server/cloudera-scm-server.log
    at com.mchange.v2.c3p0.WrapperConnectionPoolDataSource.getPooledConnection(WrapperConnectionPoolDataSource.java:195)
    at com.mchange.v2.c3p0.WrapperConnectionPoolDataSource.getPooledConnection(WrapperConnectionPoolDataSource.java:184)
    at com.mchange.v2.c3p0.impl.C3P0PooledConnectionPool$1PooledConnectionResourcePoolManager.acquireResource(C3P0PooledConnectionPool.java:200)
    at com.mchange.v2.resourcepool.BasicResourcePool.doAcquire(BasicResourcePool.java:1086)
    at com.mchange.v2.resourcepool.BasicResourcePool.doAcquireAndDecrementPendingAcquiresWithinLockOnSuccess(BasicResourcePool.java:1073)
    at com.mchange.v2.resourcepool.BasicResourcePool.access$800(BasicResourcePool.java:44)
    at com.mchange.v2.resourcepool.BasicResourcePool$ScatteredAcquireTask.run(BasicResourcePool.java:1810)
    at com.mchange.v2.async.ThreadPoolAsynchronousRunner$PoolThread.run(ThreadPoolAsynchronousRunner.java:648)
    2020-11-13 17:29:26,939 WARN C3P0PooledConnectionPoolManager[identityToken->2t3hq3ad1hj75spfrks4l|3be4f71]-HelperThread-#1:com.mchange.v2.resourcepool.BasicResourcePool: Having failed to acquire a resource, com.mchange.v2.resourcepool.BasicResourcePool@3b0ee03a is interrupting all Threads waiting on a resource to check out. Will try again in response to new client requests.
    2020-11-13 17:29:27,568 INFO main:com.cloudera.enterprise.CommonMain: Statistics not enabled, JMX will not be registered
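The log tail above contains connection-pool warnings, so it helps to wait for an explicit readiness signal before opening the web page. The sketch below polls the log for the line `Started Jetty server`, which is what Cloudera Manager 6 typically logs once the web UI is up; treat the exact message text as an assumption and adjust it if your log differs. `wait_for_cm_server` is a hypothetical helper.

```shell
# Hypothetical helper: poll a Cloudera Manager server log until the assumed
# readiness message appears, or give up after roughly TIMEOUT seconds.
# Arguments: log file, timeout in seconds. Returns 0 when ready, 1 on timeout.
wait_for_cm_server() {
    log="$1"
    timeout="$2"
    waited=0
    until grep -q 'Started Jetty server' "$log" 2>/dev/null; do
        if [ "$waited" -ge "$timeout" ]; then
            return 1
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 0
}

# Usage (on the master node):
#   wait_for_cm_server /var/log/cloudera-scm-server/cloudera-scm-server.log 300 \
#       && echo "CM server is up" || echo "not ready, check the log for errors"
```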

3.4 Start the Agent on All Nodes (All Nodes)

systemctl start cloudera-scm-agent

3.5 Web UI Steps

3.5.1 Log In on Port 7180 of the Master Node

http://10.31.1.123:7180/

Username: admin

Password: admin

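3.5.2 Choose the Free Edition

3.5.3 Create the Cluster

Enter the cluster name.

Enter the cluster hosts; hostnames are used here.

Select the repository.

The versions must match exactly, otherwise the installation will keep failing.

Add the local repository: http://10.31.1.123/cdh6_parcel/

Then change {latest_version} to the current version: 6.3.1

Install the JDK.

Configure SSH login.

Wait for the installation to finish.

Install the parcels.

This completes the first stage of the installation.

Select services:

Default options:

Create the databases:

    create database hive DEFAULT CHARACTER SET utf8;
    grant all on hive.* TO 'hive'@'%' IDENTIFIED BY 'hive';
    create database oozie DEFAULT CHARACTER SET utf8;
    grant all on oozie.* TO 'oozie'@'%' IDENTIFIED BY 'oozie';
    create database hue DEFAULT CHARACTER SET utf8;
    grant all on hue.* TO 'hue'@'%' IDENTIFIED BY 'hue';
    flush privileges;

Hue reports an error at this point; the following makes the MySQL client library available to it:

    mkdir /usr/lib64/mysql
    cp /usr/local/mysql/lib/libmysqlclient.so.18.1.0 /usr/lib64/mysql/
    cd /usr/lib64/mysql/
    ln -s libmysqlclient.so.18.1.0 libmysqlclient.so.18
    [root@hp1 mysql]# more /etc/ld.so.conf
    include ld.so.conf.d/*.conf
    /usr/lib64/mysql
    [root@hp1 mysql]# ldconfig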

Review the changes:

All done:

FAQ

1. CDH File Permission Issue

When Hive runs a statement, it reports insufficient permissions on /user:

    hive>
        > select count(*) from fact_sale;
    Query ID = root_20201119152619_16f496b5-2482-4efb-a26c-e18117b2f10c
    Total jobs = 1
    Launching Job 1 out of 1
    Number of reduce tasks determined at compile time: 1
    In order to change the average load for a reducer (in bytes):
      set hive.exec.reducers.bytes.per.reducer=<number>
    In order to limit the maximum number of reducers:
      set hive.exec.reducers.max=<number>
    In order to set a constant number of reducers:
      set mapreduce.job.reduces=<number>
    org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="/user":hdfs:supergroup:drwxr-xr-x

Solution: root does not own /user, so the permission change has to be made as the hdfs user:

    [root@hp1 ~]# hadoop fs -ls /
    Found 2 items
    drwxrwxrwt   - hdfs supergroup          0 2020-11-15 12:22 /tmp
    drwxr-xr-x   - hdfs supergroup          0 2020-11-15 12:21 /user
    [root@hp1 ~]# hadoop fs -chmod 777 /user
    chmod: changing permissions of '/user': Permission denied. user=root is not the owner of inode=/user
    [root@hp1 ~]# sudo -u hdfs hadoop fs -chmod 777 /user
    [root@hp1 ~]# hadoop fs -ls /
    Found 2 items
    drwxrwxrwt   - hdfs supergroup          0 2020-11-15 12:22 /tmp
    drwxrwxrwx   - hdfs supergroup          0 2020-11-15 12:21 /user
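Making /user world-writable works, but it loosens permissions for every user. A commonly used alternative, shown here only as a sketch and not what the steps above did, is to give the user who runs Hive an HDFS home directory of their own. The hypothetical helper below prints the commands instead of running them, so they can be reviewed first:

```shell
# Hypothetical helper: print the commands that create an HDFS home directory
# for a user and hand ownership of it to that user.
# Assumption: the user's group has the same name as the user.
hdfs_home_cmds() {
    user="$1"
    printf 'sudo -u hdfs hdfs dfs -mkdir -p /user/%s\n' "$user"
    printf 'sudo -u hdfs hdfs dfs -chown %s:%s /user/%s\n' "$user" "$user" "$user"
}

# Usage (on a node with the HDFS client installed):
#   hdfs_home_cmds root          # review the commands
#   hdfs_home_cmds root | sh     # run them
```

With /user/root in place and owned by root, the job should be able to write there without /user itself being opened up.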

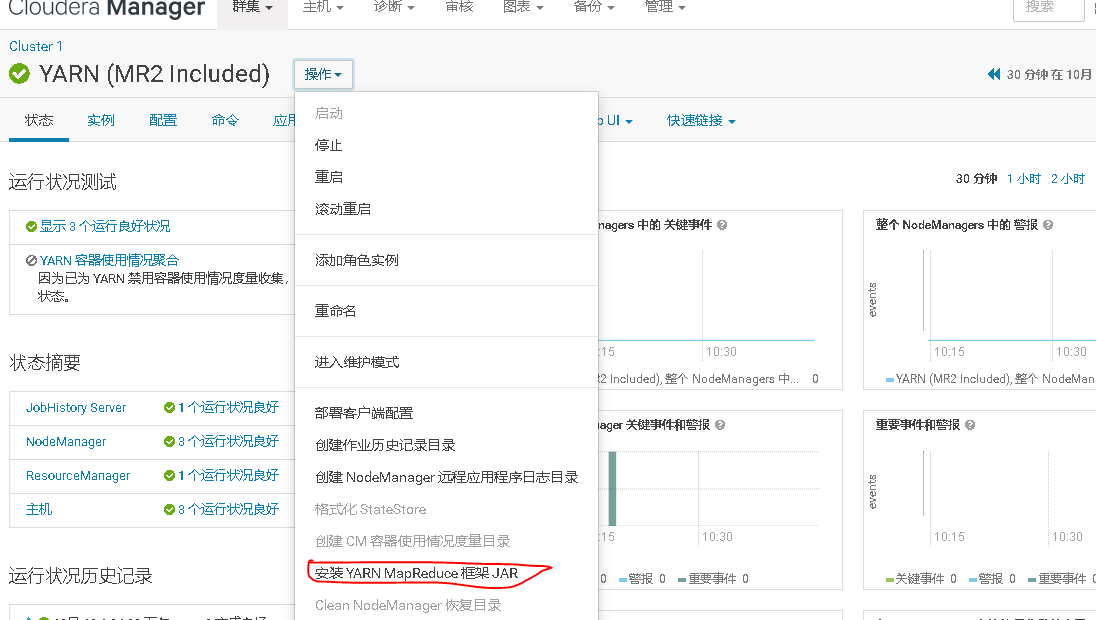

2. CDH YARN Resource Package Issue

A Hive insert into statement fails:

        > insert into fact_sale(id,sale_date,prod_name,sale_nums) values (1,'2011-08-16','PROD4',28);
    Query ID = root_20201119163832_f78a095d-2656-4da6-825f-64127e84b8b4
    Total jobs = 3
    Launching Job 1 out of 3
    Number of reduce tasks is set to 0 since there's no reduce operator
    20/11/19 16:38:32 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69
    Starting Job = job_1605767427026_0013, Tracking URL = http://hp3:8088/proxy/application_1605767427026_0013/
    Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1605767427026_0013
    Hadoop job information for Stage-1: number of mappers: 0; number of reducers: 0
    2020-11-19 16:39:12,211 Stage-1 map = 0%, reduce = 0%
    Ended Job = job_1605767427026_0013 with errors
    Error during job, obtaining debugging information...
    FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask
    MapReduce Jobs Launched:
    Stage-Stage-1: HDFS Read: 0 HDFS Write: 0 HDFS EC Read: 0 FAIL
    Total MapReduce CPU Time Spent: 0 msec

Following the hints in the output to the job's error details shows the underlying message:

The MapReduce job fails with "Download and unpack failed".

Installing the missing resource package, as shown in the screenshots below, fixes it.

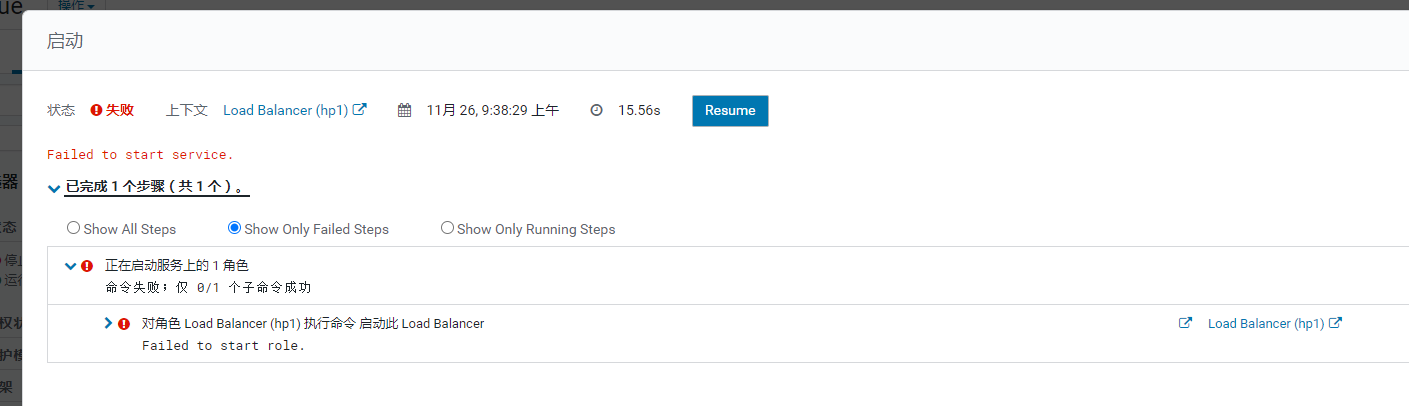

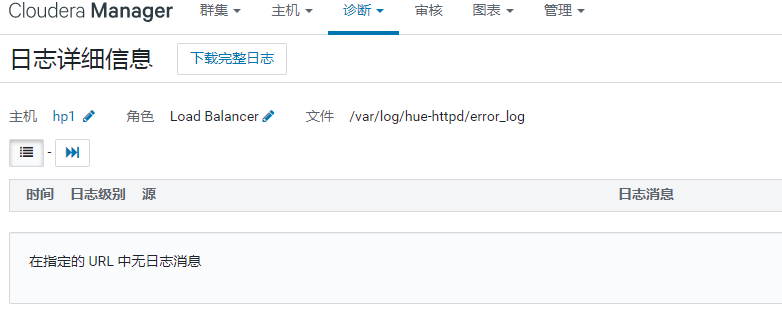

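3. Hue Load Balancer Fails to Start

The Hue Load Balancer fails to start.

The UI reports that no logs are available, and even after creating the log directory it points to, no log output appears.

Solution:

    yum -y install httpd
    yum -y install mod_ssl

Restart Hue.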

References

1. https://docs.cloudera.com/documentation/enterprise/latest/topics/installation.html

2. https://www.cnblogs.com/shwang/p/12112508.html

3. https://blog.csdn.net/gxd520/article/details/100982436

4. https://wxy0327.blog.csdn.net/article/details/51768968

5. https://blog.csdn.net/sinat_35045195/article/details/102566776

References for troubleshooting

1. https://blog.csdn.net/weixin_39478115/article/details/77483251

2. https://q.cnblogs.com/q/110190/
