K8S(14) Monitoring in Practice - Grafana Charts and Alertmanager Alerts


Contents


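1 Fancy charts with Grafana

1.1 Deploying Grafana

- 1.1.1 Prepare the image

- 1.1.2 Prepare the RBAC manifest

- 1.1.3 Prepare the Deployment manifest

- 1.1.4 Prepare the Service manifest

- 1.1.5 Prepare the Ingress manifest

- 1.1.6 DNS record

- 1.1.7 Apply the manifests

1.2 Charting with Grafana

- 1.2.1 Verify access in the browser

- 1.2.2 Install plugins inside the container

- 1.2.3 Configure the data source

- 1.2.4 Add the K8S cluster

- 1.2.5 View K8S cluster data and charts

2 Configuring Alertmanager alerts

2.1 Deploying Alertmanager

- 2.1.1 Prepare the Docker image

- 2.1.2 Prepare the ConfigMap manifest

- 2.1.3 Prepare the Deployment manifest

- 2.1.4 Prepare the Service manifest

- 2.1.5 Apply the manifests

2.2 Alerting on K8S with Alertmanager

- 2.2.1 Create the basic alert rules file

- 2.2.2 Update the configuration

- 2.2.3 Test the alerts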

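1 Fancy charts with Grafana

The Prometheus dashboard claims to offer many kinds of graphs, but they are very basic. In practice, most people use Grafana, a dedicated visualization tool, for charts.

Grafana official Docker Hub page

Grafana official GitHub repository

Grafana official website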

1.1 Deploying Grafana

1.1.1 Prepare the image

```bash
docker pull grafana/grafana:5.4.2
docker tag 6f18ddf9e552 harbor.zq.com/infra/grafana:v5.4.2
docker push harbor.zq.com/infra/grafana:v5.4.2
```
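The same pull/tag/push sequence comes up again for Alertmanager in 2.1.1, so it can be wrapped in a small helper. This is a minimal sketch. The function name `mirror_image` and the `DOCKER` override variable are hypothetical conveniences, not part of the original setup, and the helper assumes you have already run `docker login harbor.zq.com`. It tags by image name rather than by image ID (`6f18ddf9e552`), so you do not need to look the ID up first.

```bash
# Hypothetical helper: copy a public image into the private Harbor registry.
# Set DOCKER=echo to print the commands instead of running them (dry run).
DOCKER=${DOCKER:-docker}

# Usage: mirror_image SOURCE_IMAGE TARGET_IMAGE
mirror_image() {
  src=$1
  dst=$2
  $DOCKER pull "$src" || return 1        # fetch from Docker Hub
  $DOCKER tag "$src" "$dst" || return 1  # tag by name, no image ID needed
  $DOCKER push "$dst"                    # assumes a prior docker login to Harbor
}
```

For example, `mirror_image grafana/grafana:5.4.2 harbor.zq.com/infra/grafana:v5.4.2` here, and `mirror_image prom/alertmanager:v0.14.0 harbor.zq.com/infra/alertmanager:v0.14.0` in 2.1.1.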

Prepare the directory

```bash
mkdir /data/k8s-yaml/grafana
cd /data/k8s-yaml/grafana
```

1.1.2 Prepare the RBAC manifest

```bash
cat >rbac.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: grafana
rules:
- apiGroups:
  - "*"
  resources:
  - namespaces
  - deployments
  - pods
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: grafana
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: grafana
subjects:
- kind: User
  name: k8s-node
EOF
```

1.1.3 Prepare the Deployment manifest

```bash
cat >dp.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: grafana
    name: grafana
  name: grafana
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      name: grafana
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: grafana
        name: grafana
    spec:
      containers:
      - name: grafana
        image: harbor.zq.com/infra/grafana:v5.4.2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /var/lib/grafana
          name: data
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      volumes:
      - nfs:
          server: hdss7-200
          path: /data/nfs-volume/grafana
        name: data
EOF
```

Create the Grafana data directory on the NFS server (hdss7-200, as referenced in the volume definition above):

```bash
mkdir /data/nfs-volume/grafana
```

1.1.4 Prepare the Service manifest

```bash
cat >svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: infra
spec:
  ports:
  - port: 3000
    protocol: TCP
    targetPort: 3000
  selector:
    app: grafana
EOF
```

1.1.5 Prepare the Ingress manifest

```bash
cat >ingress.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana
  namespace: infra
spec:
  rules:
  - host: grafana.zq.com
    http:
      paths:
      - path: /
        backend:
          serviceName: grafana
          servicePort: 3000
EOF
```

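1.1.6 DNS record

Add an A record for grafana to the zq.com zone:

```bash
vi /var/named/zq.com.zone
# add the following record:
grafana            A    10.4.7.10
```

Restart named:

```bash
systemctl restart named
```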

1.1.7 Apply the manifests

```bash
kubectl apply -f http://k8s-yaml.zq.com/grafana/rbac.yaml
kubectl apply -f http://k8s-yaml.zq.com/grafana/dp.yaml
kubectl apply -f http://k8s-yaml.zq.com/grafana/svc.yaml
kubectl apply -f http://k8s-yaml.zq.com/grafana/ingress.yaml
```
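All four `kubectl apply` lines use the same URL pattern, and 2.1.5 repeats it for Alertmanager, so a small loop can replace them. This is only a sketch. The `apply_manifests` function and the `KUBECTL` override are hypothetical: set `KUBECTL=echo` to print the commands instead of running them. The URL layout `http://k8s-yaml.zq.com/<app>/<file>` comes from the commands above.

```bash
# Hypothetical helper: apply several manifests for one app from the
# k8s-yaml.zq.com file server, stopping at the first failure.
# Set KUBECTL=echo to print the commands instead of running them.
KUBECTL=${KUBECTL:-kubectl}

# Usage: apply_manifests APP FILE...
apply_manifests() {
  app=$1
  shift
  for f in "$@"; do
    $KUBECTL apply -f "http://k8s-yaml.zq.com/$app/$f" || return 1
  done
}
```

For example: `apply_manifests grafana rbac.yaml dp.yaml svc.yaml ingress.yaml`, or `apply_manifests alertmanager cm.yaml dp.yaml svc.yaml`. The files are applied in the order given, so the RBAC objects exist before the Deployment.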

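1.2 Charting with Grafana

1.2.1 Verify access in the browser

Open http://grafana.zq.com. The default username and password are admin/admin.

If the page loads, the installation succeeded.

After logging in, change the admin password right away (to admin123 in this walkthrough).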

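1.2.2 Install plugins inside the container

Once Grafana is up, open a shell in the Grafana container and install the plugins below. The pod name will differ in your cluster; check it with `kubectl -n infra get pod`.

```bash
kubectl -n infra exec -it grafana-d6588db94-xr4s6 -- /bin/bash
# The following commands run inside the container
grafana-cli plugins install grafana-kubernetes-app
grafana-cli plugins install grafana-clock-panel
grafana-cli plugins install grafana-piechart-panel
grafana-cli plugins install briangann-gauge-panel
grafana-cli plugins install natel-discrete-panel
```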

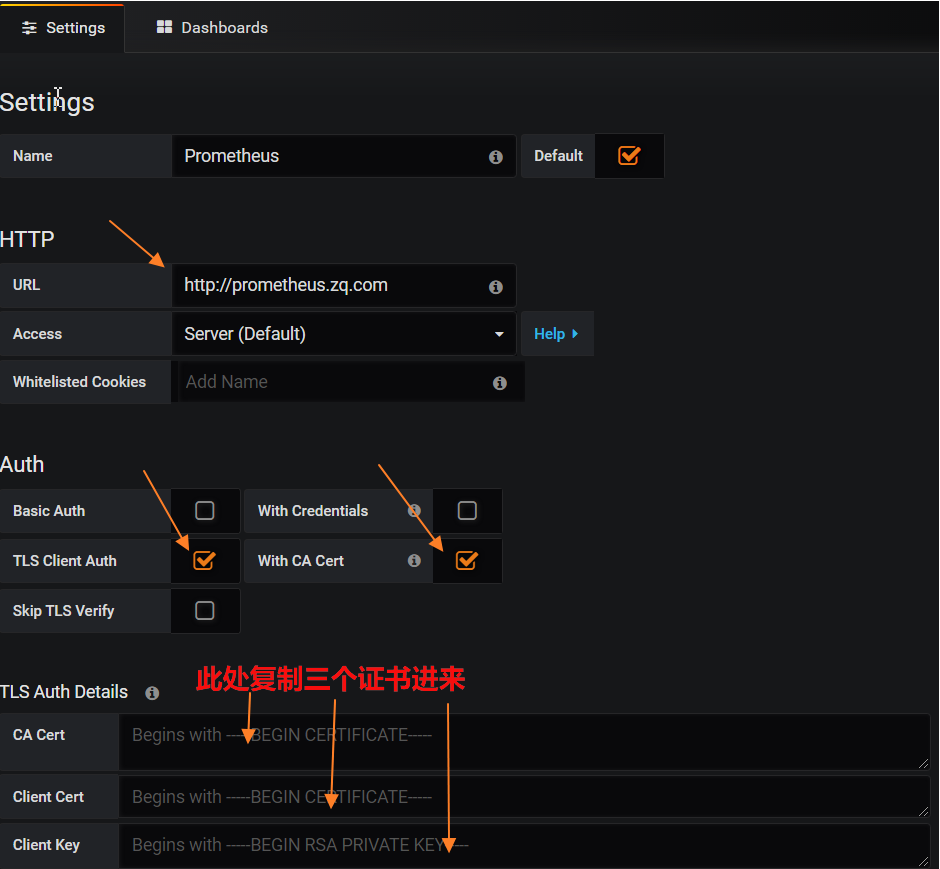
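You can also run the five `grafana-cli` commands through `kubectl exec` from outside the pod, without an interactive shell. This is a minimal sketch. `install_plugins`, `GRAFANA_PLUGINS` and the `KUBECTL` override (set `KUBECTL=echo` for a dry run) are hypothetical names, and the function expects the pod name as its argument.

```bash
# Hypothetical helper: install the Grafana plugins used in this article
# without opening an interactive shell in the pod.
# Set KUBECTL=echo to print the commands instead of running them.
KUBECTL=${KUBECTL:-kubectl}
GRAFANA_PLUGINS="grafana-kubernetes-app grafana-clock-panel grafana-piechart-panel briangann-gauge-panel natel-discrete-panel"

# Usage: install_plugins POD_NAME
install_plugins() {
  pod=$1
  for p in $GRAFANA_PLUGINS; do   # unquoted on purpose: split the list on spaces
    $KUBECTL exec -n infra "$pod" -- grafana-cli plugins install "$p" || return 1
  done
}
```

To look the pod up by its label instead of copying its name: `install_plugins "$(kubectl -n infra get pod -l app=grafana -o jsonpath='{.items[0].metadata.name}')"`. Grafana still needs the restart described in 1.2.3 before it picks up the plugins.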

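1.2.3 Configure the data source

Add a data source: click the gear (Configuration) icon on the left -> Add data source -> Prometheus.

Then restart Grafana so that it loads the plugins installed in 1.2.2. Deleting the pod is enough, because the Deployment creates a replacement:

```bash
kubectl -n infra delete pod grafana-7dd95b4c8d-nj5cx
# or, without looking up the pod name:
# kubectl -n infra delete pod -l app=grafana
```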

1.2.4 添加K8S集群信息

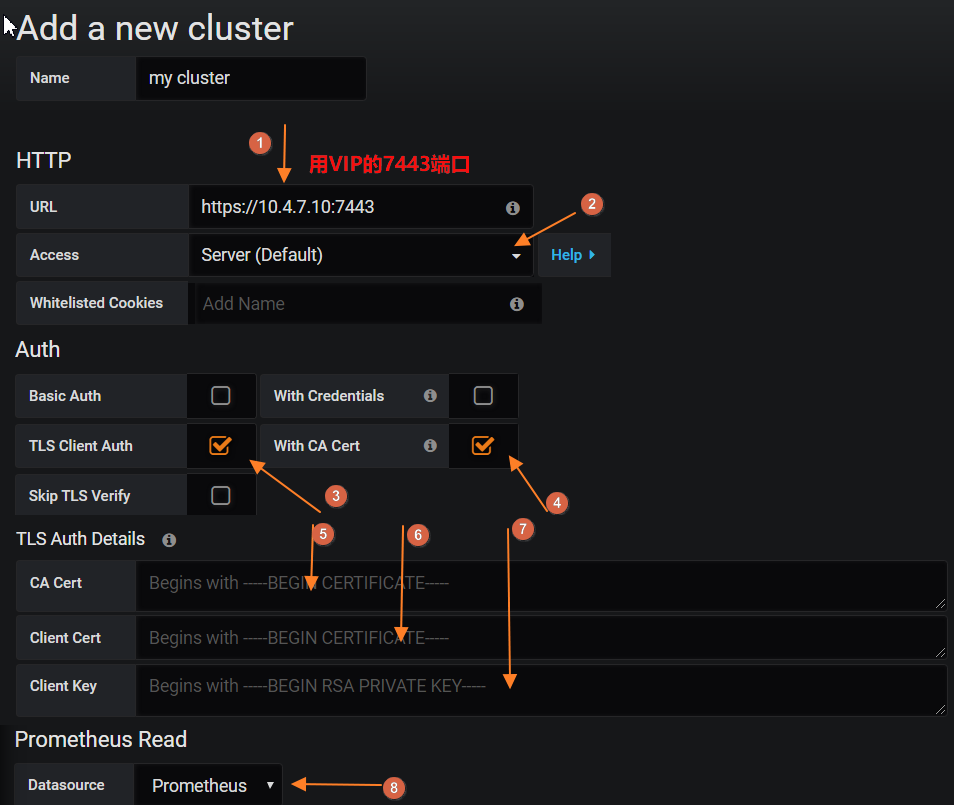

启用K8S插件,依次点击:左侧锯齿图标—>Plugins—>kubernetes—>Enable

新建cluster,依次点击:左侧K8S图标—>New Cluster

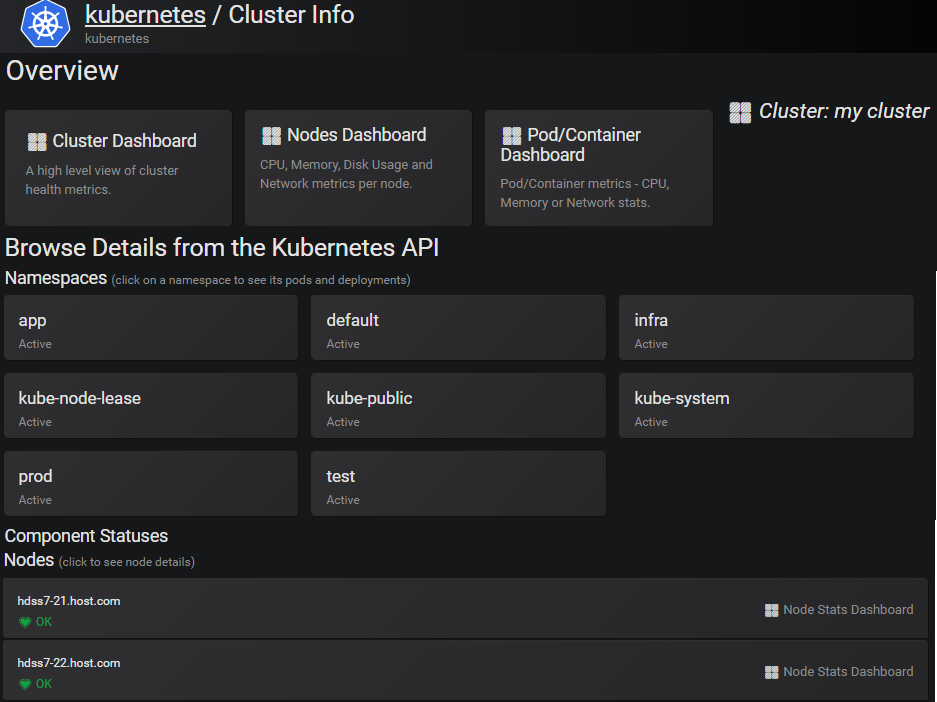

1.2.5 查看k8s集群数据和图表

添加完需要稍等几分钟,在没有取到数据之前,会报http forbidden,没关系,等一会就好。大概2-5分钟。

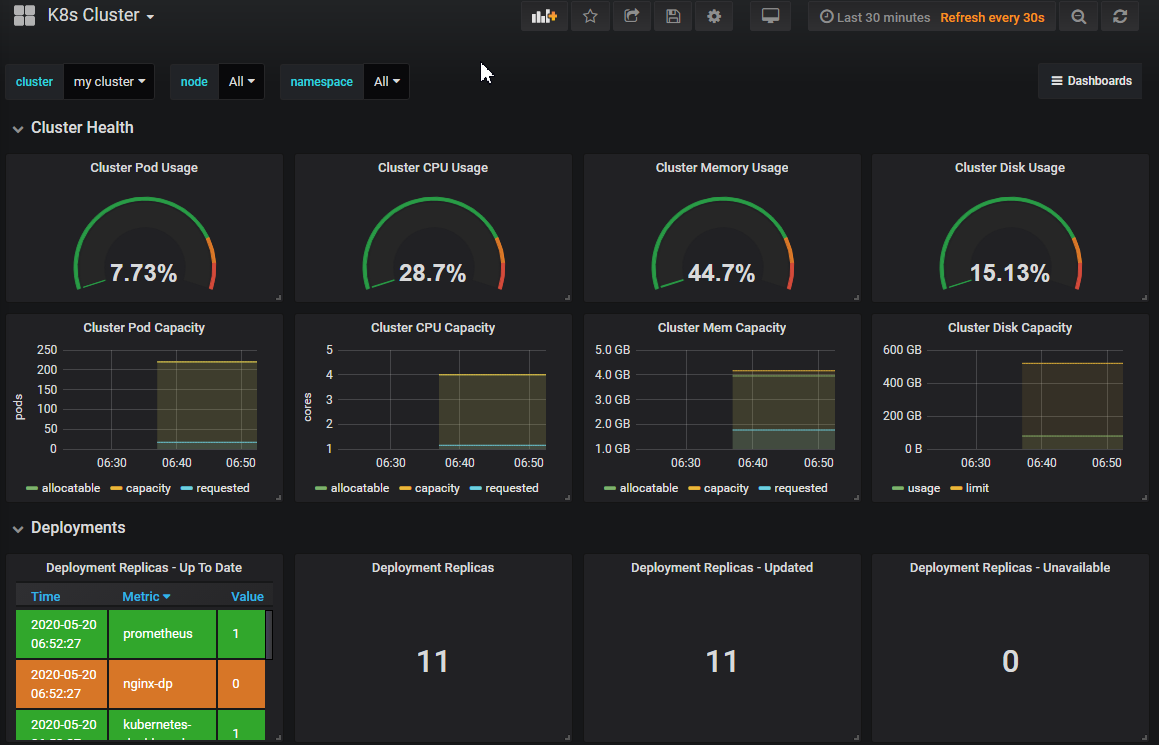

点击Cluster Dashboard

2 Configuring Alertmanager alerts

2.1 Deploying Alertmanager

2.1.1 Prepare the Docker image

```bash
docker pull docker.io/prom/alertmanager:v0.14.0
docker tag 23744b2d645c harbor.zq.com/infra/alertmanager:v0.14.0
docker push harbor.zq.com/infra/alertmanager:v0.14.0
```

Prepare the directory

```bash
mkdir /data/k8s-yaml/alertmanager
cd /data/k8s-yaml/alertmanager
```

2.1.2 准备cm资源清单

cat >cm.yaml <<'EOF'apiVersion: v1kind: ConfigMapmetadata:name: alertmanager-confignamespace: infradata:config.yml: |-global:# 在没有报警的情况下声明为已解决的时间resolve_timeout: 5m# 配置邮件发送信息smtp_smarthost: 'smtp.163.com:25'smtp_from: 'xxx@163.com'smtp_auth_username: 'xxx@163.com'smtp_auth_password: 'xxxxxx'smtp_require_tls: falsetemplates:- '/etc/alertmanager/*.tmpl'# 所有报警信息进入后的根路由,用来设置报警的分发策略route:# 这里的标签列表是接收到报警信息后的重新分组标签,例如,接收到的报警信息里面有许多具有 cluster=A 和 alertname=LatncyHigh 这样的标签的报警信息将会批量被聚合到一个分组里面group_by: ['alertname', 'cluster']# 当一个新的报警分组被创建后,需要等待至少group_wait时间来初始化通知,这种方式可以确保您能有足够的时间为同一分组来获取多个警报,然后一起触发这个报警信息。group_wait: 30s# 当第一个报警发送后,等待'group_interval'时间来发送新的一组报警信息。group_interval: 5m# 如果一个报警信息已经发送成功了,等待'repeat_interval'时间来重新发送他们repeat_interval: 5m# 默认的receiver:如果一个报警没有被一个route匹配,则发送给默认的接收器receiver: defaultreceivers:- name: 'default'email_configs:- to: 'xxxx@qq.com'send_resolved: truehtml: '{{ template "email.to.html" . }}'headers: { Subject: " {{ .CommonLabels.instance }} {{ .CommonAnnotations.summary }}" }

email.tmpl: |

{ { define “email.to.html” }}

{ {- if gt (len .Alerts.Firing) 0 -}}

{ { range .Alerts }}

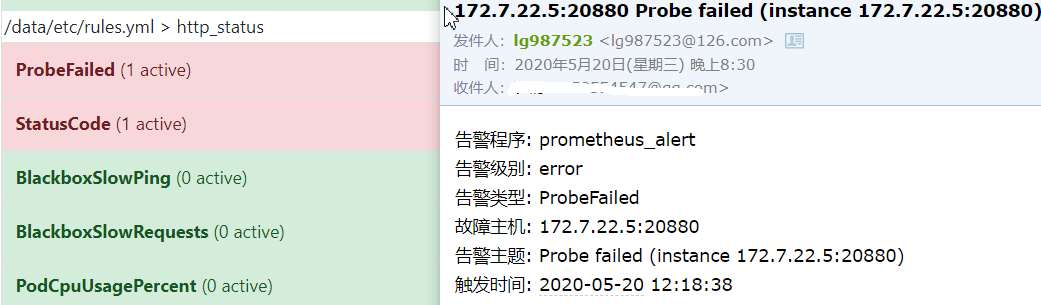

告警程序: prometheus_alert

告警级别: { { .Labels.severity }}

告警类型: { { .Labels.alertname }}

故障主机: { { .Labels.instance }}

告警主题: { { .Annotations.summary }}

触发时间: { { .StartsAt.Format “2006-01-02 15:04:05” }}

{ { end }}{ { end -}}

{{- if gt (len .Alerts.Resolved) 0 -}}{{ range .Alerts }}告警程序: prometheus_alert <br>告警级别: {{ .Labels.severity }} <br>告警类型: {{ .Labels.alertname }} <br>故障主机: {{ .Labels.instance }} <br>告警主题: {{ .Annotations.summary }} <br>触发时间: {{ .StartsAt.Format "2006-01-02 15:04:05" }} <br>恢复时间: {{ .EndsAt.Format "2006-01-02 15:04:05" }} <br>{{ end }}{{ end -}}{{- end }}

EOF
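The `group_by` comment above is easier to see with a small simulation. This is a toy sketch only. Alertmanager's real grouping also involves routes, timers and label fingerprints. The one-alert-per-line `key=value` input format and the `group_alerts` function are made up for illustration.

```bash
# Toy model of Alertmanager's group_by: ['alertname', 'cluster'] (not Alertmanager code).
# Input: one alert per line as space-separated key=value labels (made-up format).
# Output: one line per group; each group would become one notification.
group_alerts() {
  awk '{
    an = ""; cl = ""
    for (i = 1; i <= NF; i++) {
      split($i, kv, "=")
      if (kv[1] == "alertname") an = kv[2]
      if (kv[1] == "cluster") cl = kv[2]
    }
    # labels not listed in group_by (e.g. instance) do not affect the group
    count["alertname=" an ",cluster=" cl]++
  }
  END {
    for (k in count) print k " -> " count[k] " alert(s)"
  }' | sort
}
```

Two LatencyHigh alerts from cluster A and one from cluster B, on three different instances, end up in two groups. That means two emails rather than three.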

2.1.3 Prepare the Deployment manifest

```bash
cat >dp.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: infra
spec:
  replicas: 1
  selector:
    matchLabels:
      app: alertmanager
  template:
    metadata:
      labels:
        app: alertmanager
    spec:
      containers:
      - name: alertmanager
        image: harbor.zq.com/infra/alertmanager:v0.14.0
        args:
        - "--config.file=/etc/alertmanager/config.yml"
        - "--storage.path=/alertmanager"
        ports:
        - name: alertmanager
          containerPort: 9093
        volumeMounts:
        - name: alertmanager-cm
          mountPath: /etc/alertmanager
      volumes:
      - name: alertmanager-cm
        configMap:
          name: alertmanager-config
      imagePullSecrets:
      - name: harbor
EOF
```

2.1.4 Prepare the Service manifest

```bash
cat >svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: infra
spec:
  selector:
    app: alertmanager
  ports:
  - port: 80
    targetPort: 9093
EOF
```

2.1.5 Apply the manifests

```bash
kubectl apply -f http://k8s-yaml.zq.com/alertmanager/cm.yaml
kubectl apply -f http://k8s-yaml.zq.com/alertmanager/dp.yaml
kubectl apply -f http://k8s-yaml.zq.com/alertmanager/svc.yaml
```

2.2 Alerting on K8S with Alertmanager

2.2.1 Create the basic alert rules file

```bash
cat >/data/nfs-volume/prometheus/etc/rules.yml <<'EOF'
groups:
- name: hostStatsAlert
  rules:
  - alert: hostCpuUsageAlert
    expr: sum(avg without (cpu)(irate(node_cpu{mode!='idle'}[5m]))) by (instance) > 0.85
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "{{ $labels.instance }} CPU usage above 85% (current value: {{ $value }})"
  - alert: hostMemUsageAlert
    expr: (node_memory_MemTotal - node_memory_MemAvailable)/node_memory_MemTotal > 0.85
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "{{ $labels.instance }} MEM usage above 85% (current value: {{ $value }})"
  - alert: OutOfInodes
    expr: node_filesystem_files_free{fstype="overlay",mountpoint ="/"} / node_filesystem_files{fstype="overlay",mountpoint ="/"} * 100 < 10
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Out of inodes (instance {{ $labels.instance }})"
      description: "Disk is almost running out of available inodes (< 10% left) (current value: {{ $value }})"
  - alert: OutOfDiskSpace
    expr: node_filesystem_free{fstype="overlay",mountpoint ="/rootfs"} / node_filesystem_size{fstype="overlay",mountpoint ="/rootfs"} * 100 < 10
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Out of disk space (instance {{ $labels.instance }})"
      description: "Disk is almost full (< 10% left) (current value: {{ $value }})"
  - alert: UnusualNetworkThroughputIn
    expr: sum by (instance) (irate(node_network_receive_bytes[2m])) / 1024 / 1024 > 100
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual network throughput in (instance {{ $labels.instance }})"
      description: "Host network interfaces are probably receiving too much data (> 100 MB/s) (current value: {{ $value }})"
  - alert: UnusualNetworkThroughputOut
    expr: sum by (instance) (irate(node_network_transmit_bytes[2m])) / 1024 / 1024 > 100
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual network throughput out (instance {{ $labels.instance }})"
      description: "Host network interfaces are probably sending too much data (> 100 MB/s) (current value: {{ $value }})"
  - alert: UnusualDiskReadRate
    expr: sum by (instance) (irate(node_disk_bytes_read[2m])) / 1024 / 1024 > 50
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual disk read rate (instance {{ $labels.instance }})"
      description: "Disk is probably reading too much data (> 50 MB/s) (current value: {{ $value }})"
  - alert: UnusualDiskWriteRate
    expr: sum by (instance) (irate(node_disk_bytes_written[2m])) / 1024 / 1024 > 50
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual disk write rate (instance {{ $labels.instance }})"
      description: "Disk is probably writing too much data (> 50 MB/s) (current value: {{ $value }})"
  - alert: UnusualDiskReadLatency
    expr: rate(node_disk_read_time_ms[1m]) / rate(node_disk_reads_completed[1m]) > 100
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual disk read latency (instance {{ $labels.instance }})"
      description: "Disk latency is growing (read operations > 100ms) (current value: {{ $value }})"
  - alert: UnusualDiskWriteLatency
    expr: rate(node_disk_write_time_ms[1m]) / rate(node_disk_writes_completed[1m]) > 100
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Unusual disk write latency (instance {{ $labels.instance }})"
      description: "Disk latency is growing (write operations > 100ms) (current value: {{ $value }})"
- name: http_status
  rules:
  - alert: ProbeFailed
    expr: probe_success == 0
    for: 1m
    labels:
      severity: error
    annotations:
      summary: "Probe failed (instance {{ $labels.instance }})"
      description: "Probe failed (current value: {{ $value }})"
  - alert: StatusCode
    expr: probe_http_status_code <= 199 OR probe_http_status_code >= 400
    for: 1m
    labels:
      severity: error
    annotations:
      summary: "Status Code (instance {{ $labels.instance }})"
      description: "HTTP status code is not 200-399 (current value: {{ $value }})"
  - alert: SslCertificateWillExpireSoon
    expr: probe_ssl_earliest_cert_expiry - time() < 86400 * 30
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "SSL certificate will expire soon (instance {{ $labels.instance }})"
      description: "SSL certificate expires in less than 30 days (current value: {{ $value }})"
  - alert: SslCertificateHasExpired
    expr: probe_ssl_earliest_cert_expiry - time() <= 0
    for: 5m
    labels:
      severity: error
    annotations:
      summary: "SSL certificate has expired (instance {{ $labels.instance }})"
      description: "SSL certificate has expired already (current value: {{ $value }})"
  - alert: BlackboxSlowPing
    expr: probe_icmp_duration_seconds > 2
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Blackbox slow ping (instance {{ $labels.instance }})"
      description: "Blackbox ping took more than 2s (current value: {{ $value }})"
  - alert: BlackboxSlowRequests
    expr: probe_http_duration_seconds > 2
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Blackbox slow requests (instance {{ $labels.instance }})"
      description: "Blackbox request took more than 2s (current value: {{ $value }})"
  - alert: PodCpuUsagePercent
    expr: sum(sum(label_replace(irate(container_cpu_usage_seconds_total[1m]),"pod","$1","container_label_io_kubernetes_pod_name", "(.*)"))by(pod) / on(pod) group_right kube_pod_container_resource_limits_cpu_cores *100 )by(container,namespace,node,pod,severity) > 80
    for: 5m
    labels:
      severity: warning
    annotations:
      summary: "Pod cpu usage percent has exceeded 80% (current value: {{ $value }}%)"
EOF
```
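Some of these thresholds are easier to read once you spell out the arithmetic. The sketch below reproduces, for made-up sample numbers, what three of the rules compute:

- hostMemUsageAlert divides used memory by total memory.
- hostCpuUsageAlert turns increases of the per-mode CPU-seconds counters into a busy fraction.
- SslCertificateWillExpireSoon compares the remaining certificate lifetime in seconds with `86400 * 30` (30 days).

The CPU and memory expressions produce fractions between 0 and 1, so `0.85` means 85%. The function names are hypothetical, and these are local approximations of the PromQL, not Prometheus itself.

```bash
# Hypothetical helpers that redo the rule arithmetic locally (not PromQL).

# hostMemUsageAlert: (MemTotal - MemAvailable) / MemTotal, computed from a file
# in /proc/meminfo format. The ratio has no unit, so kB vs bytes does not matter.
mem_usage_ratio() {
  awk '/^MemTotal:/ {t = $2} /^MemAvailable:/ {a = $2}
       END {printf "%.3f\n", (t - a) / t}' "$1"
}

# hostCpuUsageAlert: node_cpu counters are cumulative CPU seconds per mode.
# The summed increase of the non-idle modes over an interval, divided by the
# interval, is the busy fraction of one CPU (0..1); the rule then averages
# this over all CPUs of the instance.
# Usage: cpu_busy_fraction INTERVAL_SECONDS DELTA_SECONDS...
cpu_busy_fraction() {
  interval=$1
  shift
  awk -v dt="$interval" 'BEGIN {
    s = 0
    for (i = 1; i < ARGC; i++) s += ARGV[i]
    printf "%.2f\n", s / dt
  }' "$@"
}

# SslCertificateWillExpireSoon fires while the remaining lifetime is below this.
SSL_WARN_SECONDS=$((86400 * 30))

# Usage: ssl_days_left EXPIRY_EPOCH NOW_EPOCH   (whole days, truncated)
ssl_days_left() {
  echo $(( ($1 - $2) / 86400 ))
}
```

Two worked examples:

- A host with 8000000 kB total and 1000000 kB available gives `0.875`, which is above the 0.85 threshold.
- `cpu_busy_fraction 10 7 2.5` (7 s of user time and 2.5 s of system time in a 10 s window) gives `0.95`.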

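2.2.2 Update the configuration

Append the following to the Prometheus configuration file:

```bash
cat >>/data/nfs-volume/prometheus/etc/prometheus.yml <<'EOF'
alerting:
  alertmanagers:
  - static_configs:
    - targets: ["alertmanager"]
rule_files:
- "/data/etc/rules.yml"
EOF
```

`/data/etc/rules.yml` is the rules file from 2.2.1 as seen from inside the Prometheus pod. This assumes the NFS directory /data/nfs-volume/prometheus is mounted at /data there. The target `alertmanager` is the Service from 2.1.4. Because no port is given, Prometheus uses port 80, which the Service forwards to 9093. The short name only resolves if Prometheus runs in the same namespace (infra).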
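`cat >>` always appends. If you run the step above twice, prometheus.yml ends up with two `alerting:` keys and two `rule_files:` keys, and Prometheus will most likely refuse to load it. A guarded version could look like the sketch below. `add_alerting_config` is a hypothetical name, and the check is a plain `grep` for the two top-level keys, so it only covers this simple case.

```bash
# Hypothetical guard: append the alerting/rule_files block only once.
# Usage: add_alerting_config PATH_TO_PROMETHEUS_YML
add_alerting_config() {
  cfg=$1
  # skip if either top-level key is already present
  if grep -Eq '^(alerting|rule_files):' "$cfg"; then
    echo "alerting or rule_files already present in $cfg, skipping"
    return 0
  fi
  cat >>"$cfg" <<'EOF'
alerting:
  alertmanagers:
  - static_configs:
    - targets: ["alertmanager"]
rule_files:
- "/data/etc/rules.yml"
EOF
}
```

Usage: `add_alerting_config /data/nfs-volume/prometheus/etc/prometheus.yml`. You can safely run it again.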

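Reload the configuration. The `/-/reload` endpoint only works if Prometheus was started with `--web.enable-lifecycle`:

```bash
curl -X POST http://prometheus.zq.com/-/reload
```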

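These are the alert rules now loaded into Prometheus. You can find them on the Alerts page of the Prometheus web UI.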

2.2.3 测试告警

把test命名空间里的dubbo-demo-service给停掉

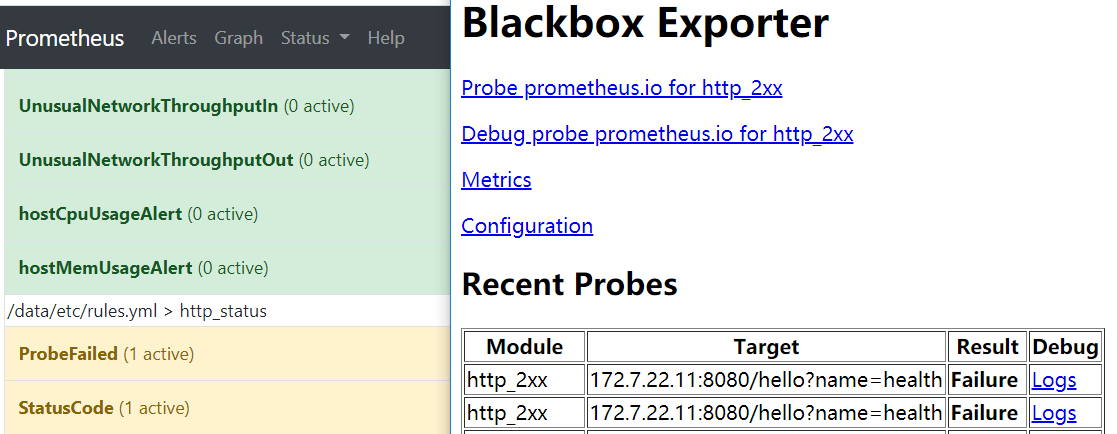

blackbox里信息已报错,alert里面项目变黄了

等到alert中项目变为红色的时候就开会发邮件告警
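The colour change follows from each rule's `for:` clause. The sketch below is a simplified model of how Prometheus moves an alert between states. The function name and the epoch-second arguments are illustrative, not Prometheus code. The rule firing here is presumably ProbeFailed, which has `for: 1m`. Its entry should therefore stay yellow for about a minute, plus up to one evaluation interval, before it turns red. Alertmanager then waits `group_wait` (30s in the ConfigMap above) before it sends the first email.

```bash
# Simplified model of a Prometheus alerting rule's state (not Prometheus code):
#   inactive: the expression currently returns nothing       -> green
#   pending:  true, but for less than the rule's `for`       -> yellow
#   firing:   true for at least `for`; sent to Alertmanager  -> red
# Usage: alert_state ACTIVE_SINCE NOW FOR_SECONDS
#   ACTIVE_SINCE is the epoch second the expression became true, or "-" if it is false.
alert_state() {
  if [ "$1" = "-" ]; then
    echo inactive
  elif [ $(( $2 - $1 )) -ge "$3" ]; then
    echo firing
  else
    echo pending
  fi
}
```

With `for: 1m` (60 s), an alert that became active at t=100 is reported as `pending` at t=130 and as `firing` from t=160 on.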

To customize the alert rules and the alert content, you will need to learn some PromQL and edit the configuration files yourself.
