ConcurrentHashMap Source Code Analysis

Introduction

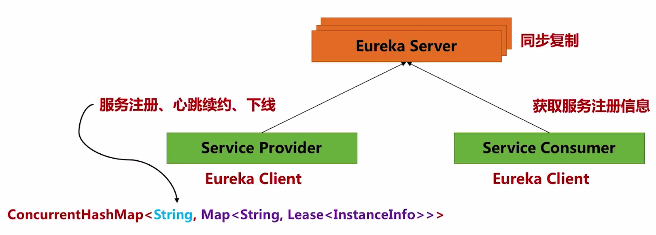

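ConcurrentHashMap is a more efficient thread-safe counterpart of HashMap. Unlike Hashtable, which simply marks every method as synchronized, it splits its internal array into multiple Segments, each of which is itself a specialized hash table. The point of this design is finer-grained locking. In addition, thanks to a careful implementation, many read operations can run without taking a lock: get() never locks, and size() locks only as a fallback.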

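Source walkthrough (based on jdk1.7.0_76)

Use of the Unsafe class

Below is the internal comment in the ConcurrentHashMap class. It explains why Segments exist (lock striping) and why Unsafe is used (volatile reads, and lazy writes via putOrderedObject):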

    /*
     * The basic strategy is to subdivide the table among Segments,
     * each of which itself is a concurrently readable hash table. To
     * reduce footprint, all but one segments are constructed only
     * when first needed (see ensureSegment). To maintain visibility
     * in the presence of lazy construction, accesses to segments as
     * well as elements of segment's table must use volatile access,
     * which is done via Unsafe within methods segmentAt etc
     * below. These provide the functionality of AtomicReferenceArrays
     * but reduce the levels of indirection. Additionally,
     * volatile-writes of table elements and entry "next" fields
     * within locked operations use the cheaper "lazySet" forms of
     * writes (via putOrderedObject) because these writes are always
     * followed by lock releases that maintain sequential consistency
     * of table updates.
     */

ConcurrentHashMap uses four methods of the Unsafe class to read and write the segments[] and table[] arrays:

getObject(): plain read

getObjectVolatile(): volatile read

putOrderedObject(): ordered (lazy) write

compareAndSwapObject(): CAS write with volatile-write semantics

    /**
     * Gets the jth element of given segment array (if nonnull) with
     * volatile element access semantics via Unsafe. (The null check
     * can trigger harmlessly only during deserialization.) Note:
     * because each element of segments array is set only once (using
     * fully ordered writes), some performance-sensitive methods rely
     * on this method only as a recheck upon null reads.
     */
    @SuppressWarnings("unchecked")
    static final <K,V> Segment<K,V> segmentAt(Segment<K,V>[] ss, int j) {
        long u = (j << SSHIFT) + SBASE;
        return ss == null ? null :
            (Segment<K,V>) UNSAFE.getObjectVolatile(ss, u);
    }

    /**
     * Gets the ith element of given table (if nonnull) with volatile
     * read semantics. Note: This is manually integrated into a few
     * performance-sensitive methods to reduce call overhead.
     */
    @SuppressWarnings("unchecked")
    static final <K,V> HashEntry<K,V> entryAt(HashEntry<K,V>[] tab, int i) {
        return (tab == null) ? null :
            (HashEntry<K,V>) UNSAFE.getObjectVolatile
            (tab, ((long)i << TSHIFT) + TBASE);
    }

    /**
     * Sets the ith element of given table, with volatile write
     * semantics. (See above about use of putOrderedObject.)
     */
    static final <K,V> void setEntryAt(HashEntry<K,V>[] tab, int i,
                                       HashEntry<K,V> e) {
        UNSAFE.putOrderedObject(tab, ((long)i << TSHIFT) + TBASE, e);
    }

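Unsafe.putOrderedObject() is a native method. It sets the object-typed field located at the given offset inside obj to the specified value. It is an ordered, lazy version of putObjectVolatile() that does not guarantee the store becomes visible to other threads immediately. It is only useful when the field is declared volatile (or is an array element); see the method's description in the sun.misc.Unsafe source. This cheaper "lazySet" form of write is acceptable here because, as the ConcurrentHashMap comment above says, it is always called while holding a lock, so the ordering of writes across threads is not a concern: the lock release that follows publishes the update.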
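The class comment quoted earlier notes that these Unsafe calls provide the functionality of AtomicReferenceArray with fewer levels of indirection. As a rough illustration only, the three non-plain access modes can be sketched with the public AtomicReferenceArray API. The class `ArrayAccessSketch` and its method names are hypothetical and are not part of the JDK source:

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical sketch: maps the Unsafe access modes used by
// ConcurrentHashMap onto the public AtomicReferenceArray API.
final class ArrayAccessSketch {
    private final AtomicReferenceArray<String> slots = new AtomicReferenceArray<>(4);

    // Counterpart of getObjectVolatile(): volatile read of one element.
    String read(int i) {
        return slots.get(i);
    }

    // Counterpart of putOrderedObject(): ordered ("lazy") write.
    // Meant to be called while holding a lock; the later unlock publishes it.
    void orderedWrite(int i, String v) {
        slots.lazySet(i, v);
    }

    // Counterpart of compareAndSwapObject(): install v only if the slot is still null.
    boolean installIfAbsent(int i, String v) {
        return slots.compareAndSet(i, null, v);
    }

    public static void main(String[] args) {
        ArrayAccessSketch s = new ArrayAccessSketch();
        s.orderedWrite(0, "a");
        System.out.println(s.read(0));
        System.out.println(s.installIfAbsent(1, "b"));
        System.out.println(s.installIfAbsent(1, "c"));
    }
}
```

The real implementation goes through Unsafe with raw offsets precisely to avoid the extra wrapper object that AtomicReferenceArray introduces.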

HashEntry definition

Its setNext() method is called from Segment.put(), Segment.rehash() and Segment.remove():

    /**
     * ConcurrentHashMap list entry. Note that this is never exported
     * out as a user-visible Map.Entry.
     */
    static final class HashEntry<K,V> {
        final int hash;
        final K key;
        volatile V value;
        volatile HashEntry<K,V> next;

        HashEntry(int hash, K key, V value, HashEntry<K,V> next) {
            this.hash = hash;
            this.key = key;
            this.value = value;
            this.next = next;
        }

        /**
         * Sets next field with volatile write semantics. (See above
         * about use of putOrderedObject.)
         */
        final void setNext(HashEntry<K,V> n) {
            UNSAFE.putOrderedObject(this, nextOffset, n);
        }

        // Unsafe mechanics
        static final sun.misc.Unsafe UNSAFE;
        static final long nextOffset;
        static {
            try {
                UNSAFE = sun.misc.Unsafe.getUnsafe();
                Class k = HashEntry.class;
                nextOffset = UNSAFE.objectFieldOffset
                    (k.getDeclaredField("next"));
            } catch (Exception e) {
                throw new Error(e);
            }
        }
    }

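Segment definition

Segment extends ReentrantLock. Structurally it is just a hash table.

Note that the count field in Segment is not declared volatile. All writes to count happen under the segment lock, while reads are plain reads that follow a volatile read of the segment reference (segmentAt() in isEmpty() and size()).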

    /**
     * Segments are specialized versions of hash tables. This
     * subclasses from ReentrantLock opportunistically, just to
     * simplify some locking and avoid separate construction.
     */
    static final class Segment<K,V> extends ReentrantLock implements Serializable {
        /*
         * Segments maintain a table of entry lists that are always
         * kept in a consistent state, so can be read (via volatile
         * reads of segments and tables) without locking. This
         * requires replicating nodes when necessary during table
         * resizing, so the old lists can be traversed by readers
         * still using old version of table.
         *
         * This class defines only mutative methods requiring locking.
         * Except as noted, the methods of this class perform the
         * per-segment versions of ConcurrentHashMap methods. (Other
         * methods are integrated directly into ConcurrentHashMap
         * methods.) These mutative methods use a form of controlled
         * spinning on contention via methods scanAndLock and
         * scanAndLockForPut. These intersperse tryLocks with
         * traversals to locate nodes. The main benefit is to absorb
         * cache misses (which are very common for hash tables) while
         * obtaining locks so that traversal is faster once
         * acquired. We do not actually use the found nodes since they
         * must be re-acquired under lock anyway to ensure sequential
         * consistency of updates (and in any case may be undetectably
         * stale), but they will normally be much faster to re-locate.
         * Also, scanAndLockForPut speculatively creates a fresh node
         * to use in put if no node is found.
         */

        private static final long serialVersionUID = 2249069246763182397L;

        /**
         * The maximum number of times to tryLock in a prescan before
         * possibly blocking on acquire in preparation for a locked
         * segment operation. On multiprocessors, using a bounded
         * number of retries maintains cache acquired while locating
         * nodes.
         */
        static final int MAX_SCAN_RETRIES =
            Runtime.getRuntime().availableProcessors() > 1 ? 64 : 1;

        /**
         * The per-segment table. Elements are accessed via
         * entryAt/setEntryAt providing volatile semantics.
         */
        transient volatile HashEntry<K,V>[] table;

        /**
         * The number of elements. Accessed only either within locks
         * or among other volatile reads that maintain visibility.
         */
        transient int count;

        /**
         * The total number of mutative operations in this segment.
         * Even though this may overflows 32 bits, it provides
         * sufficient accuracy for stability checks in CHM isEmpty()
         * and size() methods. Accessed only either within locks or
         * among other volatile reads that maintain visibility.
         */
        transient int modCount;

        /**
         * The table is rehashed when its size exceeds this threshold.
         * (The value of this field is always <tt>(int)(capacity *
         * loadFactor)</tt>.)
         */
        transient int threshold;

        /**
         * The load factor for the hash table. Even though this value
         * is same for all segments, it is replicated to avoid needing
         * links to outer object.
         * @serial
         */
        final float loadFactor;

        Segment(float lf, int threshold, HashEntry<K,V>[] tab) {
            this.loadFactor = lf;
            this.threshold = threshold;
            this.table = tab;
        }
        ......
    }

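The elements of the segments[] array are initialized lazily. The ConcurrentHashMap constructor creates only segments[0] and leaves every other element null; the remaining Segment objects are created on demand in ensureSegment():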

    public ConcurrentHashMap(int initialCapacity,
                             float loadFactor, int concurrencyLevel) {
        ......
        // create segments and segments[0]
        Segment<K,V> s0 =
            new Segment<K,V>(loadFactor, (int)(cap * loadFactor),
                             (HashEntry<K,V>[])new HashEntry[cap]);
        Segment<K,V>[] ss = (Segment<K,V>[])new Segment[ssize];
        UNSAFE.putOrderedObject(ss, SBASE, s0); // ordered write of segments[0]
        this.segments = ss;
    }

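ensureSegment() does the same job as segmentAt() but adds lazy initialization of the array element: when it finds the element is null, it creates a Segment and installs it into the array with a CAS.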

    /**
     * Returns the segment for the given index, creating it and
     * recording in segment table (via CAS) if not already present.
     *
     * @param k the index
     * @return the segment
     */
    @SuppressWarnings("unchecked")
    private Segment<K,V> ensureSegment(int k) {
        final Segment<K,V>[] ss = this.segments;
        long u = (k << SSHIFT) + SBASE; // raw offset
        Segment<K,V> seg;
        if ((seg = (Segment<K,V>)UNSAFE.getObjectVolatile(ss, u)) == null) {
            Segment<K,V> proto = ss[0]; // use segment 0 as prototype
            int cap = proto.table.length;
            float lf = proto.loadFactor;
            int threshold = (int)(cap * lf);
            HashEntry<K,V>[] tab = (HashEntry<K,V>[])new HashEntry[cap];
            if ((seg = (Segment<K,V>)UNSAFE.getObjectVolatile(ss, u))
                == null) { // recheck
                Segment<K,V> s = new Segment<K,V>(lf, threshold, tab);
                while ((seg = (Segment<K,V>)UNSAFE.getObjectVolatile(ss, u))
                       == null) {
                    if (UNSAFE.compareAndSwapObject(ss, u, null, seg = s))
                        break;
                }
            }
        }
        return seg;
    }
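The lazy-creation pattern in ensureSegment() (volatile read, build a candidate, then CAS it in and accept whichever instance won) can be sketched without Unsafe. This is a minimal illustration assuming AtomicReferenceArray as a stand-in for the raw segments[] array; `LazySlots` and `Slot` are hypothetical names:

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical sketch of ensureSegment()-style lazy slot creation.
final class LazySlots {
    static final class Slot {
        final int capacity;
        Slot(int capacity) { this.capacity = capacity; }
    }

    private final AtomicReferenceArray<Slot> slots;

    LazySlots(int n, int capacity) {
        slots = new AtomicReferenceArray<>(n);
        // Only slot 0 is created eagerly; it later serves as the prototype.
        slots.set(0, new Slot(capacity));
    }

    Slot ensureSlot(int k) {
        Slot s = slots.get(k);                 // volatile read
        if (s == null) {
            Slot proto = slots.get(0);         // copy settings from slot 0
            Slot fresh = new Slot(proto.capacity);
            // Loop until some thread (possibly another one) has installed a slot.
            while ((s = slots.get(k)) == null) {
                if (slots.compareAndSet(k, null, s = fresh))
                    break;
            }
        }
        return s;
    }

    public static void main(String[] args) {
        LazySlots ls = new LazySlots(4, 8);
        Slot a = ls.ensureSlot(2);
        System.out.println(a == ls.ensureSlot(2)); // same instance on later calls
    }
}
```

If two threads race, one CAS fails and that thread simply discards its candidate and returns the winner's object, so every caller sees the same slot.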

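The get() method

get() uses volatile reads to walk segments[] -> table[] -> the HashEntry chain. No explicit lock is taken anywhere along the way; relying only on Unsafe's volatile reads avoids blocking and improves performance: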

    /**
     * Returns the value to which the specified key is mapped,
     * or {@code null} if this map contains no mapping for the key.
     *
     * <p>More formally, if this map contains a mapping from a key
     * {@code k} to a value {@code v} such that {@code key.equals(k)},
     * then this method returns {@code v}; otherwise it returns
     * {@code null}. (There can be at most one such mapping.)
     *
     * @throws NullPointerException if the specified key is null
     */
    public V get(Object key) {
        Segment<K,V> s; // manually integrate access methods to reduce overhead
        HashEntry<K,V>[] tab;
        int h = hash(key);
        long u = (((h >>> segmentShift) & segmentMask) << SSHIFT) + SBASE;
        if ((s = (Segment<K,V>)UNSAFE.getObjectVolatile(segments, u)) != null &&
            (tab = s.table) != null) {
            for (HashEntry<K,V> e = (HashEntry<K,V>) UNSAFE.getObjectVolatile
                     (tab, ((long)(((tab.length - 1) & h)) << TSHIFT) + TBASE);
                 e != null; e = e.next) {
                K k;
                if ((k = e.key) == key || (e.hash == h && key.equals(k)))
                    return e.value;
            }
        }
        return null;
    }
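The two index computations in get() are easier to follow with concrete numbers. The sketch below assumes the default concurrency level of 16, which gives segmentShift = 28 and segmentMask = 15, and it takes the already-spread hash h as input rather than reproducing hash(key). `IndexSketch` is a hypothetical helper, not JDK code:

```java
// Hypothetical sketch of how get() picks a segment and then a bucket.
final class IndexSketch {
    // Values for the default concurrencyLevel of 16 (assumption of this sketch).
    static final int SEGMENT_SHIFT = 28;
    static final int SEGMENT_MASK = 15;

    // The high bits of the hash select the segment.
    static int segmentIndex(int h) {
        return (h >>> SEGMENT_SHIFT) & SEGMENT_MASK;
    }

    // The low bits select the bucket; tableLength must be a power of two.
    static int bucketIndex(int h, int tableLength) {
        return (tableLength - 1) & h;
    }

    public static void main(String[] args) {
        int h = 0x12345678;
        System.out.println(segmentIndex(h));     // top 4 bits: 0x1
        System.out.println(bucketIndex(h, 16));  // low 4 bits: 0x8
    }
}
```

Using the high bits for the segment and the low bits for the bucket keeps the two choices independent of each other.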

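The isEmpty() and size() methods

To avoid locking, isEmpty() checks Segment.modCount in two passes: the first pass adds up the modCounts, the second subtracts them again, and a nonzero remainder means the map was modified in between: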

    public boolean isEmpty() {
        /*
         * Sum per-segment modCounts to avoid mis-reporting when
         * elements are concurrently added and removed in one segment
         * while checking another, in which case the table was never
         * actually empty at any point. (The sum ensures accuracy up
         * through at least 1<<31 per-segment modifications before
         * recheck.) Methods size() and containsValue() use similar
         * constructions for stability checks.
         */
        long sum = 0L;
        final Segment<K,V>[] segments = this.segments;
        for (int j = 0; j < segments.length; ++j) {
            Segment<K,V> seg = segmentAt(segments, j);
            if (seg != null) {
                if (seg.count != 0)
                    return false;
                sum += seg.modCount;
            }
        }
        if (sum != 0L) { // recheck unless no modifications
            for (int j = 0; j < segments.length; ++j) {
                Segment<K,V> seg = segmentAt(segments, j);
                if (seg != null) {
                    if (seg.count != 0)
                        return false;
                    sum -= seg.modCount;
                }
            }
            if (sum != 0L)
                return false;
        }
        return true;
    }

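size() also tries to avoid locking. It compares the modCount sums of consecutive unlocked passes and locks only after those comparisons keep failing (RETRIES_BEFORE_LOCK = 2, so there are at most three unlocked passes):

These operations need a global scan of every table[]. The straightforward approach would be to acquire the locks of all Segment instances, do the lookup or computation, release the locks, and return the result. To reduce the performance cost of locking as much as possible, Doug Lea does not lock up front. Instead the method first traverses and computes without locks; if the map was not modified between two consecutive passes (that is, the sum of modCount over all Segment instances is the same both times), table[] can be considered unchanged during the computation and the result is correct. Otherwise it locks all Segment instances in order, computes, unlocks, and returns.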

    public int size() {
        // Try a few times to get accurate count. On failure due to
        // continuous async changes in table, resort to locking.
        final Segment<K,V>[] segments = this.segments;
        int size;
        boolean overflow; // true if size overflows 32 bits
        long sum;         // sum of modCounts
        long last = 0L;   // previous sum
        int retries = -1; // first iteration isn't retry
        try {
            for (;;) {
                if (retries++ == RETRIES_BEFORE_LOCK) { //RETRIES_BEFORE_LOCK = 2
                    for (int j = 0; j < segments.length; ++j)
                        ensureSegment(j).lock(); // force creation
                }
                sum = 0L;
                size = 0;
                overflow = false;
                for (int j = 0; j < segments.length; ++j) {
                    Segment<K,V> seg = segmentAt(segments, j);
                    if (seg != null) {
                        sum += seg.modCount;
                        int c = seg.count;
                        if (c < 0 || (size += c) < 0)
                            overflow = true;
                    }
                }
                if (sum == last)
                    break;
                last = sum;
            }
        } finally {
            if (retries > RETRIES_BEFORE_LOCK) {
                for (int j = 0; j < segments.length; ++j)
                    segmentAt(segments, j).unlock();
            }
        }
        return overflow ? Integer.MAX_VALUE : size;
    }
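The optimistic "scan, compare modCount sums, lock only as a last resort" idea can be isolated in a much smaller structure. The following is a simplified sketch, not the JDK code: `StripedCounter` and `Stripe` are hypothetical, the fields are declared volatile to keep visibility simple (the real Segment uses plain fields read after a volatile read), and overflow handling is omitted:

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch of size()'s optimistic retry loop.
final class StripedCounter {
    static final int RETRIES_BEFORE_LOCK = 2;

    static final class Stripe extends ReentrantLock {
        volatile int count;    // number of elements in this stripe
        volatile int modCount; // number of modifications to this stripe

        void add() {
            lock();
            try {
                count = count + 1;
                modCount = modCount + 1;
            } finally {
                unlock();
            }
        }
    }

    private final Stripe[] stripes;

    StripedCounter(int n) {
        stripes = new Stripe[n];
        for (int i = 0; i < n; i++)
            stripes[i] = new Stripe();
    }

    void add(int stripe) {
        stripes[stripe].add();
    }

    int size() {
        long last = 0L;   // modCount sum seen by the previous pass
        int retries = -1; // the first pass is not a retry
        int size;
        try {
            for (;;) {
                if (retries++ == RETRIES_BEFORE_LOCK) {
                    for (Stripe s : stripes)
                        s.lock(); // give up on optimism: lock everything
                }
                long sum = 0L;
                size = 0;
                for (Stripe s : stripes) {
                    sum += s.modCount;
                    size += s.count;
                }
                if (sum == last)
                    break; // nothing changed since the previous pass
                last = sum;
            }
        } finally {
            if (retries > RETRIES_BEFORE_LOCK) {
                for (Stripe s : stripes)
                    s.unlock();
            }
        }
        return size;
    }

    public static void main(String[] args) {
        StripedCounter c = new StripedCounter(4);
        c.add(0);
        c.add(1);
        c.add(1);
        System.out.println(c.size());
    }
}
```

With no concurrent writers the loop exits after at most two passes, so the locking branch is never reached in the common case.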

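The put() method in Segment

put() acquires the lock, falling back to scanAndLockForPut() when the initial tryLock() fails. If the key already exists it updates HashEntry.value; otherwise it creates a new HashEntry and inserts it at the head of the chain. It also rehashes (grows the table) when the threshold is exceeded.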

    final V put(K key, int hash, V value, boolean onlyIfAbsent) {
        HashEntry<K,V> node = tryLock() ? null :
            scanAndLockForPut(key, hash, value);
        V oldValue;
        try {
            HashEntry<K,V>[] tab = table;
            int index = (tab.length - 1) & hash;
            HashEntry<K,V> first = entryAt(tab, index);
            for (HashEntry<K,V> e = first;;) {
                if (e != null) {
                    K k;
                    if ((k = e.key) == key ||
                        (e.hash == hash && key.equals(k))) {
                        oldValue = e.value;
                        if (!onlyIfAbsent) {
                            e.value = value;
                            ++modCount;
                        }
                        break;
                    }
                    e = e.next;
                }
                else {
                    if (node != null)
                        node.setNext(first);
                    else
                        node = new HashEntry<K,V>(hash, key, value, first);
                    int c = count + 1;
                    if (c > threshold && tab.length < MAXIMUM_CAPACITY)
                        rehash(node);
                    else
                        setEntryAt(tab, index, node);
                    ++modCount;
                    count = c;
                    oldValue = null;
                    break;
                }
            }
        } finally {
            unlock();
        }
        return oldValue;
    }
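Stripped of spinning, rehashing and Unsafe, the core of put() is "lock, search the chain, update in place or prepend a new node". The sketch below shows just that part under simplifying assumptions: `MiniSegment` is a hypothetical class with a fixed table of 4 buckets, it uses key.hashCode() directly, and its get() takes the lock, unlike the lock-free get() of the real map:

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical, heavily simplified segment: fixed size, every access under the lock.
final class MiniSegment<K, V> extends ReentrantLock {
    static final class Entry<K, V> {
        final int hash;
        final K key;
        V value;
        final Entry<K, V> next;

        Entry(int hash, K key, V value, Entry<K, V> next) {
            this.hash = hash;
            this.key = key;
            this.value = value;
            this.next = next;
        }
    }

    @SuppressWarnings("unchecked")
    private final Entry<K, V>[] table = (Entry<K, V>[]) new Entry[4];
    private int count;

    V put(K key, V value, boolean onlyIfAbsent) {
        int hash = key.hashCode();
        lock();
        try {
            int index = (table.length - 1) & hash;
            Entry<K, V> first = table[index];
            for (Entry<K, V> e = first; e != null; e = e.next) {
                if (e.hash == hash && key.equals(e.key)) {
                    V old = e.value;
                    if (!onlyIfAbsent)
                        e.value = value; // existing key: overwrite in place
                    return old;
                }
            }
            // Key not found: the new node becomes the head of the chain.
            table[index] = new Entry<>(hash, key, value, first);
            count++;
            return null;
        } finally {
            unlock();
        }
    }

    V get(K key) {
        int hash = key.hashCode();
        lock(); // simplification: the real get() does not lock
        try {
            for (Entry<K, V> e = table[(table.length - 1) & hash]; e != null; e = e.next) {
                if (e.hash == hash && key.equals(e.key))
                    return e.value;
            }
            return null;
        } finally {
            unlock();
        }
    }

    int size() {
        lock();
        try {
            return count;
        } finally {
            unlock();
        }
    }
}
```

Inserting at the head means existing nodes never have their next pointer changed by an insert, which is what lets concurrent readers in the real implementation keep traversing an old chain safely.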

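The scanAndLockForPut() and scanAndLock() methods in Segment

Their main purpose is to acquire the lock; the lookup and node creation are done along the way, with no guarantee that the lookup completes (the lock may be acquired before the traversal has finished).

The while loop spins on ReentrantLock.tryLock() and calls the blocking ReentrantLock.lock() only after the retry limit is exceeded.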

    /**
     * Scans for a node containing given key while trying to
     * acquire lock, creating and returning one if not found. Upon
     * return, guarantees that lock is held. UNlike in most
     * methods, calls to method equals are not screened: Since
     * traversal speed doesn't matter, we might as well help warm
     * up the associated code and accesses as well.
     *
     * @return a new node if key not found, else null
     */
    private HashEntry<K,V> scanAndLockForPut(K key, int hash, V value) {
        HashEntry<K,V> first = entryForHash(this, hash);
        HashEntry<K,V> e = first;
        HashEntry<K,V> node = null;
        int retries = -1; // negative while locating node
        while (!tryLock()) {
            HashEntry<K,V> f; // to recheck first below
            if (retries < 0) {
                if (e == null) {
                    if (node == null) // speculatively create node
                        node = new HashEntry<K,V>(hash, key, value, null);
                    retries = 0;
                }
                else if (key.equals(e.key))
                    retries = 0;
                else
                    e = e.next;
            }
            else if (++retries > MAX_SCAN_RETRIES) {
                lock();
                break;
            }
            else if ((retries & 1) == 0 &&
                     (f = entryForHash(this, hash)) != first) {
                e = first = f; // re-traverse if entry changed
                retries = -1;
            }
        }
        return node;
    }

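scanAndLock() in Segment is similar to scanAndLockForPut() but does not create a new HashEntry. Its main purpose is likewise to acquire the lock; rather than spin in an empty while loop, it does something useful in the meantime and walks the chain looking for the key. If it detects that the bucket head has changed, it starts the traversal over: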

    /**
     * Scans for a node containing the given key while trying to
     * acquire lock for a remove or replace operation. Upon
     * return, guarantees that lock is held. Note that we must
     * lock even if the key is not found, to ensure sequential
     * consistency of updates.
     */
    private void scanAndLock(Object key, int hash) {
        // similar to but simpler than scanAndLockForPut
        HashEntry<K,V> first = entryForHash(this, hash);
        HashEntry<K,V> e = first;
        int retries = -1;
        while (!tryLock()) {
            HashEntry<K,V> f;
            if (retries < 0) {
                if (e == null || key.equals(e.key))
                    retries = 0;
                else
                    e = e.next;
            }
            else if (++retries > MAX_SCAN_RETRIES) {
                lock();
                break;
            }
            else if ((retries & 1) == 0 &&
                     (f = entryForHash(this, hash)) != first) {
                // bucket head changed: reset retries and re-traverse
                e = first = f;
                retries = -1;
            }
        }
    }
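The control flow shared by both methods is "tryLock() in a bounded loop, do useful work between attempts, then fall back to a blocking lock()". Here is a minimal sketch of that pattern alone, with the chain traversal replaced by a caller-supplied Runnable. `SpinThenBlock`, `acquire` and `warmUp` are hypothetical names:

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch of the bounded tryLock spin used by scanAndLock().
final class SpinThenBlock {
    static final int MAX_SCAN_RETRIES =
        Runtime.getRuntime().availableProcessors() > 1 ? 64 : 1;

    // Acquires the lock and returns how many tryLock() attempts failed first.
    static int acquire(ReentrantLock lock, Runnable warmUp) {
        int retries = 0;
        while (!lock.tryLock()) {
            warmUp.run(); // stand-in for walking the chain to warm the cache
            if (++retries > MAX_SCAN_RETRIES) {
                lock.lock(); // spin budget used up: block until available
                break;
            }
        }
        return retries;
    }

    public static void main(String[] args) {
        ReentrantLock lock = new ReentrantLock();
        int failed = acquire(lock, () -> { });
        try {
            System.out.println("failed attempts: " + failed);
        } finally {
            lock.unlock();
        }
    }
}
```

Either exit path leaves the lock held by the caller, which matches the "upon return, guarantees that lock is held" contract in the Javadoc above.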

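The remove() method in Segment

remove() first uses entryAt() to locate the bucket and obtain the chain head, then walks the chain. Once it finds the HashEntry to delete, it directly rewrites the next pointer of that node's predecessor pred (via the setNext() method of HashEntry shown earlier), or replaces the bucket head when there is no predecessor. Segment's put() and rehash() modify next pointers in the same way.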

    /**
     * Remove; match on key only if value null, else match both.
     */
    final V remove(Object key, int hash, Object value) {
        if (!tryLock())
            scanAndLock(key, hash);
        V oldValue = null;
        try {
            HashEntry<K,V>[] tab = table;
            int index = (tab.length - 1) & hash;
            HashEntry<K,V> e = entryAt(tab, index);
            HashEntry<K,V> pred = null;
            while (e != null) {
                K k;
                HashEntry<K,V> next = e.next;
                if ((k = e.key) == key ||
                    (e.hash == hash && key.equals(k))) {
                    V v = e.value;
                    if (value == null || value == v || value.equals(v)) {
                        if (pred == null)
                            setEntryAt(tab, index, next);
                        else
                            pred.setNext(next);
                        ++modCount;
                        --count;
                        oldValue = v;
                    }
                    break;
                }
                pred = e;
                e = next;
            }
        } finally {
            unlock();
        }
        return oldValue;
    }

The rehash() method in Segment

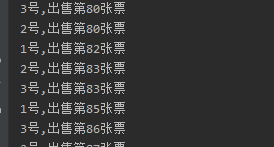

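Note that segments[] in ConcurrentHashMap is never rehashed; only the table[] inside a Segment grows. Also, rehash() is called only from put(), so it always runs with the segment lock held.

Two things are special about how rehash() grows the table:

1. For a chain with a single node, that node is simply assigned to the corresponding slot of the new array.

2. For a chain with several nodes, the chain is first traversed to find node p (lastRun in the code), the first node from which all following nodes map to the same slot of the new table. Only the nodes before p are re-created. At that point the new chain and the old chain coexist and meet at p, so another thread traversing the old chain at the same time still works correctly.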

    /**
     * Doubles size of table and repacks entries, also adding the
     * given node to new table
     */
    @SuppressWarnings("unchecked")
    private void rehash(HashEntry<K,V> node) {
        /*
         * Reclassify nodes in each list to new table. Because we
         * are using power-of-two expansion, the elements from
         * each bin must either stay at same index, or move with a
         * power of two offset. We eliminate unnecessary node
         * creation by catching cases where old nodes can be
         * reused because their next fields won't change.
         * Statistically, at the default threshold, only about
         * one-sixth of them need cloning when a table
         * doubles. The nodes they replace will be garbage
         * collectable as soon as they are no longer referenced by
         * any reader thread that may be in the midst of
         * concurrently traversing table. Entry accesses use plain
         * array indexing because they are followed by volatile
         * table write.
         */
        HashEntry<K,V>[] oldTable = table;
        int oldCapacity = oldTable.length;
        int newCapacity = oldCapacity << 1;
        threshold = (int)(newCapacity * loadFactor);
        HashEntry<K,V>[] newTable =
            (HashEntry<K,V>[]) new HashEntry[newCapacity];
        int sizeMask = newCapacity - 1;
        for (int i = 0; i < oldCapacity ; i++) {
            HashEntry<K,V> e = oldTable[i];
            if (e != null) {
                HashEntry<K,V> next = e.next;
                int idx = e.hash & sizeMask;
                if (next == null)   //  Single node on list
                    newTable[idx] = e;
                else { // Reuse consecutive sequence at same slot
                    HashEntry<K,V> lastRun = e;
                    int lastIdx = idx;
                    for (HashEntry<K,V> last = next;
                         last != null;
                         last = last.next) {
                        int k = last.hash & sizeMask;
                        if (k != lastIdx) {
                            lastIdx = k;
                            lastRun = last;
                        }
                    }
                    newTable[lastIdx] = lastRun;
                    // Clone remaining nodes
                    for (HashEntry<K,V> p = e; p != lastRun; p = p.next) {
                        V v = p.value;
                        int h = p.hash;
                        int k = h & sizeMask;
                        HashEntry<K,V> n = newTable[k];
                        newTable[k] = new HashEntry<K,V>(h, p.key, v, n);
                    }
                }
            }
        }
        int nodeIndex = node.hash & sizeMask; // add the new node
        node.setNext(newTable[nodeIndex]);
        newTable[nodeIndex] = node;
        table = newTable;
    }
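The lastRun trick is easiest to see on a single chain. The sketch below splits one old bucket into a table of doubled size, reusing the trailing run and cloning only the nodes in front of it. `LastRunSketch` and its `Node` type are hypothetical; `Node.next` is final here for simplicity, whereas the real HashEntry.next is volatile:

```java
// Hypothetical sketch of rehash()'s lastRun reuse on one chain.
final class LastRunSketch {
    static final class Node {
        final int hash;
        final Node next;

        Node(int hash, Node next) {
            this.hash = hash;
            this.next = next;
        }
    }

    // Distributes the chain starting at head into a new table of the given
    // power-of-two capacity. The old chain is left untouched.
    static Node[] split(Node head, int newCapacity) {
        Node[] newTable = new Node[newCapacity];
        if (head == null)
            return newTable;
        int sizeMask = newCapacity - 1;

        // Find the start of the trailing run whose nodes all share one new index.
        Node lastRun = head;
        int lastIdx = head.hash & sizeMask;
        for (Node p = head.next; p != null; p = p.next) {
            int k = p.hash & sizeMask;
            if (k != lastIdx) {
                lastIdx = k;
                lastRun = p;
            }
        }
        newTable[lastIdx] = lastRun; // reuse the whole tail as-is

        // Clone only the nodes in front of lastRun, prepending each to its new bucket.
        for (Node p = head; p != lastRun; p = p.next) {
            int k = p.hash & sizeMask;
            newTable[k] = new Node(p.hash, newTable[k]);
        }
        return newTable;
    }

    public static void main(String[] args) {
        // Old capacity 4: hashes 1, 5, 9 and 17 all live in bucket 1.
        Node n17 = new Node(17, null);
        Node n9 = new Node(9, n17);
        Node n5 = new Node(5, n9);
        Node n1 = new Node(1, n5);
        Node[] t = split(n1, 8);
        System.out.println(t[1].next == n9); // clone of 1 links to the reused tail
        System.out.println(t[5].hash);       // 5 moved to bucket 5 as a clone
    }
}
```

Because the old nodes are never mutated, a reader that is still walking the old chain sees a consistent list for as long as it holds a reference to it.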

References

(1) JDK 1.6

探索 ConcurrentHashMap 高并发性的实现机制

聊聊并发(四)——深入分析ConcurrentHashMap

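Feature summary:

- Lock striping reduces lock contention.

- Write operations (put, remove, clear) are specially designed so that reads rarely need a lock.

Segment declares its count field as volatile int count; the volatile keyword guarantees that updates to count are visible across threads.

get() takes no read lock. A reader locks and re-reads only when it sees a null value field (a null value means a reordering has occurred, so the read must be repeated under the lock; for a detailed explanation of why the value can be null, see "为什么ConcurrentHashMap是弱一致的").

- Unlike HashMap, neither keys nor values may be null.

The high concurrency of ConcurrentHashMap comes mainly from three things:

Lock striping allows a deeper level of shared access among multiple threads.

The immutability of HashEntry objects reduces the need for reader threads to lock while traversing a chain.

Memory visibility of reads and writes across threads is coordinated through writes to and reads of the same volatile variable.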

(2) JDK 1.7

ConcurrentHashMap源码分析整理

Java Core系列之ConcurrentHashMap实现(JDK 1.7)

Java多线程系列—“JUC集合”04之 ConcurrentHashMap
