Face Detection (Part 2)

2. Cascaded CNN Face Detection

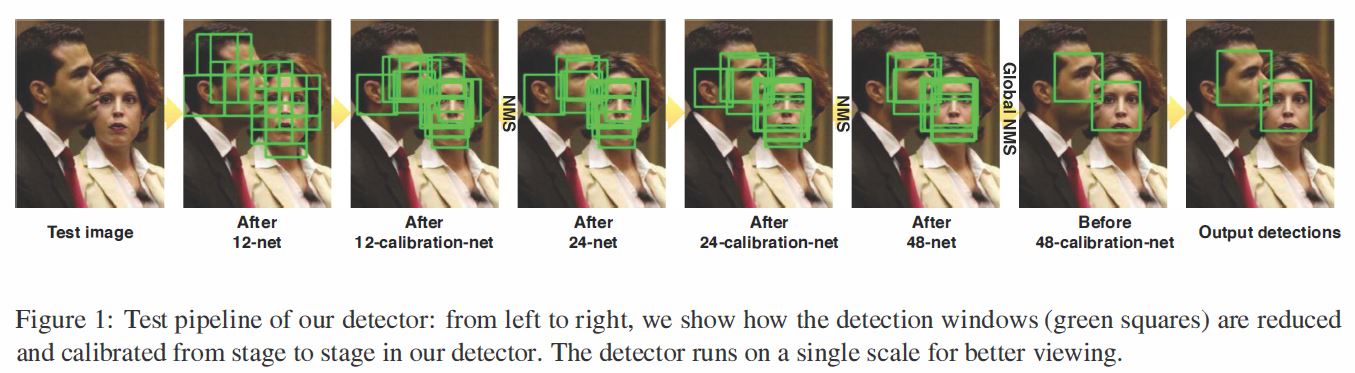

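This approach detects faces with a cascade of networks, following the CVPR 2015 paper A Convolutional Neural Network Cascade for Face Detection. The paper cascades three face classifiers (12-net, 24-net and 48-net) and pairs them with three bounding-box calibration networks (12-calibration-net, 24-calibration-net and 48-calibration-net) that localize the face box more precisely. The smallest face it can detect is 12x12 pixels. Compared with face detection using a single CNN, the cascade is much faster and yields more accurate face boxes, while precision and recall remain high; it achieved the best score on the FDDB dataset at the time.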

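Paper download link

Open-source code on GitHub

Open-source models on GitHub

The author's code is clean and easy to follow, with concise comments. The basic idea of the cascaded CNN face detector is as follows: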

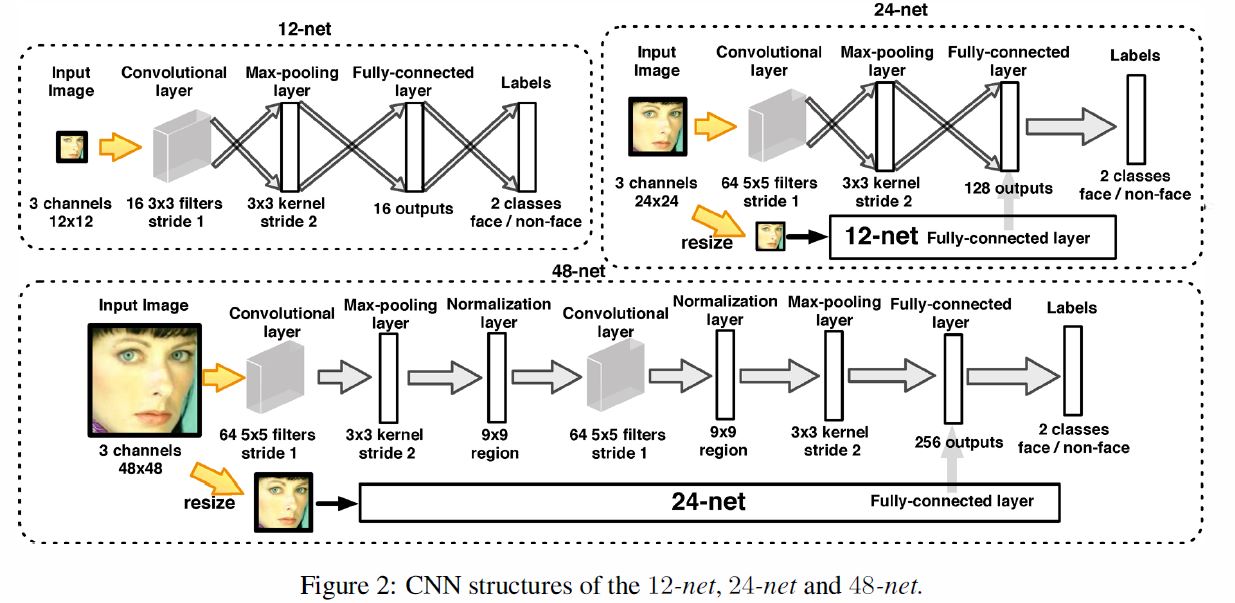

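(1) 12-net: First, train a small face/non-face binary classifier that takes 12 x 12 images as input. Replacing its final fully connected layer with a convolutional layer gives a fully convolutional network, 12-full-conv-net, which accepts input images of any size; each value in its output map is the probability that the corresponding receptive-field region is a face. At detection time, suppose the smallest face to detect is K x K pixels, for example 40 x 40. Scale the image to 12/K of its original size and feed the whole image to the trained 12 x 12 fully convolutional network to obtain the score map. Discarding positions whose score falls below a threshold removes the vast majority of uninteresting regions and leaves a limited number of candidate boxes.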

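(2) 12-calibration-net: Train a calibration network that takes 12 x 12 images as input and corrects the boundaries of the face boxes produced by 12-net in the previous step. It is in essence a 45-class classifier that decides whether the face in the current window is shifted left or right, shifted up or down, or too large or too small. The 45 classes are the combinations (3 x 3 x 5) of:

3 translations in the x direction: -0.17, 0, 0.17

3 translations in the y direction: -0.17, 0, 0.17

5 scaling factors: 0.83, 0.91, 1.0, 1.1, 1.21

At detection time, all face candidates from 12-net are fed to 12-calibration-net, and each candidate box is adjusted according to its classification result.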

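(3) localNMS: Apply local non-maximum suppression to the candidates calibrated by 12-calibration-net: among overlapping boxes, discard the lower-scoring ones and keep the higher-scoring ones.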

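(4) 24-net: Train a face classifier that takes 24 x 24 images as input. At test time, the candidates that survive the previous localNMS step are resized to 24 x 24 and fed to 24-net, which decides whether each one is a face; candidates scoring above a threshold are kept.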

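(5) 24-calibration-net: Likewise, train a boundary calibration classifier with 24 x 24 input to correct the positions of the candidates kept by 24-net. Each candidate region is resized to 24 x 24; everything else matches 12-calibration-net.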

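(6) localNMS: Apply local non-maximum suppression to the candidates calibrated by 24-calibration-net, discarding overlapping lower-scoring boxes and keeping the higher-scoring ones.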

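(7) 48-net: Train a more accurate face/non-face classifier with 48 x 48 input. At test time, the candidates that survive the previous localNMS step are resized to 48 x 48 and fed to 48-net, and the high-scoring candidates are kept.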

(8) globalNMS: Apply global non-maximum suppression to all candidates from 48-net, keeping the best box for each face.

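(9) 48-calibration-net: Train a boundary calibration classifier with 48 x 48 input. At test time, the best face boxes from globalNMS are resized to 48 x 48 and fed to it to calibrate the box boundaries.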
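The calibration steps (2), (5) and (9) share the same 45-class scheme, which can be sketched roughly as follows. The names `decode_label` and `calibrate` are hypothetical; the label layout (scale index first, then x offset, then y offset) is an assumption read off the calibration code later in this post, and clamping to the image borders is left out for brevity.

```python
SCALES = [0.83, 0.91, 1.0, 1.10, 1.21]   # 5 scaling factors
OFFSETS = [-0.17, 0.0, 0.17]             # 3 translations, used for both x and y


def decode_label(label):
    """Split a class index 0..44 into (scale, x_offset, y_offset)."""
    s = SCALES[label // 9]            # 9 consecutive labels share one scale
    x = OFFSETS[(label % 9) // 3]     # within a scale, 3 consecutive labels share one x offset
    y = OFFSETS[label % 3]            # y offset cycles fastest
    return s, x, y


def calibrate(box, labels):
    """Average the patterns named by `labels` and adjust box = [x1, y1, x2, y2].

    `labels` stands for the classes whose probability exceeded a threshold.
    """
    if not labels:
        return list(box)              # nothing confident enough: keep the box unchanged
    patterns = [decode_label(label) for label in labels]
    n = float(len(patterns))
    s = sum(p[0] for p in patterns) / n
    dx = sum(p[1] for p in patterns) / n
    dy = sum(p[2] for p in patterns) / n
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    new_x1 = int(x1 - w * dx / s)
    new_y1 = int(y1 - h * dy / s)
    return [new_x1, new_y1, int(new_x1 + w / s), int(new_y1 + h / s)]


print(calibrate([100, 100, 140, 140], [44]))   # label 44 = (1.21, 0.17, 0.17)
```

Averaging over every class above the threshold, rather than taking only the top class, makes the adjustment less sensitive to a single noisy prediction.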

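So the face/non-face classifiers 12-net, 24-net and 48-net and the boundary calibration networks 12-calibration-net, 24-calibration-net and 48-calibration-net must first be trained separately. At test time, an image pyramid provides multi-scale detection: any input image passes through 12-net (12-full-conv-net) -> 12-calibration-net -> localNMS -> 24-net -> 24-calibration-net -> localNMS -> 48-net -> globalNMS -> 48-calibration-net, and the resulting face boxes are the detection output.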
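To make the pyramid arithmetic concrete, here is a small sketch of which image sizes the 12-net would scan and how a cell of its score map maps back to the original image. The function names are hypothetical; the pyramid factor 1.414 and the stride of 2 between neighbouring windows are assumptions taken from the defaults and the `2*current_x*current_scale` mapping in the detection code later in this post.

```python
def pyramid_levels(height, width, min_face_size, scale_factor=1.414, net_size=12):
    """List (scale, height, width) for every pyramid level the 12-net scans."""
    levels = []
    scale = float(min_face_size) / net_size       # a min_face_size face becomes 12 px
    h, w = int(height / scale), int(width / scale)
    while h > net_size and w > net_size:
        levels.append((scale, h, w))
        h, w = int(h / scale_factor), int(w / scale_factor)   # shrink for the next level
        scale *= scale_factor
    return levels


def window_in_original(x, y, scale, net_size=12, stride=2):
    """Map score-map cell (x, y) at a given pyramid scale to a box in the original image."""
    x1 = int(stride * x * scale)
    y1 = int(stride * y * scale)
    x2 = int(stride * x * scale + net_size * scale)
    y2 = int(stride * y * scale + net_size * scale)
    return [x1, y1, x2, y2]


for scale, h, w in pyramid_levels(480, 640, min_face_size=48):
    # each level detects faces of roughly 12 * scale pixels in the original image
    print("scale %.2f: %d x %d image, faces of about %d px" % (scale, h, w, round(12 * scale)))
```

Each successive level is smaller, so the fixed 12 x 12 window covers a progressively larger face in the original image.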

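localNMS and globalNMS (NMS stands for non-maximum suppression) differ mainly in the overlap measure used to filter overlapping candidates: the former uses only IoU (Intersection over Union), the ratio of the intersection to the union, while the latter also uses IoM (Intersection over Min-area), the ratio of the intersection to the smaller of the two areas. In the face_detection_functions.py listing later in this post, localNMS also compares only boxes from the same pyramid scale, whereas globalNMS compares boxes across all scales.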
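The difference matters most when a small box sits inside a large one: IoU is then low, while IoM is high. The sketch below illustrates this with a greedy NMS that takes the duplicate rule as a parameter. The helper names are hypothetical, and the thresholds (IoU 0.3 for the local rule; IoU 0.2 or IoM 0.3 for the global rule) are assumed from the listing later in this post.

```python
def overlaps(a, b):
    """Return (IoU, IoM) for boxes given as [x1, y1, x2, y2, score, scale]."""
    iw = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = float(iw * ih)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    smaller = min(area_a, area_b)
    iou = inter / union if union > 0 else 0.0
    iom = inter / smaller if smaller > 0 else 0.0
    return iou, iom


def nms(boxes, is_duplicate):
    """Greedy NMS: visit boxes by descending score, drop any that duplicates a kept box."""
    kept = []
    for box in sorted(boxes, key=lambda b: b[4], reverse=True):
        if not any(is_duplicate(k, box) for k in kept):
            kept.append(box)
    return kept


def local_rule(a, b, threshold=0.3):
    # Duplicate only if both boxes come from the same pyramid scale and IoU is high.
    return a[5] == b[5] and overlaps(a, b)[0] >= threshold


def global_rule(a, b, iou_threshold=0.2, iom_threshold=0.3):
    # Any scale: high IoU, or one box lying mostly inside the other (high IoM).
    iou, iom = overlaps(a, b)
    return iou >= iou_threshold or iom >= iom_threshold


big = [0, 0, 100, 100, 0.99, 8.0]
small = [20, 20, 40, 40, 0.90, 2.0]            # nested inside `big`, different scale
print(overlaps(big, small))                    # IoU is small, IoM is 1.0
print(len(nms([big, small], local_rule)))      # local rule keeps both boxes
print(len(nms([big, small], global_rule)))     # global rule keeps only `big`
```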

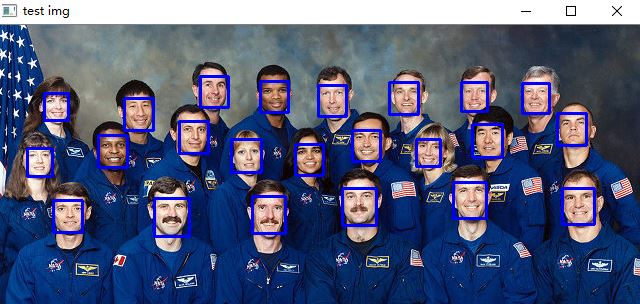

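I did not train the networks myself and used the author's pretrained models directly. The script face_cascade_fullconv_single_crop_single_image.py in the CNN_face_detection-master\face_detection folder tests a single image; the code is shown below. Note that the minimum face size to detect, min_face_size, can be set as needed, and it directly determines detection speed: if the faces to detect are fairly large, for example 128x128 or more, detection can run in real time.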
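A rough way to see why min_face_size dominates the running time is to count the windows the 12-net evaluates on the first pyramid level. This is only a back-of-the-envelope sketch: the function name is hypothetical, and the window count assumes a 12 x 12 window sliding with an effective stride of 2, which approximates rather than reproduces the real network's output size.

```python
def windows_at_first_level(height, width, min_face_size, net_size=12, stride=2):
    """Approximate number of 12-net windows on the first (largest) pyramid level."""
    h = height * net_size // min_face_size    # image height after scaling by 12 / min_face_size
    w = width * net_size // min_face_size
    if h < net_size or w < net_size:
        return 0                              # image too small for even one window
    return ((h - net_size) // stride + 1) * ((w - net_size) // stride + 1)


for size in (12, 48, 128):
    print("min_face_size=%d: about %d windows" % (size, windows_at_first_level(480, 640, size)))
```

Because both image dimensions shrink with min_face_size, the number of candidate windows falls roughly with its square, and every later stage of the cascade receives correspondingly fewer candidates.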

```python
import numpy as np
import cv2
import time
import os
#from operator import itemgetter
from load_model_functions import *
from face_detection_functions import *

# ==================  caffe  ======================================
#caffe_root = '/home/anson/caffe-master/'  # this file is expected to be in {caffe_root}/examples
import sys
#sys.path.insert(0, caffe_root + 'python')
import caffe

# ==================  load models  ======================================
net_12c_full_conv, net_12_cal, net_24c, net_24_cal, net_48c, net_48_cal = \
    load_face_models(loadNet=True)

nets = (net_12c_full_conv, net_12_cal, net_24c, net_24_cal, net_48c, net_48_cal)

start_time = time.time()

read_img_name = 'C:/Users/Administrator/Desktop/caffe/matlab/demo/1.jpg'
img = cv2.imread(read_img_name)     # BGR

print(img.shape)

# Minimum face size to detect. Smaller values find smaller faces,
# but detection slows down sharply.
min_face_size = 48
stride = 5  # stride; not actually used

# caffe_image = np.true_divide(img, 255)      # convert to caffe style (0~1 BGR)
# caffe_image = caffe_image[:, :, (2, 1, 0)]
img_forward = np.array(img, dtype=np.float32)
img_forward -= np.array((104, 117, 123))    # subtract the per-channel mean pixel value

rectangles = detect_faces_net(nets, img_forward, min_face_size, stride, True, 2, 0.05)
for rectangle in rectangles:    # draw rectangles
    cv2.rectangle(img, (rectangle[0], rectangle[1]), (rectangle[2], rectangle[3]), (255, 0, 0), 2)

end_time = time.time()
print('aver_time = %.1f ms' % ((end_time - start_time) * 1000))

cv2.imshow('test img', img)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

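The detect_faces_net() function used in the script above is implemented in face_detection_functions.py, in the same CNN_face_detection-master\face_detection folder. That file implements the detection process of the whole cascade; it is elegantly written, with clear logic and comments. I modified it for my own needs, and the code is as follows: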

import numpy as npimport cv2import timefrom operator import itemgetter# ================== caffe ======================================#caffe_root = '/home/anson/caffe-master/' # this file is expected to be in {caffe_root}/examplesimport sys#sys.path.insert(0, caffe_root + 'python')import caffedef find_initial_scale(net_kind, min_face_size):''':param net_kind: what kind of net (12, 24, or 48):param min_face_size: minimum face size:return: returns scale factor'''return float(min_face_size) / net_kinddef resize_image(img, scale):''':param img: original img:param scale: scale factor:return: resized image'''height, width, channels = img.shapenew_height = int(height / scale) # resized new heightnew_width = int(width / scale) # resized new widthnew_dim = (new_width, new_height)img_resized = cv2.resize(img, new_dim) # resized imagereturn img_resizeddef draw_rectangle(net_kind, img, face):''':param net_kind: what kind of net (12, 24, or 48):param img: image to draw on:param face: # list of info. 
in format [x, y, scale]:return: nothing'''x = face[0]y = face[1]scale = face[2]original_x = int(x * scale) # corresponding x and y at original imageoriginal_y = int(y * scale)original_x_br = int(x * scale + net_kind * scale) # bottom right x and yoriginal_y_br = int(y * scale + net_kind * scale)cv2.rectangle(img, (original_x, original_y), (original_x_br, original_y_br), (255,0,0), 2)def IoU(rect_1, rect_2):''':param rect_1: list in format [x11, y11, x12, y12, confidence, current_scale]:param rect_2: list in format [x21, y21, x22, y22, confidence, current_scale]:return: returns IoU ratio (intersection over union) of two rectangles'''x11 = rect_1[0] # first rectangle top left xy11 = rect_1[1] # first rectangle top left yx12 = rect_1[2] # first rectangle bottom right xy12 = rect_1[3] # first rectangle bottom right yx21 = rect_2[0] # second rectangle top left xy21 = rect_2[1] # second rectangle top left yx22 = rect_2[2] # second rectangle bottom right xy22 = rect_2[3] # second rectangle bottom right yx_overlap = max(0, min(x12,x22) -max(x11,x21))y_overlap = max(0, min(y12,y22) -max(y11,y21))intersection = x_overlap * y_overlapunion = (x12-x11) * (y12-y11) + (x22-x21) * (y22-y21) - intersectionreturn float(intersection) / uniondef IoM(rect_1, rect_2):''':param rect_1: list in format [x11, y11, x12, y12, confidence, current_scale]:param rect_2: list in format [x21, y21, x22, y22, confidence, current_scale]:return: returns IoM ratio (intersection over min-area) of two rectangles'''x11 = rect_1[0] # first rectangle top left xy11 = rect_1[1] # first rectangle top left yx12 = rect_1[2] # first rectangle bottom right xy12 = rect_1[3] # first rectangle bottom right yx21 = rect_2[0] # second rectangle top left xy21 = rect_2[1] # second rectangle top left yx22 = rect_2[2] # second rectangle bottom right xy22 = rect_2[3] # second rectangle bottom right yx_overlap = max(0, min(x12,x22) -max(x11,x21))y_overlap = max(0, min(y12,y22) -max(y11,y21))intersection = x_overlap * 
y_overlaprect1_area = (y12 - y11) * (x12 - x11)rect2_area = (y22 - y21) * (x22 - x21)min_area = min(rect1_area, rect2_area)return float(intersection) / min_areadef localNMS(rectangles):''':param rectangles: list of rectangles, which are lists in format [x11, y11, x12, y12, confidence, current_scale],sorted from highest confidence to smallest:return: list of rectangles after local NMS'''result_rectangles = rectangles[:] # list to returnnumber_of_rects = len(result_rectangles)threshold = 0.3 # threshold of IoU of two rectanglescur_rect = 0while cur_rect < number_of_rects - 1: # start from first element to second last elementrects_to_compare = number_of_rects - cur_rect - 1 # elements after current element to comparecur_rect_to_compare = cur_rect + 1 # start comparing with element after currentwhile rects_to_compare > 0: # while there is at least one element after current to compareif (IoU(result_rectangles[cur_rect], result_rectangles[cur_rect_to_compare]) >= threshold) \and (result_rectangles[cur_rect][5] == result_rectangles[cur_rect_to_compare][5]): # scale is samedel result_rectangles[cur_rect_to_compare] # delete the rectanglenumber_of_rects -= 1else:cur_rect_to_compare += 1 # skip to next rectanglerects_to_compare -= 1cur_rect += 1 # finished comparing for current rectanglereturn result_rectanglesdef globalNMS(rectangles):''':param rectangles: list of rectangles, which are lists in format [x11, y11, x12, y12, confidence, current_scale],sorted from highest confidence to smallest:return: list of rectangles after global NMS'''result_rectangles = rectangles[:] # list to returnnumber_of_rects = len(result_rectangles)threshold = 0.3 # threshold of IoU of two rectanglescur_rect = 0while cur_rect < number_of_rects - 1: # start from first element to second last elementrects_to_compare = number_of_rects - cur_rect - 1 # elements after current element to comparecur_rect_to_compare = cur_rect + 1 # start comparing with element ater currentwhile rects_to_compare > 0: # 
while there is at least one element after current to compareif IoU(result_rectangles[cur_rect], result_rectangles[cur_rect_to_compare]) >= 0.2 \or ((IoM(result_rectangles[cur_rect], result_rectangles[cur_rect_to_compare]) >= threshold)):#and (result_rectangles[cur_rect_to_compare][5] < 0.85)): # if IoU ratio is higher than threshold #10/12=0.8333?del result_rectangles[cur_rect_to_compare] # delete the rectanglenumber_of_rects -= 1else:cur_rect_to_compare += 1 # skip to next rectanglerects_to_compare -= 1cur_rect += 1 # finished comparing for current rectanglereturn result_rectangles# ====== Below functions (12cal ~ 48cal) take images in style of caffe (0~1 BGR)===def detect_face_12c(net_12c_full_conv, img, min_face_size, stride,multiScale=False, scale_factor=1.414, threshold=0.05):''':param img: image to detect faces:param min_face_size: minimum face size to detect (in pixels):param stride: stride (in pixels):param multiScale: whether to find faces under multiple scales or not:param scale_factor: scale to apply for pyramid:param threshold: score of patch must be above this value to pass to next net:return: list of rectangles after global NMS'''net_kind = 12rectangles = [] # list of rectangles [x11, y11, x12, y12, confidence, current_scale] (corresponding to original image)current_scale = find_initial_scale(net_kind, min_face_size) # find initial scalecaffe_img_resized = resize_image(img, current_scale) # resized initial caffe imagecurrent_height, current_width, channels = caffe_img_resized.shapewhile current_height > net_kind and current_width > net_kind:caffe_img_resized_CHW = caffe_img_resized.transpose((2, 0, 1)) # switch from H x W x C to C x H x W# shape for input (data blob is N x C x H x W), set datanet_12c_full_conv.blobs['data'].reshape(1, *caffe_img_resized_CHW.shape)net_12c_full_conv.blobs['data'].data[...] 
= caffe_img_resized_CHW# run net and take argmax for predictionnet_12c_full_conv.forward()out = net_12c_full_conv.blobs['prob'].data[0][1, :, :]# print out.shapeout_height, out_width = out.shapefor current_y in range(0, out_height):for current_x in range(0, out_width):# total_windows += 1confidence = out[current_y, current_x] # left index is y, right index is x (starting from 0)if confidence >= threshold:current_rectangle = [int(2*current_x*current_scale), int(2*current_y*current_scale),int(2*current_x*current_scale + net_kind*current_scale),int(2*current_y*current_scale + net_kind*current_scale),confidence, current_scale] # find corresponding patch on imagerectangles.append(current_rectangle)if multiScale is False:breakelse:caffe_img_resized = resize_image(caffe_img_resized, scale_factor)current_scale *= scale_factorcurrent_height, current_width, channels = caffe_img_resized.shapereturn rectanglesdef cal_face_12c(net_12_cal, caffe_img, rectangles):''':param caffe_image: image in caffe style to detect faces:param rectangles: rectangles in form [x11, y11, x12, y12, confidence, current_scale]:return: rectangles after calibration'''height, width, channels = caffe_img.shaperesult = []all_cropped_caffe_img = []for cur_rectangle in rectangles:original_x1 = cur_rectangle[0]original_y1 = cur_rectangle[1]original_x2 = cur_rectangle[2]original_y2 = cur_rectangle[3]cropped_caffe_img = caffe_img[original_y1:original_y2, original_x1:original_x2] # crop imageall_cropped_caffe_img.append(cropped_caffe_img)if len(all_cropped_caffe_img) == 0:return []output_all = net_12_cal.predict(all_cropped_caffe_img) # predict through caffefor cur_rect in range(len(rectangles)):cur_rectangle = rectangles[cur_rect]output = output_all[cur_rect]prediction = output[0] # (44, 1) ndarraythreshold = 0.1indices = np.nonzero(prediction > threshold)[0] # ndarray of indices where prediction is larger than thresholdnumber_of_cals = len(indices) # number of calibrations larger than thresholdif 
number_of_cals == 0: # if no calibration is needed, check next rectangleresult.append(cur_rectangle)continueoriginal_x1 = cur_rectangle[0]original_y1 = cur_rectangle[1]original_x2 = cur_rectangle[2]original_y2 = cur_rectangle[3]original_w = original_x2 - original_x1original_h = original_y2 - original_y1total_s_change = 0total_x_change = 0total_y_change = 0for current_cal in range(number_of_cals): # accumulate changes, and calculate averagecal_label = int(indices[current_cal]) # should be number in 0~44if (cal_label >= 0) and (cal_label <= 8): # decide s changetotal_s_change += 0.83elif (cal_label >= 9) and (cal_label <= 17):total_s_change += 0.91elif (cal_label >= 18) and (cal_label <= 26):total_s_change += 1.0elif (cal_label >= 27) and (cal_label <= 35):total_s_change += 1.10else:total_s_change += 1.21if cal_label % 9 <= 2: # decide x changetotal_x_change += -0.17elif (cal_label % 9 >= 6) and (cal_label % 9 <= 8): # ignore case when 3<=x<=5, since adding 0 doesn't changetotal_x_change += 0.17if cal_label % 3 == 0: # decide y changetotal_y_change += -0.17elif cal_label % 3 == 2: # ignore case when 1, since adding 0 doesn't changetotal_y_change += 0.17s_change = total_s_change / number_of_cals # calculate averagex_change = total_x_change / number_of_calsy_change = total_y_change / number_of_calscur_result = cur_rectangle # inherit format and last two attributes from original rectanglecur_result[0] = int(max(0, original_x1 - original_w * x_change / s_change))cur_result[1] = int(max(0, original_y1 - original_h * y_change / s_change))cur_result[2] = int(min(width, cur_result[0] + original_w / s_change))cur_result[3] = int(min(height, cur_result[1] + original_h / s_change))result.append(cur_result)result = sorted(result, key=itemgetter(4), reverse=True) # sort rectangles according to confidence# reverse, so that it ranks from large to smallreturn resultdef detect_face_24c(net_24c, caffe_img, rectangles):''':param caffe_img: image in caffe style to detect faces:param 
rectangles: rectangles in form [x11, y11, x12, y12, confidence, current_scale]:return: rectangles after calibration'''result = []all_cropped_caffe_img = []for cur_rectangle in rectangles:x1 = cur_rectangle[0]y1 = cur_rectangle[1]x2 = cur_rectangle[2]y2 = cur_rectangle[3]cropped_caffe_img = caffe_img[y1:y2, x1:x2] # crop imageall_cropped_caffe_img.append(cropped_caffe_img)if len(all_cropped_caffe_img) == 0:return []prediction_all = net_24c.predict(all_cropped_caffe_img) # predict through caffefor cur_rect in range(len(rectangles)):confidence = prediction_all[cur_rect][1]if confidence > 0.05:cur_rectangle = rectangles[cur_rect]cur_rectangle[4] = confidenceresult.append(cur_rectangle)return resultdef cal_face_24c(net_24_cal, caffe_img, rectangles):''':param caffe_image: image in caffe style to detect faces:param rectangles: rectangles in form [x11, y11, x12, y12, confidence, current_scale]:return: rectangles after calibration'''height, width, channels = caffe_img.shaperesult = []for cur_rectangle in rectangles:original_x1 = cur_rectangle[0]original_y1 = cur_rectangle[1]original_x2 = cur_rectangle[2]original_y2 = cur_rectangle[3]original_w = original_x2 - original_x1original_h = original_y2 - original_y1cropped_caffe_img = caffe_img[original_y1:original_y2, original_x1:original_x2] # crop imageoutput = net_24_cal.predict([cropped_caffe_img]) # predict through caffeprediction = output[0] # (44, 1) ndarraythreshold = 0.1indices = np.nonzero(prediction > threshold)[0] # ndarray of indices where prediction is larger than thresholdnumber_of_cals = len(indices) # number of calibrations larger than thresholdif number_of_cals == 0: # if no calibration is needed, check next rectangleresult.append(cur_rectangle)continuetotal_s_change = 0total_x_change = 0total_y_change = 0for current_cal in range(number_of_cals): # accumulate changes, and calculate averagecal_label = int(indices[current_cal]) # should be number in 0~44if (cal_label >= 0) and (cal_label <= 8): # decide s 
changetotal_s_change += 0.83elif (cal_label >= 9) and (cal_label <= 17):total_s_change += 0.91elif (cal_label >= 18) and (cal_label <= 26):total_s_change += 1.0elif (cal_label >= 27) and (cal_label <= 35):total_s_change += 1.10else:total_s_change += 1.21if cal_label % 9 <= 2: # decide x changetotal_x_change += -0.17elif (cal_label % 9 >= 6) and (cal_label % 9 <= 8): # ignore case when 3<=x<=5, since adding 0 doesn't changetotal_x_change += 0.17if cal_label % 3 == 0: # decide y changetotal_y_change += -0.17elif cal_label % 3 == 2: # ignore case when 1, since adding 0 doesn't changetotal_y_change += 0.17s_change = total_s_change / number_of_cals # calculate averagex_change = total_x_change / number_of_calsy_change = total_y_change / number_of_calscur_result = cur_rectangle # inherit format and last two attributes from original rectanglecur_result[0] = int(max(0, original_x1 - original_w * x_change / s_change))cur_result[1] = int(max(0, original_y1 - original_h * y_change / s_change))cur_result[2] = int(min(width, cur_result[0] + original_w / s_change))cur_result[3] = int(min(height, cur_result[1] + original_h / s_change))result.append(cur_result)return resultdef detect_face_48c(net_48c, caffe_img, rectangles):''':param caffe_img: image in caffe style to detect faces:param rectangles: rectangles in form [x11, y11, x12, y12, confidence, current_scale]:return: rectangles after calibration'''result = []all_cropped_caffe_img = []for cur_rectangle in rectangles:x1 = cur_rectangle[0]y1 = cur_rectangle[1]x2 = cur_rectangle[2]y2 = cur_rectangle[3]cropped_caffe_img = caffe_img[y1:y2, x1:x2] # crop imageall_cropped_caffe_img.append(cropped_caffe_img)prediction = net_48c.predict([cropped_caffe_img]) # predict through caffeconfidence = prediction[0][1]if confidence > 0.3:cur_rectangle[4] = confidenceresult.append(cur_rectangle)result = sorted(result, key=itemgetter(4), reverse=True) # sort rectangles according to confidence# reverse, so that it ranks from large to smallreturn 
resultdef cal_face_48c(net_48_cal, caffe_img, rectangles):''':param caffe_image: image in caffe style to detect faces:param rectangles: rectangles in form [x11, y11, x12, y12, confidence, current_scale]:return: rectangles after calibration'''height, width, channels = caffe_img.shaperesult = []for cur_rectangle in rectangles:original_x1 = cur_rectangle[0]original_y1 = cur_rectangle[1]original_x2 = cur_rectangle[2]original_y2 = cur_rectangle[3]original_w = original_x2 - original_x1original_h = original_y2 - original_y1cropped_caffe_img = caffe_img[original_y1:original_y2, original_x1:original_x2] # crop imageoutput = net_48_cal.predict([cropped_caffe_img]) # predict through caffeprediction = output[0] # (44, 1) ndarraythreshold = 0.1indices = np.nonzero(prediction > threshold)[0] # ndarray of indices where prediction is larger than thresholdnumber_of_cals = len(indices) # number of calibrations larger than thresholdif number_of_cals == 0: # if no calibration is needed, check next rectangleresult.append(cur_rectangle)continuetotal_s_change = 0total_x_change = 0total_y_change = 0for current_cal in range(number_of_cals): # accumulate changes, and calculate averagecal_label = int(indices[current_cal]) # should be number in 0~44if (cal_label >= 0) and (cal_label <= 8): # decide s changetotal_s_change += 0.83elif (cal_label >= 9) and (cal_label <= 17):total_s_change += 0.91elif (cal_label >= 18) and (cal_label <= 26):total_s_change += 1.0elif (cal_label >= 27) and (cal_label <= 35):total_s_change += 1.10else:total_s_change += 1.21if cal_label % 9 <= 2: # decide x changetotal_x_change += -0.17elif (cal_label % 9 >= 6) and (cal_label % 9 <= 8): # ignore case when 3<=x<=5, since adding 0 doesn't changetotal_x_change += 0.17if cal_label % 3 == 0: # decide y changetotal_y_change += -0.17elif cal_label % 3 == 2: # ignore case when 1, since adding 0 doesn't changetotal_y_change += 0.17s_change = total_s_change / number_of_cals # calculate averagex_change = total_x_change / 
number_of_calsy_change = total_y_change / number_of_calscur_result = cur_rectangle # inherit format and last two attributes from original rectanglecur_result[0] = int(max(0, original_x1 - original_w * x_change / s_change))cur_result[1] = int(max(0, original_y1 - 1.1 * original_h * y_change / s_change))cur_result[2] = int(min(width, cur_result[0] + original_w / s_change))cur_result[3] = int(min(height, cur_result[1] + 1.1 * original_h / s_change))result.append(cur_result)return resultdef detect_faces(nets, img_forward, caffe_image, min_face_size, stride,multiScale=False, scale_factor=1.414, threshold=0.05):'''Complete flow of face cascade detection:param nets: 6 nets as a tuple:param img_forward: image in normal style after subtracting mean pixel value:param caffe_image: image in style of caffe (0~1 BGR):param min_face_size::param stride::param multiScale::param scale_factor::param threshold::return: list of rectangles'''net_12c_full_conv = nets[0]net_12_cal = nets[1]net_24c = nets[2]net_24_cal = nets[3]net_48c = nets[4]net_48_cal = nets[5]rectangles = detect_face_12c(net_12c_full_conv, img_forward, min_face_size,stride, multiScale, scale_factor, threshold) # detect facesrectangles = cal_face_12c(net_12_cal, caffe_image, rectangles) # calibrationrectangles = localNMS(rectangles) # apply local NMSrectangles = detect_face_24c(net_24c, caffe_image, rectangles)rectangles = cal_face_24c(net_24_cal, caffe_image, rectangles) # calibrationrectangles = localNMS(rectangles) # apply local NMSrectangles = detect_face_48c(net_48c, caffe_image, rectangles)rectangles = globalNMS(rectangles) # apply global NMSrectangles = cal_face_48c(net_48_cal, caffe_image, rectangles) # calibrationreturn rectangles# ========== Adjusts net to take one crop of image only during test time ==========# ====== Below functions take images in normal style after subtracting mean pixel value===def detect_face_12c_net(net_12c_full_conv, img_forward, min_face_size, stride,multiScale=False, 
scale_factor=1.414, threshold=0.05):'''Adjusts net to take one crop of image only during test time:param img: image in caffe style to detect faces:param min_face_size: minimum face size to detect (in pixels):param stride: stride (in pixels):param multiScale: whether to find faces under multiple scales or not:param scale_factor: scale to apply for pyramid:param threshold: score of patch must be above this value to pass to next net:return: list of rectangles after global NMS'''net_kind = 12rectangles = [] # list of rectangles [x11, y11, x12, y12, confidence, current_scale] (corresponding to original image)current_scale = find_initial_scale(net_kind, min_face_size) # find initial scalecaffe_img_resized = resize_image(img_forward, current_scale) # resized initial caffe imagecurrent_height, current_width, channels = caffe_img_resized.shape# print "Shape after resizing : " + str(caffe_img_resized.shape)while current_height > net_kind and current_width > net_kind:caffe_img_resized_CHW = caffe_img_resized.transpose((2, 0, 1)) # switch from H x W x C to C x H x W# shape for input (data blob is N x C x H x W), set datanet_12c_full_conv.blobs['data'].reshape(1, *caffe_img_resized_CHW.shape)net_12c_full_conv.blobs['data'].data[...] 
= caffe_img_resized_CHW# run net and take argmax for predictionnet_12c_full_conv.forward()out = net_12c_full_conv.blobs['prob'].data[0][1, :, :]# print out.shapeout_height, out_width = out.shape# threshold = 0.02# idx = out[:, :] >= threshold# out[idx] = 1# idx = out[:, :] < threshold# out[idx] = 0# cv2.imshow('img', out*255)# cv2.waitKey(0)# print "Shape of output after resizing " + str(caffe_img_resized.shape) + " : " + str(out.shape)for current_y in range(0, out_height):for current_x in range(0, out_width):# total_windows += 1confidence = out[current_y, current_x] # left index is y, right index is x (starting from 0)if confidence >= threshold:current_rectangle = [int(2*current_x*current_scale), int(2*current_y*current_scale),int(2*current_x*current_scale + net_kind*current_scale),int(2*current_y*current_scale + net_kind*current_scale),confidence, current_scale] # find corresponding patch on imagerectangles.append(current_rectangle)if multiScale is False:breakelse:caffe_img_resized = resize_image(caffe_img_resized, scale_factor)current_scale *= scale_factorcurrent_height, current_width, channels = caffe_img_resized.shapereturn rectanglesdef cal_face_12c_net(net_12_cal, img_forward, rectangles):'''Adjusts net to take one crop of image only during test time:param caffe_image: image in caffe style to detect faces:param rectangles: rectangles in form [x11, y11, x12, y12, confidence, current_scale]:return: rectangles after calibration'''height, width, channels = img_forward.shaperesult = []for cur_rectangle in rectangles:original_x1 = cur_rectangle[0]original_y1 = cur_rectangle[1]original_x2 = cur_rectangle[2]original_y2 = cur_rectangle[3]original_w = original_x2 - original_x1original_h = original_y2 - original_y1cropped_caffe_img = img_forward[original_y1:original_y2, original_x1:original_x2] # crop imagecaffe_img_resized = cv2.resize(cropped_caffe_img, (12, 12))caffe_img_resized_CHW = caffe_img_resized.transpose((2, 0, 1))net_12_cal.blobs['data'].reshape(1, 
*caffe_img_resized_CHW.shape)net_12_cal.blobs['data'].data[...] = caffe_img_resized_CHWnet_12_cal.forward()output = net_12_cal.blobs['prob'].data# output = net_12_cal.predict([cropped_caffe_img]) # predict through caffeprediction = output[0] # (44, 1) ndarraythreshold = 0.1indices = np.nonzero(prediction > threshold)[0] # ndarray of indices where prediction is larger than thresholdnumber_of_cals = len(indices) # number of calibrations larger than thresholdif number_of_cals == 0: # if no calibration is needed, check next rectangleresult.append(cur_rectangle)continuetotal_s_change = 0total_x_change = 0total_y_change = 0for current_cal in range(number_of_cals): # accumulate changes, and calculate averagecal_label = int(indices[current_cal]) # should be number in 0~44if (cal_label >= 0) and (cal_label <= 8): # decide s changetotal_s_change += 0.83elif (cal_label >= 9) and (cal_label <= 17):total_s_change += 0.91elif (cal_label >= 18) and (cal_label <= 26):total_s_change += 1.0elif (cal_label >= 27) and (cal_label <= 35):total_s_change += 1.10else:total_s_change += 1.21if cal_label % 9 <= 2: # decide x changetotal_x_change += -0.17elif (cal_label % 9 >= 6) and (cal_label % 9 <= 8): # ignore case when 3<=x<=5, since adding 0 doesn't changetotal_x_change += 0.17if cal_label % 3 == 0: # decide y changetotal_y_change += -0.17elif cal_label % 3 == 2: # ignore case when 1, since adding 0 doesn't changetotal_y_change += 0.17s_change = total_s_change / number_of_cals # calculate averagex_change = total_x_change / number_of_calsy_change = total_y_change / number_of_calscur_result = cur_rectangle # inherit format and last two attributes from original rectanglecur_result[0] = int(max(0, original_x1 - original_w * x_change / s_change))cur_result[1] = int(max(0, original_y1 - original_h * y_change / s_change))cur_result[2] = int(min(width, cur_result[0] + original_w / s_change))cur_result[3] = int(min(height, cur_result[1] + original_h / s_change))result.append(cur_result)result = 
sorted(result, key=itemgetter(4), reverse=True) # sort rectangles according to confidence# reverse, so that it ranks from large to smallreturn resultdef detect_face_24c_net(net_24c, img_forward, rectangles):'''Adjusts net to take one crop of image only during test time:param caffe_img: image in caffe style to detect faces:param rectangles: rectangles in form [x11, y11, x12, y12, confidence, current_scale]:return: rectangles after calibration'''result = []for cur_rectangle in rectangles:x1 = cur_rectangle[0]y1 = cur_rectangle[1]x2 = cur_rectangle[2]y2 = cur_rectangle[3]cropped_caffe_img = img_forward[y1:y2, x1:x2] # crop imagecaffe_img_resized = cv2.resize(cropped_caffe_img, (24, 24))caffe_img_resized_CHW = caffe_img_resized.transpose((2, 0, 1))net_24c.blobs['data'].reshape(1, *caffe_img_resized_CHW.shape)net_24c.blobs['data'].data[...] = caffe_img_resized_CHWnet_24c.forward()prediction = net_24c.blobs['prob'].dataconfidence = prediction[0][1]if confidence > 0.9:#0.05:cur_rectangle[4] = confidenceresult.append(cur_rectangle)return resultdef cal_face_24c_net(net_24_cal, img_forward, rectangles):'''Adjusts net to take one crop of image only during test time:param caffe_image: image in caffe style to detect faces:param rectangles: rectangles in form [x11, y11, x12, y12, confidence, current_scale]:return: rectangles after calibration'''height, width, channels = img_forward.shaperesult = []for cur_rectangle in rectangles:original_x1 = cur_rectangle[0]original_y1 = cur_rectangle[1]original_x2 = cur_rectangle[2]original_y2 = cur_rectangle[3]original_w = original_x2 - original_x1original_h = original_y2 - original_y1cropped_caffe_img = img_forward[original_y1:original_y2, original_x1:original_x2] # crop imagecaffe_img_resized = cv2.resize(cropped_caffe_img, (24, 24))caffe_img_resized_CHW = caffe_img_resized.transpose((2, 0, 1))net_24_cal.blobs['data'].reshape(1, *caffe_img_resized_CHW.shape)net_24_cal.blobs['data'].data[...] 
= caffe_img_resized_CHW
        net_24_cal.forward()

        output = net_24_cal.blobs['prob'].data
        prediction = output[0]  # (44, 1) ndarray

        threshold = 0.1
        indices = np.nonzero(prediction > threshold)[0]  # ndarray of indices where prediction is larger than threshold
        number_of_cals = len(indices)  # number of calibrations larger than threshold

        if number_of_cals == 0:  # if no calibration is needed, check next rectangle
            result.append(cur_rectangle)
            continue

        total_s_change = 0
        total_x_change = 0
        total_y_change = 0

        for current_cal in range(number_of_cals):  # accumulate changes, and calculate average
            cal_label = int(indices[current_cal])  # should be number in 0~44
            if (cal_label >= 0) and (cal_label <= 8):  # decide s change
                total_s_change += 0.83
            elif (cal_label >= 9) and (cal_label <= 17):
                total_s_change += 0.91
            elif (cal_label >= 18) and (cal_label <= 26):
                total_s_change += 1.0
            elif (cal_label >= 27) and (cal_label <= 35):
                total_s_change += 1.10
            else:
                total_s_change += 1.21

            if cal_label % 9 <= 2:  # decide x change
                total_x_change += -0.17
            elif (cal_label % 9 >= 6) and (cal_label % 9 <= 8):  # ignore case when 3<=x<=5, since adding 0 doesn't change
                total_x_change += 0.17

            if cal_label % 3 == 0:  # decide y change
                total_y_change += -0.17
            elif cal_label % 3 == 2:  # ignore case when 1, since adding 0 doesn't change
                total_y_change += 0.17

        s_change = total_s_change / number_of_cals  # calculate average
        x_change = total_x_change / number_of_cals
        y_change = total_y_change / number_of_cals

        cur_result = cur_rectangle  # inherit format and last two attributes from original rectangle
        cur_result[0] = int(max(0, original_x1 - original_w * x_change / s_change))
        cur_result[1] = int(max(0, original_y1 - original_h * y_change / s_change))
        cur_result[2] = int(min(width, cur_result[0] + original_w / s_change))
        cur_result[3] = int(min(height, cur_result[1] + original_h / s_change))

        result.append(cur_result)

    result = sorted(result, key=itemgetter(4), reverse=True)  # sort rectangles according to confidence
    # reverse, so that it ranks from large to small
    return result


def detect_face_48c_net(net_48c, img_forward, rectangles):
    '''
    Adjusts net to take one crop of image only during test time
    :param caffe_img: image in caffe style to detect faces
    :param rectangles: rectangles in form [x11, y11, x12, y12, confidence, current_scale]
    :return: rectangles after calibration
    '''
    result = []
    for cur_rectangle in rectangles:
        x1 = cur_rectangle[0]
        y1 = cur_rectangle[1]
        x2 = cur_rectangle[2]
        y2 = cur_rectangle[3]

        cropped_caffe_img = img_forward[y1:y2, x1:x2]  # crop image
        caffe_img_resized = cv2.resize(cropped_caffe_img, (48, 48))
        caffe_img_resized_CHW = caffe_img_resized.transpose((2, 0, 1))
        net_48c.blobs['data'].reshape(1, *caffe_img_resized_CHW.shape)
        net_48c.blobs['data'].data[...] = caffe_img_resized_CHW
        net_48c.forward()

        prediction = net_48c.blobs['prob'].data
        confidence = prediction[0][1]

        if confidence > 0.95:  # 0.1:
            cur_rectangle[4] = confidence
            result.append(cur_rectangle)

    result = sorted(result, key=itemgetter(4), reverse=True)  # sort rectangles according to confidence
    # reverse, so that it ranks from large to small
    # after sorter and globalnms the best faces will be detected
    return result


def cal_face_48c_net(net_48_cal, img_forward, rectangles):
    '''
    Adjusts net to take one crop of image only during test time
    :param caffe_image: image in caffe style to detect faces
    :param rectangles: rectangles in form [x11, y11, x12, y12, confidence, current_scale]
    :return: rectangles after calibration
    '''
    height, width, channels = img_forward.shape
    result = []
    for cur_rectangle in rectangles:
        original_x1 = cur_rectangle[0]
        original_y1 = cur_rectangle[1]
        original_x2 = cur_rectangle[2]
        original_y2 = cur_rectangle[3]
        original_w = original_x2 - original_x1
        original_h = original_y2 - original_y1

        cropped_caffe_img = img_forward[original_y1:original_y2, original_x1:original_x2]  # crop image
        caffe_img_resized = cv2.resize(cropped_caffe_img, (48, 48))
        caffe_img_resized_CHW = caffe_img_resized.transpose((2, 0, 1))
        net_48_cal.blobs['data'].reshape(1, *caffe_img_resized_CHW.shape)
        net_48_cal.blobs['data'].data[...] = caffe_img_resized_CHW
        net_48_cal.forward()

        output = net_48_cal.blobs['prob'].data
        prediction = output[0]  # (44, 1) ndarray

        threshold = 0.1
        indices = np.nonzero(prediction > threshold)[0]  # ndarray of indices where prediction is larger than threshold
        number_of_cals = len(indices)  # number of calibrations larger than threshold

        if number_of_cals == 0:  # if no calibration is needed, check next rectangle
            result.append(cur_rectangle)
            continue

        total_s_change = 0
        total_x_change = 0
        total_y_change = 0

        for current_cal in range(number_of_cals):  # accumulate changes, and calculate average
            cal_label = int(indices[current_cal])  # should be number in 0~44
            if (cal_label >= 0) and (cal_label <= 8):  # decide s change
                total_s_change += 0.83
            elif (cal_label >= 9) and (cal_label <= 17):
                total_s_change += 0.91
            elif (cal_label >= 18) and (cal_label <= 26):
                total_s_change += 1.0
            elif (cal_label >= 27) and (cal_label <= 35):
                total_s_change += 1.10
            else:
                total_s_change += 1.21

            if cal_label % 9 <= 2:  # decide x change
                total_x_change += -0.17
            elif (cal_label % 9 >= 6) and (cal_label % 9 <= 8):  # ignore case when 3<=x<=5, since adding 0 doesn't change
                total_x_change += 0.17

            if cal_label % 3 == 0:  # decide y change
                total_y_change += -0.17
            elif cal_label % 3 == 2:  # ignore case when 1, since adding 0 doesn't change
                total_y_change += 0.17

        s_change = total_s_change / number_of_cals  # calculate average
        x_change = total_x_change / number_of_cals
        y_change = total_y_change / number_of_cals

        cur_result = cur_rectangle  # inherit format and last two attributes from original rectangle
        cur_result[0] = int(max(0, original_x1 - original_w * x_change / s_change))
        cur_result[1] = int(max(0, original_y1 - 1.1 * original_h * y_change / s_change))
        cur_result[2] = int(min(width, cur_result[0] + original_w / s_change))
        cur_result[3] = int(min(height, cur_result[1] + 1.1 * original_h / s_change))

        result.append(cur_result)

    return result


def detect_faces_net(nets, img_forward, min_face_size, stride,
                     multiScale=False, scale_factor=1.414, threshold=0.05):
    '''
    Complete flow of face cascade detection
    :param nets: 6 nets as a tuple
    :param img_forward: image in normal style after subtracting mean pixel value
    :param min_face_size:
    :param stride:
    :param multiScale:
    :param scale_factor:
    :param threshold:
    :return: list of rectangles
    '''
    net_12c_full_conv = nets[0]
    net_12_cal = nets[1]
    net_24c = nets[2]
    net_24_cal = nets[3]
    net_48c = nets[4]
    net_48_cal = nets[5]

    rectangles = detect_face_12c_net(net_12c_full_conv, img_forward, min_face_size,
                                     stride, multiScale, scale_factor, threshold)  # detect faces
    rectangles = cal_face_12c_net(net_12_cal, img_forward, rectangles)  # calibration
    rectangles = localNMS(rectangles)  # apply local NMS
    rectangles = detect_face_24c_net(net_24c, img_forward, rectangles)
    rectangles = cal_face_24c_net(net_24_cal, img_forward, rectangles)  # calibration
    rectangles = localNMS(rectangles)  # apply local NMS
    rectangles = detect_face_48c_net(net_48c, img_forward, rectangles)
    rectangles = globalNMS(rectangles)  # apply global NMS
    rectangles = cal_face_48c_net(net_48_cal, img_forward, rectangles)  # calibration

    return rectangles
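The three `cal_face_*` functions above share the same if/elif chain for turning a calibration class label (0 to 44) into a scale change and x/y offsets. As a reading aid, that mapping can be sketched with integer division and modulo. This is only a minimal sketch, not part of the author's repository: the helper names `decode_calibration_label`, `average_calibration`, and `apply_calibration` are hypothetical, the prediction is assumed to be a flat sequence of 45 probabilities rather than a Caffe blob, and the extra 1.1 vertical factor used in `cal_face_48c_net` is left out.

```python
# Hypothetical helpers (not from the original repository) mirroring the
# label layout used in the cal_face_* functions: label = 9*s_idx + 3*x_idx + y_idx.
S_CHANGES = (0.83, 0.91, 1.0, 1.10, 1.21)   # 5 scale changes
XY_CHANGES = (-0.17, 0.0, 0.17)             # 3 offsets, used for both x and y


def decode_calibration_label(cal_label):
    """Map a class label in 0..44 to (s_change, x_change, y_change)."""
    if not 0 <= cal_label <= 44:
        raise ValueError("calibration label must be in 0..44")
    s_change = S_CHANGES[cal_label // 9]
    x_change = XY_CHANGES[(cal_label % 9) // 3]
    y_change = XY_CHANGES[cal_label % 3]
    return s_change, x_change, y_change


def average_calibration(prediction, threshold=0.1):
    """Average the changes of all labels whose probability exceeds threshold.

    Assumes prediction is a flat sequence of 45 probabilities.
    Returns None when no label passes the threshold (no calibration needed).
    """
    labels = [i for i, p in enumerate(prediction) if p > threshold]
    if not labels:
        return None
    decoded = [decode_calibration_label(label) for label in labels]
    count = len(decoded)
    return tuple(sum(d[k] for d in decoded) / count for k in range(3))


def apply_calibration(rect, changes, width, height):
    """Return a new rectangle adjusted as in cal_face_12c_net / cal_face_24c_net."""
    x1, y1, x2, y2 = rect[:4]
    w, h = x2 - x1, y2 - y1
    s_change, x_change, y_change = changes
    new_x1 = int(max(0, x1 - w * x_change / s_change))
    new_y1 = int(max(0, y1 - h * y_change / s_change))
    new_x2 = int(min(width, new_x1 + w / s_change))
    new_y2 = int(min(height, new_y1 + h / s_change))
    return [new_x1, new_y1, new_x2, new_y2] + list(rect[4:])


if __name__ == "__main__":
    probs = [0.0] * 45
    probs[22] = 0.9  # label 22 decodes to "no scale change, no shift"
    changes = average_calibration(probs)
    print(apply_calibration([10, 10, 110, 110, 0.9, 1.0], changes, 200, 200))
```

Unlike the original functions, which modify `cur_rectangle` in place, this sketch returns a new list, so the input rectangle is left untouched.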
