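Installing and Deploying a Ceph Cluster in Production

Preface

Ceph's components and workflows are complex, and the system as a whole is large. Before trying Ceph, read as much of the official documentation as you can, and make sure you understand how the mon, OSD, MDS, PG, pool, and other components and units work together.

Official Ceph documentation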

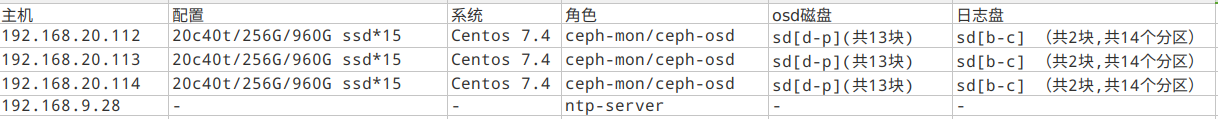

I. Configuration planning:

II. Deployment

1. Enable the NTP service on ntp-server:

    apt-get install ntp ntpdate ntp-doc
    systemctl enable ntp
    systemctl start ntp

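2. Ceph nodes

Run the following on all three nodes:

Disk partitioning follows the planning table at the top. The script below implements that plan; run it on each of the three nodes: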

    # cat ~/parted.sh
    #!/bin/bash
    set -e

    if [ ! -x "/usr/sbin/parted" ]; then
        echo "This script requires /usr/sbin/parted to run!" >&2
        exit 1
    fi

    # Data disks: one GPT partition spanning the whole disk, formatted as XFS
    DISKS="d e f g h i j k l m n o p"
    for i in ${DISKS}; do
        echo "Creating partitions on /dev/sd${i} ..."
        parted -a optimal --script /dev/sd${i} -- mktable gpt
        parted -a optimal --script /dev/sd${i} -- mkpart primary xfs 0% 100%
        sleep 1
        #echo "Formatting /dev/sd${i}1 ..."
        mkfs.xfs -f /dev/sd${i}1 &
    done
    wait    # let the background mkfs.xfs jobs finish before continuing

    # SSDs: seven journal partitions each
    SSDS="b c"
    for i in ${SSDS}; do
        parted -s /dev/sd${i} mklabel gpt
        parted -s /dev/sd${i} mkpart primary 0% 10%
        parted -s /dev/sd${i} mkpart primary 11% 20%
        parted -s /dev/sd${i} mkpart primary 21% 30%
        parted -s /dev/sd${i} mkpart primary 31% 40%
        parted -s /dev/sd${i} mkpart primary 41% 50%
        parted -s /dev/sd${i} mkpart primary 51% 60%
        parted -s /dev/sd${i} mkpart primary 61% 70%
    done

    # The journal partitions must be owned by the ceph user
    chown -R ceph:ceph /dev/sdb[1-7]
    chown -R ceph:ceph /dev/sdc[1-7]
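Because the partitioning script is destructive, it can help to print the commands for one SSD before running anything. The following is a minimal dry-run sketch, assuming the same 0%-10%, 11%-20%, ... layout as the script; `print_journal_cmds` is a hypothetical helper name, and nothing here touches a disk.

```shell
#!/bin/bash
# Dry run only: print the journal-partition commands for one SSD, do not execute them.
# print_journal_cmds is a hypothetical helper, not part of the original script.
print_journal_cmds() {
    local dev="$1" count="$2" i start end
    echo "parted -s ${dev} mklabel gpt"
    for ((i = 0; i < count; i++)); do
        # The first partition starts at 0%; later ones start at 11%, 21%, ...
        if ((i == 0)); then
            start=0
        else
            start=$((i * 10 + 1))
        fi
        end=$(((i + 1) * 10))
        echo "parted -s ${dev} mkpart primary ${start}% ${end}%"
    done
}

print_journal_cmds /dev/sdb 7
```

Reviewing the printed lines against the planning table is a cheap check before the real script is run on each node.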

Add the following entries to /etc/hosts and scp the file to all three nodes:

    192.168.20.112 h020112
    192.168.20.113 h020113
    192.168.20.114 h020114
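If the entries are appended by a script, re-running it should not duplicate them. The sketch below shows one possible way to make the append idempotent; `add_host_entry` is a hypothetical helper, and a temporary file stands in for /etc/hosts so the example is safe to run.

```shell
#!/bin/bash
# add_host_entry is a hypothetical helper: append "IP NAME" only if NAME is not yet present.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # -w matches the host name as a whole word, so h020112 does not match h0201123.
    if ! grep -qw -- "${name}" "${file}" 2>/dev/null; then
        echo "${ip} ${name}" >> "${file}"
    fi
}

hosts_file="$(mktemp)"    # stand-in for /etc/hosts
add_host_entry "${hosts_file}" 192.168.20.112 h020112
add_host_entry "${hosts_file}" 192.168.20.113 h020113
add_host_entry "${hosts_file}" 192.168.20.114 h020114
add_host_entry "${hosts_file}" 192.168.20.112 h020112    # repeated call changes nothing
cat "${hosts_file}"
```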

Disable the firewall and SELinux, and add a cron job that synchronizes the time periodically:

    sed -i 's/SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
    setenforce 0
    systemctl stop firewalld
    systemctl disable firewalld
    cat >>/etc/crontab<<EOF
    * */1 * * * root ntpdate 192.168.9.28 && hwclock --systohc
    EOF
    cd /etc/yum.repos.d
    mv CentOS-Base.repo CentOS-Base.repo.bak
    wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
    yum -y install ntp ntpdate
    yum -y install ceph

Run the following on node1 (192.168.20.112):

    wget https://download.ceph.com/rpm-kraken/el7/noarch/ceph-deploy-1.5.37-0.noarch.rpm
    yum -y install ceph-deploy-1.5.37-0.noarch.rpm

    # Create an SSH key and copy it to every node for passwordless login
    ssh-keygen
    ssh-copy-id h020112
    ssh-copy-id h020113
    ssh-copy-id h020114

    # Create the cluster and generate the configuration file
    mkdir ceph-cluster && cd ceph-cluster
    ceph-deploy new h020112 h020113 h020114

    ## Edit the generated ceph.conf and add the following settings:
    # 80 GB journal
    osd_journal_size = 81920
    public_network= 192.168.20.0/24
    # Replica count of 2 (the default is 3); the minimum working size is size - (size/2)
    osd pool default size = 2
    # The official recommendation is an average of at least 30 PGs per OSD,
    # i.e. pg num > osd_num * 30 / 2 (the replica count)
    osd pool default pg num = 1024
    osd pool default pgp num = 1024

    # Push ceph.conf to the nodes
    ceph-deploy --overwrite-conf config push h020112 h020113 h020114

    # If you get "RuntimeError: bootstrap-mds keyring not found; run 'gatherkeys'",
    # run the following command to fetch the keys
    ceph-deploy gatherkeys h020112 h020113 h020114

    # Initialize the mon nodes
    ceph-deploy mon create-initial
    ceph -s    # check that the mons were added successfully

    # Add OSDs to the cluster
    cd /etc/ceph    # you must be in this directory
    # Run the script below; check the journal-to-data-disk mapping again and again
    [root@h20112 ceph]# cat add_osd.sh
    #!/bin/bash
    for ip in $(cat ~/ceph-cluster/cephosd.txt)
    do
        echo ----$ip-----------;
        ceph-deploy --overwrite-conf osd prepare $ip:sdd1:/dev/sdb1 $ip:sde1:/dev/sdb2 $ip:sdf1:/dev/sdb3 $ip:sdg1:/dev/sdb4 $ip:sdh1:/dev/sdb5 $ip:sdi1:/dev/sdb6 \
            $ip:sdj1:/dev/sdc1 $ip:sdk1:/dev/sdc2 $ip:sdl1:/dev/sdc3 $ip:sdm1:/dev/sdc4 $ip:sdn1:/dev/sdc5 $ip:sdo1:/dev/sdc6 $ip:sdp1:/dev/sdc7
    done
    for ip in $(cat ~/ceph-cluster/cephosd.txt)
    do
        echo ----$ip-----------;
        ceph-deploy osd activate $ip:sdd1:/dev/sdb1 $ip:sde1:/dev/sdb2 $ip:sdf1:/dev/sdb3 $ip:sdg1:/dev/sdb4 $ip:sdh1:/dev/sdb5 $ip:sdi1:/dev/sdb6 \
            $ip:sdj1:/dev/sdc1 $ip:sdk1:/dev/sdc2 $ip:sdl1:/dev/sdc3 $ip:sdm1:/dev/sdc4 $ip:sdn1:/dev/sdc5 $ip:sdo1:/dev/sdc6 $ip:sdp1:/dev/sdc7
    done
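Since the data-disk-to-journal mapping in add_osd.sh is typed out by hand twice, one option is to generate the argument list from two arrays and verify that no journal partition is used twice. This is a sketch under the assumption that the disk and partition names match the script above; `build_osd_args` is a hypothetical helper, and it only prints the arguments.

```shell
#!/bin/bash
# build_osd_args is a hypothetical helper: pair each data disk with one journal
# partition and print the host:data:journal arguments for a single host.
build_osd_args() {
    local host="$1"
    local data=(sdd sde sdf sdg sdh sdi sdj sdk sdl sdm sdn sdo sdp)
    local journals=(sdb1 sdb2 sdb3 sdb4 sdb5 sdb6 sdc1 sdc2 sdc3 sdc4 sdc5 sdc6 sdc7)
    if ((${#data[@]} != ${#journals[@]})); then
        echo "data/journal count mismatch" >&2
        return 1
    fi
    local i args=()
    for i in "${!data[@]}"; do
        args+=("${host}:${data[i]}1:/dev/${journals[i]}")
    done
    echo "${args[*]}"
}

# Print one argument per line so the pairing is easy to review.
build_osd_args h020112 | tr ' ' '\n'

# Count the distinct journal partitions; this should equal the number of data disks.
build_osd_args h020112 | tr ' ' '\n' | cut -d: -f3 | sort -u | wc -l
```

The printed list could then be passed to `ceph-deploy osd prepare` and `ceph-deploy osd activate` in place of the hand-written arguments.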

Check the result:

    [root@h20112 ceph]# ceph osd tree
    ID WEIGHT   TYPE NAME        UP/DOWN REWEIGHT PRIMARY-AFFINITY
    -1 34.04288 root default
    -2 11.34763     host h020112
     0  0.87289         osd.0         up  1.00000          1.00000
     3  0.87289         osd.3         up  1.00000          1.00000
     4  0.87289         osd.4         up  1.00000          1.00000
     5  0.87289         osd.5         up  1.00000          1.00000
     6  0.87289         osd.6         up  1.00000          1.00000
     7  0.87289         osd.7         up  1.00000          1.00000
     8  0.87289         osd.8         up  1.00000          1.00000
     9  0.87289         osd.9         up  1.00000          1.00000
    10  0.87289         osd.10        up  1.00000          1.00000
    11  0.87289         osd.11        up  1.00000          1.00000
    12  0.87289         osd.12        up  1.00000          1.00000
    13  0.87289         osd.13        up  1.00000          1.00000
    14  0.87289         osd.14        up  1.00000          1.00000
    -3 11.34763     host h020113
     1  0.87289         osd.1         up  1.00000          1.00000
    15  0.87289         osd.15        up  1.00000          1.00000
    16  0.87289         osd.16        up  1.00000          1.00000
    17  0.87289         osd.17        up  1.00000          1.00000
    18  0.87289         osd.18        up  1.00000          1.00000
    19  0.87289         osd.19        up  1.00000          1.00000
    20  0.87289         osd.20        up  1.00000          1.00000
    21  0.87289         osd.21        up  1.00000          1.00000
    22  0.87289         osd.22        up  1.00000          1.00000
    23  0.87289         osd.23        up  1.00000          1.00000
    24  0.87289         osd.24        up  1.00000          1.00000
    25  0.87289         osd.25        up  1.00000          1.00000
    26  0.87289         osd.26        up  1.00000          1.00000
    -4 11.34763     host h020114
     2  0.87289         osd.2         up  1.00000          1.00000
    27  0.87289         osd.27        up  1.00000          1.00000
    28  0.87289         osd.28        up  1.00000          1.00000
    29  0.87289         osd.29        up  1.00000          1.00000
    30  0.87289         osd.30        up  1.00000          1.00000
    31  0.87289         osd.31        up  1.00000          1.00000
    32  0.87289         osd.32        up  1.00000          1.00000
    33  0.87289         osd.33        up  1.00000          1.00000
    34  0.87289         osd.34        up  1.00000          1.00000
    35  0.87289         osd.35        up  1.00000          1.00000
    36  0.87289         osd.36        up  1.00000          1.00000
    37  0.87289         osd.37        up  1.00000          1.00000
    38  0.87289         osd.38        up  1.00000          1.00000
    [root@h20112 ceph]# ceph -s
        cluster 6661d89d-5895-4bcb-9b11-9400638afc85
         health HEALTH_OK
         monmap e1: 3 mons at {h020112=192.168.20.112:6789/0,h020113=192.168.20.113:6789/0,h020114=192.168.20.114:6789/0}
                election epoch 6, quorum 0,1,2 h020112,h020113,h020114
         osdmap e199: 39 osds: 39 up, 39 in
                flags sortbitwise,require_jewel_osds
          pgmap v497: 1024 pgs, 1 pools, 0 bytes data, 0 objects
                4385 MB used, 34854 GB / 34858 GB avail
                    1024 active+clean

    # If the pg_num and pgp_num set in the configuration file did not take effect, set them with these commands:
    sudo ceph osd pool set rbd pg_num 1024
    sudo ceph osd pool set rbd pgp_num 1024
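The value 1024 is consistent with the guideline quoted in the ceph.conf comments (more than osd_num * 30 / replica count PGs) for this cluster's 39 OSDs and 2 replicas. The arithmetic can be sketched as follows; rounding up to a power of two is an assumption based on common practice rather than something stated in this article, and `suggest_pg_num` is a hypothetical helper name.

```shell
#!/bin/bash
# suggest_pg_num is a hypothetical helper: smallest power of two that is strictly
# greater than osds * per_osd / replicas (per_osd defaults to 30, as in the article).
suggest_pg_num() {
    local osds="$1" replicas="$2" per_osd="${3:-30}"
    local target=$((osds * per_osd / replicas))
    local pg=1
    while ((pg <= target)); do
        pg=$((pg * 2))
    done
    echo "${pg}"
}

suggest_pg_num 39 2    # 39 * 30 / 2 = 585, so this prints 1024
```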

Customizing CRUSH placement rules:

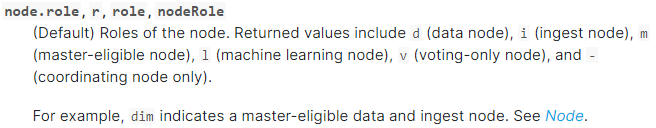

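Ceph defines the following bucket types, listed from the lowest level to the highest. They let you attach OSDs flexibly to management units that mirror the physical layout, such as hosts, chassis, racks, PDUs, rooms, and regions. The total weight of each bucket equals the sum of the weights of all OSDs beneath it.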

    type 0 osd
    type 1 host
    type 2 chassis
    type 3 rack
    type 4 row
    type 5 pdu
    type 6 pod
    type 7 room
    type 8 datacenter
    type 9 region
    type 10 root

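Customizing the CRUSH hierarchy:

Only the procedure is described here; it was not applied. In this environment the number of disks, the disk specifications, and the host locations are all uniform, so CRUSH is left unadjusted for now.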

    ceph osd crush add-bucket hnc root         # add a root-level bucket named hnc
    ceph osd crush add-bucket rack0 rack       # add a rack-level bucket named rack0
    ceph osd crush add-bucket rack1 rack       # add a rack-level bucket named rack1
    ceph osd crush add-bucket rack2 rack       # add a rack-level bucket named rack2
    ceph osd crush move rack0 root=hnc         # move rack0 under hnc
    ceph osd crush move rack1 root=hnc         # move rack1 under hnc
    ceph osd crush move rack2 root=hnc         # move rack2 under hnc
    ceph osd crush move h020112 rack=rack0     # move host h020112 under rack0
    ceph osd crush move h020113 rack=rack1     # move host h020113 under rack1
    ceph osd crush move h020114 rack=rack2     # move host h020114 under rack2
    ceph osd getcrushmap -o map_old            # export the map
    crushtool -d map_old -o map_old.txt        # decompile it into an editable text format
    crushtool -c map_new.txt -o map_new        # compile the edited text back into a map
    ceph osd setcrushmap -i map_new            # import the map into Ceph
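The add-bucket and move commands follow a regular pattern (one rack per host), so they can be generated in a loop instead of being copied and edited by hand. A minimal sketch, assuming one host per rack and racks named rack0, rack1, ...; `print_crush_cmds` is a hypothetical helper that only prints the commands and does not run them.

```shell
#!/bin/bash
# print_crush_cmds is a hypothetical helper: print the bucket commands for a root
# and a list of hosts, placing each host in its own rack. Nothing is executed.
print_crush_cmds() {
    local root="$1"
    shift
    local i=0 host
    echo "ceph osd crush add-bucket ${root} root"
    for host in "$@"; do
        echo "ceph osd crush add-bucket rack${i} rack"
        echo "ceph osd crush move rack${i} root=${root}"
        echo "ceph osd crush move ${host} rack=rack${i}"
        i=$((i + 1))
    done
}

print_crush_cmds hnc h020112 h020113 h020114
```

After reviewing the output, it could be piped to a shell on the admin node.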

Edit the configuration file to stop Ceph from updating the CRUSH map automatically:

    echo 'osd_crush_update_on_start = false' >> /etc/ceph/ceph.conf
    /etc/init.d/ceph restart

Use the following sample CRUSH map (map_new.txt):

    # begin crush map
    tunable choose_local_tries 0
    tunable choose_local_fallback_tries 0
    tunable choose_total_tries 50
    tunable chooseleaf_descend_once 1
    tunable straw_calc_version 1

    # devices
    device 0 osd.0
    device 1 osd.1
    device 2 osd.2
    device 3 osd.3
    device 4 osd.4
    device 5 osd.5
    device 6 osd.6
    device 7 osd.7
    device 8 osd.8
    device 9 osd.9
    device 10 osd.10
    device 11 osd.11

    # types
    type 0 osd
    type 1 host
    type 2 chassis
    type 3 rack
    type 4 row
    type 5 pdu
    type 6 pod
    type 7 room
    type 8 datacenter
    type 9 region
    type 10 root

    # buckets
    root default {
        id -1        # do not change unnecessarily
        # weight 0.000
        alg straw
        hash 0       # rjenkins1
    }
    # Weights are usually set from disk capacity (the base) and performance (a multiplier),
    # for example 1.00 for 1 TB and 2.00 for 2 TB, with a multiplier of 1 for HDD and 2 for SSD
    host h020112 {
        id -2        # do not change unnecessarily
        # weight 0.000
        alg straw
        hash 0       # rjenkins1
        item osd.0 weight 2.000
        item osd.1 weight 2.000
        item osd.2 weight 2.000
        item osd.3 weight 2.000
        # every OSD must be listed; the list is abbreviated here
    }
    host h020113 {
        id -3        # do not change unnecessarily
        # weight 0.000
        alg straw
        hash 0       # rjenkins1
        item osd.4 weight 2.000
        item osd.5 weight 2.000
        item osd.6 weight 2.000
        item osd.7 weight 2.000
    }
    host h020114 {
        id -4        # do not change unnecessarily
        # weight 0.000
        alg straw
        hash 0       # rjenkins1
        item osd.8 weight 2.000
        item osd.9 weight 2.000
        item osd.10 weight 2.000
        item osd.11 weight 2.000
    }
    rack rack0 {
        id -6        # do not change unnecessarily
        # weight 0.000
        alg straw
        hash 0       # rjenkins1
        item h020112 weight 8.000
    }
    rack rack1 {
        id -7        # do not change unnecessarily
        # weight 0.000
        alg straw
        hash 0       # rjenkins1
        item h020113 weight 8.000
    }
    rack rack2 {
        id -8        # do not change unnecessarily
        # weight 0.000
        alg straw
        hash 0       # rjenkins1
        item h020114 weight 8.000
    }
    root hnc {
        id -5        # do not change unnecessarily
        # weight 0.000
        alg straw
        hash 0       # rjenkins1
        item rack0 weight 8.000
        item rack1 weight 8.000
        item rack2 weight 8.000
    }

    # rules
    rule replicated_ruleset {                 # rule set name; can be specified when creating a pool
        ruleset 0                             # rule set number; number them sequentially
        type replicated                       # pool type is replicated (the other mode is erasure-code)
        min_size 1                            # a pool's replica count must not be less than 1
        max_size 10                           # a pool's replica count must not be greater than 10
        step take hnc                         # entry point from which PGs look for replica locations
        step chooseleaf firstn 0 type rack    # choose leaf nodes, depth first, isolated by rack
        step emit
    }
    # end crush map
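When a map like this is edited by hand, the weight given to a bucket in its parent should match the sum of the item weights inside it (four OSDs at 2.000 give a host weight of 8.000). The sketch below sums the `item ... weight` lines per bucket with awk so the figures can be compared; `sum_bucket_weights` is a hypothetical helper, and the input is a shortened excerpt in the same syntax as the sample map.

```shell
#!/bin/bash
# sum_bucket_weights is a hypothetical helper: read decompiled CRUSH map text on
# stdin and print "<bucket name> <sum of its item weights>" for every bucket.
sum_bucket_weights() {
    awk '
        # Bucket header, e.g. "host h020112 {" (rule blocks are skipped)
        $3 == "{" && NF == 3 && $1 != "rule" { bucket = $2; total = 0; next }
        # Item line, e.g. "item osd.0 weight 2.000"
        $1 == "item" && $3 == "weight" { total += $4; next }
        # Closing brace ends the current bucket
        $1 == "}" && bucket != "" { printf "%s %.3f\n", bucket, total; bucket = "" }
    '
}

sum_bucket_weights <<'EOF'
host h020112 {
    id -2
    alg straw
    hash 0
    item osd.0 weight 2.000
    item osd.1 weight 2.000
    item osd.2 weight 2.000
    item osd.3 weight 2.000
}
rack rack0 {
    id -6
    item h020112 weight 8.000
}
EOF
```

Running it against the full map_new.txt before compiling gives a quick consistency check of host, rack, and root weights.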

Compile the modified CRUSH map and inject it into the cluster:

    crushtool -c map_new.txt -o map_new
    ceph osd setcrushmap -i map_new
    ceph osd tree
    ceph osd crush rm default                      # remove the default root from the CRUSH map
    systemctl stop ceph\*.service ceph\*.target    # stop all services
    systemctl start ceph.target                    # start the services
