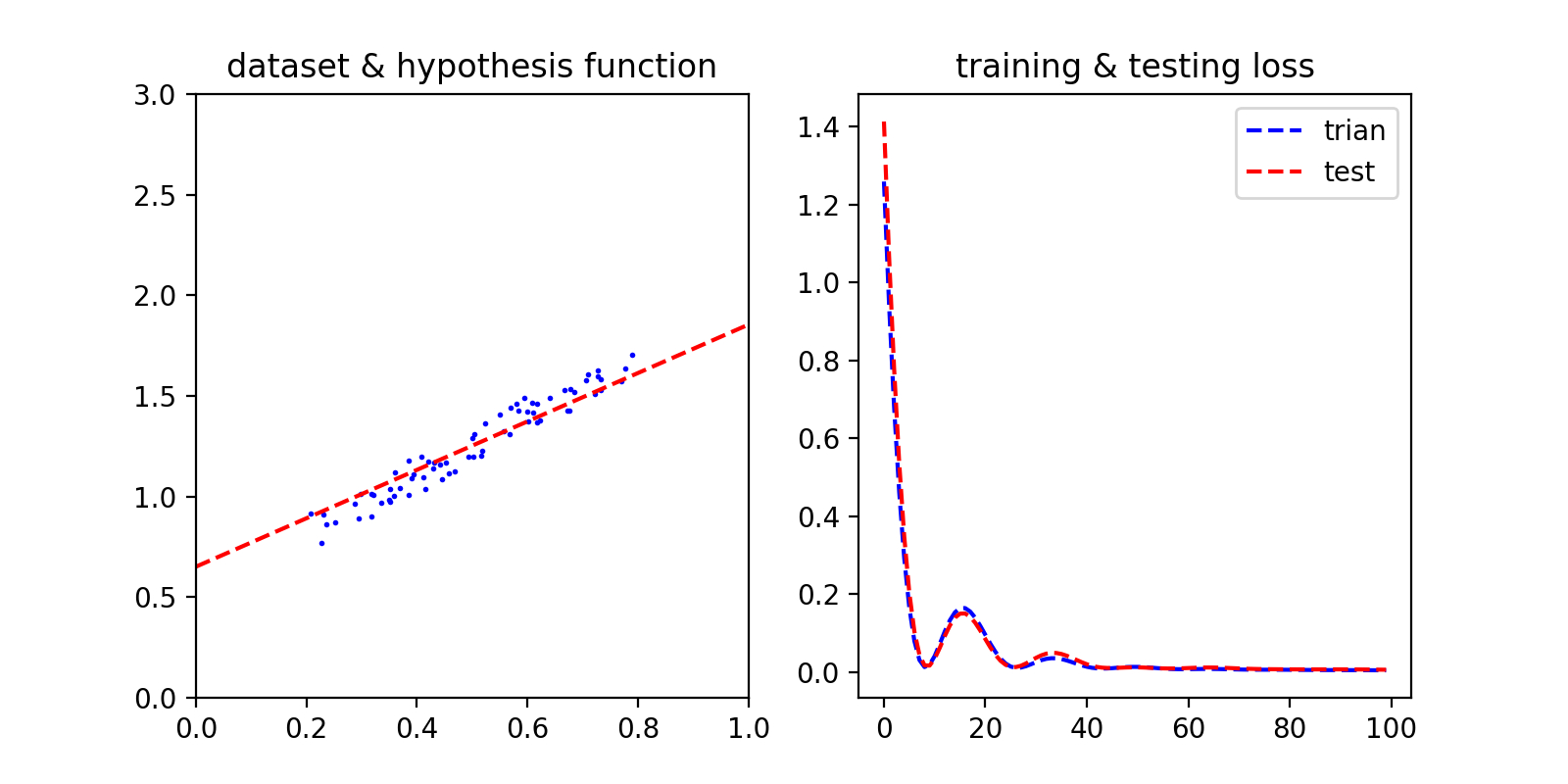

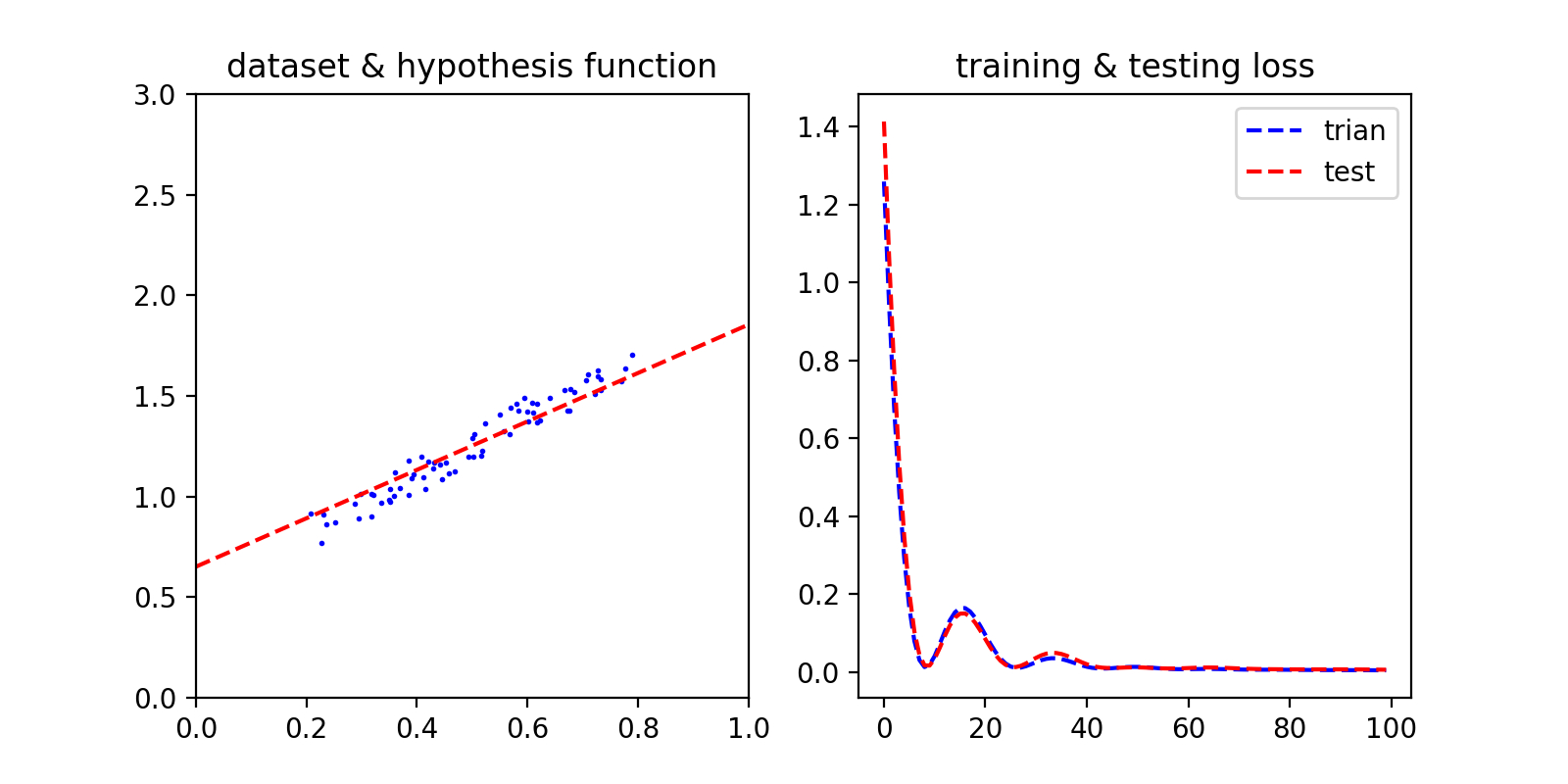

Univariate Linear Regression with TensorFlow

Code

    # Note: this script uses the TensorFlow 1.x graph/session API
    # (tf.placeholder, tf.Session, tf.train.AdamOptimizer).
    import tensorflow as tf
    import numpy as np
    import matplotlib.pyplot as plt

    # generate data: y = 1.5 * x + 0.5 plus zero-mean uniform noise
    data_size = 100
    x_data = np.random.rand(data_size) * 0.6 + 0.2
    noise = np.random.rand(data_size)
    noise = (noise - noise.mean()) * 0.2
    w = 1.5
    b = 0.5
    y_data = x_data * w + b + noise

    # 70/30 train/test split
    train_test_boundary = int(0.7 * data_size)
    x_train = x_data[:train_test_boundary]
    y_train = y_data[:train_test_boundary]
    x_test = x_data[train_test_boundary:]
    y_test = y_data[train_test_boundary:]

    # my model
    train_loss_history = []
    test_loss_history = []
    nb_epoch = 100
    my_w = 0
    my_b = 0
    learning_rate = 0.1

    with tf.Graph().as_default():
        x = tf.placeholder(dtype=tf.float32, shape=[None])
        y_ = tf.placeholder(dtype=tf.float32, shape=[None])
        # trainable parameters (these shadow the true w and b defined above)
        w = tf.Variable(0, dtype=tf.float32)
        b = tf.Variable(0, dtype=tf.float32)
        y = x * w + b
        # mean squared error
        loss = tf.reduce_mean((y - y_) ** 2)
        train = tf.train.AdamOptimizer(learning_rate).minimize(loss)

        with tf.Session() as sess:
            sess.run(tf.global_variables_initializer())
            for i in range(nb_epoch):
                # one full-batch update, then record the train and test loss
                sess.run(train, feed_dict={x: x_train, y_: y_train})
                l = sess.run(loss, feed_dict={x: x_train, y_: y_train})
                train_loss_history.append(l)
                l = sess.run(loss, feed_dict={x: x_test, y_: y_test})
                test_loss_history.append(l)
            # read the learned parameters while the session is still open
            my_w = w.eval()
            my_b = b.eval()

    # display
    plt.figure(figsize=(8, 4))
    plt.subplot(1, 2, 1)
    plt.xlim([0, 1])
    plt.ylim([0, 3])
    plt.plot(np.linspace(0, 1, 100), my_w * np.linspace(0, 1, 100) + my_b, 'r--')
    plt.scatter(x_train, y_train, 1.0, 'b', marker='o')
    plt.title('dataset & hypothesis function')
    plt.subplot(1, 2, 2)
    plt.title('training & testing loss')
    plt.plot(train_loss_history, 'b--')
    plt.plot(test_loss_history, 'r--')
    plt.legend(['train', 'test'])
    plt.show()

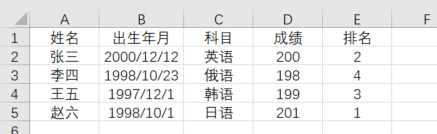

Result plot
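As a sanity check on the learned parameters, the same line can also be fitted in closed form with ordinary least squares. The sketch below is not part of the original script: the helper name `fit_line_closed_form`, the fixed random seed, and the use of `np.polyfit` are all assumptions made for illustration. It regenerates data the same way as the code above and should give a slope near 1.5 and an intercept near 0.5, which is what the TensorFlow model is expected to approach after training.

```python
import numpy as np


def fit_line_closed_form(x, y):
    """Least-squares fit of y ~ w * x + b; returns (w, b).

    Hypothetical helper for illustration, not from the original post.
    """
    # np.polyfit returns coefficients from highest degree to lowest,
    # so for deg=1 the result is [slope, intercept].
    w_hat, b_hat = np.polyfit(x, y, deg=1)
    return float(w_hat), float(b_hat)


# Fixed seed is an assumption; the original script is unseeded.
rng = np.random.default_rng(0)

# Same data recipe as the TensorFlow script: y = 1.5 * x + 0.5 + noise
data_size = 100
x_data = rng.random(data_size) * 0.6 + 0.2
noise = rng.random(data_size)
noise = (noise - noise.mean()) * 0.2
y_data = x_data * 1.5 + 0.5 + noise

# Fit on the training portion only (first 70%)
boundary = int(0.7 * data_size)
w_hat, b_hat = fit_line_closed_form(x_data[:boundary], y_data[:boundary])
print(f"closed-form fit: w = {w_hat:.3f}, b = {b_hat:.3f}")
```

If `my_w` and `my_b` from the TensorFlow run differ noticeably from these values, the likely culprits are too few epochs or an unsuitable learning rate rather than the model itself.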
