Introduction to Big Data, Part 2

1. Make all three HDFS processes start under the hostname hadoop002

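The configuration directory differs between versions. Older releases used conf, a single-level directory like the one most big-data components use; current releases use etc/hadoop.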

[hadoop@hadoop002 hadoop]$ ll
total 140
-rw-r--r-- 1 hadoop hadoop 884 Feb 13 22:34 core-site.xml    (core settings shared by the three Hadoop components HDFS, MapReduce and YARN)
-rw-r--r-- 1 hadoop hadoop 4294 Feb 13 22:30 hadoop-env.sh    (JDK directory and Hadoop home directory)
-rw-r--r-- 1 hadoop hadoop 867 Feb 13 22:34 hdfs-site.xml
-rw-r--r-- 1 hadoop hadoop 11291 Mar 24 2016 log4j.properties
-rw-r--r-- 1 hadoop hadoop 758 Mar 24 2016 mapred-site.xml.template
-rw-r--r-- 1 hadoop hadoop 10 Mar 24 2016 slaves
-rw-r--r-- 1 hadoop hadoop 690 Mar 24 2016 yarn-site.xml
[hadoop@hadoop002 hadoop]$

Hadoop has three components: HDFS (must be deployed), MapReduce (needs no deployment) and YARN (must be deployed).

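In production as well as for study, do not deploy by IP address. Deploy by hostname and use the same hostname everywhere.

Only /etc/hosts needs to change. Add the mapping with vi /etc/hosts, for example:

172.31.236.240 hadoop002

If the network segment changes later, only the IP address in front has to be updated.

Do not delete the first two lines of /etc/hosts (the default localhost entries), or things will break.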
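To make the point about editing only the IP concrete, here is a minimal Python sketch of how an /etc/hosts-style table maps names to addresses. The first two sample lines are assumed to be the usual localhost defaults, the replacement address 172.31.240.10 is hypothetical, and parse_hosts is an illustrative name, not a system API. Real lookups go through the system resolver, which this does not model.

```python
# Sketch: how an /etc/hosts-style table maps hostnames to IPs.
# The first two sample lines are assumed localhost defaults;
# the new IP 172.31.240.10 is hypothetical.

HOSTS_BEFORE = """\
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
172.31.236.240 hadoop002
"""

# Only the IP in front changes when the network segment moves.
HOSTS_AFTER = HOSTS_BEFORE.replace("172.31.236.240", "172.31.240.10")


def parse_hosts(text):
    """Return {hostname: ip}; comments and blank lines are skipped."""
    table = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            table.setdefault(name, ip)  # keep the first entry for each name
    return table


if __name__ == "__main__":
    print(parse_hosts(HOSTS_BEFORE)["hadoop002"])
    print(parse_hosts(HOSTS_AFTER)["hadoop002"])
```

Every Hadoop config file keeps saying hadoop002, so a subnet change touches one line of /etc/hosts and nothing else.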

Settings to change for the namenode process:

[hadoop@hadoop002 hadoop]$ vi core-site.xml
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop002:9000</value>
    </property>
</configuration>

For the datanode process:

[hadoop@hadoop002 hadoop]$ vi slaves

This file lists the namenode's workers. Replace the default localhost with the hostname hadoop002; if there are several workers, put one hostname per line.

For the secondarynamenode process (add these inside <configuration> in hdfs-site.xml):

<property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>hadoop002:50090</value>
</property>
<property>
    <name>dfs.namenode.secondary.https-address</name>
    <value>hadoop002:50091</value>
</property>
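One way to double-check these edits is to read the settings back. The sketch below, assuming the core-site.xml content shown above, pulls the NameNode host and port out of fs.defaultFS and parses a slaves file as one hostname per line. The helper names are hypothetical; only Python's standard xml.etree.ElementTree and urllib.parse are used.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# The core-site.xml content from this post.
CORE_SITE = """<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop002:9000</value>
    </property>
</configuration>"""


def read_properties(xml_text):
    """Return {name: value} for every <property> in a Hadoop *-site.xml."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def namenode_address(core_site_xml):
    """Split fs.defaultFS into (host, port)."""
    uri = urlparse(read_properties(core_site_xml)["fs.defaultFS"])
    return uri.hostname, uri.port


def read_slaves(text):
    """One hostname per line; blank lines and '#' comments are ignored."""
    hosts = []
    for raw in text.splitlines():
        host = raw.split("#", 1)[0].strip()
        if host:
            hosts.append(host)
    return hosts


if __name__ == "__main__":
    print(namenode_address(CORE_SITE))  # ('hadoop002', 9000)
    print(read_slaves("hadoop002\n"))    # ['hadoop002']
```

If namenode_address returns localhost or an IP instead of hadoop002, the daemons will not start under the hostname.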

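Where to look these settings up: the official documentation. Search Baidu for "cdh tar", find the release matching your Hadoop version (the one with cdh in its name), and look for the configuration files at the very bottom of its page.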

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ cd
[hadoop@hadoop002 ~]$ ll
total 8
drwxrwxr-x 3 hadoop hadoop 4096 Feb 13 22:21 app
drwxrwxr-x 8 hadoop hadoop 4096 Feb 13 20:46 d5
[hadoop@hadoop002 ~]$ ll -a
total 68
drwx------ 7 hadoop hadoop 4096 Feb 16 20:25 .
drwxr-xr-x. 5 root root 4096 Oct 22 10:45 ..
drwxrwxr-x 3 hadoop hadoop 4096 Feb 13 22:21 app
-rw------- 1 hadoop hadoop 15167 Feb 13 23:29 .bash_history
-rw-r--r-- 1 hadoop hadoop 18 Mar 23 2017 .bash_logout
-rw-r--r-- 1 hadoop hadoop 293 Sep 19 23:22 .bash_profile
-rw-r--r-- 1 hadoop hadoop 124 Mar 23 2017 .bashrc
drwxrwxr-x 8 hadoop hadoop 4096 Feb 13 20:46 d5
drwxrw---- 3 hadoop hadoop 4096 Sep 19 17:01 .pki
drwx------ 2 hadoop hadoop 4096 Feb 13 22:39 .ssh
drwxr-xr-x 2 hadoop hadoop 4096 Oct 14 20:57 .vim
-rw------- 1 hadoop hadoop 8995 Feb 16 20:25 .viminfo
[hadoop@hadoop002 ~]$ ll .ssh
total 16
-rw------- 1 hadoop hadoop 398 Feb 13 22:37 authorized_keys
-rw------- 1 hadoop hadoop 1675 Feb 13 22:36 id_rsa
-rw-r--r-- 1 hadoop hadoop 398 Feb 13 22:36 id_rsa.pub
-rw-r--r-- 1 hadoop hadoop 780 Feb 13 22:49 known_hosts

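Rebuild the SSH trust relationship. The previous setup would also work, but to keep everything consistent we configure it again from scratch.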

[hadoop@hadoop002 ~]$ rm -rf .ssh
[hadoop@hadoop002 ~]$ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):
Created directory '/home/hadoop/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/hadoop/.ssh/id_rsa.
Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.
The key fingerprint is:
ca:e4:a1:fc:9f:e2:86:e7:9c:ab:f2:19:7a:70:c5:3d hadoop@hadoop002
The key's randomart image is:
+--[ RSA 2048]----+
| |
| |
| . . |
| o E |
| .o S. |
| ...= o |
| o+.+ |
| ..o=+. . |
| .++*B+o |
+-----------------+
[hadoop@hadoop002 ~]$ cd .ssh
[hadoop@hadoop002 .ssh]$ ll
total 8
-rw------- 1 hadoop hadoop 1675 Feb 16 20:27 id_rsa
-rw-r--r-- 1 hadoop hadoop 398 Feb 16 20:27 id_rsa.pub
[hadoop@hadoop002 .ssh]$ cat id_rsa.pub >> authorized_keys
[hadoop@hadoop002 .ssh]$ ll
total 12
-rw-rw-r-- 1 hadoop hadoop 398 Feb 16 20:27 authorized_keys
-rw------- 1 hadoop hadoop 1675 Feb 16 20:27 id_rsa
-rw-r--r-- 1 hadoop hadoop 398 Feb 16 20:27 id_rsa.pub
[hadoop@hadoop002 .ssh]$ cd
[hadoop@hadoop002 ~]$ cd app/hadoop-2.6.0-cdh5.7.0
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ sbin/start-dfs.sh
19/02/16 20:28:25 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Starting namenodes on [hadoop002]
The authenticity of host 'hadoop002 (172.31.236.240)' can't be established.
RSA key fingerprint is b1:94:33:ec:95:89:bf:06:3b:ef:30:2f:d7:8e:d2:4c.
Are you sure you want to continue connecting (yes/no)? yes
hadoop002: Warning: Permanently added 'hadoop002,172.31.236.240' (RSA) to the list of known hosts.
hadoop@hadoop002's password:
[2]+ Stopped sbin/start-dfs.sh
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ ^C
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ jps
962 Jps
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ ps -ef|grep start-dfs.sh
hadoop 790 349 0 20:25 pts/0 00:00:00 bash sbin/start-dfs.sh
hadoop 887 349 0 20:28 pts/0 00:00:00 bash sbin/start-dfs.sh
hadoop 977 349 0 20:28 pts/0 00:00:00 grep start-dfs.sh
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ kill -9 790 887
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ cd
[1]- Killed sbin/start-dfs.sh (wd: ~/app/hadoop-2.6.0-cdh5.7.0)
(wd now: ~)
[2]+ Killed sbin/start-dfs.sh (wd: ~/app/hadoop-2.6.0-cdh5.7.0)
(wd now: ~)
[hadoop@hadoop002 ~]$ cd .ssh
[hadoop@hadoop002 .ssh]$ ll
total 16
-rw-rw-r-- 1 hadoop hadoop 398 Feb 16 20:27 authorized_keys
-rw------- 1 hadoop hadoop 1675 Feb 16 20:27 id_rsa
-rw-r--r-- 1 hadoop hadoop 398 Feb 16 20:27 id_rsa.pub
-rw-r--r-- 1 hadoop hadoop 406 Feb 16 20:28 known_hosts
[hadoop@hadoop002 .ssh]$ chmod 600 authorized_keys
[hadoop@hadoop002 .ssh]$ cd -
/home/hadoop
[hadoop@hadoop002 ~]$ cd app/hadoop-2.6.0-cdh5.7.0

start-dfs.sh still asked for a password. The cause is the permissions of authorized_keys: it was created as -rw-rw-r-- (group-writable), and with its default StrictModes setting sshd refuses to use such a file. The job was suspended with Ctrl+Z, the two leftover start-dfs.sh processes were killed with kill -9, and chmod 600 authorized_keys fixed the permissions.
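The password prompt comes from the permission check sshd performs on authorized_keys. Here is a minimal Python sketch that flags the same problem (a group- or other-writable file) before you run start-dfs.sh. The function name key_file_problems is hypothetical, and it checks only the file itself; sshd's StrictModes check also looks at ~/.ssh and the home directory.

```python
import os
import stat
import tempfile


def key_file_problems(path):
    """List permission problems that StrictModes-style checks would object to."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    problems = []
    if mode & (stat.S_IWGRP | stat.S_IWOTH):
        problems.append(f"{path} is writable by group or others (mode {mode:o})")
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as ssh_dir:  # stands in for ~/.ssh
        keys = os.path.join(ssh_dir, "authorized_keys")
        open(keys, "w").close()
        os.chmod(keys, 0o664)  # -rw-rw-r--, as in the transcript
        print(key_file_problems(keys))  # one problem reported
        os.chmod(keys, 0o600)  # the chmod 600 fix
        print(key_file_problems(keys))  # []
```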

Start HDFS again. All three processes now start on hadoop002:

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ sbin/start-dfs.sh
19/02/16 20:29:33 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Starting namenodes on [hadoop002]
hadoop002: starting namenode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-namenode-hadoop002.out
hadoop002: starting datanode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-datanode-hadoop002.out
Starting secondary namenodes [hadoop002]
hadoop002: starting secondarynamenode, logging to /home/hadoop/app/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-secondarynamenode-hadoop002.out
19/02/16 20:29:48 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$

2. The truth about the jps command

2.1 Where it lives

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ which jps
/usr/java/jdk1.8.0_45/bin/jps

jps ships with the JDK, not with Hadoop.

2.2 Where the process identification files live: /tmp/hsperfdata_<user that runs the process>

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ cd /tmp

Run ll there and find the hsperfdata_hadoop directory:

[hadoop@hadoop002 hsperfdata_hadoop]$ pwd
/tmp/hsperfdata_hadoop
[hadoop@hadoop002 hsperfdata_hadoop]$ ll
total 96
-rw------- 1 hadoop hadoop 32768 Feb 16 20:35 1086
-rw------- 1 hadoop hadoop 32768 Feb 16 20:35 1210
-rw------- 1 hadoop hadoop 32768 Feb 16 20:35 1378
[hadoop@hadoop002 hsperfdata_hadoop]$

Each file name is the PID of one running JVM.
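Conceptually, the discovery part of jps amounts to listing these directories. The Python sketch below is an approximation, not how the JDK implements jps: it maps each hsperfdata_<user> directory to the PIDs it contains. It does not read the files, so unlike jps it cannot show main class names; jps_like is a hypothetical name.

```python
import os
import tempfile


def jps_like(tmp_dir="/tmp"):
    """Return {user: [pids]} from the hsperfdata_<user> directories under tmp_dir."""
    result = {}
    for entry in sorted(os.listdir(tmp_dir)):
        path = os.path.join(tmp_dir, entry)
        if not entry.startswith("hsperfdata_") or not os.path.isdir(path):
            continue
        user = entry[len("hsperfdata_"):]
        try:
            pids = sorted(int(name) for name in os.listdir(path) if name.isdigit())
        except PermissionError:
            continue  # another user's directory that we may not list
        result[user] = pids
    return result


if __name__ == "__main__":
    # Demo on a throwaway directory that mimics /tmp/hsperfdata_hadoop above.
    with tempfile.TemporaryDirectory() as fake_tmp:
        os.mkdir(os.path.join(fake_tmp, "hsperfdata_hadoop"))
        for pid in ("1086", "1210", "1378"):
            open(os.path.join(fake_tmp, "hsperfdata_hadoop", pid), "w").close()
        print(jps_like(fake_tmp))  # {'hadoop': [1086, 1210, 1378]}
```

This also explains the behavior later in the section: delete the directory and the PIDs vanish from the listing, whether or not the processes are still alive.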

2.3 Whose processes jps shows

root sees the jps results of all users.

An ordinary user only sees the JVM processes of the current user.

2.4 "process information unavailable"

[root@hadoop002 ~]# jps
1520 Jps
1378 -- process information unavailable
1210 -- process information unavailable
1086 -- process information unavailable

To tell whether such an entry is real, check with ps -ef|grep namenode; that is what truly shows whether the process is alive. If a process shows up, it is real. Grep for the name namenode rather than for the PID (1378 here).
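The ps -ef|grep check can be approximated in Python on Linux by scanning /proc. This sketch assumes a Linux /proc filesystem; pid_alive and find_processes are hypothetical helper names. A plain substring search for namenode will most likely also match the SecondaryNameNode, whose command line contains secondarynamenode.

```python
import os


def pid_alive(pid):
    """True if a process with this PID exists (signal 0 delivers nothing)."""
    try:
        os.kill(pid, 0)
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # exists, but belongs to another user
    return True


def find_processes(keyword, proc_root="/proc"):
    """Linux-only rough equivalent of `ps -ef | grep keyword | grep -v grep`."""
    me = os.getpid()
    matches = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit() or int(entry) == me:
            continue  # skip non-process entries and this script itself
        try:
            with open(os.path.join(proc_root, entry, "cmdline"), "rb") as f:
                raw = f.read()
        except OSError:
            continue  # the process exited or cannot be read
        cmdline = raw.replace(b"\0", b" ").decode(errors="replace").strip()
        if keyword in cmdline:
            matches.append((int(entry), cmdline))
    return sorted(matches)


if __name__ == "__main__":
    for pid, cmd in find_processes("namenode"):
        print(pid, cmd[:120])
```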

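In production:

Hadoop: the HDFS component runs as a dedicated hdfs user.

Use root or a user with sudo rights to check its processes.

A process can disappear in two ways: someone kills it by hand, or Linux kills it automatically (the OOM killer) when memory runs out and it is the process using the most memory.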

[root@hadoop002 tmp]# rm -rf hsperfdata_hadoop
[root@hadoop002 tmp]# jps
1906 Jps
[root@hadoop002 tmp]#

Once hsperfdata_hadoop is deleted, jps no longer lists the Hadoop processes, even though they are still running.

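3. pid files: the files the cluster scripts need to start and stop processes

-rw-rw-r-- 1 hadoop hadoop 5 Feb 16 20:56 hadoop-hadoop-datanode.pid
-rw-rw-r-- 1 hadoop hadoop 5 Feb 16 20:56 hadoop-hadoop-namenode.pid
-rw-rw-r-- 1 hadoop hadoop 5 Feb 16 20:57 hadoop-hadoop-secondarynamenode.pid

By default these files live in /tmp, and Linux periodically deletes files and directories under /tmp (for example on a 30-day cycle). Once a pid file is gone, the scripts can no longer find the process they are supposed to stop.

Fix:

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ vi etc/hadoop/hadoop-env.sh

# The directory where pid files are stored. /tmp by default.
# NOTE: this should be set to a directory that can only be written to by
# the user that will run the hadoop daemons. Otherwise there is the
# potential for a symlink attack.
export HADOOP_PID_DIR=${HADOOP_PID_DIR}

Create a dedicated directory and point HADOOP_PID_DIR at it:

mkdir /data/tmp
chmod -R 777 /data/tmp
export HADOOP_PID_DIR=/data/tmp

Note that chmod 777 goes against the NOTE above; making the directory owned by the hadoop user instead (for example chown -R hadoop:hadoop /data/tmp) is the safer choice.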

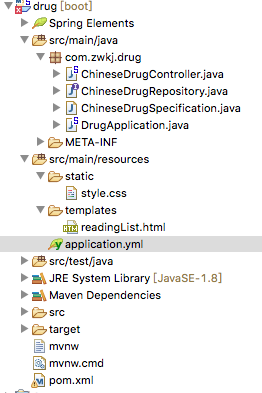
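Because the start and stop scripts rely on these files, a lost pid file means the stop script can no longer find the daemon (Hadoop 2.x's hadoop-daemon.sh then prints a message like "no namenode to stop") while the process keeps running. The Python sketch below reproduces that decision under stated assumptions: the naming hadoop-<user>-<daemon>.pid is taken from the listing above, and pid_file_path and daemon_status are hypothetical helpers, not Hadoop code.

```python
import os
import tempfile


def pid_file_path(pid_dir, user, daemon):
    """Path following the naming in the listing above, e.g. hadoop-hadoop-namenode.pid."""
    return os.path.join(pid_dir, f"hadoop-{user}-{daemon}.pid")


def daemon_status(pid_file):
    """Classify a daemon by its pid file: 'no pid file', 'stale', or 'running as process N'."""
    if not os.path.exists(pid_file):
        return "no pid file"  # a stop script has nothing to kill
    with open(pid_file) as f:
        pid = int(f.read().strip())
    try:
        os.kill(pid, 0)  # existence check only, no signal is delivered
    except ProcessLookupError:
        return "stale"  # the file survived but the process is gone
    except PermissionError:
        pass  # the process exists but belongs to another user
    return f"running as process {pid}"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as pid_dir:  # stands in for /data/tmp
        path = pid_file_path(pid_dir, "hadoop", "namenode")
        print(daemon_status(path))  # no pid file
        with open(path, "w") as f:
            f.write(f"{os.getpid()}\n")
        print(daemon_status(path))  # running as process <this PID>
```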

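MapReduce: does the computation. Jobs are submitted to YARN as jar packages, so MapReduce itself needs no deployment.

YARN: manages resources (CPU and memory) and schedules jobs. It has to be deployed.

MapReduce on YARN

Configure parameters as follows:

etc/hadoop/mapred-site.xml (this file does not exist yet; copy it from the template first with cp mapred-site.xml.template mapred-site.xml):

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

This setting makes MapReduce run on YARN.

etc/hadoop/yarn-site.xml:

<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>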
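Since these *-site.xml files all share the same configuration/property/name/value shape, they can be generated from a dictionary, which avoids hand-typed XML mistakes. A minimal Python sketch using the standard xml.etree.ElementTree module; hadoop_site_xml is a hypothetical name, and writing the output into etc/hadoop is left to you.

```python
import xml.etree.ElementTree as ET


def hadoop_site_xml(props):
    """Build a <configuration> document from a {name: value} dict."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


# The two settings from this section.
MAPRED_SITE = {"mapreduce.framework.name": "yarn"}
YARN_SITE = {"yarn.nodemanager.aux-services": "mapreduce_shuffle"}

if __name__ == "__main__":
    print(hadoop_site_xml(MAPRED_SITE))
    print(hadoop_site_xml(YARN_SITE))
```

The output is a single unindented line with no XML declaration; it carries the same properties as the hand-written files above.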

Start the ResourceManager daemon (the master, which manages resources) and the NodeManager daemon (the worker, which manages its node):

$ sbin/start-yarn.sh

Browse the web interface for the ResourceManager; by default it is available at:

ResourceManager - http://localhost:8088/

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ jps
4001 NodeManager
3254 SecondaryNameNode
3910 ResourceManager
3563 NameNode
4317 Jps
3087 DataNode
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$
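Checking that all five daemons are up can be scripted by parsing the jps output. The sketch below uses the sample output above and treats "process information unavailable" lines as not running; parse_jps and missing_daemons are hypothetical helper names.

```python
# The five daemons expected after start-dfs.sh and start-yarn.sh in this post.
EXPECTED = {"NameNode", "DataNode", "SecondaryNameNode", "ResourceManager", "NodeManager"}

SAMPLE = """4001 NodeManager
3254 SecondaryNameNode
3910 ResourceManager
3563 NameNode
4317 Jps
3087 DataNode
"""


def parse_jps(output):
    """Return {pid: name}; name is None for 'process information unavailable'."""
    procs = {}
    for line in output.splitlines():
        pid, _, name = line.strip().partition(" ")
        if not pid.isdigit():
            continue
        unavailable = "process information unavailable" in name
        procs[int(pid)] = None if unavailable or not name else name
    return procs


def missing_daemons(output, expected=EXPECTED):
    """Expected daemon names that jps does not report as running."""
    running = {name for name in parse_jps(output).values() if name}
    return expected - running


if __name__ == "__main__":
    print(missing_daemons(SAMPLE))  # set()
```

An unavailable entry is counted as missing on purpose: per section 2.4, it has to be confirmed with ps before you trust it.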

Open the YARN web UI in a browser at http://47.75.249.8:8088/.

Log files, for example the HDFS DataNode log hadoop-hadoop-datanode-hadoop002.log, are named:

hadoop-<user>-<process name>-<hostname>.log
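The naming scheme can be turned into a small helper for building or decoding log file names. A Python sketch under two assumptions: the user name contains no hyphen, and daemon names are lowercase words such as namenode or datanode. The prefix is a parameter because the YARN daemons' logs usually start with yarn- instead of hadoop-. Both function names are hypothetical.

```python
import re

# Pattern: <prefix>-<user>-<daemon>-<host>.<log|out>
# Assumes the user name has no hyphen and the daemon name is lowercase letters.
LOG_NAME = re.compile(
    r"^(?P<prefix>hadoop|yarn)-(?P<user>[^-]+)-(?P<daemon>[a-z]+)-(?P<host>.+)\.(?P<ext>log|out)$"
)


def log_file_name(user, daemon, host, prefix="hadoop", ext="log"):
    """Build a name such as hadoop-hadoop-datanode-hadoop002.log."""
    return f"{prefix}-{user}-{daemon}-{host}.{ext}"


def parse_log_file_name(name):
    """Split a log file name into its parts, or return None if it does not match."""
    match = LOG_NAME.match(name)
    return match.groupdict() if match else None


if __name__ == "__main__":
    print(log_file_name("hadoop", "datanode", "hadoop002"))
    print(parse_log_file_name("hadoop-hadoop-namenode-hadoop002.out"))
```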

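Troubleshooting:

For small files, open the log in vi and search with /keyword.

tail -200f xxx.log shows the last 200 lines and keeps following the file (only a lowercase f works in this combined form).

Restart the process in another window to reproduce the error.

Use sz to send the log to your Windows machine and inspect it in EditPlus; this also keeps a backup.

Your error is always the most recent entry, so go straight to the end of the log.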

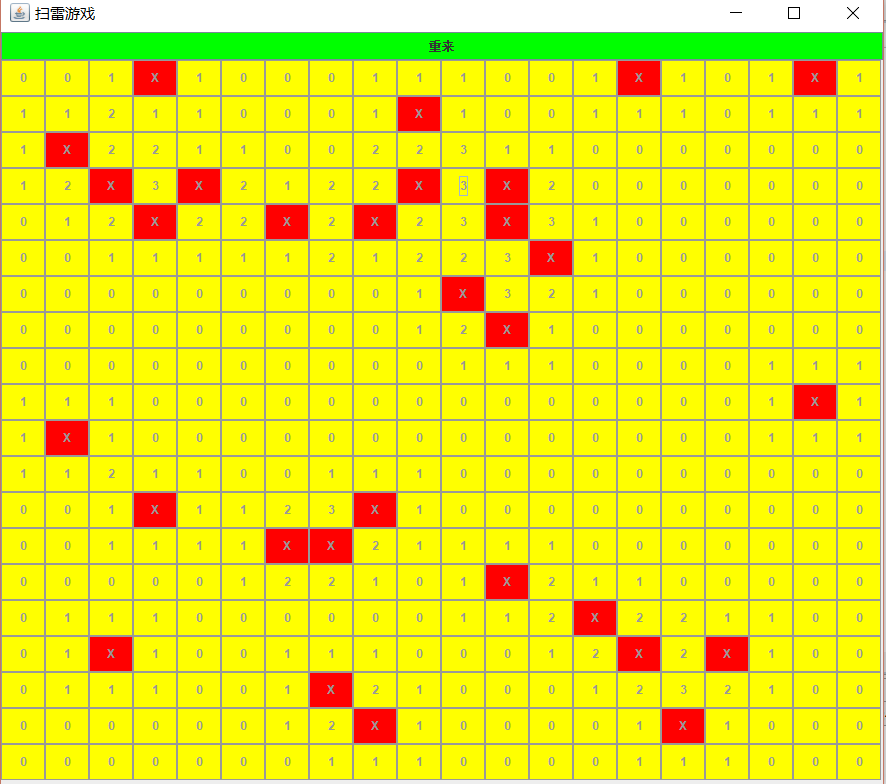
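The look-at-the-end routine can be sketched in Python for pulling the latest error out of a large log. tail keeps only the last n lines in a bounded deque, so the whole file is never held in memory; the markers ERROR and Exception are an assumption about what to look for, the sample lines are made up, and both function names are hypothetical.

```python
from collections import deque


def tail(lines, n=200):
    """Return the last n lines, like `tail -200`, holding at most n in memory."""
    return list(deque(lines, maxlen=n))


def last_error(lines, markers=("ERROR", "Exception")):
    """Return the most recent line containing one of the markers, or None."""
    for line in reversed(list(lines)):
        if any(marker in line for marker in markers):
            return line
    return None


if __name__ == "__main__":
    # Made-up sample lines; with a real file use:
    #   with open(path) as f: print(last_error(tail(f, 200)))
    sample = ["INFO starting", "ERROR old failure", "INFO retrying", "ERROR latest failure"]
    print(last_error(tail(sample, 200)))  # ERROR latest failure
```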

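5. Running MR (MapReduce)

map: mapping

reduce: reduction

Reduce does not wait for every map to finish: the maps start first, and the reduces start while maps are still running (reducers begin fetching map output once the fraction of completed maps set by mapreduce.job.reduce.slowstart.completedmaps is reached).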

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hadoop jar ./share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.7.0.jar pi 5 10
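In the command above, pi 5 10 runs the pi estimator with 5 map tasks and 10 samples per map. Hadoop's example (QuasiMonteCarlo) estimates π from points of a Halton sequence: each map counts the points that fall inside a circle inscribed in the unit square, and the reduce sums the counts, giving π ≈ 4 · inside / total. The Python sketch below imitates that map/reduce split in a single process; its sequence indexing is a simplification, so its numbers will not match the job's output, and all names are hypothetical.

```python
import math


def radical_inverse(index, base):
    """index-th element of the van der Corput sequence in the given base."""
    result, scale = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * scale
        scale /= base
    return result


def map_task(offset, n_samples):
    """One 'map': count Halton points (bases 2 and 3) inside/outside the circle."""
    inside = 0
    for k in range(offset + 1, offset + n_samples + 1):
        x = radical_inverse(k, 2) - 0.5
        y = radical_inverse(k, 3) - 0.5
        if x * x + y * y <= 0.25:  # circle of radius 0.5 centered in the unit square
            inside += 1
    return inside, n_samples - inside


def estimate_pi(n_maps, n_samples):
    """'Reduce': sum the map counts; the circle/square area ratio is pi/4."""
    results = [map_task(i * n_samples, n_samples) for i in range(n_maps)]
    inside = sum(r[0] for r in results)
    return 4.0 * inside / (n_maps * n_samples)


if __name__ == "__main__":
    print(estimate_pi(5, 10), estimate_pi(5, 1000), math.pi)
```

With only 50 points, as in pi 5 10, the estimate is coarse; more samples per map tighten it.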

Word count example: MapReduce counts how many times each word occurs.

[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ vi a.log    # create a.log with this content:
ruoze
jepson
www.ruozedata.com
dashu
adai
fanren
1
a
b
c
a b c ruoze jepon
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ vi b.txt
a b d e f ruoze
1 1 3 5
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -mkdir /wordcount
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -mkdir /wordcount/input    # input directory
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -put a.log /wordcount/input    # upload the file
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -put b.txt /wordcount/input    # upload the file
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -ls /wordcount/input/
Found 2 items
-rw-r--r-- 1 hadoop supergroup 76 2019-02-16 21:59 /wordcount/input/a.log
-rw-r--r-- 1 hadoop supergroup 24 2019-02-16 21:59 /wordcount/input/b.txt
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hadoop jar \
./share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.7.0.jar \
wordcount /wordcount/input /wordcount/output1    # the 1 marks the first run; the output directory must not already exist
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -cat /wordcount/output1/part-r-00000    # -cat views the file
19/02/16 22:05:46 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
1 3
3 1
5 1
a 3
adai 1
b 3
c 2
d 1
dashu 1
e 1
f 1
fanren 1
jepon 1
jepson 1
ruoze 3
www.ruozedata.com 1
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ hdfs dfs -get /wordcount/output1/part-r-00000 ./    # -get downloads the file
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$ cat part-r-00000
1 3
3 1
5 1
a 3
adai 1
b 3
c 2
d 1
dashu 1
e 1
f 1
fanren 1
jepon 1
jepson 1
ruoze 3
www.ruozedata.com 1
[hadoop@hadoop002 hadoop-2.6.0-cdh5.7.0]$
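The wordcount job can be mimicked in plain Python to show what the map, shuffle and reduce steps do. The sketch assumes the file contents shown above (the line breaks of a.log were reconstructed from the flattened terminal output) and splits on whitespace; it is an illustration with hypothetical function names, not Hadoop's implementation.

```python
from collections import defaultdict

# File contents from the session above (a.log line breaks reconstructed).
A_LOG = "ruoze\njepson\nwww.ruozedata.com\ndashu\nadai\nfanren\n1\na\nb\nc\na b c ruoze jepon\n"
B_TXT = "a b d e f ruoze\n1 1 3 5\n"


def map_phase(line):
    """Emit (word, 1) for every whitespace-separated word."""
    return [(word, 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Sum each key's values; keys come out sorted, like part-r-00000."""
    return {key: sum(values) for key, values in sorted(groups.items())}


def word_count(texts):
    pairs = [pair for text in texts for line in text.splitlines() for pair in map_phase(line)]
    return reduce_phase(shuffle(pairs))


if __name__ == "__main__":
    for word, count in word_count([A_LOG, B_TXT]).items():
        print(f"{word}\t{count}")
```

For these inputs the counts and their order agree with the part-r-00000 output shown above.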
