Redis Cluster: Setup and How It Works

Redis Cluster

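As traffic grows, QPS keeps rising, and a replication setup with a single master and several slaves eventually becomes a performance bottleneck. To raise overall Redis throughput, we let several masters (each with its own slaves) serve requests at the same time, which means building a Redis cluster.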

![media/48.png](https://image.dandelioncloud.cn/images/20230528/4a5d0b489c9d461ca336bd91c900e477.png)

1.1 Redis Cluster Configuration

Enable or disable cluster mode:

cluster-enabled yes|no

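Name of the cluster configuration file. The node creates and updates this file itself; it is not meant to be edited by hand: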

cluster-config-file

Node timeout in milliseconds: how long a node may be unreachable before the other nodes consider it failing:

cluster-node-timeout

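Minimum number of slaves a master must keep before one of its slaves is allowed to migrate to a master that has no slaves left (replica migration). It does not limit how many slaves a master can have: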

cluster-migration-barrier

A complete configuration file:

bind 0.0.0.0
port 6379
#logfile "6379.log"
dir "/root/redis-4.0.11/data"
dbfilename "drum-6379.rdb"
daemonize no
appendonly yes
appendfsync everysec
appendfilename "appendonly-6379.aof"
# Redis Cluster settings
cluster-enabled yes
cluster-config-file nodes-6379.conf
cluster-node-timeout 10000
cluster-migration-barrier 1

1.2 Building a Redis Cluster

1.2.1 Writing the Configuration

We build an environment with 4 masters and 4 slaves.

Prepare 8 configuration files with the following contents:

redis-6379.conf (master):

bind 0.0.0.0
port 6379
#logfile "6379.log"
dir "/root/redis-4.0.11/data"
dbfilename "drum-6379.rdb"
daemonize no
appendonly yes
appendfsync everysec
appendfilename "appendonly-6379.aof"
cluster-enabled yes
cluster-config-file nodes-6379.conf
cluster-node-timeout 10000
cluster-migration-barrier 1

redis-6380.conf (master); only the port and the names of the generated rdb, aof and nodes files change:

bind 0.0.0.0
port 6380
#logfile "6380.log"
dir "/root/redis-4.0.11/data"
dbfilename "drum-6380.rdb"
daemonize no
appendonly yes
appendfsync everysec
appendfilename "appendonly-6380.aof"
cluster-enabled yes
cluster-config-file nodes-6380.conf
cluster-node-timeout 10000
cluster-migration-barrier 1

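The remaining files, redis-6381.conf (master), redis-6382.conf (master), redis-6479.conf (slave), redis-6480.conf (slave), redis-6481.conf (slave) and redis-6482.conf (slave), follow the same pattern. The master/slave labels only describe the intended roles: the files are identical apart from the port-specific names, and the actual roles are assigned when the cluster is created.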

![media/52.png](https://image.dandelioncloud.cn/images/20230528/b99fa818f82245f89e853413bb16b894.png)
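Because the eight files differ only in the port number, they can be generated rather than copied by hand. The following is a minimal Python sketch, assuming the same template and paths as redis-6379.conf above; render_config, write_configs and the output directory you pass in are hypothetical and should be adapted to your environment.

```python
from pathlib import Path

# Ports used in this article: 4 masters followed by 4 slaves.
# Roles are assigned later at cluster creation, not by these files.
PORTS = [6379, 6380, 6381, 6382, 6479, 6480, 6481, 6482]

# Template mirroring redis-6379.conf; only port-specific names change.
TEMPLATE = """\
bind 0.0.0.0
port {port}
#logfile "{port}.log"
dir "/root/redis-4.0.11/data"
dbfilename "drum-{port}.rdb"
daemonize no
appendonly yes
appendfsync everysec
appendfilename "appendonly-{port}.aof"
cluster-enabled yes
cluster-config-file nodes-{port}.conf
cluster-node-timeout 10000
cluster-migration-barrier 1
"""


def render_config(port: int) -> str:
    """Hypothetical helper: return the contents of redis-<port>.conf."""
    return TEMPLATE.format(port=port)


def write_configs(out_dir: str, ports=PORTS) -> list:
    """Hypothetical helper: write one redis-<port>.conf per port into out_dir."""
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)
    paths = []
    for port in ports:
        path = target / f"redis-{port}.conf"
        path.write_text(render_config(port))
        paths.append(path)
    return paths
```

For the layout used here, write_configs("/root/redis-4.0.11/config") would produce the eight files that the start commands below refer to.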

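Start all eight servers. With daemonize no, each redis-server process stays in the foreground, so run each command in its own terminal (or set daemonize yes):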

/root/redis-4.0.11/src/redis-server /root/redis-4.0.11/config/redis-6379.conf
/root/redis-4.0.11/src/redis-server /root/redis-4.0.11/config/redis-6380.conf
/root/redis-4.0.11/src/redis-server /root/redis-4.0.11/config/redis-6381.conf
/root/redis-4.0.11/src/redis-server /root/redis-4.0.11/config/redis-6382.conf
/root/redis-4.0.11/src/redis-server /root/redis-4.0.11/config/redis-6479.conf
/root/redis-4.0.11/src/redis-server /root/redis-4.0.11/config/redis-6480.conf
/root/redis-4.0.11/src/redis-server /root/redis-4.0.11/config/redis-6481.conf
/root/redis-4.0.11/src/redis-server /root/redis-4.0.11/config/redis-6482.conf

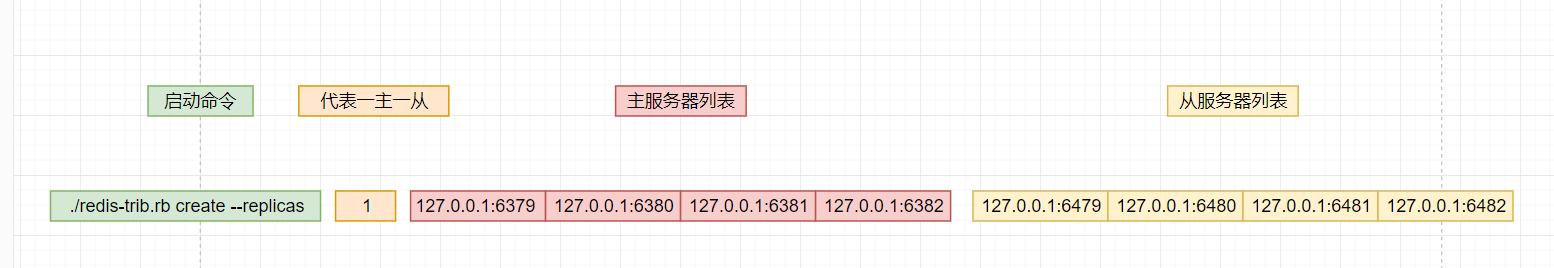

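Create the cluster. redis-trib.rb ships with Redis 4.x; from Redis 5 onward the same is done with redis-cli --cluster create 127.0.0.1:6379 ... 127.0.0.1:6482 --cluster-replicas 1: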

[root@localhost src]# ./redis-trib.rb create --replicas 1 127.0.0.1:6379 127.0.0.1:6380 127.0.0.1:6381 127.0.0.1:6382 127.0.0.1:6479 127.0.0.1:6480 127.0.0.1:6481 127.0.0.1:6482

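Command breakdown: create builds a new cluster from the listed nodes, and --replicas 1 requests one slave per master. With 8 nodes this yields 4 masters with one slave each; as the output below shows, the first four nodes in the list became masters.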

Output:

>>> Creating cluster
>>> Performing hash slots allocation on 8 nodes...
Using 4 masters:
127.0.0.1:6379
127.0.0.1:6380
127.0.0.1:6381
127.0.0.1:6382
Adding replica 127.0.0.1:6480 to 127.0.0.1:6379
Adding replica 127.0.0.1:6481 to 127.0.0.1:6380
Adding replica 127.0.0.1:6482 to 127.0.0.1:6381
Adding replica 127.0.0.1:6479 to 127.0.0.1:6382
>>> Trying to optimize slaves allocation for anti-affinity
[WARNING] Some slaves are in the same host as their master
M: 87d16eeaf9fa13defbe1d88636e366c0ab92f9a0 127.0.0.1:6379
   slots:0-4095 (4096 slots) master
M: 622a17c3e9274364be68c48f749560b40f647805 127.0.0.1:6380
   slots:4096-8191 (4096 slots) master
M: 35b0566b4b3f7021513215b44a18615e682e91ad 127.0.0.1:6381
   slots:8192-12287 (4096 slots) master
M: 19e827d6fb6699d84203d2bf0f2cff105a7f7497 127.0.0.1:6382
   slots:12288-16383 (4096 slots) master
S: e8690128ebc818aa1aadcd5973180d17b83f314d 127.0.0.1:6479
   replicates 35b0566b4b3f7021513215b44a18615e682e91ad
S: ea3eab3ef0af136c536a9521fe0a1f1dafb780f7 127.0.0.1:6480
   replicates 622a17c3e9274364be68c48f749560b40f647805
S: 38d361f91febab028f62f7580bf9898b387a2a65 127.0.0.1:6481
   replicates 87d16eeaf9fa13defbe1d88636e366c0ab92f9a0
S: f91c6c6a37857a85162b655fae1bf87de7b4b83c 127.0.0.1:6482
   replicates 19e827d6fb6699d84203d2bf0f2cff105a7f7497
Can I set the above configuration? (type 'yes' to accept): yes
>>> Nodes configuration updated
>>> Assign a different config epoch to each node
>>> Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join.....
>>> Performing Cluster Check (using node 127.0.0.1:6379)
M: 87d16eeaf9fa13defbe1d88636e366c0ab92f9a0 127.0.0.1:6379
   slots:0-4095 (4096 slots) master
   1 additional replica(s)
M: 35b0566b4b3f7021513215b44a18615e682e91ad 127.0.0.1:6381
   slots:8192-12287 (4096 slots) master
   1 additional replica(s)
S: 38d361f91febab028f62f7580bf9898b387a2a65 127.0.0.1:6481
   slots: (0 slots) slave
   replicates 87d16eeaf9fa13defbe1d88636e366c0ab92f9a0
S: e8690128ebc818aa1aadcd5973180d17b83f314d 127.0.0.1:6479
   slots: (0 slots) slave
   replicates 35b0566b4b3f7021513215b44a18615e682e91ad
S: ea3eab3ef0af136c536a9521fe0a1f1dafb780f7 127.0.0.1:6480
   slots: (0 slots) slave
   replicates 622a17c3e9274364be68c48f749560b40f647805
M: 622a17c3e9274364be68c48f749560b40f647805 127.0.0.1:6380
   slots:4096-8191 (4096 slots) master
   1 additional replica(s)
S: f91c6c6a37857a85162b655fae1bf87de7b4b83c 127.0.0.1:6482
   slots: (0 slots) slave
   replicates 19e827d6fb6699d84203d2bf0f2cff105a7f7497
M: 19e827d6fb6699d84203d2bf0f2cff105a7f7497 127.0.0.1:6382
   slots:12288-16383 (4096 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
[root@localhost src]#
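The slot ranges in this output (four blocks of 4096) and the final "[OK] All 16384 slots covered." check can be reproduced with a short Python sketch. split_slots is modeled on the even split redis-trib.rb performs, but its rounding details are an assumption rather than a copy of the tool; all_slots_covered is a hypothetical stand-in for the coverage check, requiring the ranges to run from 0 to 16383 with no gaps and no overlaps.

```python
import math

CLUSTER_SLOTS = 16384


def split_slots(num_masters: int) -> list:
    """Split slots 0..16383 into contiguous (first, last) ranges, one per master."""
    per_node = CLUSTER_SLOTS / num_masters
    ranges = []
    first = 0
    cursor = 0.0
    for i in range(num_masters):
        # Round half up; the last master absorbs whatever remains.
        last = math.floor(cursor + per_node - 1 + 0.5)
        if last > CLUSTER_SLOTS - 1 or i == num_masters - 1:
            last = CLUSTER_SLOTS - 1
        last = max(last, first)  # every master gets at least one slot
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


def all_slots_covered(ranges) -> bool:
    """True if the ranges cover 0..16383 exactly once, without gaps or overlaps."""
    expected = 0
    for first, last in sorted(ranges):
        if first != expected or last < first:
            return False
        expected = last + 1
    return expected == CLUSTER_SLOTS
```

split_slots(4) returns the same four ranges that the log assigns to 6379 through 6382. With a master count that does not divide 16384, for example 3, the blocks differ in size by one slot.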

1.3 How Redis Cluster Works

1.3.1 How Redis Cluster Stores Data

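In a cluster, the masters together should behave like a single data set. However, it would be far too inefficient for every master to execute each SET command just to keep the data in sync, and masters cannot replicate to one another the way a master replicates to its slaves. So how does Redis achieve this?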

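In a cluster, the key space is divided into 16384 virtual slots, which are split among the masters (evenly here, 4096 per master). When a new key is stored, Redis computes the CRC16 checksum of the key and takes it modulo 16384 to get a slot number; the master that owns that slot stores the key.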
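The key-to-slot mapping can be reproduced outside Redis. This is a minimal Python sketch, assuming the CRC16 variant described in the Redis Cluster specification (CCITT/XMODEM: polynomial 0x1021, initial value 0); the function names are illustrative. It also applies the hash-tag rule from the same specification: if a key contains {...} with a non-empty body, only that part is hashed, so related keys can be forced into the same slot.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT (XMODEM variant): polynomial 0x1021, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: bytes) -> int:
    """Map a key to one of the 16384 cluster slots."""
    # Hash tag: hash only the text between the first "{" and the next "}",
    # provided that text is not empty.
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        if end > start + 1:
            key = key[start + 1:end]
    return crc16_xmodem(key) % 16384
```

key_hash_slot(b"name") gives 5798, the slot that appears in the MOVED reply further down; on a running node, CLUSTER KEYSLOT name should report the same number.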

![media/53.png](https://image.dandelioncloud.cn/images/20230528/f3d38909c66b43dfb390a7f98ccc512d.png)

Note: a slot is only an entry point that determines where a new key is stored; a single slot can hold any number of keys.

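Look at the log produced when the cluster was created: the 16384 slots were split into four ranges of 4096, one per master.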

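A master added to an existing cluster starts with no slots; the slots have to be redistributed (for example with redis-trib.rb reshard) before it stores any keys.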

![media/54.png](https://image.dandelioncloud.cn/images/20230528/09125829808a4def8fbdfa0d22511fd3.png)

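Every node in Redis Cluster, master or slave, keeps a "registry" recording which master owns which slots. When a client sends a SET/GET to a node that does not own the key's slot, that node does not fetch or store the data itself: it looks up the slot's owner in its registry and replies with a MOVED redirection naming the slot and the owner's address. A cluster-aware client then resends the command to that node.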
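On the client side, following a redirection amounts to looking up the slot's owner and updating that view whenever a MOVED reply arrives. The sketch below is hypothetical and is not how redis-cli is implemented: SLOT_TABLE is copied from the cluster-creation log above, and node_for_slot, parse_moved, remember_moved and route are illustrative names. It only parses the MOVED reply format shown in the session below.

```python
# Slot ranges copied from the cluster-creation log in this article.
SLOT_TABLE = [
    (0, 4095, "127.0.0.1:6379"),
    (4096, 8191, "127.0.0.1:6380"),
    (8192, 12287, "127.0.0.1:6381"),
    (12288, 16383, "127.0.0.1:6382"),
]


def node_for_slot(slot: int, table=SLOT_TABLE) -> str:
    """Return the address of the master that owns the slot."""
    for first, last, addr in table:
        if first <= slot <= last:
            return addr
    raise KeyError(f"slot {slot} is not assigned")


def parse_moved(error: str):
    """Parse 'MOVED <slot> <host>:<port>' into (slot, host, port)."""
    kind, slot, address = error.split()
    if kind != "MOVED":
        raise ValueError(f"not a MOVED error: {error!r}")
    host, port = address.rsplit(":", 1)
    return int(slot), host, int(port)


def remember_moved(error: str, overrides: dict) -> None:
    """Record the new owner reported by a MOVED reply."""
    slot, host, port = parse_moved(error)
    overrides[slot] = f"{host}:{port}"


def route(slot: int, overrides: dict) -> str:
    """Prefer what a MOVED reply told us; otherwise use the slot table."""
    return overrides.get(slot) or node_for_slot(slot)
```

With this table, slot 5798 resolves to 127.0.0.1:6380, which matches the MOVED reply in the session below.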

Connecting a client: in a cluster, start redis-cli with the -c option. Without it, the server only returns the redirection:

[root@localhost src]# redis-cli

127.0.0.1:6379> set name zs

(error) MOVED 5798 127.0.0.1:6380 # the slot computed for key name belongs to 6380

127.0.0.1:6379>

[root@localhost src]# redis-cli -c # -c enables cluster mode, so redirections are followed

127.0.0.1:6379> set name zs

-> Redirected to slot [5798] located at 127.0.0.1:6380 # redirected to 6380, which stores the key

OK

127.0.0.1:6380>

Reading the data:

[root@localhost src]# redis-cli -c -p 6381 # connect to 6381
127.0.0.1:6381> get name
-> Redirected to slot [5798] located at 127.0.0.1:6380
"zs"
127.0.0.1:6380>

1.3.2 How Failover Works in a Cluster

1.3.2.1 Slave failure

If a slave node goes down in a Redis cluster, the process is as follows:

![media/49.png](https://image.dandelioncloud.cn/images/20230528/60d90feb88364a5ca269e87a13d93d0b.png)

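In a Redis cluster, every node regularly pings the other nodes, masters and slaves alike. If a node does not answer within cluster-node-timeout, the node that noticed flags it as possibly failing and spreads this through the cluster's gossip messages. Once a master has collected such reports from a majority of masters, it marks the node as failed and broadcasts a FAIL message to all masters and slaves. In the example below, master 6382 announces that slave 6481 is down, and the nodes receiving the message log the following: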

This means a failure notice about node 6481 was received from 6382:

FAIL message received from master-node-id(6382) about slave-node-id(6481)

- master-node-id: node ID of the master that sent the notice

- slave-node-id: node ID of the slave that went down

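Among the masters, some simply accept the FAIL message and log the same line as the slaves, trusting the sender's judgment. A master that has itself gathered enough failure reports about the slave (the quorum) marks the node as failed on its own and logs instead: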

This marks node 6481 as failed:

Marking node slave-node-id(6481) as failing (quorum reached).

- slave-node-id: node ID of the slave that went down
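The quorum rule can be modeled in a few lines. This is a simplified Python sketch, not Redis's implementation: FailureDetector is a hypothetical class, each report is counted once per reporting master (the deciding master's own observation counts as one), and it treats every report as current, whereas the real cluster also discards failure reports older than about twice cluster-node-timeout.

```python
from dataclasses import dataclass, field


@dataclass
class FailureDetector:
    """Hypothetical, simplified view of how a master decides a node has failed."""
    num_masters: int
    # node -> set of masters that currently report it as unreachable
    reports: dict = field(default_factory=dict)

    def add_report(self, node: str, reporter: str) -> None:
        # A set, so repeated reports from the same master count once.
        self.reports.setdefault(node, set()).add(reporter)

    def quorum(self) -> int:
        # Majority of the masters.
        return self.num_masters // 2 + 1

    def is_failed(self, node: str) -> bool:
        return len(self.reports.get(node, set())) >= self.quorum()

    def clear(self, node: str) -> None:
        # Node is reachable again ("Clear FAIL state ...").
        self.reports.pop(node, None)
```

With the 4 masters of this article the quorum is 3, so 6481 is marked as failed only after three different masters have reported it.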

When the slave comes back, all other nodes log:

This clears the slave's failure state; the slave is reachable again:

Clear FAIL state for node slave-node-id: slave is reachable again.

![media/50.png](https://image.dandelioncloud.cn/images/20230528/ec58e628f6f04ee4929e9015db51122e.png)

1.3.2.2 Master failure

If a master node goes down in a Redis cluster, the process is as follows:

![media/51.png](https://image.dandelioncloud.cn/images/20230528/3deba8b79ffb4b3db375430991746f4c.png)

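In Redis Cluster, the nodes continuously ping one another to check that each peer is alive; a peer that does not answer within cluster-node-timeout is suspected of failing. When 6379 goes down, its slave 6479 keeps trying to reconnect to it about once per second, while the other masters detect the outage. Once a master, say 6382, has established that 6379 has failed, it broadcasts a FAIL message about 6379 to 6479 and to all other masters and slaves. 6479 then asks the masters to vote for it; after a majority has voted, it promotes itself to master and takes over 6379's slots. If 6379 recovers later, it rejoins as a slave of 6479. Nodes that receive the notice from 6382 log the following about 6379: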

This means the node received word from 6382 that 6379 is down:

FAIL message received from master-node-id(6382) about master-node-id(6379)

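After 6479 wins the vote and completes the switch from slave to master, it announces the new configuration to all other nodes. The masters that voted for it log: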

This means the failover to 6479 was authorized and has completed, and the cluster is healthy again:

Failover auth granted to slave-node-id(6479) for epoch 9
Cluster state changed: ok
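The election behind "Failover auth granted" follows the rules in the Redis Cluster specification: a slave waits 500 ms plus a random 0 to 500 ms plus 1000 ms per rank before asking for votes (rank 0 is the slave with the most up-to-date replication offset), and it is promoted once a majority of the masters have voted for it. The Python sketch below models only these rules; the function names are hypothetical, and the random generator is passed in so the delay can be tested.

```python
import random


def replica_rank(my_offset: int, other_offsets: list) -> int:
    """Number of sibling slaves with a more recent replication offset (0 = best)."""
    return sum(1 for offset in other_offsets if offset > my_offset)


def election_delay_ms(rank: int, rng: random.Random) -> int:
    """Wait before requesting votes: 500 ms + 0..500 ms jitter + rank * 1000 ms."""
    return 500 + rng.randint(0, 500) + rank * 1000


def wins_election(votes: int, num_masters: int) -> bool:
    """Promote once a majority of the masters granted their vote."""
    return votes >= num_masters // 2 + 1
```

The rank-based delay lets the most up-to-date slave usually ask first. In this article's 4-master cluster a majority is 3 votes, so all three surviving masters must vote for 6479.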

When 6379 recovers, it becomes a slave of its former slave 6479, and the other nodes log the following about 6379:

This clears 6379's failure state. 6379 is reachable again but no longer owns any slots, i.e. it is no longer a master:

Clear FAIL state for node node-id(6379): master without slots is reachable again.
