Kubernetes Deployment Series: Deploying a Single-Master Kubernetes 1.17.4 Cluster on CentOS 7.6

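Contents

- 1. Architecture Diagram

- 2. Deployment Environment

- 3. Environment Initialization

- 3.1 Kernel Upgrade

- 3.2 System Initialization

- 4. Docker Deployment

- 5. Kubernetes Deployment

- 5.1 Configure the Kubernetes repository

- 5.2 Install kubelet, kubeadm and kubectl

- 5.3 Download the Kubernetes images

- 5.4 Initialize the master node with kubeadm

- 5.5 Configure kubectl

- 5.6 Configure the flannel network

- 5.7 Start the kubelet service on the master node

- 5.8 Deploy the worker nodes

- Summary: putting this together took some effort; if it helped you, a like and a follow would be appreciated.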

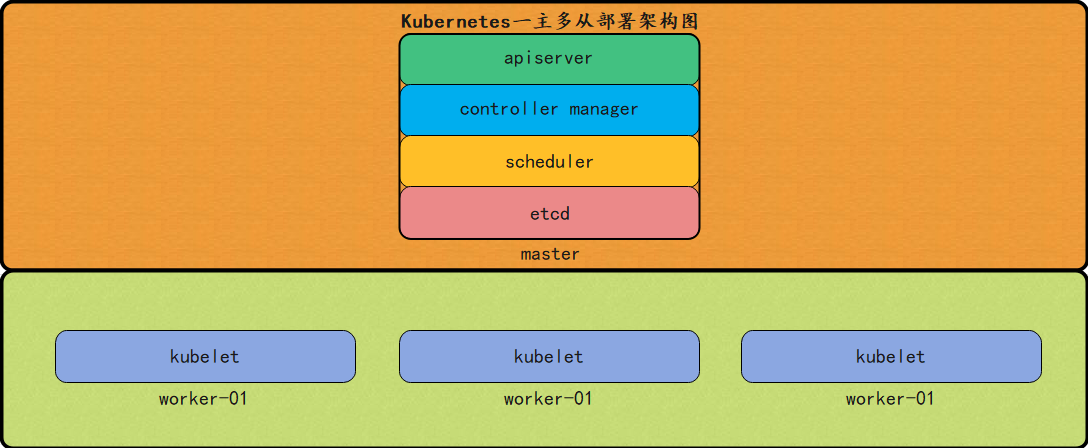

一、架构图

二、部署环境

| Hostname | OS version | Kernel version | IP address | Role |
|---|---|---|---|---|
| k8s-master-211 | CentOS 7.6.1810 | 5.11.16 | 192.168.1.211 | master node |
| k8s-worker-213 | CentOS 7.6.1810 | 5.11.16 | 192.168.1.213 | worker node |
| k8s-worker-214 | CentOS 7.6.1810 | 5.11.16 | 192.168.1.214 | worker node |

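Note: CentOS 7.5 or 7.6 is recommended as the operating system. On CentOS 7.2, 7.3 and 7.4 there is some chance that kubelet fails to start.

3. Environment Initialization

Note: run the following steps on every node, master and workers alike.

3.1 Kernel Upgrade

Note: CentOS 7.6 ships with kernel 3.10.0 by default; kernel 5.11.16 is recommended here.

Kernel choices: kernel-lt (lt = long-term) is the long-term support branch, and kernel-ml (ml = mainline) is the mainline branch. This guide installs kernel-ml.

The upgrade steps are as follows: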

    # 1. Download the kernel package
    # Alternative download from CSDN: https://download.csdn.net/download/m0_37814112/17047556
    wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-ml-5.11.16-1.el7.elrepo.x86_64.rpm

    # 2. Install the kernel
    yum localinstall kernel-ml-5.11.16-1.el7.elrepo.x86_64.rpm -y

    # 3. Show the current default boot entry
    grub2-editenv list

    # 4. List all kernel boot entries known to grub2
    awk -F \' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

    # 5. Make the new kernel the default boot entry
    grub2-set-default 'CentOS Linux (5.11.16-1.el7.elrepo.x86_64) 7 (Core)'

    # 6. Check the default boot entry again
    grub2-editenv list

    # 7. Reboot the server (required)
    reboot
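After the reboot it is worth confirming that the node really came up on the new kernel. The following is a minimal sketch and not part of the original procedure: `version_ge` and `MIN_KERNEL` are hypothetical names chosen for illustration, and the comparison assumes GNU `sort -V` is available.

```shell
#!/bin/bash
# Sketch: check that the running kernel meets a minimum version.
# version_ge and MIN_KERNEL are illustrative names, not part of any tool.

MIN_KERNEL="5.11.16"

# Succeeds when version $1 >= version $2; relies on GNU `sort -V`.
version_ge() {
  local have=${1%%-*}   # drop a "-1.el7.elrepo.x86_64" style suffix
  local want=$2
  # If the wanted version sorts first (or is equal), the running one is new enough.
  [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n1)" = "$want" ]
}

running=$(uname -r)
if version_ge "$running" "$MIN_KERNEL"; then
  echo "kernel $running is new enough (>= $MIN_KERNEL)"
else
  echo "kernel $running is older than $MIN_KERNEL; check the grub default entry and reboot"
fi
```

A version-aware sort is used because a plain string comparison would rank 5.4.0 above 5.11.16.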

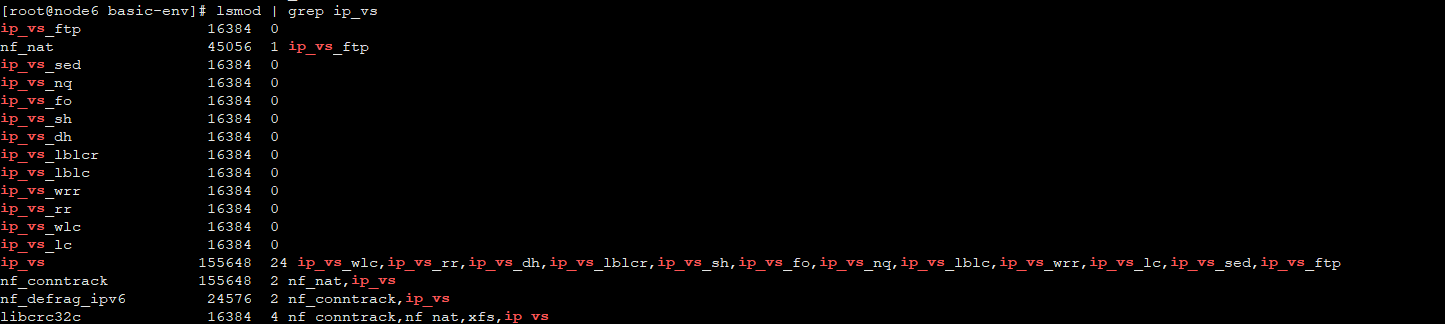

3.2 System Initialization

    # 1. Set the hostname on each of the three nodes
    # master node:
    hostnamectl set-hostname k8s-master-211
    # worker1 node:
    hostnamectl set-hostname k8s-worker-213
    # worker2 node:
    hostnamectl set-hostname k8s-worker-214

    # 2. Set kernel parameters
    cat > /etc/sysctl.d/k8s.conf <<EOF
    vm.swappiness = 0
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-arptables = 1
    EOF
    sysctl --system

    # 3. Load the br_netfilter module persistently
    # The cluster network uses flannel, which needs bridge-nf-call-iptables=1;
    # that parameter only exists when the br_netfilter module is loaded.
    # The delimiter is quoted ('EOF') so that $file is written literally
    # instead of being expanded while the file is created.
    cat > /etc/rc.sysinit <<'EOF'
    #!/bin/bash
    for file in /etc/sysconfig/modules/*.modules ; do
      [ -x $file ] && $file
    done
    EOF
    cat > /etc/sysconfig/modules/br_netfilter.modules <<EOF
    modprobe br_netfilter
    EOF
    chmod 755 /etc/sysconfig/modules/br_netfilter.modules

    # 4. Stop and disable the firewall
    systemctl stop firewalld && systemctl disable firewalld

    # 5. Disable SELinux permanently
    sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux
    sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

    # 6. Turn off swap
    swapoff -a && sed -i 's/.*swap.*/#&/' /etc/fstab

    # 7. Install ipvsadm and load the IPVS modules
    yum install ipvsadm -y
    cat > /etc/sysconfig/modules/ipvs.modules <<'EOF'
    #!/bin/bash
    ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
    for kernel_module in ${ipvs_modules}; do
      /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1
      if [ $? -eq 0 ]; then
        /sbin/modprobe ${kernel_module}
      fi
    done
    EOF
    chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep --color ip_vs >/dev/null
    lsmod | grep ip_vs

    # 8. Raise the file descriptor and process limits (adjust to your environment)
    # Add the following lines to /etc/security/limits.conf:
    vim /etc/security/limits.conf
    root soft nofile 65535
    root hard nofile 65535
    root soft nproc 65535
    root hard nproc 65535
    root soft memlock unlimited
    root hard memlock unlimited
    * soft nofile 65535
    * hard nofile 65535
    * soft nproc 65535
    * hard nproc 65535
    * soft memlock unlimited
    * hard memlock unlimited

    # 9. Reboot the server (required)
    reboot
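One detail matters whenever a script is generated with a heredoc, as in steps 3 and 7: with an unquoted delimiter the shell expands `$variables` and `$?` while the file is being written, so the generated script may not contain what was typed. The sketch below illustrates this in a temporary directory; `write_unquoted` and `write_quoted` are hypothetical helper names, and nothing under /etc is touched.

```shell
#!/bin/bash
# Sketch: the same heredoc body written with an unquoted and a quoted delimiter.
# write_unquoted / write_quoted are illustrative helper names.

unset file   # make sure $file is empty, as it would be in a fresh root shell

write_unquoted() {
  # Unquoted EOF: $file is expanded NOW (to an empty string) while writing.
  cat > "$1" <<EOF
for file in /etc/sysconfig/modules/*.modules ; do
  [ -x $file ] && $file
done
EOF
}

write_quoted() {
  # Quoted 'EOF': the body is written verbatim, $file stays in the script.
  cat > "$1" <<'EOF'
for file in /etc/sysconfig/modules/*.modules ; do
  [ -x $file ] && $file
done
EOF
}

workdir=$(mktemp -d)
write_unquoted "$workdir/unquoted.sh"
write_quoted   "$workdir/quoted.sh"

echo "--- unquoted delimiter (variables expanded while writing) ---"
cat "$workdir/unquoted.sh"
echo "--- quoted delimiter (text written verbatim) ---"
cat "$workdir/quoted.sh"
```

Quoting the delimiter is usually the simpler choice when the whole body should be literal; escaping individual `$` signs with a backslash also works but is easy to miss for `$?`.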

As shown in the figure below:

4. Docker Deployment

Note: run the following steps on every node, master and workers alike.

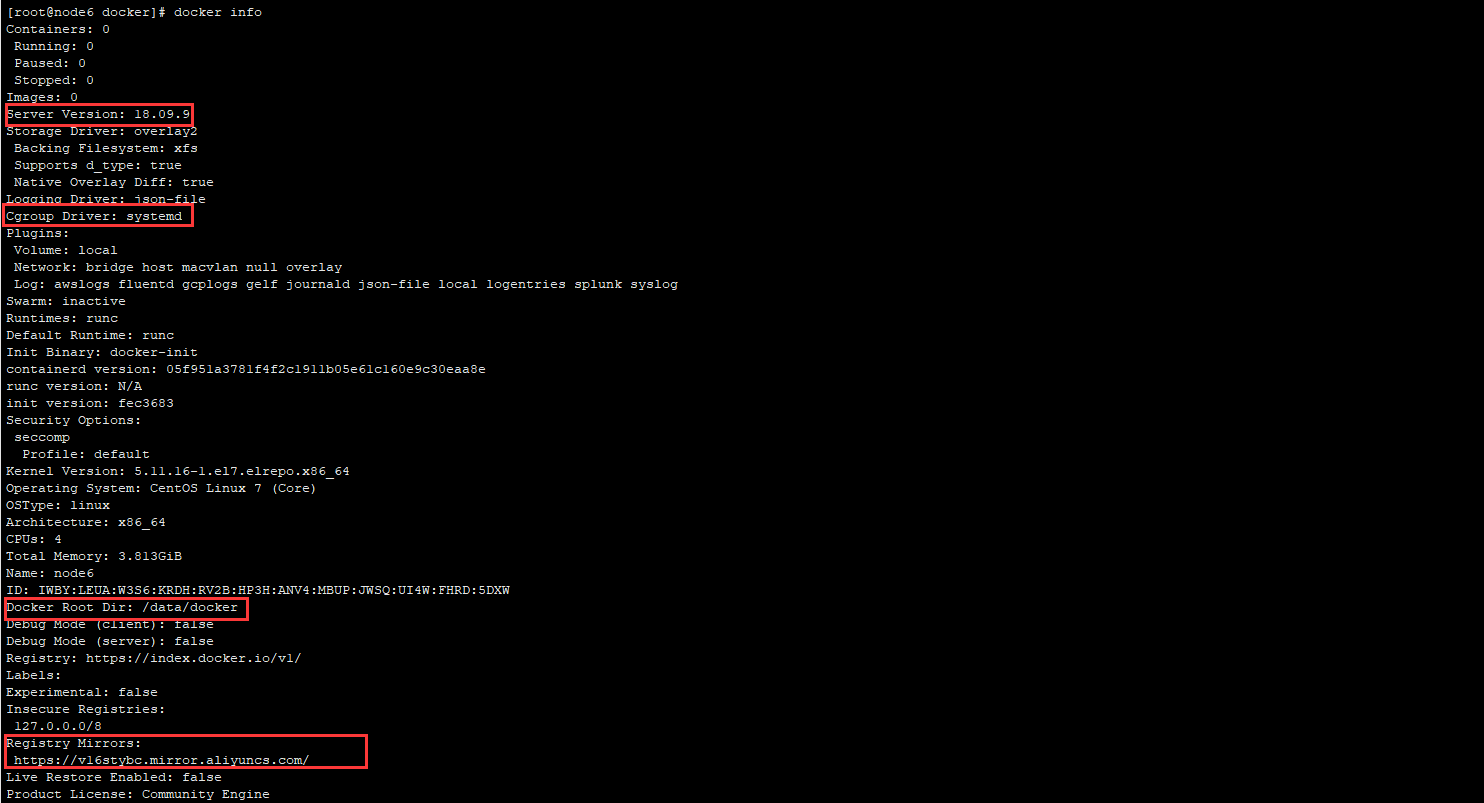

    # 1. Install dependencies
    yum install yum-utils device-mapper-persistent-data lvm2 -y

    # 2. Add the Docker repository
    yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    # or, from the Aliyun mirror:
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

    # 3. List the available Docker versions
    yum list docker-ce --showduplicates | sort -r

    # 4. Install Docker 18.09.9
    yum install docker-ce-18.09.9 docker-ce-cli-18.09.9 containerd.io -y

    # 5. Start Docker and enable it at boot
    systemctl start docker && systemctl enable docker

    # 6. Change the cgroup driver to systemd
    # Also move the Docker data directory (default /var/lib/docker); the directory
    # or subdirectory with the most free disk space on the host is recommended.
    # Also configure the Aliyun registry mirror.
    vim /etc/docker/daemon.json
    {
      "registry-mirrors": ["https://v16stybc.mirror.aliyuncs.com"],
      "exec-opts": ["native.cgroupdriver=systemd"],
      "graph": "/data/docker"
    }
    systemctl daemon-reload && systemctl restart docker
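To confirm that the cgroup driver change took effect, the driver can be read back from `docker info`. This is a small sketch under stated assumptions: `cgroup_driver_from_info` is a hypothetical helper, and the sample text merely stands in for real `docker info` output so the snippet runs without a Docker daemon.

```shell
#!/bin/bash
# Sketch: pull the cgroup driver out of `docker info` text.
# cgroup_driver_from_info is an illustrative helper name.

cgroup_driver_from_info() {
  # Reads `docker info` output on stdin and prints the driver name.
  awk -F': *' '/Cgroup Driver/ { print $2; exit }'
}

# Abbreviated sample standing in for real `docker info` output.
sample_info='Server Version: 18.09.9
Storage Driver: overlay2
Cgroup Driver: systemd
Docker Root Dir: /data/docker'

driver=$(printf '%s\n' "$sample_info" | cgroup_driver_from_info)
if [ "$driver" = "systemd" ]; then
  echo "cgroup driver is systemd"
else
  echo "cgroup driver is '$driver'; re-check /etc/docker/daemon.json"
fi

# On a real node, feed it live output instead:
#   docker info 2>/dev/null | cgroup_driver_from_info
```

The check is useful because kubelet and Docker should agree on the cgroup driver.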

As shown in the figure below:

5. Kubernetes Deployment

5.1 Configure the Kubernetes repository

Note: run the following steps on every node, master and workers alike.

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    yum clean all && yum -y makecache

5.2 Install kubelet, kubeadm and kubectl

Note: run the following steps on every node, master and workers alike.

    # 1. List the available versions
    yum list kubelet --showduplicates | sort -r

    # 2. Install version 1.17.4
    yum install kubelet-1.17.4 kubeadm-1.17.4 kubectl-1.17.4 -y

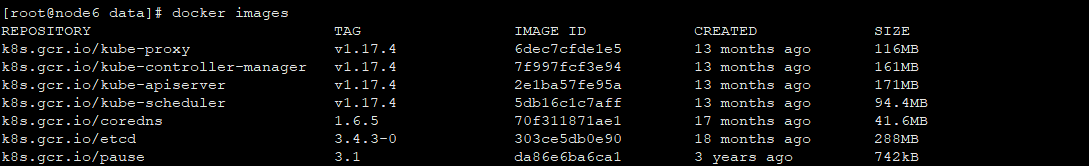

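5.3 Download the Kubernetes images

Note: run the following steps on every node, master and workers alike. Save the script below as get_image.sh and run it with bash get_image.sh.

    vim get_image.sh
    #!/bin/bash
    url=registry.cn-hangzhou.aliyuncs.com/google_containers
    version=v1.17.4
    # Image names without the k8s.gcr.io/ prefix, e.g. kube-apiserver:v1.17.4
    images=($(kubeadm config images list --kubernetes-version=$version | awk -F '/' '{print $2}'))
    for imagename in "${images[@]}"; do
      # Pull from the Aliyun mirror, retag as k8s.gcr.io, then drop the mirror tag
      docker pull "$url/$imagename"
      docker tag "$url/$imagename" "k8s.gcr.io/$imagename"
      docker rmi -f "$url/$imagename"
    done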
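Before pulling anything, it can help to preview which commands the script would run. The following dry-run sketch only prints the pull, tag and cleanup commands; `plan_image_commands` is a hypothetical helper, and the two sample lines are an assumption about the format printed by `kubeadm config images list`, included so the snippet runs without kubeadm or Docker.

```shell
#!/bin/bash
# Sketch: dry-run of the pull / retag / cleanup sequence.
# plan_image_commands is an illustrative helper; nothing is pulled here.

mirror=registry.cn-hangzhou.aliyuncs.com/google_containers

plan_image_commands() {
  # stdin: one image reference per line, e.g. k8s.gcr.io/kube-proxy:v1.17.4
  local image name
  while IFS= read -r image; do
    [ -n "$image" ] || continue
    name=${image##*/}                 # keep only "kube-proxy:v1.17.4"
    echo "docker pull $mirror/$name"
    echo "docker tag $mirror/$name k8s.gcr.io/$name"
    echo "docker rmi -f $mirror/$name"
  done
}

# Two sample lines in the assumed `kubeadm config images list` format.
printf '%s\n' \
  'k8s.gcr.io/kube-apiserver:v1.17.4' \
  'k8s.gcr.io/kube-proxy:v1.17.4' | plan_image_commands

# On a real node:
#   kubeadm config images list --kubernetes-version=v1.17.4 | plan_image_commands
```

Retagging to k8s.gcr.io lets kubeadm find the images locally under the names it expects, so it does not need to reach the default registry.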

As shown in the figure below:

5.4 Initialize the master node with kubeadm

Note: run the following steps on the master node only.

    # 1. Create the initial configuration file
    kubeadm config print init-defaults > kubeadm-config.yaml

    # 2. Edit it to match your environment
    vim kubeadm-config.yaml
    apiVersion: kubeadm.k8s.io/v1beta1
    kind: ClusterConfiguration
    networking:
      serviceSubnet: "10.96.0.0/16"               # service subnet
      podSubnet: "10.48.0.0/16"                   # pod subnet
    kubernetesVersion: "v1.17.4"                  # Kubernetes version
    controlPlaneEndpoint: "192.168.1.211:6443"    # apiserver IP and port
    apiServer:
      extraArgs:
        authorization-mode: "Node,RBAC"
        service-node-port-range: 30000-36000      # NodePort range for services
    imageRepository: ""
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: "ipvs"

    # 3. Initialize the master
    kubeadm init --config=kubeadm-config.yaml --upload-certs --ignore-preflight-errors=all
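The service subnet, the pod subnet and the network the hosts live on should not overlap. The sketch below checks this with plain shell arithmetic; `ip_to_int`, `cidr_range` and `cidrs_overlap` are hypothetical helper names, and the /24 mask on the host network is an assumption, since the article only lists the individual 192.168.1.x addresses.

```shell
#!/bin/bash
# Sketch: detect overlapping IPv4 CIDR ranges with shell arithmetic.
# ip_to_int, cidr_range and cidrs_overlap are illustrative helper names.

ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  # 10# forces base 10 so an octet like "08" is not read as octal.
  echo $(( (10#$a << 24) + (10#$b << 16) + (10#$c << 8) + 10#$d ))
}

# Prints "first last" (as integers) for a CIDR such as 10.48.0.0/16.
cidr_range() {
  local ip=${1%/*} bits=${1#*/} base mask first last
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  first=$(( base & mask ))
  last=$(( first | (~mask & 0xFFFFFFFF) ))
  echo "$first $last"
}

# Succeeds when the two CIDRs share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}

service_subnet="10.96.0.0/16"
pod_subnet="10.48.0.0/16"
node_subnet="192.168.1.0/24"   # assumption: the hosts sit in a /24

for pair in "$service_subnet $pod_subnet" \
            "$service_subnet $node_subnet" \
            "$pod_subnet $node_subnet"; do
  set -- $pair
  if cidrs_overlap "$1" "$2"; then
    echo "OVERLAP: $1 and $2"
  else
    echo "ok: $1 and $2 do not overlap"
  fi
done
```

Two ranges overlap exactly when each one starts no later than the other ends, which is the comparison `cidrs_overlap` performs.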

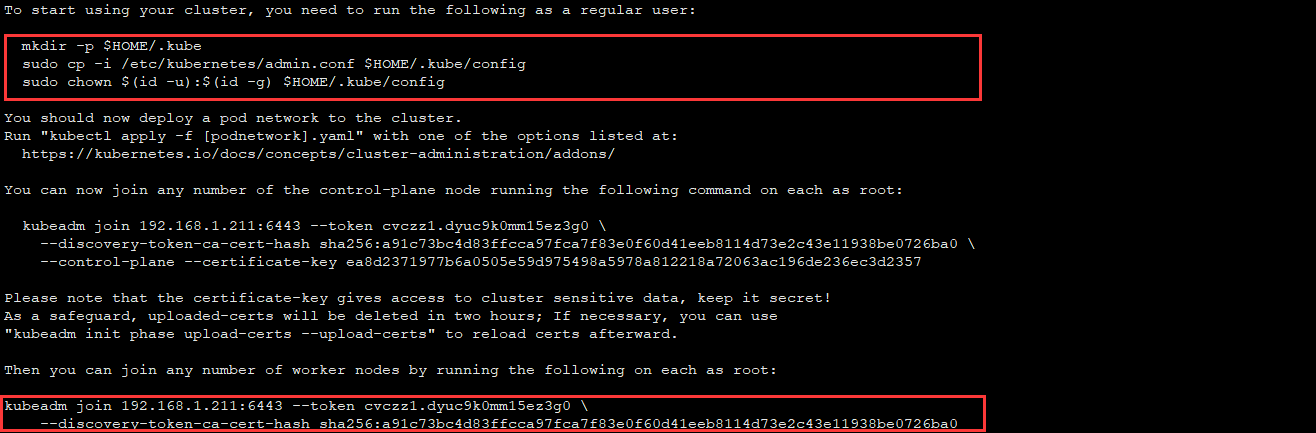

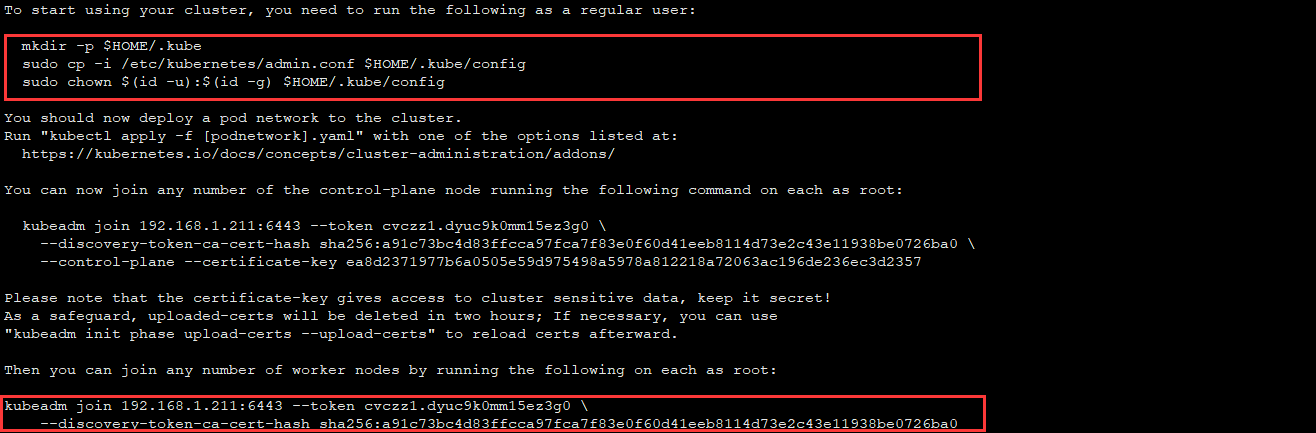

As shown in the figure below:

5.5 Configure kubectl

Note: run the following steps on the master node only.

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

5.6 Configure the flannel network

Note: run the following steps on the master node only.

    # 1. Download the official manifest
    wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

    # 2. Edit kube-flannel.yml to match your environment (before deploying)
    vim kube-flannel.yml

    # 3. Deploy the flannel network
    kubectl create -f kube-flannel.yml

The edited kube-flannel.yml looks like this:

    ---
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: psp.flannel.unprivileged
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
        seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
        apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
        apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
    spec:
      privileged: false
      volumes:
      - configMap
      - secret
      - emptyDir
      - hostPath
      allowedHostPaths:
      - pathPrefix: "/etc/cni/net.d"
      - pathPrefix: "/etc/kube-flannel"
      - pathPrefix: "/run/flannel"
      readOnlyRootFilesystem: false
      # Users and groups
      runAsUser:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      fsGroup:
        rule: RunAsAny
      # Privilege Escalation
      allowPrivilegeEscalation: false
      defaultAllowPrivilegeEscalation: false
      # Capabilities
      allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
      defaultAddCapabilities: []
      requiredDropCapabilities: []
      # Host namespaces
      hostPID: false
      hostIPC: false
      hostNetwork: true
      hostPorts:
      - min: 0
        max: 65535
      # SELinux
      seLinux:
        # SELinux is unused in CaaSP
        rule: 'RunAsAny'
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    rules:
    - apiGroups: ['extensions']
      resources: ['podsecuritypolicies']
      verbs: ['use']
      resourceNames: ['psp.flannel.unprivileged']
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes/status
      verbs:
      - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-system
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      # The "Network" value below must match podSubnet in kubeadm-config.yaml.
      # Keep this comment outside the JSON body: JSON itself allows no comments.
      net-conf.json: |
        {
          "Network": "10.48.0.0/16",
          "Backend": {
            "Type": "vxlan"
          }
        }
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                    - linux
          hostNetwork: true
          priorityClassName: system-node-critical
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.14.0-rc1
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.14.0-rc1
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN", "NET_RAW"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
          - name: run
            hostPath:
              path: /run/flannel
          - name: cni
            hostPath:
              path: /etc/cni/net.d
          - name: flannel-cfg
            configMap:
              name: kube-flannel-cfg

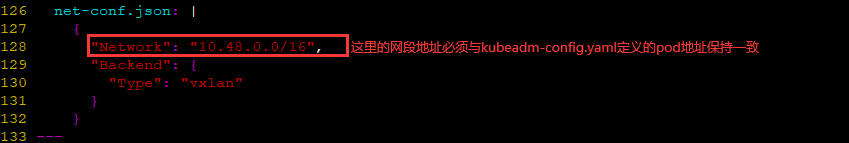

Note: the only field that needs changing in the official manifest is "Network": "10.48.0.0/16", and its value must match the podSubnet defined in kubeadm-config.yaml.
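Because a mismatch between the two files is easy to introduce, the comparison can be scripted. The sketch below is only an illustration: `extract_pod_subnet`, `extract_flannel_network` and `subnets_match` are hypothetical helpers that use simple `sed` patterns rather than a real YAML or JSON parser, and the demo runs against small stand-in files in a temporary directory.

```shell
#!/bin/bash
# Sketch: compare podSubnet in kubeadm-config.yaml with Network in kube-flannel.yml.
# extract_pod_subnet, extract_flannel_network and subnets_match are illustrative names.

extract_pod_subnet() {
  # Matches a line such as:   podSubnet: "10.48.0.0/16"
  sed -n 's|^[[:space:]]*podSubnet:[[:space:]]*"\{0,1\}\([0-9./]*\)"\{0,1\}.*|\1|p' "$1" | head -n1
}

extract_flannel_network() {
  # Matches a line such as:   "Network": "10.48.0.0/16",
  sed -n 's|.*"Network":[[:space:]]*"\([0-9./]*\)".*|\1|p' "$1" | head -n1
}

# Succeeds only when both values were found and are identical.
subnets_match() {
  local pod net
  pod=$(extract_pod_subnet "$1")
  net=$(extract_flannel_network "$2")
  [ -n "$pod" ] && [ "$pod" = "$net" ]
}

# Demo on small stand-in files (fragments, not complete manifests).
demo=$(mktemp -d)
cat > "$demo/kubeadm-config.yaml" <<'EOF'
networking:
  serviceSubnet: "10.96.0.0/16"
  podSubnet: "10.48.0.0/16"
EOF
cat > "$demo/kube-flannel.yml" <<'EOF'
  net-conf.json: |
    {
      "Network": "10.48.0.0/16",
      "Backend": { "Type": "vxlan" }
    }
EOF

if subnets_match "$demo/kubeadm-config.yaml" "$demo/kube-flannel.yml"; then
  echo "podSubnet and flannel Network match"
else
  echo "MISMATCH between podSubnet and flannel Network"
fi
```

On a real master node the same function would be called with the actual kubeadm-config.yaml and kube-flannel.yml paths before running kubectl create.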

As shown in the figure below:

5.7 Start the kubelet service on the master node

Note: run the following steps on the master node only.

    systemctl restart kubelet && systemctl enable kubelet

    [root@node6 install-kubernetes]# kubectl get nodes
    NAME             STATUS   ROLES    AGE   VERSION
    k8s-master-211   Ready    master   17m   v1.17.4

    # Optional: to allow pods to be scheduled on the master node, remove its taint.
    # Skip this step if you do not need it.
    kubectl taint nodes --all node-role.kubernetes.io/master-

5.8 Deploy the worker nodes

Note: run the following steps on the worker nodes only.

As shown in the figure above, run the following:

    kubeadm join 192.168.1.211:6443 --token cvczz1.dyuc9k0mm15ez3g0 --discovery-token-ca-cert-hash sha256:a91c73bc4d83ffcca97fca7f83e0f60d41eeb8114d73e2c43e11938be0726ba0

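If you have forgotten the token or the CA public key hash, you can retrieve them on the master node with the following commands:

    # List the existing tokens (if none is still valid, generate one with `kubeadm token create`)
    kubeadm token list

    # Get the SHA-256 hash of the CA certificate's public key
    openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

    # Join with the values obtained above
    kubeadm join 192.168.1.211:6443 --token <token> --discovery-token-ca-cert-hash sha256:<ca-public-key-hash>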
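When the token and hash are pasted by hand, a quick format check catches most copy errors before they reach the worker nodes. The following sketch assembles the join command from its parts; `build_join_command` is a hypothetical helper, and the checks only validate the shape of the values (a token of the form 6 characters, a dot, 16 characters, and a 64-digit hex hash), not whether the cluster will accept them.

```shell
#!/bin/bash
# Sketch: assemble a kubeadm join command after basic format checks.
# build_join_command is an illustrative helper name.

build_join_command() {
  local endpoint=$1 token=$2 hash=$3
  local token_re='^[a-z0-9]{6}\.[a-z0-9]{16}$'   # bootstrap token shape
  local hash_re='^[0-9a-f]{64}$'                 # SHA-256 digest in hex

  if ! [[ $token =~ $token_re ]]; then
    echo "token does not look like a bootstrap token: $token" >&2
    return 1
  fi
  if ! [[ $hash =~ $hash_re ]]; then
    echo "hash is not 64 hex characters" >&2
    return 1
  fi
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$endpoint" "$token" "$hash"
}

# Values taken from the join command shown earlier in this section.
build_join_command "192.168.1.211:6443" \
  "cvczz1.dyuc9k0mm15ez3g0" \
  "a91c73bc4d83ffcca97fca7f83e0f60d41eeb8114d73e2c43e11938be0726ba0"
```

As an alternative to assembling the command by hand, running `kubeadm token create --print-join-command` on the master prints a complete join command with a fresh token.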

As shown below, the Kubernetes cluster with one master and multiple workers is now deployed.

    [root@k8s-master-211 ~]# kubectl get nodes
    NAME             STATUS   ROLES    AGE    VERSION
    k8s-master-211   Ready    master   126m   v1.17.4
    k8s-worker-213   Ready    <none>   105m   v1.17.4
    k8s-worker-214   Ready    <none>   105m   v1.17.4
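If the deployment is scripted, the same readiness check can be automated instead of read by eye. This is a minimal sketch: `all_nodes_ready` is a hypothetical helper that parses the plain-text table, and the sample text reproduces the node list above so the snippet runs without a cluster. It treats any status other than exactly "Ready" as not ready.

```shell
#!/bin/bash
# Sketch: decide from `kubectl get nodes` text whether every node is Ready.
# all_nodes_ready is an illustrative helper name.

all_nodes_ready() {
  # stdin: the table printed by `kubectl get nodes` (header on the first line).
  # Fails if there are no nodes or if any STATUS column is not exactly "Ready".
  awk 'NR > 1 { n++; if ($2 != "Ready") { bad = 1; print "not ready: " $1 } }
       END    { exit (bad || n == 0) }'
}

# Sample text copied from the node list in this section.
sample_nodes='NAME             STATUS   ROLES    AGE    VERSION
k8s-master-211   Ready    master   126m   v1.17.4
k8s-worker-213   Ready    <none>   105m   v1.17.4
k8s-worker-214   Ready    <none>   105m   v1.17.4'

if printf '%s\n' "$sample_nodes" | all_nodes_ready; then
  echo "all nodes are Ready"
else
  echo "at least one node is not Ready yet"
fi

# On a real master node:
#   kubectl get nodes | all_nodes_ready
```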


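Next chapter: "Kubernetes Deployment Series: Deploying a Highly Available Kubernetes 1.17.4 Cluster on CentOS 7.6 (Option 1)"

Summary: putting this together took some effort; if it helped you, a like and a follow would be appreciated.

For more details, see: Enterprise K8s Cluster Operations in Practice (企业级K8s集群运维实战)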
