Redis read/write splitting with Lettuce

Problem

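Redis workloads are often read-heavy and write-light. Yet whether you run master-replica, Sentinel or Cluster, the replicas usually serve only as backups. To get the most out of what we pay for, we can send reads to the replicas and take load off the master. This post continues the previous one on read/write splitting with Jedis. Spring Boot's Redis support now uses Lettuce as its default client, so this post covers read/write splitting with Lettuce.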

Read/write splitting

Master-replica read/write splitting

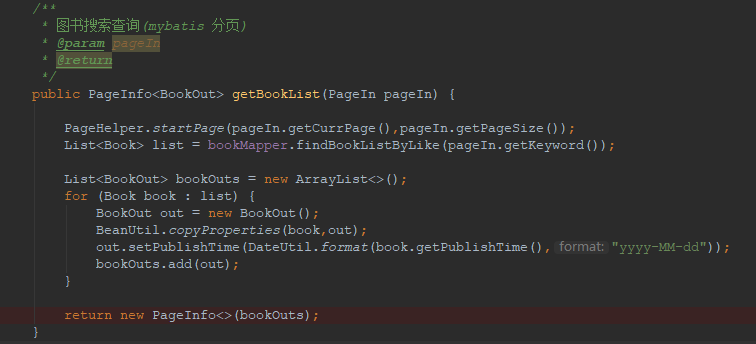

First, set up a master-replica deployment with one master and three replicas. Normally all you need is the following configuration:

```yaml
spring:
  redis:
    host: redisMastHost
    port: 6379
    lettuce:
      pool:
        max-active: 512
        max-idle: 256
        min-idle: 256
        max-wait: -1
```

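With this configuration you can inject a RedisTemplate and read and write data right away. By default, however, every read and write goes to the master. To set readFrom you need a custom connection factory. Two approaches follow.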

Approach 1 (for non-AWS deployments)

Only the master needs to be configured. Replica information is fetched from the master automatically.

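```java
import io.lettuce.core.ReadFrom;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.RedisStandaloneConfiguration;
import org.springframework.data.redis.connection.lettuce.LettuceClientConfiguration;
import org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory;

@Configuration
class WriteToMasterReadFromReplicaConfiguration {

    @Bean
    public LettuceConnectionFactory redisConnectionFactory() {
        // Prefer replicas for reads and fall back to the master; writes always go to the master
        LettuceClientConfiguration clientConfig = LettuceClientConfiguration.builder()
                .readFrom(ReadFrom.SLAVE_PREFERRED)
                .build();
        // Only the master address is configured; replicas are discovered from it
        RedisStandaloneConfiguration serverConfig = new RedisStandaloneConfiguration("server", 6379);
        return new LettuceConnectionFactory(serverConfig, clientConfig);
    }
}
```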
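To make "discovered from the master" concrete: the client asks the master for its replication info and connects to whatever replica addresses the master reports. The sketch below parses the `slaveN:ip=...,port=...` lines of an `INFO replication` reply. It is a simplified illustration of that idea, not Lettuce's actual topology code, and the class name `ReplicaDiscovery` is hypothetical. It also hints at why approach 1 can fail on a cloud service: if the master reports an internal address, that internal address is what the client will try to reach.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: extracts replica addresses from an INFO replication reply.
final class ReplicaDiscovery {

    // Matches lines such as "slave0:ip=10.0.0.2,port=6379,state=online,offset=42,lag=0"
    private static final Pattern REPLICA_LINE =
            Pattern.compile("^slave\\d+:ip=([^,]+),port=(\\d+)");

    private ReplicaDiscovery() {
    }

    /** Returns "host:port" for every replica line in the reply, in reply order. */
    static List<String> parseReplicas(String infoReplication) {
        List<String> replicas = new ArrayList<>();
        for (String line : infoReplication.split("\r?\n")) {
            Matcher m = REPLICA_LINE.matcher(line.trim());
            if (m.find()) {
                replicas.add(m.group(1) + ":" + m.group(2));
            }
        }
        return replicas;
    }

    public static void main(String[] args) {
        String reply = "# Replication\r\n"
                + "role:master\r\n"
                + "connected_slaves:2\r\n"
                + "slave0:ip=10.0.0.2,port=6379,state=online,offset=42,lag=0\r\n"
                + "slave1:ip=10.0.0.3,port=6379,state=online,offset=42,lag=1\r\n";
        // The client would connect to exactly these (possibly internal) addresses
        System.out.println(parseReplicas(reply)); // prints [10.0.0.2:6379, 10.0.0.3:6379]
    }
}
```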

Approach 2 (cloud-hosted Redis, e.g. AWS)

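Managed services such as AWS may report replica addresses through INFO that the client cannot reach. For that reason, this approach lists the master and replicas explicitly with RedisStaticMasterReplicaConfiguration. Here is a demo: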

```java
import io.lettuce.core.ReadFrom;
import io.lettuce.core.models.role.RedisNodeDescription;
import org.apache.commons.lang3.StringUtils;
import org.apache.commons.pool2.impl.GenericObjectPoolConfig;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.RedisStaticMasterReplicaConfiguration;
import org.springframework.data.redis.connection.lettuce.LettuceClientConfiguration;
import org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory;
import org.springframework.data.redis.connection.lettuce.LettucePoolingClientConfiguration;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.StringRedisSerializer;

import java.time.Duration;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.stream.Collectors;
import java.util.stream.IntStream;
import java.util.stream.Stream;

@Configuration
public class RedisConfig {

    @Value("${spring.redis1.master}")
    private String master;

    // Comma-separated replica hosts; all nodes share the same port
    @Value("${spring.redis1.slaves:}")
    private String slaves;

    @Value("${spring.redis1.port}")
    private int port;

    @Value("${spring.redis1.timeout:200}")
    private long timeout;

    @Value("${spring.redis1.lettuce.pool.max-idle:256}")
    private int maxIdle;

    @Value("${spring.redis1.lettuce.pool.min-idle:256}")
    private int minIdle;

    @Value("${spring.redis1.lettuce.pool.max-active:512}")
    private int maxActive;

    @Value("${spring.redis1.lettuce.pool.max-wait:-1}")
    private long maxWait;

    private static final Logger logger = LoggerFactory.getLogger(RedisConfig.class);

    // Counter used by the round-robin ReadFrom shown later
    private final AtomicInteger index = new AtomicInteger(-1);

    @Bean(value = "lettuceConnectionFactory1")
    LettuceConnectionFactory lettuceConnectionFactory1(GenericObjectPoolConfig genericObjectPoolConfig) {
        // Master and replicas are listed explicitly instead of being discovered via INFO
        RedisStaticMasterReplicaConfiguration configuration =
                new RedisStaticMasterReplicaConfiguration(this.master, this.port);
        if (StringUtils.isNotBlank(slaves)) {
            for (String slaveHost : slaves.split(",")) {
                configuration.addNode(slaveHost.trim(), this.port);
            }
        }
        LettuceClientConfiguration clientConfig = LettucePoolingClientConfiguration.builder()
                .readFrom(ReadFrom.SLAVE) // ReadFrom.REPLICA in newer Lettuce versions
                .commandTimeout(Duration.ofMillis(timeout))
                .poolConfig(genericObjectPoolConfig)
                .build();
        return new LettuceConnectionFactory(configuration, clientConfig);
    }

    /**
     * GenericObjectPoolConfig: connection pool configuration.
     */
    @Bean
    public GenericObjectPoolConfig genericObjectPoolConfig() {
        GenericObjectPoolConfig genericObjectPoolConfig = new GenericObjectPoolConfig();
        genericObjectPoolConfig.setMaxIdle(maxIdle);
        genericObjectPoolConfig.setMinIdle(minIdle);
        genericObjectPoolConfig.setMaxTotal(maxActive);
        genericObjectPoolConfig.setMaxWaitMillis(maxWait);
        return genericObjectPoolConfig;
    }

    @Bean(name = "redisTemplate1")
    public RedisTemplate<String, String> redisTemplate(
            @Qualifier("lettuceConnectionFactory1") LettuceConnectionFactory connectionFactory) {
        RedisTemplate<String, String> template = new RedisTemplate<>();
        template.setConnectionFactory(connectionFactory);
        template.setKeySerializer(new StringRedisSerializer());
        template.setValueSerializer(new StringRedisSerializer());
        template.setHashKeySerializer(new StringRedisSerializer());
        template.setHashValueSerializer(new StringRedisSerializer());
        logger.info("redisTemplate1 created");
        return template;
    }
}
```

The key part here is the readFrom setting. Lettuce provides five options:

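- MASTER: read from the master only

- MASTER_PREFERRED: read from the master, and fall back to a replica if the master is unavailable

- SLAVE_PREFERRED: read from a replica, and fall back to the master if no replica is available

- SLAVE: read from replicas only

- NEAREST: read from the nearest node, i.e. the one with the lowest measured latency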
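The following is a simplified model of how the first four options narrow and order the candidate nodes for a read. It is a sketch under assumptions. `NodeRole`, `CandidateNode` and `ReadPolicy` are hypothetical stand-ins, not Lettuce's `ReadFrom`/`RedisNodeDescription` API. NEAREST is left out because it depends on measured latency.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical types modelling the ReadFrom options; not Lettuce's API.
enum NodeRole { MASTER, REPLICA }

final class CandidateNode {
    final String address;
    final NodeRole role;

    CandidateNode(String address, NodeRole role) {
        this.address = address;
        this.role = role;
    }

    @Override
    public String toString() {
        return address;
    }
}

enum ReadPolicy {
    MASTER, MASTER_PREFERRED, SLAVE_PREFERRED, SLAVE;

    /** Returns the nodes eligible for a read, most preferred first. */
    List<CandidateNode> select(List<CandidateNode> nodes) {
        List<CandidateNode> masters = byRole(nodes, NodeRole.MASTER);
        List<CandidateNode> replicas = byRole(nodes, NodeRole.REPLICA);
        List<CandidateNode> result = new ArrayList<>();
        switch (this) {
            case MASTER:
                result.addAll(masters);   // master only
                break;
            case MASTER_PREFERRED:
                result.addAll(masters);   // master first,
                result.addAll(replicas);  // replicas as fallback
                break;
            case SLAVE_PREFERRED:
                result.addAll(replicas);  // replicas first,
                result.addAll(masters);   // master as fallback
                break;
            case SLAVE:
                result.addAll(replicas);  // replicas only
                break;
        }
        return result;
    }

    private static List<CandidateNode> byRole(List<CandidateNode> nodes, NodeRole role) {
        List<CandidateNode> out = new ArrayList<>();
        for (CandidateNode n : nodes) {
            if (n.role == role) {
                out.add(n);
            }
        }
        return out;
    }
}
```

In this model, SLAVE always returns the replicas in the same order. A caller that keeps taking the same position in that list will therefore keep hitting the same replica.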

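In recent Lettuce versions, SLAVE and SLAVE_PREFERRED are deprecated in favor of ReadFrom.REPLICA and ReadFrom.REPLICA_PREFERRED.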

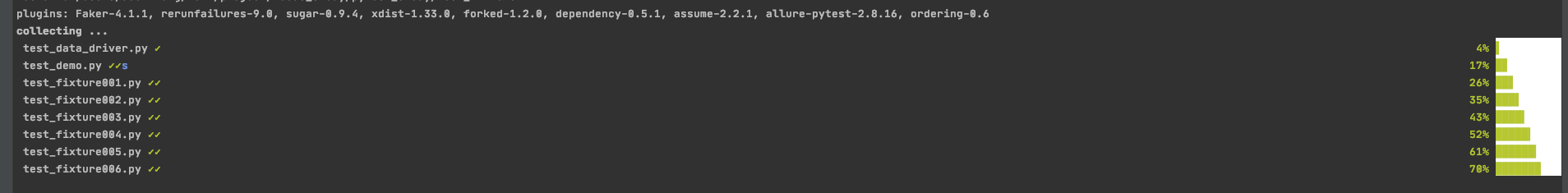

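Here it is set to SLAVE, so all reads go to replicas. There is a catch, though: every read went to the last replica, and the other replicas received no requests at all. Tracing through the source showed that the node order is fixed and that each getConnection picks the last node in it. The per-node command statistics confirmed this.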

The fix is to supply a custom ReadFrom that rotates through the nodes. Replace the clientConfig in lettuceConnectionFactory1 with:

```java
// Inside lettuceConnectionFactory1(...) of RedisConfig, replacing the clientConfig built above
LettuceClientConfiguration clientConfig = LettucePoolingClientConfiguration.builder()
        .readFrom(new ReadFrom() {
            @Override
            public List<RedisNodeDescription> select(Nodes nodes) {
                List<RedisNodeDescription> allNodes = nodes.getNodes();
                // Round-robin: move a different node to the front on every call
                int ind = Math.abs(index.incrementAndGet() % allNodes.size());
                RedisNodeDescription selected = allNodes.get(ind);
                // debug rather than info: this runs for every read
                logger.debug("Selected node {} with uri {}", ind, selected.getUri());
                // Keep the other nodes, in their original order, as fallbacks
                List<RedisNodeDescription> remaining = IntStream.range(0, allNodes.size())
                        .filter(i -> i != ind)
                        .mapToObj(allNodes::get)
                        .collect(Collectors.toList());
                return Stream.concat(Stream.of(selected), remaining.stream())
                        .collect(Collectors.toList());
            }
        })
        .commandTimeout(Duration.ofMillis(timeout))
        .poolConfig(genericObjectPoolConfig)
        .build();
return new LettuceConnectionFactory(configuration, clientConfig);
```

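This reads from the replicas in turn. After the change, the calls were spread across the nodes. The monitoring chart is not perfectly balanced because other applications also connect to this Redis instance.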
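The rotation inside the custom `select` can be checked on its own. The sketch below is a plain-Java version of the same idea, with two deliberate differences. First, it uses `Math.floorMod` to get a non-negative index. Second, it takes a filter, because in a master-replica setup the node list handed to `select` may also contain the master. The original `ReadFrom` rotates over every node it receives. In real code the filter would check each `RedisNodeDescription`'s role, and the exact role constants may differ between Lettuce versions. `RoundRobinSelector` is a hypothetical name, and nodes are plain strings here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Predicate;
import java.util.stream.Collectors;

// Hypothetical plain-Java model of the round-robin ReadFrom above.
final class RoundRobinSelector {

    private final AtomicInteger index = new AtomicInteger(-1);

    /**
     * Returns the eligible nodes with a rotating node moved to the front;
     * the remaining eligible nodes keep their original order as fallbacks.
     */
    List<String> select(List<String> nodes, Predicate<String> eligible) {
        List<String> candidates = nodes.stream().filter(eligible).collect(Collectors.toList());
        if (candidates.isEmpty()) {
            return candidates; // nothing eligible; avoids a division by zero below
        }
        // floorMod keeps the index non-negative even after the counter overflows
        int ind = Math.floorMod(index.incrementAndGet(), candidates.size());
        List<String> ordered = new ArrayList<>(candidates.size());
        ordered.add(candidates.get(ind));
        for (int i = 0; i < candidates.size(); i++) {
            if (i != ind) {
                ordered.add(candidates.get(i));
            }
        }
        return ordered;
    }

    public static void main(String[] args) {
        List<String> nodes = List.of("master:6379", "replica1:6379", "replica2:6379", "replica3:6379");
        RoundRobinSelector selector = new RoundRobinSelector();
        for (int call = 0; call < 4; call++) {
            // Only replicas are eligible in this example
            List<String> order = selector.select(nodes, n -> n.startsWith("replica"));
            System.out.println(order.get(0));
        }
        // prints replica1:6379, replica2:6379, replica3:6379, replica1:6379 (one per line)
    }
}
```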

Sentinel mode

For this one I will just provide a simple demo:

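```java
import io.lettuce.core.ReadFrom;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.ComponentScan;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.connection.RedisSentinelConfiguration;
import org.springframework.data.redis.connection.lettuce.LettuceClientConfiguration;
import org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.JdkSerializationRedisSerializer;
import org.springframework.data.redis.serializer.StringRedisSerializer;

@Configuration
@ComponentScan("com.redis")
public class RedisConfig {

    @Bean
    public LettuceConnectionFactory redisConnectionFactory() {
        // return new LettuceConnectionFactory(new RedisStandaloneConfiguration("192.168.80.130", 6379));
        RedisSentinelConfiguration sentinelConfig = new RedisSentinelConfiguration()
                .master("mymaster")
                // Sentinel addresses
                .sentinel("192.168.80.130", 26379)
                .sentinel("192.168.80.130", 26380)
                .sentinel("192.168.80.130", 26381);
        LettuceClientConfiguration clientConfig = LettuceClientConfiguration.builder()
                .readFrom(ReadFrom.SLAVE_PREFERRED)
                .build();
        return new LettuceConnectionFactory(sentinelConfig, clientConfig);
    }

    @Bean
    public RedisTemplate<Object, Object> redisTemplate(RedisConnectionFactory redisConnectionFactory) {
        RedisTemplate<Object, Object> redisTemplate = new RedisTemplate<>();
        redisTemplate.setConnectionFactory(redisConnectionFactory);
        // Serialization rules are configurable, e.g. storing objects as JSON.
        // Object --> serialize --> byte stream --> stored by redis-server
        redisTemplate.setKeySerializer(new StringRedisSerializer());
        redisTemplate.setValueSerializer(new JdkSerializationRedisSerializer());
        return redisTemplate;
    }
}
```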

Cluster mode

Cluster mode is simpler. Just adapt the following demo:

```java
import io.lettuce.core.ReadFrom;
import io.lettuce.core.resource.ClientResources;
import lombok.extern.slf4j.Slf4j;
import org.apache.commons.lang3.StringUtils;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.RedisClusterConfiguration;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.connection.RedisNode;
import org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory;
import org.springframework.data.redis.connection.lettuce.LettucePoolingClientConfiguration;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.StringRedisSerializer;

import java.time.Duration;
import java.util.HashSet;
import java.util.Set;

@Slf4j
@Configuration
public class Redis2Config {

    // Comma-separated "host:port" list of cluster nodes
    @Value("${spring.redis2.cluster.nodes: com:9736}")
    public String REDIS_HOST;

    @Value("${spring.redis2.cluster.port:9736}")
    public int REDIS_PORT;

    @Value("${spring.redis2.cluster.type:}")
    public String REDIS_TYPE;

    // One of "master", "slave" or "nearest"
    @Value("${spring.redis2.cluster.read-from:master}")
    public String READ_FROM;

    @Value("${spring.redis2.cluster.max-redirects:1}")
    public int REDIS_MAX_REDIRECTS;

    @Value("${spring.redis2.cluster.share-native-connection:true}")
    public boolean REDIS_SHARE_NATIVE_CONNECTION;

    @Value("${spring.redis2.cluster.validate-connection:false}")
    public boolean VALIDATE_CONNECTION;

    @Value("${spring.redis2.cluster.shutdown-timeout:100}")
    public long SHUTDOWN_TIMEOUT;

    @Bean(value = "myRedisConnectionFactory")
    public RedisConnectionFactory connectionFactory(ClientResources clientResources) {
        RedisClusterConfiguration clusterConfiguration = new RedisClusterConfiguration();
        if (StringUtils.isNotEmpty(REDIS_HOST)) {
            Set<RedisNode> nodes = new HashSet<>();
            for (String ipPort : REDIS_HOST.split(",")) {
                String[] ipAndPort = ipPort.split(":");
                nodes.add(new RedisNode(ipAndPort[0].trim(), Integer.parseInt(ipAndPort[1].trim())));
            }
            clusterConfiguration.setClusterNodes(nodes);
        }
        if (REDIS_MAX_REDIRECTS > 0) {
            clusterConfiguration.setMaxRedirects(REDIS_MAX_REDIRECTS);
        }
        LettucePoolingClientConfiguration.LettucePoolingClientConfigurationBuilder clientConfigurationBuilder =
                LettucePoolingClientConfiguration.builder()
                        .clientResources(clientResources)
                        .shutdownTimeout(Duration.ofMillis(SHUTDOWN_TIMEOUT));
        if ("slave".equals(READ_FROM)) {
            clientConfigurationBuilder.readFrom(ReadFrom.SLAVE_PREFERRED);
        } else if ("nearest".equals(READ_FROM)) {
            clientConfigurationBuilder.readFrom(ReadFrom.NEAREST);
        } else if ("master".equals(READ_FROM)) {
            clientConfigurationBuilder.readFrom(ReadFrom.MASTER_PREFERRED);
        }
        // No manual afterPropertiesSet(): Spring calls it on this InitializingBean during bean initialization
        return new LettuceConnectionFactory(clusterConfiguration, clientConfigurationBuilder.build());
    }

    @Bean(name = "myRedisTemplate")
    public RedisTemplate<String, String> myRedisTemplate(
            @Qualifier("myRedisConnectionFactory") RedisConnectionFactory connectionFactory) {
        RedisTemplate<String, String> template = new RedisTemplate<>();
        template.setConnectionFactory(connectionFactory);
        template.setKeySerializer(new StringRedisSerializer());
        template.setValueSerializer(new StringRedisSerializer());
        return template;
    }
}
```
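The node-list parsing in `connectionFactory` assumes every entry is a well-formed `host:port`. A missing port, for example, fails with an `ArrayIndexOutOfBoundsException`. Below is a slightly more defensive sketch of the same parsing. `ClusterNodeParser` and its nested `HostAndPort` are hypothetical names. The sketch returns plain host/port pairs rather than Spring's `RedisNode`, so it can be tested without Spring on the classpath.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, Spring-free version of the "host:port,host:port" parsing above.
final class ClusterNodeParser {

    static final class HostAndPort {
        final String host;
        final int port;

        HostAndPort(String host, int port) {
            this.host = host;
            this.port = port;
        }

        @Override
        public String toString() {
            return host + ":" + port;
        }
    }

    private ClusterNodeParser() {
    }

    /** Parses a comma-separated "host:port" list, skipping blank entries. */
    static List<HostAndPort> parse(String nodes) {
        List<HostAndPort> result = new ArrayList<>();
        for (String entry : nodes.split(",")) {
            String trimmed = entry.trim();
            if (trimmed.isEmpty()) {
                continue;
            }
            // The port is everything after the last ':'
            int colon = trimmed.lastIndexOf(':');
            if (colon <= 0 || colon == trimmed.length() - 1) {
                throw new IllegalArgumentException("Expected host:port but got '" + trimmed + "'");
            }
            String host = trimmed.substring(0, colon).trim();
            // NumberFormatException (an IllegalArgumentException) for a non-numeric port
            int port = Integer.parseInt(trimmed.substring(colon + 1).trim());
            result.add(new HostAndPort(host, port));
        }
        return result;
    }
}
```

A malformed entry fails fast with an `IllegalArgumentException` that names the offending value, which is easier to diagnose at startup than an index error.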

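That said, reading from replicas is not recommended in cluster mode. In production it can bring down a shard and, with it, make the whole cluster unavailable. If you do want read/write splitting there, consider giving each shard several replicas first.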
