Building a Hadoop Cluster with Docker

Reposted from: https://blog.csdn.net/lizongti/article/details/102756472

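Contents

Environment Preparation

Dependencies

Install Docker

Single-Node Mode (Without Docker)

Installation

Install the JDK

Install Hadoop

Configuration

Environment Variables

Set Up Passwordless SSH Login

Edit hadoop-env.sh

HDFS

Create Directories

Edit core-site.xml

Edit hdfs-site.xml

Format HDFS

Start HDFS

HDFS Web UI

HDFS Test

YARN

Edit mapred-site.xml

Edit yarn-site.xml

Start YARN

YARN Web UI

YARN Test

Cluster Setup (Without Docker)

Preparation

Configure the Master

Single-Node Configuration

Edit hdfs-site.xml

Edit masters

Delete slaves

Copy to the Slave Nodes

Edit slaves

HDFS

Clear the Directories

Format HDFS

Start HDFS

Test HDFS

YARN

Start YARN

Test YARN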

Environment Preparation

Dependencies

CentOS 7.6

Install Docker

Follow the Docker installation guide linked in the original post.

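Single-Node Mode (Without Docker)

Installation

Install the JDK

Download the JDK 1.8 tar.gz from the official website. If you install the JDK with yum or from an RPM package instead, some files required by Scala 2.11 will be missing.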

mkdir -p /usr/lib/jvm
tar xf jdk-8u221-linux-x64.tar.gz -C /usr/lib/jvm
rm -rf /usr/bin/java
ln -s /usr/lib/jvm/jdk1.8.0_221/bin/java /usr/bin/java

Edit the file:

vim /etc/profile.d/java.sh

Add:

export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_221
export JRE_HOME=${JAVA_HOME}/jre
export CLASSPATH=${JAVA_HOME}/lib:${JRE_HOME}/lib:$CLASSPATH
export PATH=${JAVA_HOME}/bin:$PATH

Then load the environment variables:

source /etc/profile

Check the environment variable:

[root@vm1 bin]# echo $JAVA_HOME
/usr/lib/jvm/jdk1.8.0_221
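Echoing the variable only shows that it is set. The sketch below is a slightly stronger check. It assumes the JDK layout used above and confirms that the directory really contains an executable bin/java. check_java_home is a hypothetical helper and is not part of the JDK or Hadoop.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that a JAVA_HOME candidate looks like a usable JDK.
# Usage: check_java_home [dir]   (defaults to the current $JAVA_HOME)
check_java_home() {
    local home="${1:-${JAVA_HOME:-}}"
    if [ -z "$home" ]; then
        echo "JAVA_HOME is not set" >&2
        return 1
    fi
    # A JDK (or JRE) directory must contain an executable bin/java.
    if [ ! -x "$home/bin/java" ]; then
        echo "no executable java under $home/bin" >&2
        return 1
    fi
    echo "OK: $home"
}
```

After `source /etc/profile`, run `check_java_home` with no argument to check the current JAVA_HOME. `java -version` remains the usual final confirmation.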

Install Hadoop

To stay version-compatible with the Spark setup in another article, use Hadoop 2.7 from the official archive:

wget https://archive.apache.org/dist/hadoop/common/hadoop-2.7.7/hadoop-2.7.7.tar.gz

Extract it:

tar xf hadoop-2.7.7.tar.gz -C /opt/

Configuration

Environment Variables

Edit the file:

vim /etc/profile.d/hadoop.sh

Add:

export HADOOP_HOME=/opt/hadoop-2.7.7
export PATH=$PATH:$HADOOP_HOME/bin

Then load the environment variables:

source /etc/profile
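You can also write the two profile scripts from the steps above without opening an editor. This is a minimal sketch that assumes the JDK and Hadoop paths used in this article. write_profile_scripts and its target-directory argument are hypothetical. For the real setup the target is /etc/profile.d, which requires root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write java.sh and hadoop.sh profile scripts into a directory.
# Usage: write_profile_scripts <target_dir> [java_home] [hadoop_home]
write_profile_scripts() {
    local dir="$1"
    local java_home="${2:-/usr/lib/jvm/jdk1.8.0_221}"
    local hadoop_home="${3:-/opt/hadoop-2.7.7}"
    mkdir -p "$dir" || return 1
    # The two home paths are expanded now; \${...} and \$VAR stay literal
    # so they are evaluated later, when the profile script is sourced.
    cat > "$dir/java.sh" <<EOF
export JAVA_HOME=$java_home
export JRE_HOME=\${JAVA_HOME}/jre
export CLASSPATH=\${JAVA_HOME}/lib:\${JRE_HOME}/lib:\$CLASSPATH
export PATH=\${JAVA_HOME}/bin:\$PATH
EOF
    cat > "$dir/hadoop.sh" <<EOF
export HADOOP_HOME=$hadoop_home
export PATH=\$PATH:\$HADOOP_HOME/bin
EOF
}
```

For example, run `write_profile_scripts /etc/profile.d` and then `source /etc/profile`.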

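Set Up Passwordless SSH Login

The local machine also needs passwordless SSH login to itself, because the start scripts launch the daemons over SSH even on localhost.

Follow the guide linked in the original post.

Edit hadoop-env.sh

Set JAVA_HOME inside the startup script. Daemons started over SSH may not inherit the variables set in /etc/profile, so hard-coding the path here is the safer choice.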

vi /opt/hadoop-2.7.7/etc/hadoop/hadoop-env.sh

Replace

export JAVA_HOME=${JAVA_HOME}

with

export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_221
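You can make the same edit non-interactively with sed. This sketch assumes the stock hadoop-env.sh, where JAVA_HOME is set by a single uncommented `export JAVA_HOME=...` line. pin_java_home is a hypothetical name. sed keeps the original file as hadoop-env.sh.bak.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the uncommented "export JAVA_HOME=..." line
# in hadoop-env.sh with a hard-coded JDK path, keeping a .bak backup.
# Usage: pin_java_home <hadoop-env.sh> <jdk_dir>
pin_java_home() {
    local file="$1" jdk="$2"
    [ -f "$file" ] || { echo "not found: $file" >&2; return 1; }
    # "|" is the sed delimiter so the slashes in the path need no escaping.
    sed -i.bak "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$file"
}
```

For this article: `pin_java_home /opt/hadoop-2.7.7/etc/hadoop/hadoop-env.sh /usr/lib/jvm/jdk1.8.0_221`.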

HDFS

Create Directories

mkdir -p /opt/hadoop-2.7.7/hdfs/name
mkdir -p /opt/hadoop-2.7.7/hdfs/data

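Edit core-site.xml

Configure the address at which HDFS is accessed.

vi /opt/hadoop-2.7.7/etc/hadoop/core-site.xml

Replace

<configuration></configuration>

with the following configuration:

<configuration>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/opt/hadoop-2.7.7/tmp</value>
    </property>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://vm1:9000</value>
    </property>
</configuration>

- hadoop.tmp.dir sets the base directory for Hadoop's temporary data. If it is not configured, the default is /tmp/hadoop-root (for the root user), and that directory may be wiped on reboot.

- fs.defaultFS sets the URI of the default file system. Without it, paths resolve to the local file system rather than to HDFS.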
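Every *-site.xml in this article has the same shape, so a small generator can replace the manual edit. This is a sketch, not a Hadoop tool. write_site_xml is a hypothetical helper. It overwrites the whole file, including the license comment of the original. It also does not escape XML special characters, which is fine for the plain paths and URIs used here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Hadoop *-site.xml file from name=value pairs.
# Usage: write_site_xml <output_file> name1=value1 [name2=value2 ...]
# Values are written as-is (no XML escaping).
write_site_xml() {
    local out="$1"; shift
    {
        echo '<?xml version="1.0" encoding="UTF-8"?>'
        echo '<configuration>'
        local pair
        for pair in "$@"; do
            # Split on the first "=" only, so values may themselves contain "=".
            printf '    <property>\n        <name>%s</name>\n        <value>%s</value>\n    </property>\n' \
                "${pair%%=*}" "${pair#*=}"
        done
        echo '</configuration>'
    } > "$out"
}
```

The following command produces the configuration shown above: `write_site_xml /opt/hadoop-2.7.7/etc/hadoop/core-site.xml hadoop.tmp.dir=file:/opt/hadoop-2.7.7/tmp fs.defaultFS=hdfs://vm1:9000`.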

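Edit hdfs-site.xml

Configure the replication factor and the storage directories.

vi /opt/hadoop-2.7.7/etc/hadoop/hdfs-site.xml

Replace

<configuration></configuration>

with the following configuration:

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <property>
        <name>dfs.name.dir</name>
        <value>/opt/hadoop-2.7.7/hdfs/name</value>
    </property>
    <property>
        <name>dfs.data.dir</name>
        <value>/opt/hadoop-2.7.7/hdfs/data</value>
    </property>
</configuration>

- dfs.replication sets the HDFS replication factor to 1.

- dfs.name.dir sets the NameNode storage directory, that is, where the metadata of the HDFS file system is kept. If several directories are listed (comma-separated), each one holds a full copy of the metadata.

- dfs.data.dir sets the DataNode storage directory, that is, where the HDFS block data is kept.

This parameter can also list directories on several partitions, so that HDFS storage spans those partitions.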
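You can combine the earlier directory creation and this hdfs-site.xml edit into one step. This sketch assumes the same three properties as above. write_hdfs_site is hypothetical. Hadoop 2.x also accepts the newer names dfs.namenode.name.dir and dfs.datanode.data.dir, because dfs.name.dir and dfs.data.dir are deprecated aliases. The sketch keeps the names this article uses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the NameNode/DataNode directories and write
# hdfs-site.xml with the replication factor and the two storage paths.
# Usage: write_hdfs_site <conf_dir> <replication> <name_dir> <data_dir>
write_hdfs_site() {
    local conf="$1" repl="$2" name_dir="$3" data_dir="$4"
    # The replication factor must be a positive integer.
    case "$repl" in
        ''|*[!0-9]*) echo "invalid replication: $repl" >&2; return 1 ;;
    esac
    if [ "$repl" -lt 1 ]; then
        echo "invalid replication: $repl" >&2
        return 1
    fi
    mkdir -p "$conf" "$name_dir" "$data_dir" || return 1
    cat > "$conf/hdfs-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>$repl</value>
    </property>
    <property>
        <name>dfs.name.dir</name>
        <value>$name_dir</value>
    </property>
    <property>
        <name>dfs.data.dir</name>
        <value>$data_dir</value>
    </property>
</configuration>
EOF
}
```

For the single-node setup: `write_hdfs_site /opt/hadoop-2.7.7/etc/hadoop 1 /opt/hadoop-2.7.7/hdfs/name /opt/hadoop-2.7.7/hdfs/data`. The cluster section later raises the replication factor to 2.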

Format HDFS

cd /opt/hadoop-2.7.7/bin
hdfs namenode -format

Start HDFS

cd /opt/hadoop-2.7.7/sbin
./start-dfs.sh

Output:

Starting namenodes on [vm1]
vm1: starting namenode, logging to /opt/hadoop-2.7.7/logs/hadoop-root-namenode-vm1.out
localhost: starting datanode, logging to /opt/hadoop-2.7.7/logs/hadoop-root-datanode-vm1.out
Starting secondary namenodes [0.0.0.0]
0.0.0.0: starting secondarynamenode, logging to /opt/hadoop-2.7.7/logs/hadoop-root-secondarynamenode-vm1.out

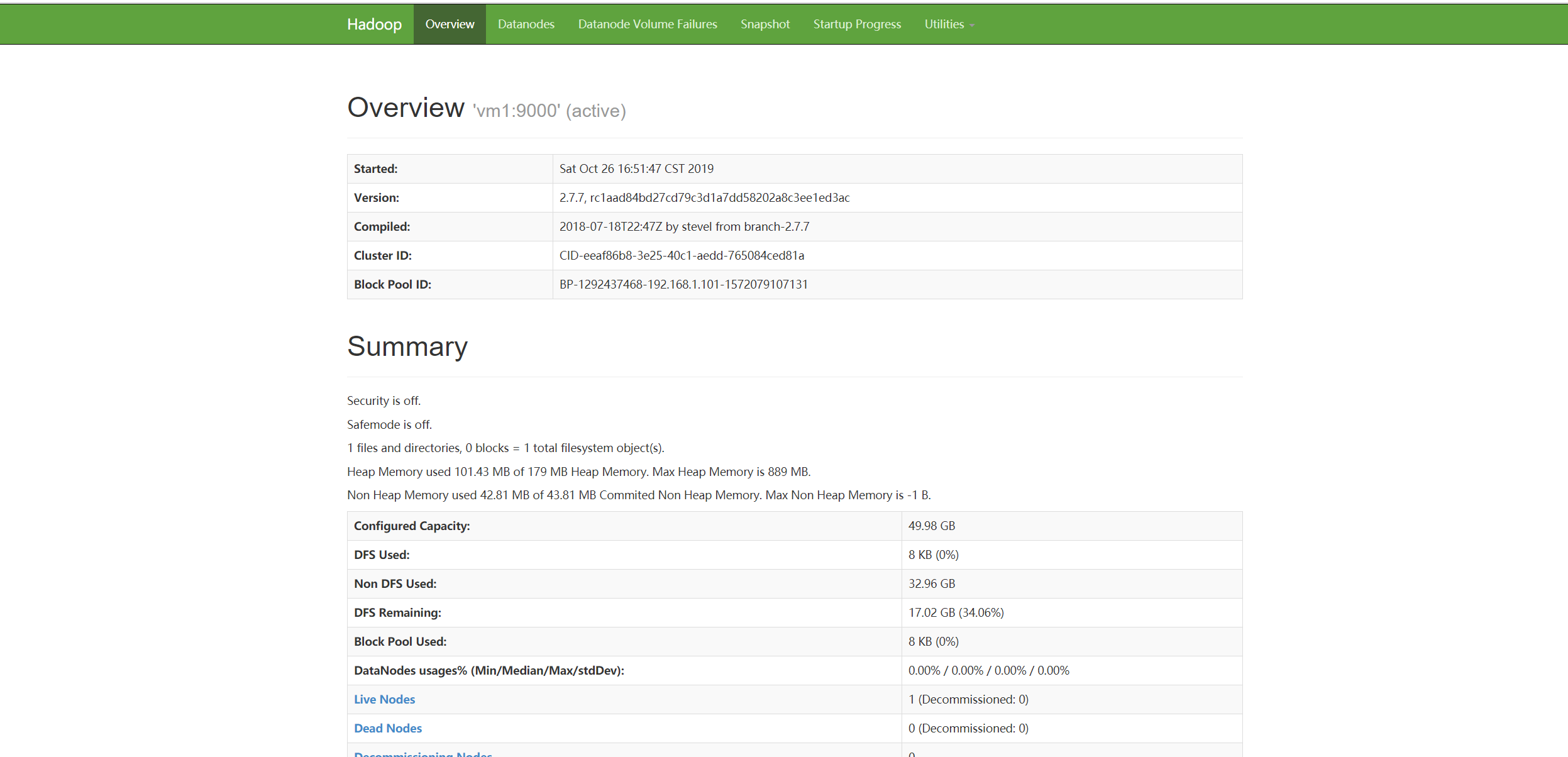
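The start-up messages only say which daemons were launched. `jps` shows which Java daemons are actually running. The sketch below compares `jps` output against the daemons expected on a node. missing_daemons is a hypothetical helper. It matches whole class names, so NameNode is not mistaken for SecondaryNameNode.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output from stdin and print every expected
# daemon class name that is not running. Returns 0 if none are missing.
# Usage: jps | missing_daemons NameNode DataNode SecondaryNameNode
missing_daemons() {
    local running expected status=0
    # jps prints "<pid> <MainClass>"; keep only the class names.
    running="$(awk '{print $2}')"
    for expected in "$@"; do
        # -x: whole-line match, -F: fixed string (no regex).
        if ! grep -qxF "$expected" <<< "$running"; then
            echo "$expected"
            status=1
        fi
    done
    return "$status"
}
```

For this single-node setup, `jps | missing_daemons NameNode DataNode SecondaryNameNode` should print nothing and return 0. On the cluster, run the same check on each node with the daemons expected there.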

HDFS Web UI

Open the HDFS web UI at http://vm1:50070

HDFS Test

Generate test data:

mkdir -p /tmp/input
vi /tmp/input/1

with the following content:

a
b
a

Then upload the file to HDFS and list it:

hadoop fs -mkdir -p /tmp/input
hadoop fs -put /tmp/input/1 /tmp/input
hadoop fs -ls /tmp/input
Found 1 items
-rw-r--r-- 1 root supergroup 6 2019-10-28 11:34 /tmp/input/1

YARN

Edit mapred-site.xml

Set the MapReduce framework to YARN.

cp /opt/hadoop-2.7.7/etc/hadoop/mapred-site.xml.template /opt/hadoop-2.7.7/etc/hadoop/mapred-site.xml
vi /opt/hadoop-2.7.7/etc/hadoop/mapred-site.xml

Replace

<configuration></configuration>

with the following configuration:

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <property>
        <name>mapred.job.tracker</name>
        <value>vm1:9001</value>
    </property>
</configuration>

- mapreduce.framework.name makes MapReduce jobs run on YARN. Setting it to local runs jobs locally, and classic selects the classic MapReduce (MRv1) framework.

- mapred.job.tracker sets the host and port of the JobTracker for MapReduce jobs. It is an MRv1 setting that takes a host:port value, and it is not used when mapreduce.framework.name is yarn.

Edit yarn-site.xml

vi /opt/hadoop-2.7.7/etc/hadoop/yarn-site.xml

Replace

<configuration></configuration>

with the following configuration:

<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>vm1</value>
    </property>
</configuration>

- Setting yarn.nodemanager.aux-services to mapreduce_shuffle enables the shuffle service on each NodeManager, which reducers need in order to fetch map output. Without it, jobs fail with "The auxService:mapreduce_shuffle does not exist".

- yarn.resourcemanager.hostname sets the host on which the ResourceManager (RM) runs.
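Before you start YARN, it helps to confirm what the edited files actually contain, for instance after copying them between machines. This is a pure-bash sketch. get_site_property is hypothetical. It assumes values contain no `<` character, which holds for every property in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the <value> of a named property from a
# Hadoop *-site.xml file. Returns 1 if the property is not found.
# Usage: get_site_property <file> <property-name>
get_site_property() {
    local file="$1" key="$2" doc block
    local name_re='<name>([^<]*)</name>'
    local value_re='<value>([^<]*)</value>'
    # Join all lines so a property spread over several lines is handled
    # the same way as a one-line <property>...</property>.
    doc="$(tr -d '\n' < "$file")" || return 1
    # Walk the document one <property>...</property> block at a time.
    while [[ "$doc" == *"</property>"* ]]; do
        block="${doc%%</property>*}"
        doc="${doc#*</property>}"
        if [[ $block =~ $name_re ]] && [[ "${BASH_REMATCH[1]}" == "$key" ]]; then
            if [[ $block =~ $value_re ]]; then
                printf '%s\n' "${BASH_REMATCH[1]}"
                return 0
            fi
        fi
    done
    return 1
}
```

For example, `get_site_property /opt/hadoop-2.7.7/etc/hadoop/mapred-site.xml mapreduce.framework.name` should print yarn.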

Start YARN

Still in /opt/hadoop-2.7.7/sbin, run:

./start-yarn.sh

Output:

starting yarn daemons
starting resourcemanager, logging to /opt/hadoop-2.7.7/logs/yarn-root-resourcemanager-vm1.out
localhost: starting nodemanager, logging to /opt/hadoop-2.7.7/logs/yarn-root-nodemanager-vm1.out

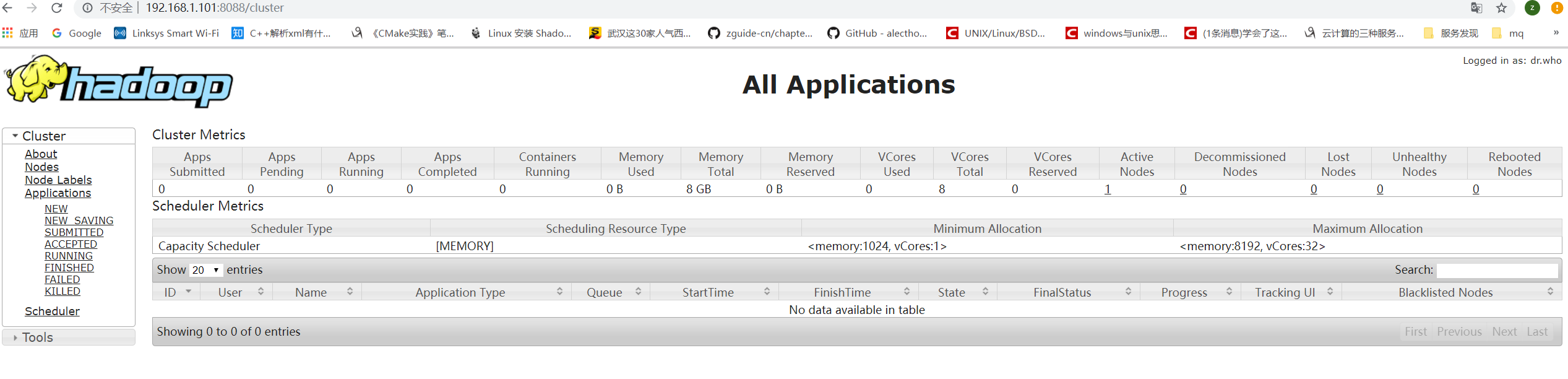

YARN Web UI

Open http://vm1:8088

YARN Test

Run:

```shell
hadoop jar /opt/hadoop-2.7.7/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.7.jar wordcount /tmp/input /tmp/result
```

Job log:

19/10/28 11:34:52 INFO client.RMProxy: Connecting to ResourceManager at vm1/192.168.1.101:8032
19/10/28 11:34:53 INFO input.FileInputFormat: Total input paths to process : 1
19/10/28 11:34:53 INFO mapreduce.JobSubmitter: number of splits:1
19/10/28 11:34:54 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1572232474055_0002
19/10/28 11:34:54 INFO impl.YarnClientImpl: Submitted application application_1572232474055_0002
19/10/28 11:34:54 INFO mapreduce.Job: The url to track the job: http://vm1:8088/proxy/application_1572232474055_0002/
19/10/28 11:34:54 INFO mapreduce.Job: Running job: job_1572232474055_0002
19/10/28 11:35:06 INFO mapreduce.Job: Job job_1572232474055_0002 running in uber mode : false
19/10/28 11:35:06 INFO mapreduce.Job: map 0% reduce 0%
19/10/28 11:35:11 INFO mapreduce.Job: map 100% reduce 0%
19/10/28 11:35:16 INFO mapreduce.Job: map 100% reduce 100%
19/10/28 11:35:17 INFO mapreduce.Job: Job job_1572232474055_0002 completed successfully
19/10/28 11:35:18 INFO mapreduce.Job: Counters: 49
    File System Counters
        FILE: Number of bytes read=22
        FILE: Number of bytes written=245617
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=98
        HDFS: Number of bytes written=8
        HDFS: Number of read operations=6
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=2576
        Total time spent by all reduces in occupied slots (ms)=3148
        Total time spent by all map tasks (ms)=2576
        Total time spent by all reduce tasks (ms)=3148
        Total vcore-milliseconds taken by all map tasks=2576
        Total vcore-milliseconds taken by all reduce tasks=3148
        Total megabyte-milliseconds taken by all map tasks=2637824
        Total megabyte-milliseconds taken by all reduce tasks=3223552
    Map-Reduce Framework
        Map input records=3
        Map output records=3
        Map output bytes=18
        Map output materialized bytes=22
        Input split bytes=92
        Combine input records=3
        Combine output records=2
        Reduce input groups=2
        Reduce shuffle bytes=22
        Reduce input records=2
        Reduce output records=2
        Spilled Records=4
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=425
        CPU time spent (ms)=1400
        Physical memory (bytes) snapshot=432537600
        Virtual memory (bytes) snapshot=4235526144
        Total committed heap usage (bytes)=304087040
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=6
    File Output Format Counters
        Bytes Written=8

View the result:

hadoop fs -cat /tmp/result/part-r-00000

Output:

a 2
b 1
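The input is tiny, so you can reproduce the expected result locally as a cross-check. This sketch assumes words are separated by whitespace, as in the WordCount example. It prints word<TAB>count sorted in byte order, which matches the layout of part-r-00000. local_wordcount is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count whitespace-separated words read from stdin and
# print "word<TAB>count" lines sorted by word in byte (C locale) order.
local_wordcount() {
    tr -s '[:space:]' '\n' \
        | grep -v '^$' \
        | LC_ALL=C sort \
        | uniq -c \
        | awk '{printf "%s\t%s\n", $2, $1}'
}
```

`diff <(local_wordcount < /tmp/input/1) <(hadoop fs -cat /tmp/result/part-r-00000)` prints nothing when the two agree.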

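Cluster Setup (Without Docker)

Preparation

- Deploy three machines, vm1, vm2, and vm3, in the same subnet. Each hostname must resolve on every machine (for example via /etc/hosts). vm1 also needs passwordless SSH login to all three machines, because the start scripts on vm1 launch the daemons on each node over SSH.

Configure the Master

Single-Node Configuration

First, on vm1, go through exactly the same configuration steps as in the single-node setup.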

Edit hdfs-site.xml

vi /opt/hadoop-2.7.7/etc/hadoop/hdfs-site.xml

Replace

<property><name>dfs.replication</name><value>1</value></property>

with

<property><name>dfs.replication</name><value>2</value></property>

The replication factor dfs.replication is set to 2 here.

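Edit masters

echo "vm1" > /opt/hadoop-2.7.7/etc/hadoop/masters

Delete slaves

rm /opt/hadoop-2.7.7/etc/hadoop/slaves

Copy to the Slave Nodes

vm2 and vm3 also need the JDK at the same path (/usr/lib/jvm/jdk1.8.0_221), because hadoop-env.sh hard-codes JAVA_HOME.

scp -r /opt/hadoop-2.7.7 root@vm2:/opt/
scp -r /opt/hadoop-2.7.7 root@vm3:/opt/

Edit slaves

cat > /opt/hadoop-2.7.7/etc/hadoop/slaves <<EOF
vm1
vm2
vm3
EOF

This writes vm1, vm2, and vm3 into slaves, so a DataNode and a NodeManager run on all three machines, including vm1.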
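You can wrap the heredoc above in a function that takes the host list as arguments. In this sketch, write_slaves is hypothetical. It drops empty and repeated hostnames so each host appears once.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the slaves file, one worker hostname per line.
# Usage: write_slaves <conf_dir> host1 [host2 ...]
write_slaves() {
    local conf="$1"; shift
    if [ "$#" -eq 0 ]; then
        echo "usage: write_slaves <conf_dir> host..." >&2
        return 1
    fi
    # NF skips empty arguments; !seen[$0]++ keeps only the first occurrence.
    printf '%s\n' "$@" | awk 'NF && !seen[$0]++' > "$conf/slaves"
}
```

`write_slaves /opt/hadoop-2.7.7/etc/hadoop vm1 vm2 vm3` writes the same file as the heredoc.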

HDFS

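Clear the Directories

Run this on every node. The scp above also copied the single-node HDFS data to vm2 and vm3, and DataNodes that still hold data from the old format would not join the re-formatted NameNode.

rm -rf /opt/hadoop-2.7.7/hdfs/data/*
rm -rf /opt/hadoop-2.7.7/hdfs/name/*

Format HDFS

Re-format HDFS: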

hadoop namenode -format

Start HDFS

/opt/hadoop-2.7.7/sbin/start-dfs.sh

Output:

Starting namenodes on [vm1]
vm1: starting namenode, logging to /opt/hadoop-2.7.7/logs/hadoop-root-namenode-vm1.out
vm3: starting datanode, logging to /opt/hadoop-2.7.7/logs/hadoop-root-datanode-vm3.out
vm2: starting datanode, logging to /opt/hadoop-2.7.7/logs/hadoop-root-datanode-vm2.out
vm1: starting datanode, logging to /opt/hadoop-2.7.7/logs/hadoop-root-datanode-vm1.out
Starting secondary namenodes [0.0.0.0]
0.0.0.0: starting secondarynamenode, logging to /opt/hadoop-2.7.7/logs/hadoop-root-secondarynamenode-vm1.out

The logs are under /opt/hadoop-2.7.7/logs. For example, the NameNode log is /opt/hadoop-2.7.7/logs/hadoop-root-namenode-vm1.log.

Check the processes on the master:

$ jps
75991 DataNode
76408 Jps
76270 SecondaryNameNode

Check the processes on a slave:

$ jps
29379 DataNode
29494 Jps

Check the cluster status:

hdfs dfsadmin -report -safemode

Output:

Configured Capacity: 160982630400 (149.93 GB)
Present Capacity: 101929107456 (94.93 GB)
DFS Remaining: 101929095168 (94.93 GB)
DFS Used: 12288 (12 KB)
DFS Used%: 0.00%
Under replicated blocks: 0
Blocks with corrupt replicas: 0
Missing blocks: 0
Missing blocks (with replication factor 1): 0

-------------------------------------------------
Live datanodes (3):

Name: 192.168.1.103:50010 (vm3)
Hostname: vm3
Decommission Status : Normal
Configured Capacity: 53660876800 (49.98 GB)
DFS Used: 4096 (4 KB)
Non DFS Used: 10321448960 (9.61 GB)
DFS Remaining: 43339423744 (40.36 GB)
DFS Used%: 0.00%
DFS Remaining%: 80.77%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 1
Last contact: Mon Oct 28 13:42:20 CST 2019

Name: 192.168.1.102:50010 (vm2)
Hostname: vm2
Decommission Status : Normal
Configured Capacity: 53660876800 (49.98 GB)
DFS Used: 4096 (4 KB)
Non DFS Used: 13661077504 (12.72 GB)
DFS Remaining: 39999795200 (37.25 GB)
DFS Used%: 0.00%
DFS Remaining%: 74.54%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 1
Last contact: Mon Oct 28 13:42:21 CST 2019

Name: 192.168.1.101:50010 (vm1)
Hostname: vm1
Decommission Status : Normal
Configured Capacity: 53660876800 (49.98 GB)
DFS Used: 4096 (4 KB)
Non DFS Used: 35070996480 (32.66 GB)
DFS Remaining: 18589876224 (17.31 GB)
DFS Used%: 0.00%
DFS Remaining%: 34.64%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 1
Last contact: Mon Oct 28 13:42:21 CST 2019
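On a larger cluster the report gets long, and a script may only need the live DataNode count. This sketch extracts it from the `Live datanodes (N):` line shown above. live_datanodes and expect_live_datanodes are hypothetical helpers, and they depend on that exact line format in Hadoop 2.7.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `hdfs dfsadmin -report` output from stdin and
# print N from the "Live datanodes (N):" line (prints nothing if absent).
live_datanodes() {
    sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p'
}

# Hypothetical helper: succeed only if the report on stdin lists exactly
# the expected number of live DataNodes.
# Usage: hdfs dfsadmin -report | expect_live_datanodes 3
expect_live_datanodes() {
    local want="$1" got
    got="$(live_datanodes)"
    [ "${got:-0}" -eq "$want" ]
}
```

For this three-node cluster, `hdfs dfsadmin -report | expect_live_datanodes 3` should succeed.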

Test HDFS

hadoop fs -mkdir -p /tmp/input
hadoop fs -put /tmp/input/1 /tmp/input
hadoop fs -ls /tmp/input
Found 1 items
-rw-r--r-- 1 root supergroup 6 2019-10-28 11:34 /tmp/input/1

YARN

Start YARN

/opt/hadoop-2.7.7/sbin/start-yarn.sh

Output:

starting yarn daemons
starting resourcemanager, logging to /opt/hadoop-2.7.7/logs/yarn-root-resourcemanager-vm1.out
vm2: starting nodemanager, logging to /opt/hadoop-2.7.7/logs/yarn-root-nodemanager-vm2.out
vm3: starting nodemanager, logging to /opt/hadoop-2.7.7/logs/yarn-root-nodemanager-vm3.out
vm1: starting nodemanager, logging to /opt/hadoop-2.7.7/logs/yarn-root-nodemanager-vm1.out

For example, the ResourceManager log is /opt/hadoop-2.7.7/logs/yarn-root-resourcemanager-vm1.log.

Check the processes on the master:

$ jps
100464 ResourceManager
101746 Jps
53786 QuorumPeerMain

Check the processes on a slave:

$ jps
36893 NodeManager
37181 Jps

Test YARN

hadoop jar /opt/hadoop-2.7.7/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.7.jar wordcount /tmp/input /tmp/result

The result is the same as in the single-node test.

More references:

https://cloud.tencent.com/developer/article/1084166

https://www.jianshu.com/p/7ab2b6168cc9

https://www.cnblogs.com/onetwo/p/6419925.html

This is a reposted article and has not been verified. Any problems found during verification will be corrected.
