RocketMQ 4.7 Source Code Analysis, Part 5 (Message Storage)

Table of Contents

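- Message Queue and Index File Recovery

- Loading the CommitLog files

- Loading ConsumeQueue

- Loading index files

- Choosing a recovery strategy based on whether the broker stopped normally

- Recovering all ConsumeQueue files

- CommitLog recovery after a normal shutdown

- CommitLog recovery after an abnormal shutdown

- Storage flow

- Validating the message

- Asynchronous message storage (default)

- Synchronous message storage

- CommitLog flushing

- Synchronous flush

- Asynchronous flush

- Real-time updates of consume queues and index files

- Updating the ConsumeQueue from messages

- Asynchronous file creation

- Synchronous file creation (default)

- Updating the IndexFile from messages

- Getting or creating an IndexFile

- Adding entries to the hash index file

- Expired file deletion mechanism

- Summary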

Message Queue and Index File Recovery

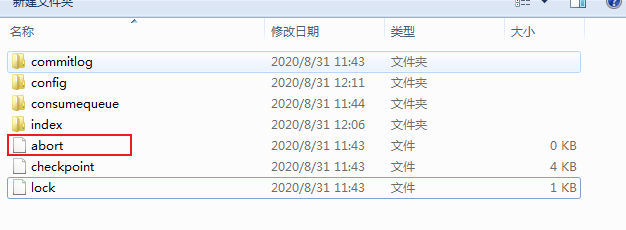

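Suppose the broker crashed for some reason after it was last started. The CommitLog, ConsumeQueue and IndexFile data may then be inconsistent. So when the broker starts up again, it loads the files and attempts to repair them, so that the data eventually becomes consistent.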

Loading the store files

```java
public boolean load() {
    boolean result = true;

    try {
        // Check whether the last shutdown was normal.
        /*
         * Mechanism: on startup the broker creates ${ROCKET_HOME}/store/abort, and on exit the
         * file is deleted via a registered JVM shutdown hook. If the abort file still exists at
         * the next startup, the broker exited abnormally. The CommitLog and ConsumeQueue data
         * may then be inconsistent and must be repaired.
         */
        boolean lastExitOK = !this.isTempFileExist();
        log.info("last shutdown {}", lastExitOK ? "normally" : "abnormally");

        // Load the delay queue (related to RocketMQ scheduled messages)
        if (null != scheduleMessageService) {
            result = result && this.scheduleMessageService.load();
        }

        // load Commit Log: load the CommitLog files
        result = result && this.commitLog.load();

        // load Consume Queue: load the message consume queues
        result = result && this.loadConsumeQueue();

        if (result) {
            // Load the store checkpoint. The checkpoint records the flush points of the CommitLog,
            // ConsumeQueue and Index files; it comes up again in the flush mechanism below.
            this.storeCheckpoint =
                new StoreCheckpoint(StorePathConfigHelper.getStoreCheckpoint(this.messageStoreConfig.getStorePathRootDir()));

            // Load the index files
            this.indexService.load(lastExitOK);

            // Apply a different recovery strategy depending on whether the broker stopped normally
            this.recover(lastExitOK);

            log.info("load over, and the max phy offset = {}", this.getMaxPhyOffset());
        }
    } catch (Exception e) {
        log.error("load exception", e);
        result = false;
    }

    // If loading failed
    if (!result) {
        // Shut down the mapped-file allocation service
        this.allocateMappedFileService.shutdown();
    }

    return result;
}
```

Checking whether the last shutdown was normal

```java
private boolean isTempFileExist() {
    String fileName = StorePathConfigHelper.getAbortFile(this.messageStoreConfig.getStorePathRootDir());
    File file = new File(fileName);
    return file.exists();
}
```

That is, the `abort` file in the store root directory.
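The abort-file convention described in the comments of `load()` can be sketched in isolation. This is a minimal illustration, not RocketMQ code. The class `AbortFileSketch` and its methods are hypothetical. The sketch assumes the file is created when the store starts and deleted on a clean shutdown.

```java
import java.io.File;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical sketch of the abort-file convention; not a RocketMQ API.
final class AbortFileSketch {
    private final File abortFile;

    AbortFileSketch(File storeRootDir) {
        this.abortFile = new File(storeRootDir, "abort");
    }

    // Checked at startup, before markRunning(): no abort file means the last exit was clean.
    boolean lastExitOK() {
        return !abortFile.exists();
    }

    // Called once the store starts; the file survives a crash or a kill -9.
    void markRunning() {
        try {
            abortFile.getParentFile().mkdirs();
            abortFile.createNewFile();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Called on a clean shutdown (for example from a JVM shutdown hook).
    void markCleanShutdown() {
        abortFile.delete();
    }
}
```

A crash leaves the file behind, so `lastExitOK()` returns false on the next start. That is the condition that sends the real broker down the `recoverAbnormally` path.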

Loading the CommitLog files

CommitLog

```java
public boolean load() {
    boolean result = this.mappedFileQueue.load();
    log.info("load commit log " + (result ? "OK" : "Failed"));
    return result;
}
```

MappedFileQueue

```java
public boolean load() {
    // Load every file under ${ROCKET_HOME}/store/commitlog
    File dir = new File(this.storePath);
    File[] files = dir.listFiles();
    if (files != null) {
        // ascending order: sort by file name
        Arrays.sort(files);
        for (File file : files) {
            // If a file's size differs from the configured single-file size, the whole load fails
            if (file.length() != this.mappedFileSize) {
                log.warn(file + "\t" + file.length()
                    + " length not matched message store config value, please check it manually");
                return false;
            }

            try {
                // Create the MappedFile object
                MappedFile mappedFile = new MappedFile(file.getPath(), mappedFileSize);

                // Set the wrotePosition, flushedPosition and committedPosition pointers
                // all to the file size (recovery corrects them later)
                mappedFile.setWrotePosition(this.mappedFileSize);
                mappedFile.setFlushedPosition(this.mappedFileSize);
                mappedFile.setCommittedPosition(this.mappedFileSize);
                this.mappedFiles.add(mappedFile);
                log.info("load " + file.getPath() + " OK");
            } catch (IOException e) {
                log.error("load file " + file + " error", e);
                return false;
            }
        }
    }

    return true;
}
```
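`Arrays.sort(files)` is enough to order the CommitLog files. Each file is named after its starting physical offset, zero-padded to 20 digits, so lexicographic order equals offset order. Below is a small sketch of that naming and of the size check. It assumes the default 1 GiB CommitLog file size, and `CommitLogNamingSketch` is a hypothetical helper, not part of RocketMQ.

```java
import java.util.Arrays;

// Hypothetical helper illustrating CommitLog file naming; not a RocketMQ API.
final class CommitLogNamingSketch {
    static final long DEFAULT_FILE_SIZE = 1024L * 1024 * 1024; // assumed 1 GiB per CommitLog file

    // A file's name is its starting physical offset, left-padded with zeros to 20 digits.
    static String fileName(long fileFromOffset) {
        return String.format("%020d", fileFromOffset);
    }

    // Names for the given start offsets, sorted the same way load() sorts the files.
    static String[] sortedNames(long... startOffsets) {
        String[] names = new String[startOffsets.length];
        for (int i = 0; i < startOffsets.length; i++) {
            names[i] = fileName(startOffsets[i]);
        }
        Arrays.sort(names); // fixed width makes lexicographic order equal numeric order
        return names;
    }

    // Mirrors the size check in MappedFileQueue.load(): one mismatch fails the whole load.
    static boolean allSizesMatch(long expectedSize, long... fileLengths) {
        for (long length : fileLengths) {
            if (length != expectedSize) {
                return false;
            }
        }
        return true;
    }
}
```

With 1 GiB files, the second file is therefore named `00000000001073741824`.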

Loading ConsumeQueue

```java
private boolean loadConsumeQueue() {
    File dirLogic = new File(StorePathConfigHelper.getStorePathConsumeQueue(this.messageStoreConfig.getStorePathRootDir()));
    File[] fileTopicList = dirLogic.listFiles();
    if (fileTopicList != null) {
        // Iterate over the consume-queue root directory
        for (File fileTopic : fileTopicList) {
            // The directory name is the topic
            String topic = fileTopic.getName();
            // Load the files under each consume queue
            File[] fileQueueIdList = fileTopic.listFiles();
            if (fileQueueIdList != null) {
                for (File fileQueueId : fileQueueIdList) {
                    int queueId;
                    try {
                        // The sub-directory name is the queueId
                        queueId = Integer.parseInt(fileQueueId.getName());
                    } catch (NumberFormatException e) {
                        continue;
                    }
                    // Build the ConsumeQueue object, initializing its
                    // topic, queueId, storePath and mappedFileSize
                    ConsumeQueue logic = new ConsumeQueue(
                        topic,
                        queueId,
                        StorePathConfigHelper.getStorePathConsumeQueue(this.messageStoreConfig.getStorePathRootDir()),
                        this.getMessageStoreConfig().getMappedFileSizeConsumeQueue(),
                        this);
                    // Store it in consumeQueueTable
                    this.putConsumeQueue(topic, queueId, logic);
                    // Load the ConsumeQueue files
                    if (!logic.load()) {
                        return false;
                    }
                }
            }
        }
    }

    log.info("load logics queue all over, OK");

    return true;
}
```

Loading index files

IndexService

```java
// Load the index files
public boolean load(final boolean lastExitOK) {
    File dir = new File(this.storePath);
    File[] files = dir.listFiles();
    if (files != null) {
        // ascending order
        Arrays.sort(files);
        for (File file : files) {
            try {
                IndexFile f = new IndexFile(file.getPath(), this.hashSlotNum, this.indexNum, 0, 0);
                // Load the index file (its header)
                f.load();

                // If the last exit was abnormal
                if (!lastExitOK) {
                    // and the file's largest message timestamp is later than the index flush
                    // timestamp recorded in the checkpoint, destroy the file immediately
                    if (f.getEndTimestamp() > this.defaultMessageStore.getStoreCheckpoint()
                        .getIndexMsgTimestamp()) {
                        f.destroy(0);
                        continue;
                    }
                }

                log.info("load index file OK, " + f.getFileName());
                // Add it to the index file list
                this.indexFileList.add(f);
            } catch (IOException e) {
                log.error("load file {} error", file, e);
                return false;
            } catch (NumberFormatException e) {
                log.error("load file {} error", file, e);
            }
        }
    }

    return true;
}
```

Loading the index header

IndexFile

```java
public void load() {
    this.indexHeader.load();
}
```

IndexHeader

```java
public void load() {
    // Parse the header fields one by one
    this.beginTimestamp.set(byteBuffer.getLong(beginTimestampIndex));
    this.endTimestamp.set(byteBuffer.getLong(endTimestampIndex));
    this.beginPhyOffset.set(byteBuffer.getLong(beginPhyoffsetIndex));
    this.endPhyOffset.set(byteBuffer.getLong(endPhyoffsetIndex));

    this.hashSlotCount.set(byteBuffer.getInt(hashSlotcountIndex));
    this.indexCount.set(byteBuffer.getInt(indexCountIndex));

    if (this.indexCount.get() <= 0) {
        this.indexCount.set(1);
    }
}
```
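The header fields read above occupy the first 40 bytes of an index file. The sketch below parses such a header from a `ByteBuffer`. The byte offsets (0, 8, 16, 24, 32, 36) follow the field order in `load()` and reflect my understanding of the 4.7 layout. `IndexHeaderSketch` is a hypothetical class.

```java
import java.nio.ByteBuffer;

// Hypothetical parser for the 40-byte index file header; field order follows IndexHeader.load().
final class IndexHeaderSketch {
    static final int HEADER_SIZE = 40;
    static final int BEGIN_TIMESTAMP_INDEX = 0;  // long
    static final int END_TIMESTAMP_INDEX = 8;    // long
    static final int BEGIN_PHYOFFSET_INDEX = 16; // long
    static final int END_PHYOFFSET_INDEX = 24;   // long
    static final int HASH_SLOT_COUNT_INDEX = 32; // int
    static final int INDEX_COUNT_INDEX = 36;     // int

    final long beginTimestamp;
    final long endTimestamp;
    final long beginPhyOffset;
    final long endPhyOffset;
    final int hashSlotCount;
    final int indexCount;

    IndexHeaderSketch(ByteBuffer buf) {
        beginTimestamp = buf.getLong(BEGIN_TIMESTAMP_INDEX);
        endTimestamp = buf.getLong(END_TIMESTAMP_INDEX);
        beginPhyOffset = buf.getLong(BEGIN_PHYOFFSET_INDEX);
        endPhyOffset = buf.getLong(END_PHYOFFSET_INDEX);
        hashSlotCount = buf.getInt(HASH_SLOT_COUNT_INDEX);
        int count = buf.getInt(INDEX_COUNT_INDEX);
        // Same rule as load(): a fresh file starts counting at 1
        indexCount = count <= 0 ? 1 : count;
    }
}
```

During recovery only `endTimestamp` matters. `IndexService.load()` compares it with the checkpoint's index timestamp to decide whether the file may contain unflushed entries.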

Choosing a recovery strategy based on whether the broker stopped normally

DefaultMessageStore

```java
private void recover(final boolean lastExitOK) {
    // Recover all ConsumeQueue files; returns the largest CommitLog offset stored in any ConsumeQueue
    long maxPhyOffsetOfConsumeQueue = this.recoverConsumeQueue();

    if (lastExitOK) {
        // CommitLog recovery after a normal shutdown
        this.commitLog.recoverNormally(maxPhyOffsetOfConsumeQueue);
    } else {
        // CommitLog recovery after an abnormal shutdown
        this.commitLog.recoverAbnormally(maxPhyOffsetOfConsumeQueue);
    }

    this.recoverTopicQueueTable();
}
```

Recovering all ConsumeQueue files

Returns the largest CommitLog offset stored in any ConsumeQueue.

```java
// Recover all ConsumeQueue files
private long recoverConsumeQueue() {
    long maxPhysicOffset = -1;
    for (ConcurrentMap<Integer, ConsumeQueue> maps : this.consumeQueueTable.values()) {
        // Iterate over every ConsumeQueue
        for (ConsumeQueue logic : maps.values()) {
            // Recover it
            logic.recover();
            if (logic.getMaxPhysicOffset() > maxPhysicOffset) {
                maxPhysicOffset = logic.getMaxPhysicOffset();
            }
        }
    }

    // Return the largest CommitLog offset stored in any ConsumeQueue
    return maxPhysicOffset;
}
```

Recovering a ConsumeQueue

ConsumeQueue

```java
public void recover() {
    final List<MappedFile> mappedFiles = this.mappedFileQueue.getMappedFiles();
    if (!mappedFiles.isEmpty()) {
        // Start recovery from the third-to-last file
        int index = mappedFiles.size() - 3;
        // With fewer than 3 files, start from the first file
        if (index < 0)
            index = 0;

        int mappedFileSizeLogics = this.mappedFileSize;
        MappedFile mappedFile = mappedFiles.get(index);
        ByteBuffer byteBuffer = mappedFile.sliceByteBuffer();
        // Starting offset of this file
        long processOffset = mappedFile.getFileFromOffset();
        // Offset within the current file that has been validated so far
        long mappedFileOffset = 0;
        long maxExtAddr = 1;
        while (true) {
            for (int i = 0; i < mappedFileSizeLogics; i += CQ_STORE_UNIT_SIZE) {
                // Read one entry at a time
                long offset = byteBuffer.getLong();
                int size = byteBuffer.getInt();
                long tagsCode = byteBuffer.getLong();

                if (offset >= 0 && size > 0) {
                    mappedFileOffset = i + CQ_STORE_UNIT_SIZE;
                    // Record the message's CommitLog offset plus its size
                    this.maxPhysicOffset = offset + size;
                    if (isExtAddr(tagsCode)) {
                        maxExtAddr = tagsCode;
                    }
                } else {
                    // No more data in this file
                    log.info("recover current consume queue file over, " + mappedFile.getFileName() + " "
                        + offset + " " + size + " " + tagsCode);
                    break;
                }
            }

            // The whole file was valid, i.e. we reached its end
            if (mappedFileOffset == mappedFileSizeLogics) {
                // Move on to the next file
                index++;
                if (index >= mappedFiles.size()) {
                    // This was the last file: stop
                    log.info("recover last consume queue file over, last mapped file "
                        + mappedFile.getFileName());
                    break;
                } else {
                    // Recover the next file
                    mappedFile = mappedFiles.get(index);
                    byteBuffer = mappedFile.sliceByteBuffer();
                    processOffset = mappedFile.getFileFromOffset();
                    mappedFileOffset = 0;
                    log.info("recover next consume queue file, " + mappedFile.getFileName());
                }
            } else {
                // Recovery of this consume queue is finished
                log.info("recover current consume queue queue over " + mappedFile.getFileName() + " "
                    + (processOffset + mappedFileOffset));
                break;
            }
        }

        // The recovered physical offset of this queue
        processOffset += mappedFileOffset;
        // Set the flush pointer
        this.mappedFileQueue.setFlushedWhere(processOffset);
        // Set the commit pointer
        this.mappedFileQueue.setCommittedWhere(processOffset);
        // Delete or trim everything after processOffset
        this.mappedFileQueue.truncateDirtyFiles(processOffset);

        if (isExtReadEnable()) {
            this.consumeQueueExt.recover();
            log.info("Truncate consume queue extend file by max {}", maxExtAddr);
            this.consumeQueueExt.truncateByMaxAddress(maxExtAddr);
        }
    }
}
```
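Each ConsumeQueue entry is a fixed 20-byte unit (`CQ_STORE_UNIT_SIZE`). It holds an 8-byte CommitLog offset, a 4-byte message size and an 8-byte tags code, read in that order by the loop above. The sketch below replays the per-file scan on a plain `ByteBuffer`. It stops at the first entry whose offset is negative or whose size is not positive. It reports how many bytes were valid and the resulting `maxPhysicOffset`. `ConsumeQueueScanSketch` is hypothetical and ignores the extended (ext) file.

```java
import java.nio.ByteBuffer;

// Hypothetical replay of the per-file scan in ConsumeQueue.recover(); not RocketMQ code.
final class ConsumeQueueScanSketch {
    static final int CQ_STORE_UNIT_SIZE = 20; // 8-byte offset + 4-byte size + 8-byte tagsCode

    // Returns {validBytes, maxPhysicOffset}; maxPhysicOffset stays -1 if no entry is valid.
    static long[] scan(ByteBuffer buf, int fileSize) {
        long validBytes = 0;
        long maxPhysicOffset = -1;
        for (int i = 0; i + CQ_STORE_UNIT_SIZE <= fileSize; i += CQ_STORE_UNIT_SIZE) {
            long offset = buf.getLong(i);
            int size = buf.getInt(i + 8);
            if (offset >= 0 && size > 0) {
                validBytes = i + CQ_STORE_UNIT_SIZE;
                maxPhysicOffset = offset + size; // end of this message in the CommitLog
            } else {
                break; // zero-filled or corrupt entry: valid data ends here
            }
        }
        return new long[] {validBytes, maxPhysicOffset};
    }
}
```

If `validBytes` equals the file size, the real method moves on to the next file. Otherwise, `fileFromOffset + validBytes` becomes the `processOffset` passed to `truncateDirtyFiles`.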

Deleting all files after offset

MappedFileQueue

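```java
// Delete all files after offset (and trim the file that contains offset)
public void truncateDirtyFiles(long offset) {
    List<MappedFile> willRemoveFiles = new ArrayList<MappedFile>();

    // Iterate over the files in the directory
    for (MappedFile file : this.mappedFiles) {
        // Offset of the file's tail
        long fileTailOffset = file.getFileFromOffset() + this.mappedFileSize;
        // The file extends past offset
        if (fileTailOffset > offset) {
            // offset falls inside this file (file start <= offset),
            // so the file holds valid data up to offset
            if (offset >= file.getFileFromOffset()) {
                // Reset this file's write, commit and flush pointers
                file.setWrotePosition((int) (offset % this.mappedFileSize));
                file.setCommittedPosition((int) (offset % this.mappedFileSize));
                file.setFlushedPosition((int) (offset % this.mappedFileSize));
            } else {
                // The file was created after the last valid file:
                // release the resources held by the MappedFile (memory mapping, file channel, etc.)
                file.destroy(1000);
                // Add it to the removal list
                willRemoveFiles.add(file);
            }
        }
    }

    // Remove the files
    this.deleteExpiredFile(willRemoveFiles);
}
```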
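The three cases in `truncateDirtyFiles` depend only on where `offset` falls relative to a file's start and tail. Below is a hypothetical sketch that classifies a file and computes the position its pointers are reset to. `TruncateDecisionSketch` is not RocketMQ code.

```java
// Hypothetical classification of the cases handled by MappedFileQueue.truncateDirtyFiles().
final class TruncateDecisionSketch {
    enum Action { KEEP, TRIM, DELETE }

    static Action decide(long fileFromOffset, int fileSize, long offset) {
        long fileTailOffset = fileFromOffset + fileSize;
        if (fileTailOffset <= offset) {
            return Action.KEEP;   // entirely before offset: untouched
        }
        if (offset >= fileFromOffset) {
            return Action.TRIM;   // offset falls inside the file: reset its pointers
        }
        return Action.DELETE;     // starts after offset: destroyed and removed
    }

    // The write/commit/flush position a TRIM file is reset to.
    static int trimmedPosition(long offset, int fileSize) {
        return (int) (offset % fileSize);
    }
}
```

For example, with 100-byte files and `offset = 150`, the file starting at 0 is kept. The file starting at 100 is trimmed to position 50, and the file starting at 200 is deleted.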

Destroying a MappedFile

MappedFile

```java
public boolean destroy(final long intervalForcibly) {
    // Shut down the MappedFile
    this.shutdown(intervalForcibly);

    // Check whether cleanup has completed
    if (this.isCleanupOver()) {
        try {
            // Close the file channel
            this.fileChannel.close();
            log.info("close file channel " + this.fileName + " OK");

            long beginTime = System.currentTimeMillis();
            // Delete the physical file
            boolean result = this.file.delete();
            log.info("delete file[REF:" + this.getRefCount() + "] " + this.fileName
                + (result ? " OK, " : " Failed, ") + "W:" + this.getWrotePosition() + " M:"
                + this.getFlushedPosition() + ", "
                + UtilAll.computeElapsedTimeMilliseconds(beginTime));
        } catch (Exception e) {
            log.warn("close file channel " + this.fileName + " Failed. ", e);
        }

        return true;
    } else {
        log.warn("destroy mapped file[REF:" + this.getRefCount() + "] " + this.fileName
            + " Failed. cleanupOver: " + this.cleanupOver);
    }

    return false;
}
```

Shutting down a MappedFile

ReferenceResource

```java
public void shutdown(final long intervalForcibly) {
    // available defaults to true
    if (this.available) {
        // On the first call available is true: set it to false
        this.available = false;
        // Record the timestamp of the first shutdown
        this.firstShutdownTimestamp = System.currentTimeMillis();
        // Drop this reference; resources are freed only once the count falls below 1
        this.release();
    } else if (this.getRefCount() > 0) {
        // References are still held. Once the time since firstShutdownTimestamp reaches
        // intervalForcibly (the maximum grace period), force the reference count negative
        // (-1000 - refCount) and call release() to free the resources.
        if ((System.currentTimeMillis() - this.firstShutdownTimestamp) >= intervalForcibly) {
            this.refCount.set(-1000 - this.getRefCount());
            this.release();
        }
    }
}
```

Releasing references and resources

ReferenceResource

```java
public void release() {
    // Decrement the reference count
    long value = this.refCount.decrementAndGet();
    if (value > 0)
        return;

    synchronized (this) {
        // No references left: release the memory mapping
        this.cleanupOver = this.cleanup(value);
    }
}
```
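`shutdown`, `release` and `cleanup` together form a reference-counting scheme. Readers take a reference before using a file and release it afterwards. The mapping is torn down only when the count drops to zero or the forced-shutdown grace period expires.

The sketch below reproduces that scheme in isolation. `RefCountedSketch` is hypothetical. It takes the current time as a parameter so it can be tested, and its `cleanup()` only records the unmap instead of unmapping a real buffer. The `hold()` method is my reading of `ReferenceResource` and is not shown in this article.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical stand-in for ReferenceResource; cleanup() does not unmap anything real.
final class RefCountedSketch {
    private final AtomicLong refCount = new AtomicLong(1); // the owner holds the first reference
    private volatile boolean available = true;
    private volatile boolean cleanupOver = false;
    private volatile long firstShutdownTimestamp = 0;

    // A reader takes a reference; this fails once shutdown has started.
    synchronized boolean hold() {
        if (available) {
            if (refCount.getAndIncrement() > 0) {
                return true;
            }
            refCount.getAndDecrement();
        }
        return false;
    }

    void release() {
        long value = refCount.decrementAndGet();
        if (value > 0) {
            return; // other references are still alive
        }
        synchronized (this) {
            cleanupOver = cleanup();
        }
    }

    void shutdown(long intervalForcibly, long nowMillis) {
        if (available) {
            available = false;
            firstShutdownTimestamp = nowMillis;
            release(); // drop the owner's reference
        } else if (refCount.get() > 0 && nowMillis - firstShutdownTimestamp >= intervalForcibly) {
            refCount.set(-1000 - refCount.get()); // force the count negative
            release();
        }
    }

    private boolean cleanup() {
        return !available; // the real code unmaps the MappedByteBuffer here
    }

    boolean isCleanupOver() {
        return cleanupOver;
    }
}
```

This is why `destroy(intervalForcibly)` may need to be called more than once. The first call only marks the file unavailable if a reader still holds it. A later call after the grace period forces the cleanup.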

Unmapping the off-heap memory

MappedFile

```java
public boolean cleanup(final long currentRef) {
    // If available is true, the MappedFile is still in use and must not be cleaned up
    if (this.isAvailable()) {
        log.error("this file[REF:" + currentRef + "] " + this.fileName
            + " have not shutdown, stop unmapping.");
        return false;
    }

    // Already cleaned up: return true
    if (this.isCleanupOver()) {
        log.error("this file[REF:" + currentRef + "] " + this.fileName
            + " have cleanup, do not do it again.");
        return true;
    }

    // Unmap the off-heap MappedByteBuffer through its cleaner
    clean(this.mappedByteBuffer);
    // Update the total mapped virtual memory
    TOTAL_MAPPED_VIRTUAL_MEMORY.addAndGet(this.fileSize * (-1));
    // Decrement the mapped-file count
    TOTAL_MAPPED_FILES.decrementAndGet();
    log.info("unmap file[REF:" + currentRef + "] " + this.fileName + " OK");
    return true;
}
```

Deleting expired files

MappedFileQueue

```java
void deleteExpiredFile(List<MappedFile> files) {

    if (!files.isEmpty()) {

        Iterator<MappedFile> iterator = files.iterator();
        while (iterator.hasNext()) {
            MappedFile cur = iterator.next();
            // Skip files that are not in mappedFiles
            if (!this.mappedFiles.contains(cur)) {
                iterator.remove();
                log.info("This mappedFile {} is not contained by mappedFiles, so skip it.", cur.getFileName());
            }
        }

        try {
            // Remove all of them from mappedFiles
            if (!this.mappedFiles.removeAll(files)) {
                log.error("deleteExpiredFile remove failed.");
            }
        } catch (Exception e) {
            log.error("deleteExpiredFile has exception.", e);
        }
    }
}
```

CommitLog recovery after a normal shutdown

CommitLog

```java
public void recoverNormally(long maxPhyOffsetOfConsumeQueue) {
    // Whether to verify the CRC of each message while recovering
    boolean checkCRCOnRecover = this.defaultMessageStore.getMessageStoreConfig().isCheckCRCOnRecover();
    final List<MappedFile> mappedFiles = this.mappedFileQueue.getMappedFiles();
    if (!mappedFiles.isEmpty()) {
        // Began to recover from the last third file (i.e. the third-to-last file)
        int index = mappedFiles.size() - 3;
        // With fewer than 3 files, start from the first file
        if (index < 0)
            index = 0;

        MappedFile mappedFile = mappedFiles.get(index);
        ByteBuffer byteBuffer = mappedFile.sliceByteBuffer();
        // Confirmed physical offset in the CommitLog: mappedFile.getFileFromOffset() plus mappedFileOffset
        long processOffset = mappedFile.getFileFromOffset();
        // Offset within the current file that has been validated so far
        long mappedFileOffset = 0;
        while (true) {
            // Read one message
            DispatchRequest dispatchRequest = this.checkMessageAndReturnSize(byteBuffer, checkCRCOnRecover);
            int size = dispatchRequest.getMsgSize();
            // Normal data
            // A successful read with size > 0 means the message is valid
            if (dispatchRequest.isSuccess() && size > 0) {
                // Advance mappedFileOffset by the length of this message
                mappedFileOffset += size;
            }
            // Come the end of the file, switch to the next file Since the
            // return 0 representatives met last hole,
            // this can not be included in truncate offset
            else if (dispatchRequest.isSuccess() && size == 0) {
                // A successful read with size == 0 means the end of this file was reached
                index++;
                // No more files: stop
                if (index >= mappedFiles.size()) {
                    // Current branch can not happen
                    log.info("recover last 3 physics file over, last mapped file " + mappedFile.getFileName());
                    break;
                } else {
                    // More files remain: reset the variables and continue with the next file
                    mappedFile = mappedFiles.get(index);
                    byteBuffer = mappedFile.sliceByteBuffer();
                    processOffset = mappedFile.getFileFromOffset();
                    mappedFileOffset = 0;
                    log.info("recover next physics file, " + mappedFile.getFileName());
                }
            }
            // Intermediate file read error
            else if (!dispatchRequest.isSuccess()) {
                // Failed to read a message: stop here
                log.info("recover physics file end, " + mappedFile.getFileName());
                break;
            }
        }

        processOffset += mappedFileOffset;
        this.mappedFileQueue.setFlushedWhere(processOffset);
        this.mappedFileQueue.setCommittedWhere(processOffset);
        // Delete or trim everything after processOffset
        this.mappedFileQueue.truncateDirtyFiles(processOffset);

        // Clear ConsumeQueue redundant data
        // The consume queues reference CommitLog data beyond what was recovered
        if (maxPhyOffsetOfConsumeQueue >= processOffset) {
            log.warn("maxPhyOffsetOfConsumeQueue({}) >= processOffset({}), truncate dirty logic files", maxPhyOffsetOfConsumeQueue, processOffset);
            // Truncate the dirty ConsumeQueue data stored beyond processOffset
            this.defaultMessageStore.truncateDirtyLogicFiles(processOffset);
        }
    } else {
        // Commitlog case files are deleted
        log.warn("The commitlog files are deleted, and delete the consume queue files");
        // No CommitLog files exist
        this.mappedFileQueue.setFlushedWhere(0);
        this.mappedFileQueue.setCommittedWhere(0);
        // Destroy all ConsumeQueue files
        this.defaultMessageStore.destroyLogics();
    }
}
```
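Stripped of CRC checks and dispatching, `recoverNormally` walks length-prefixed records:

- A valid record advances `mappedFileOffset` by its size.
- The end-of-file blank marker (reported as size 0) switches to the next file.
- Anything unreadable stops the scan.

The sketch below models only that control flow. Its record header (a 4-byte total size followed by a 4-byte magic code) matches the first two fields of a CommitLog message. However, `MSG_MAGIC` and `BLANK_MAGIC` are placeholder values, not RocketMQ's constants. The sketch also assumes file `i` starts at physical offset `i * fileSize`. `CommitLogRecoverSketch` is hypothetical.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;
import java.util.List;

// Hypothetical model of the scan loop in CommitLog.recoverNormally(); not RocketMQ code.
final class CommitLogRecoverSketch {
    static final int MSG_MAGIC = 0x0A0A0A0A;   // placeholder for the message magic code
    static final int BLANK_MAGIC = 0x0B0B0B0B; // placeholder for the end-of-file magic code

    // Returns processOffset: the physical offset up to which the CommitLog is valid.
    static long recover(long fileSize, int startIndex, ByteBuffer... fileBuffers) {
        List<ByteBuffer> files = Arrays.asList(fileBuffers);
        int index = startIndex;
        ByteBuffer buf = files.get(index).duplicate();
        long processOffset = index * fileSize;
        long mappedFileOffset = 0;
        while (true) {
            if (buf.remaining() < 8) {
                break; // no room for a record header
            }
            int totalSize = buf.getInt(buf.position());
            int magic = buf.getInt(buf.position() + 4);
            if (magic == MSG_MAGIC && totalSize > 0 && totalSize <= buf.remaining()) {
                buf.position(buf.position() + totalSize); // valid message: advance
                mappedFileOffset += totalSize;
            } else if (magic == BLANK_MAGIC) {
                index++; // end of this file; the blank is not counted
                if (index >= files.size()) {
                    break;
                }
                buf = files.get(index).duplicate();
                processOffset = index * fileSize;
                mappedFileOffset = 0;
            } else {
                break; // unreadable record: valid data ends here
            }
        }
        return processOffset + mappedFileOffset;
    }

    // Consume queues must be truncated when they reference data beyond the recovered CommitLog.
    static boolean needsConsumeQueueTruncate(long maxPhyOffsetOfConsumeQueue, long processOffset) {
        return maxPhyOffsetOfConsumeQueue >= processOffset;
    }
}
```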

Truncating the dirty ConsumeQueue data stored after processOffset

```java
public void truncateDirtyLogicFiles(long phyOffset) {
    ConcurrentMap<String, ConcurrentMap<Integer, ConsumeQueue>> tables = DefaultMessageStore.this.consumeQueueTable;

    for (ConcurrentMap<Integer, ConsumeQueue> maps : tables.values()) {
        // Iterate over each queue directory
        for (ConsumeQueue logic : maps.values()) {
            // Truncate each queue
            logic.truncateDirtyLogicFiles(phyOffset);
        }
    }
}
```

Truncating the files after phyOffet

ConsumeQueue

```java
public void truncateDirtyLogicFiles(long phyOffet) {
    // Size of one logical (consume queue) file
    int logicFileSize = this.mappedFileSize;

    // Set the maximum CommitLog offset
    this.maxPhysicOffset = phyOffet;
    long maxExtAddr = 1;
    while (true) {
        // Take the last file
        MappedFile mappedFile = this.mappedFileQueue.getLastMappedFile();
        if (mappedFile != null) {
            ByteBuffer byteBuffer = mappedFile.sliceByteBuffer();

            // Reset the write, commit and flush positions
            mappedFile.setWrotePosition(0);
            mappedFile.setCommittedPosition(0);
            mappedFile.setFlushedPosition(0);

            for (int i = 0; i < logicFileSize; i += CQ_STORE_UNIT_SIZE) {
                // Read one entry
                long offset = byteBuffer.getLong();
                int size = byteBuffer.getInt();
                long tagsCode = byteBuffer.getLong();

                // First entry of the file
                if (0 == i) {
                    // If the first entry's CommitLog offset is >= phyOffet,
                    // every entry in this file is invalid
                    if (offset >= phyOffet) {
                        // Delete the file and continue with the new last file
                        this.mappedFileQueue.deleteLastMappedFile();
                        break;
                    } else {
                        // Move on to the next entry
                        int pos = i + CQ_STORE_UNIT_SIZE;
                        // Update the write, commit and flush positions
                        mappedFile.setWrotePosition(pos);
                        mappedFile.setCommittedPosition(pos);
                        mappedFile.setFlushedPosition(pos);
                        // Record the CommitLog offset covered so far
                        this.maxPhysicOffset = offset + size;
                        // This maybe not take effect, when not every consume queue has extend file.
                        if (isExtAddr(tagsCode)) {
                            maxExtAddr = tagsCode;
                        }
                    }
                } else {
                    // Not the first entry
                    // The entry is valid
                    if (offset >= 0 && size > 0) {

                        if (offset >= phyOffet) {
                            // Return instead of deleting the file, because the earlier entries in
                            // this file are valid. The stale entries from here on are not cleared;
                            // later writes overwrite them. The write, commit and flush positions
                            // were already set correctly while processing the previous entry.
                            return;
                        }

                        // Move on to the next entry
                        int pos = i + CQ_STORE_UNIT_SIZE;
                        // Update the write, commit and flush positions
                        mappedFile.setWrotePosition(pos);
                        mappedFile.setCommittedPosition(pos);
                        mappedFile.setFlushedPosition(pos);
                        // Record the CommitLog offset covered so far
                        this.maxPhysicOffset = offset + size;
                        if (isExtAddr(tagsCode)) {
                            maxExtAddr = tagsCode;
                        }

                        // Reached the last entry of the file: return
                        if (pos == logicFileSize) {
                            return;
                        }
                    } else {
                        // Hit an invalid entry: return
                        return;
                    }
                }
            }
        } else {
            // No files left: stop
            break;
        }
    }

    if (isExtReadEnable()) {
        this.consumeQueueExt.truncateByMaxAddress(maxExtAddr);
    }
}
```
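For the last ConsumeQueue file, the loop above amounts to two rules:

- Delete the file if its first entry already points at or beyond `phyOffet`.
- Otherwise, keep every leading entry that is valid and points below `phyOffet`.

Below is a simplified single-file sketch. `ConsumeQueueTruncateSketch` is hypothetical. Unlike the real method, it also stops at an invalid first entry instead of accepting it, and it does not move on to the previous file after a deletion.

```java
import java.nio.ByteBuffer;

// Hypothetical single-file version of ConsumeQueue.truncateDirtyLogicFiles(); not RocketMQ code.
final class ConsumeQueueTruncateSketch {
    static final int CQ_STORE_UNIT_SIZE = 20;

    // Returns the new write position, or -1 if the whole file should be deleted.
    static int newWrotePosition(ByteBuffer buf, int fileSize, long phyOffset) {
        int pos = 0;
        for (int i = 0; i + CQ_STORE_UNIT_SIZE <= fileSize; i += CQ_STORE_UNIT_SIZE) {
            long offset = buf.getLong(i);
            int size = buf.getInt(i + 8);
            if (i == 0 && offset >= phyOffset) {
                return -1; // even the first entry is dirty: delete the file
            }
            if (offset < 0 || size <= 0 || offset >= phyOffset) {
                break; // invalid or dirty entry: keep everything before it
            }
            pos = i + CQ_STORE_UNIT_SIZE;
        }
        return pos;
    }
}
```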

Destroying all ConsumeQueue files

DefaultMessageStore

```java
public void destroyLogics() {
    for (ConcurrentMap<Integer, ConsumeQueue> maps : this.consumeQueueTable.values()) {
        // Iterate over every ConsumeQueue directory
        for (ConsumeQueue logic : maps.values()) {
            // Destroy all ConsumeQueue files in this directory
            logic.destroy();
        }
    }
}
```

ConsumeQueue

```java
public void destroy() {
    this.maxPhysicOffset = -1;
    this.minLogicOffset = 0;
    // Delete every file under this consume queue's directory
    this.mappedFileQueue.destroy();
    if (isExtReadEnable()) {
        this.consumeQueueExt.destroy();
    }
}
```

MappedFileQueue

```java
public void destroy() {
    // Iterate over every consume queue file in the directory
    for (MappedFile mf : this.mappedFiles) {
        // Close the channel and delete the physical file
        mf.destroy(1000 * 3);
    }
    // Clear the list
    this.mappedFiles.clear();
    this.flushedWhere = 0;

    // delete parent directory
    File file = new File(storePath);
    if (file.isDirectory()) {
        file.delete();
    }
}
```

CommitLog recovery after an abnormal shutdown

CommitLog

```java
// File recovery after an abnormal broker shutdown
@Deprecated
public void recoverAbnormally(long maxPhyOffsetOfConsumeQueue) {
    // recover by the minimum time stamp
    boolean checkCRCOnRecover = this.defaultMessageStore.getMessageStoreConfig().isCheckCRCOnRecover();
    final List<MappedFile> mappedFiles = this.mappedFileQueue.getMappedFiles();
    if (!mappedFiles.isEmpty()) {
        // Looking beginning to recover from which file
        int index = mappedFiles.size() - 1;
        MappedFile mappedFile = null;
        // Walk backwards from the last file
        for (; index >= 0; index--) {
            mappedFile = mappedFiles.get(index);
            // Check whether this file is a valid starting point for recovery
            if (this.isMappedFileMatchedRecover(mappedFile)) {
                log.info("recover from this mapped file " + mappedFile.getFileName());
                break;
            }
        }

        // No file matched: start from the first file
        if (index < 0) {
            index = 0;
            mappedFile = mappedFiles.get(index);
        }

        ByteBuffer byteBuffer = mappedFile.sliceByteBuffer();
        long processOffset = mappedFile.getFileFromOffset();
        long mappedFileOffset = 0;
        // Iterate over the messages
        while (true) {
            // Read messages one at a time from the ByteBuffer, deserializing each into a
            // DispatchRequest that records the message's data
            DispatchRequest dispatchRequest = this.checkMessageAndReturnSize(byteBuffer, checkCRCOnRecover);
            int size = dispatchRequest.getMsgSize();

            if (dispatchRequest.isSuccess()) {
                // Normal data
                if (size > 0) {
                    mappedFileOffset += size;

                    // If duplication is enabled
                    if (this.defaultMessageStore.getMessageStoreConfig().isDuplicationEnable()) {
                        // only dispatch messages whose physical offset is below the confirm offset
                        if (dispatchRequest.getCommitLogOffset() < this.defaultMessageStore.getConfirmOffset()) {
                            // Dispatch the request to rebuild the ConsumeQueue and index entries
                            this.defaultMessageStore.doDispatch(dispatchRequest);
                        }
                    } else {
                        this.defaultMessageStore.doDispatch(dispatchRequest);
                    }
                }
                // Come the end of the file, switch to the next file
                // Since the return 0 representatives met last hole, this can
                // not be included in truncate offset
                else if (size == 0) {
                    // End of this file: move on to the next one
                    index++;
                    // Past the last file: stop
                    if (index >= mappedFiles.size()) {
                        // The current branch under normal circumstances should
                        // not happen
                        log.info("recover physics file over, last mapped file " + mappedFile.getFileName());
                        break;
                    } else {
                        mappedFile = mappedFiles.get(index);
                        byteBuffer = mappedFile.sliceByteBuffer();
                        processOffset = mappedFile.getFileFromOffset();
                        mappedFileOffset = 0;
                        log.info("recover next physics file, " + mappedFile.getFileName());
                    }
                }
            } else {
                // Failed to parse the data: stop
                log.info("recover physics file end, " + mappedFile.getFileName() + " pos=" + byteBuffer.position());
                break;
            }
        }

        processOffset += mappedFileOffset;
        this.mappedFileQueue.setFlushedWhere(processOffset);
        this.mappedFileQueue.setCommittedWhere(processOffset);
        // Delete or trim everything after processOffset
        this.mappedFileQueue.truncateDirtyFiles(processOffset);

        // Clear ConsumeQueue redundant data
        if (maxPhyOffsetOfConsumeQueue >= processOffset) {
            log.warn("maxPhyOffsetOfConsumeQueue({}) >= processOffset({}), truncate dirty logic files", maxPhyOffsetOfConsumeQueue, processOffset);
            this.defaultMessageStore.truncateDirtyLogicFiles(processOffset);
        }
    }
    // Commitlog case files are deleted
    else {
        log.warn("The commitlog files are deleted, and delete the consume queue files");
        // No CommitLog files found: set the CommitLog directory's flushedWhere and committedWhere to 0
        this.mappedFileQueue.setFlushedWhere(0);
        this.mappedFileQueue.setCommittedWhere(0);
        // Destroy the consume queue files
        this.defaultMessageStore.destroyLogics();
    }
}
```
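Two decisions drive `recoverAbnormally`: which file to start from, and whether a recovered message is dispatched. The sketch below isolates both. `AbnormalRecoverySketch` is hypothetical. `firstStoreTimestamps[i]` stands for the store timestamp of the first message in file `i` (0 for an empty file). `checkpointMinTimestamp` stands for the checkpoint value that `isMappedFileMatchedRecover` compares against.

```java
// Hypothetical isolation of the two decisions in CommitLog.recoverAbnormally(); not RocketMQ code.
final class AbnormalRecoverySketch {
    // Walk backwards and start from the newest file whose first message is covered by the checkpoint.
    static int chooseStartFile(long[] firstStoreTimestamps, long checkpointMinTimestamp) {
        for (int i = firstStoreTimestamps.length - 1; i >= 0; i--) {
            long ts = firstStoreTimestamps[i];
            if (ts != 0 && ts <= checkpointMinTimestamp) {
                return i;
            }
        }
        return 0; // no file matched: fall back to the first file
    }

    // With duplicationEnable, only messages below the confirm offset are dispatched.
    static boolean shouldDispatch(boolean duplicationEnable, long commitLogOffset, long confirmOffset) {
        return !duplicationEnable || commitLogOffset < confirmOffset;
    }
}
```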

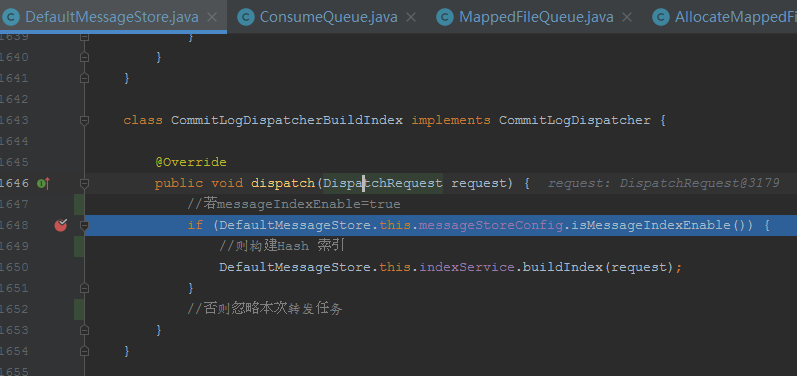

Checking whether a CommitLog file is a valid starting point for recovery

CommitLog

```java
// Check whether a CommitLog file is a valid starting point for recovery
private boolean isMappedFileMatchedRecover(final MappedFile mappedFile) {
    ByteBuffer byteBuffer = mappedFile.sliceByteBuffer();

    // Use the magic code to check that the file follows the CommitLog message format
    int magicCode = byteBuffer.getInt(MessageDecoder.MESSAGE_MAGIC_CODE_POSTION);
    if (magicCode != MESSAGE_MAGIC_CODE) {
        return false;
    }

    int sysFlag = byteBuffer.getInt(MessageDecoder.SYSFLAG_POSITION);
    int bornhostLength = (sysFlag & MessageSysFlag.BORNHOST_V6_FLAG) == 0 ? 8 : 20;
    int msgStoreTimePos = 4 + 4 + 4 + 4 + 4 + 8 + 8 + 4 + 8 + bornhostLength;
    // A store timestamp of 0 means no message has been stored in this file
    long storeTimestamp = byteBuffer.getLong(msgStoreTimePos);
    if (0 == storeTimestamp) {
        return false;
    }

    // If messageIndexEnable and messageIndexSafe are both true, the index file flush time is included too
    if (this.defaultMessageStore.getMessageStoreConfig().isMessageIndexEnable()
        && this.defaultMessageStore.getMessageStoreConfig().isMessageIndexSafe()) {
        if (storeTimestamp <= this.defaultMessageStore.getStoreCheckpoint().getMinTimestampIndex()) {
            log.info("find check timestamp, {} {}",
                storeTimestamp,
                UtilAll.timeMillisToHumanString(storeTimestamp));
            return true;
        }
    } else {
        // The file's first message is no later than the checkpoint, so its messages up to the
        // checkpoint are reliable
        if (storeTimestamp <= this.defaultMessageStore.getStoreCheckpoint().getMinTimestamp()) {
            log.info("find check timestamp, {} {}",
                storeTimestamp,
                UtilAll.timeMillisToHumanString(storeTimestamp));
            return true;
        }
    }

    return false;
}
```
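The constant expression for `msgStoreTimePos` is the sum of the fields that precede STORETIMESTAMP in a CommitLog message:

- TOTALSIZE (4)
- MAGICCODE (4)
- BODYCRC (4)
- QUEUEID (4)
- FLAG (4)
- QUEUEOFFSET (8)
- PHYSICALOFFSET (8)
- SYSFLAG (4)
- BORNTIMESTAMP (8)
- BORNHOST (8 bytes for an IPv4 address plus port, 20 for IPv6)

The tiny sketch below reproduces that arithmetic. The class name is hypothetical. The field names follow RocketMQ's message layout as I understand it.

```java
// Hypothetical helper reproducing the msgStoreTimePos arithmetic in isMappedFileMatchedRecover().
final class StoreTimestampPositionSketch {
    static int msgStoreTimePos(boolean bornHostIsV6) {
        int bornhostLength = bornHostIsV6 ? 20 : 8; // 4- or 16-byte address + 4-byte port
        return 4   // TOTALSIZE
            + 4    // MAGICCODE
            + 4    // BODYCRC
            + 4    // QUEUEID
            + 4    // FLAG
            + 8    // QUEUEOFFSET
            + 8    // PHYSICALOFFSET
            + 4    // SYSFLAG
            + 8    // BORNTIMESTAMP
            + bornhostLength; // BORNHOST
    }
}
```

So for an IPv4 producer, the store timestamp of a file's first message sits at byte 56; for IPv6 it sits at byte 68.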

Including the index file flush time in the check

StoreCheckpoint

```java
public long getMinTimestampIndex() {
    // Minimum of the three flush timestamps: CommitLog and ConsumeQueue (via getMinTimestamp())
    // and the index file
    return Math.min(this.getMinTimestamp(), this.indexMsgTimestamp);
}
```

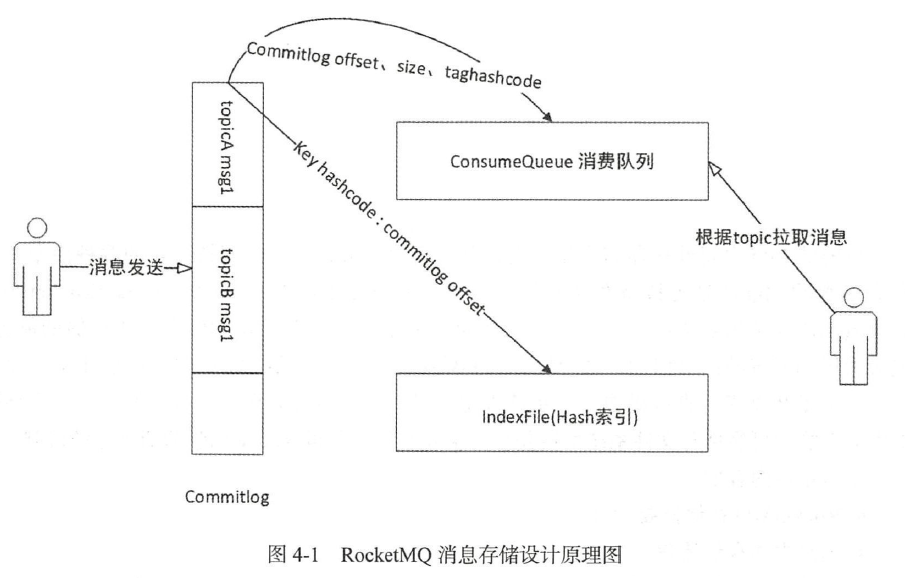

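1) CommitLog: the message storage file. Messages of all topics are stored in the CommitLog files.

2) ConsumeQueue: the message consume queue. Once a message reaches the CommitLog, it is dispatched asynchronously to the consume queue, from which consumers consume it.

3) IndexFile: the message index file, which mainly stores the mapping between message keys and offsets.

4) Transaction state service: stores the transaction state of each message.

5) Scheduled message service: each delay level corresponds to one consume queue, and the service stores the pull progress of each delay queue.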

RocketMQ stores the messages of all topics in the same CommitLog, so that messages are written to the file sequentially as they are sent.

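Consumers consume by topic, so scanning the shared CommitLog for one topic's messages would be inefficient. RocketMQ therefore introduces ConsumeQueue files: each topic contains multiple consume queues, and each consume queue has its own files.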

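The IndexFile is designed mainly to speed up message lookup: it lets messages be retrieved quickly from the CommitLog by their attributes (such as the message key).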
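The ConsumeQueue is what makes per-topic consumption cheap. A consumer's logical offset in a queue maps to a fixed position in the ConsumeQueue file, because entries are 20 bytes each. The entry at that position gives the message's physical offset and size in the CommitLog. Below is a minimal sketch of that indirection. It assumes a single ConsumeQueue file and ignores file rollover. `ConsumeQueueEntrySketch` is hypothetical.

```java
import java.nio.ByteBuffer;

// Hypothetical illustration of the ConsumeQueue indirection; assumes everything fits in one file.
final class ConsumeQueueEntrySketch {
    static final int CQ_STORE_UNIT_SIZE = 20; // 8-byte commitLogOffset + 4-byte size + 8-byte tagsCode

    // Write the entry for logical offset queueOffset.
    static void put(ByteBuffer cq, long queueOffset, long commitLogOffset, int size, long tagsCode) {
        int pos = (int) (queueOffset * CQ_STORE_UNIT_SIZE);
        cq.putLong(pos, commitLogOffset);
        cq.putInt(pos + 8, size);
        cq.putLong(pos + 12, tagsCode);
    }

    // Read back {commitLogOffset, size} for logical offset queueOffset.
    static long[] locate(ByteBuffer cq, long queueOffset) {
        int pos = (int) (queueOffset * CQ_STORE_UNIT_SIZE);
        return new long[] {cq.getLong(pos), cq.getInt(pos + 8)};
    }
}
```

For example, after `put(cq, 1, 4096, 200, tags)`, a consumer at queue offset 1 reads 200 bytes from CommitLog offset 4096. It never scans messages of other topics.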

Storage flow

SendMessageProcessor

```java
private CompletableFuture<RemotingCommand> asyncSendMessage(ChannelHandlerContext ctx, RemotingCommand request,
                                                            SendMessageContext mqtraceContext,
                                                            SendMessageRequestHeader requestHeader) {
    // Validate the topic and create its TopicConfig if necessary
    final RemotingCommand response = preSend(ctx, request, requestHeader);
    final SendMessageResponseHeader responseHeader = (SendMessageResponseHeader)response.readCustomHeader();

    if (response.getCode() != -1) {
        return CompletableFuture.completedFuture(response);
    }

    final byte[] body = request.getBody();

    // Get the topic configuration
    int queueIdInt = requestHeader.getQueueId();
    TopicConfig topicConfig = this.brokerController.getTopicConfigManager().selectTopicConfig(requestHeader.getTopic());

    if (queueIdInt < 0) {
        queueIdInt = randomQueueId(topicConfig.getWriteQueueNums());
    }

    // Build the broker-side message object, which carries the extra fields
    MessageExtBrokerInner msgInner = new MessageExtBrokerInner();
    msgInner.setTopic(requestHeader.getTopic());
    msgInner.setQueueId(queueIdInt);

    if (!handleRetryAndDLQ(requestHeader, response, request, msgInner, topicConfig)) {
        return CompletableFuture.completedFuture(response);
    }

    msgInner.setBody(body);
    msgInner.setFlag(requestHeader.getFlag());
    MessageAccessor.setProperties(msgInner, MessageDecoder.string2messageProperties(requestHeader.getProperties()));
    msgInner.setPropertiesString(requestHeader.getProperties());
    msgInner.setBornTimestamp(requestHeader.getBornTimestamp());
    msgInner.setBornHost(ctx.channel().remoteAddress());
    msgInner.setStoreHost(this.getStoreHost());
    msgInner.setReconsumeTimes(requestHeader.getReconsumeTimes() == null ? 0 : requestHeader.getReconsumeTimes());
    String clusterName = this.brokerController.getBrokerConfig().getBrokerClusterName();
    MessageAccessor.putProperty(msgInner, MessageConst.PROPERTY_CLUSTER, clusterName);
    msgInner.setPropertiesString(MessageDecoder.messageProperties2String(msgInner.getProperties()));

    CompletableFuture<PutMessageResult> putMessageResult = null;
    Map<String, String> origProps = MessageDecoder.string2messageProperties(requestHeader.getProperties());
    String transFlag = origProps.get(MessageConst.PROPERTY_TRANSACTION_PREPARED);
    if (transFlag != null && Boolean.parseBoolean(transFlag)) {
        if (this.brokerController.getBrokerConfig().isRejectTransactionMessage()) {
            response.setCode(ResponseCode.NO_PERMISSION);
            response.setRemark(
                "the broker[" + this.brokerController.getBrokerConfig().getBrokerIP1()
                    + "] sending transaction message is forbidden");
            return CompletableFuture.completedFuture(response);
        }
        putMessageResult = this.brokerController.getTransactionalMessageService().asyncPrepareMessage(msgInner);
    } else {
        // Store the message asynchronously
        putMessageResult = this.brokerController.getMessageStore().asyncPutMessage(msgInner);
    }
    return handlePutMessageResultFuture(putMessageResult, response, request, msgInner, responseHeader, mqtraceContext, ctx, queueIdInt);
}
```

Validating the message

SendMessageProcessor

```java
private RemotingCommand preSend(ChannelHandlerContext ctx, RemotingCommand request,
                                SendMessageRequestHeader requestHeader) {
    final RemotingCommand response = RemotingCommand.createResponseCommand(SendMessageResponseHeader.class);

    response.setOpaque(request.getOpaque());

    response.addExtField(MessageConst.PROPERTY_MSG_REGION, this.brokerController.getBrokerConfig().getRegionId());
    response.addExtField(MessageConst.PROPERTY_TRACE_SWITCH, String.valueOf(this.brokerController.getBrokerConfig().isTraceOn()));

    log.debug("Receive SendMessage request command {}", request);

    final long startTimestamp = this.brokerController.getBrokerConfig().getStartAcceptSendRequestTimeStamp();

    if (this.brokerController.getMessageStore().now() < startTimestamp) {
        response.setCode(ResponseCode.SYSTEM_ERROR);
        response.setRemark(String.format("broker unable to service, until %s", UtilAll.timeMillisToHumanString2(startTimestamp)));
        return response;
    }

    response.setCode(-1);
    // Validate the message
    super.msgCheck(ctx, requestHeader, response);
    if (response.getCode() != -1) {
        return response;
    }

    return response;
}
```

AbstractSendMessageProcessor

```java
protected RemotingCommand msgCheck(final ChannelHandlerContext ctx,
                                   final SendMessageRequestHeader requestHeader, final RemotingCommand response) {
    // Reject the send if the broker lacks write permission and the topic is an ordered topic
    if (!PermName.isWriteable(this.brokerController.getBrokerConfig().getBrokerPermission())
        && this.brokerController.getTopicConfigManager().isOrderTopic(requestHeader.getTopic())) {
        response.setCode(ResponseCode.NO_PERMISSION);
        response.setRemark("the broker[" + this.brokerController.getBrokerConfig().getBrokerIP1()
            + "] sending message is forbidden");
        return response;
    }

    // Validate the topic length and naming format
    if (!TopicValidator.validateTopic(requestHeader.getTopic(), response)) {
        return response;
    }
    // Check whether messages may be sent to this topic; the default topic cannot be sent to
    // and is only used for route lookup
    if (TopicValidator.isNotAllowedSendTopic(requestHeader.getTopic(), response)) {
        return response;
    }

    // Get the TopicConfig for this topic
    TopicConfig topicConfig =
        this.brokerController.getTopicConfigManager().selectTopicConfig(requestHeader.getTopic());
    if (null == topicConfig) {
        int topicSysFlag = 0;
        if (requestHeader.isUnitMode()) {
            if (requestHeader.getTopic().startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {
                topicSysFlag = TopicSysFlag.buildSysFlag(false, true);
            } else {
                topicSysFlag = TopicSysFlag.buildSysFlag(true, false);
            }
        }

        log.warn("the topic {} not exist, producer: {}", requestHeader.getTopic(), ctx.channel().remoteAddress());
        // Create the TopicConfig
        topicConfig = this.brokerController.getTopicConfigManager().createTopicInSendMessageMethod(
            requestHeader.getTopic(),
            requestHeader.getDefaultTopic(),
            RemotingHelper.parseChannelRemoteAddr(ctx.channel()),
            requestHeader.getDefaultTopicQueueNums(), topicSysFlag);

        if (null == topicConfig) {
            if (requestHeader.getTopic().startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {
                topicConfig =
                    this.brokerController.getTopicConfigManager().createTopicInSendMessageBackMethod(
                        requestHeader.getTopic(), 1, PermName.PERM_WRITE | PermName.PERM_READ,
                        topicSysFlag);
            }
        }

        if (null == topicConfig) {
            response.setCode(ResponseCode.TOPIC_NOT_EXIST);
            response.setRemark("topic[" + requestHeader.getTopic() + "] not exist, apply first please!"
                + FAQUrl.suggestTodo(FAQUrl.APPLY_TOPIC_URL));
            return response;
        }
    }

    int queueIdInt = requestHeader.getQueueId();
    int idValid = Math.max(topicConfig.getWriteQueueNums(), topicConfig.getReadQueueNums());
    if (queueIdInt >= idValid) {
        String errorInfo = String.format("request queueId[%d] is illegal, %s Producer: %s",
            queueIdInt,
            topicConfig.toString(),
            RemotingHelper.parseChannelRemoteAddr(ctx.channel()));

        log.warn(errorInfo);
        response.setCode(ResponseCode.SYSTEM_ERROR);
        response.setRemark(errorInfo);

        return response;
    }
    return response;
}
```
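The final check in `msgCheck` only enforces an upper bound. A queueId is accepted if it is below the larger of the topic's write and read queue counts. Negative ids are replaced by a random queue earlier, in `asyncSendMessage`.

Below is a hypothetical sketch of both rules. `QueueIdSketch` is not RocketMQ code. `java.util.Random` stands in for whatever `randomQueueId` uses internally.

```java
import java.util.Random;

// Hypothetical sketch of the queueId rules in msgCheck() and asyncSendMessage().
final class QueueIdSketch {
    // msgCheck(): reject ids at or above max(writeQueueNums, readQueueNums).
    static boolean isAcceptedQueueId(int queueId, int writeQueueNums, int readQueueNums) {
        return queueId < Math.max(writeQueueNums, readQueueNums);
    }

    // asyncSendMessage(): a negative id means "let the broker pick one of the write queues".
    static int resolveQueueId(int requestedQueueId, int writeQueueNums, Random random) {
        return requestedQueueId < 0 ? random.nextInt(writeQueueNums) : requestedQueueId;
    }
}
```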

TopicConfigManager

```java
public TopicConfig createTopicInSendMessageMethod(final String topic, final String defaultTopic,
    final String remoteAddress, final int clientDefaultTopicQueueNums, final int topicSysFlag) {
    TopicConfig topicConfig = null;
    boolean createNew = false;

    try {
        if (this.lockTopicConfigTable.tryLock(LOCK_TIMEOUT_MILLIS, TimeUnit.MILLISECONDS)) {
            try {
                // Look up the topic config again, now under the lock
                topicConfig = this.topicConfigTable.get(topic);
                if (topicConfig != null)
                    return topicConfig;

                // Get the config of the default topic (TBW102)
                TopicConfig defaultTopicConfig = this.topicConfigTable.get(defaultTopic);
                if (defaultTopicConfig != null) {
                    // If the default topic is TBW102 and autoCreateTopicEnable is false, drop its
                    // INHERIT permission so that no new topic can be created from it
                    if (defaultTopic.equals(TopicValidator.AUTO_CREATE_TOPIC_KEY_TOPIC)) {
                        if (!this.brokerController.getBrokerConfig().isAutoCreateTopicEnable()) {
                            defaultTopicConfig.setPerm(PermName.PERM_READ | PermName.PERM_WRITE);
                        }
                    }

                    // Whether the defaultTopicConfig attributes may be inherited
                    if (PermName.isInherited(defaultTopicConfig.getPerm())) {
                        topicConfig = new TopicConfig(topic);

                        int queueNums =
                            clientDefaultTopicQueueNums > defaultTopicConfig.getWriteQueueNums() ? defaultTopicConfig
                                .getWriteQueueNums() : clientDefaultTopicQueueNums;

                        if (queueNums < 0) {
                            queueNums = 0;
                        }

                        topicConfig.setReadQueueNums(queueNums);
                        topicConfig.setWriteQueueNums(queueNums);
                        int perm = defaultTopicConfig.getPerm();
                        perm &= ~PermName.PERM_INHERIT;
                        topicConfig.setPerm(perm);
                        topicConfig.setTopicSysFlag(topicSysFlag);
                        topicConfig.setTopicFilterType(defaultTopicConfig.getTopicFilterType());
                    } else {
                        log.warn("Create new topic failed, because the default topic[{}] has no perm [{}] producer:[{}]",
                            defaultTopic, defaultTopicConfig.getPerm(), remoteAddress);
                    }
                } else {
                    log.warn("Create new topic failed, because the default topic[{}] not exist. producer:[{}]",
                        defaultTopic, remoteAddress);
                }

                if (topicConfig != null) {
                    log.info("Create new topic by default topic:[{}] config:[{}] producer:[{}]",
                        defaultTopic, topicConfig, remoteAddress);

                    this.topicConfigTable.put(topic, topicConfig);

                    this.dataVersion.nextVersion();

                    // Mark that a new topic was created
                    createNew = true;

                    // Persist the topic config file
                    this.persist();
                }
            } finally {
                this.lockTopicConfigTable.unlock();
            }
        }
    } catch (InterruptedException e) {
        log.error("createTopicInSendMessageMethod exception", e);
    }

    // If a new topic was created
    if (createNew) {
        // Register all topics with the NameServer
        this.brokerController.registerBrokerAll(false, true, true);
    }

    return topicConfig;
}
```
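Inheriting from the default topic boils down to some bitmask arithmetic on the permission plus a clamp on the queue count. The constants below mirror `PermName` as I understand it (PRIORITY = 8, READ = 4, WRITE = 2, INHERIT = 1). Treat them and the `TopicInheritSketch` class as illustrative.

```java
// Hypothetical sketch of the inheritance rules in createTopicInSendMessageMethod().
final class TopicInheritSketch {
    static final int PERM_PRIORITY = 0x1 << 3;
    static final int PERM_READ = 0x1 << 2;
    static final int PERM_WRITE = 0x1 << 1;
    static final int PERM_INHERIT = 0x1;

    static boolean isInherited(int perm) {
        return (perm & PERM_INHERIT) == PERM_INHERIT;
    }

    // The new topic gets min(client default, default topic's write queues), never negative.
    static int inheritedQueueNums(int clientDefaultTopicQueueNums, int defaultWriteQueueNums) {
        int queueNums = clientDefaultTopicQueueNums > defaultWriteQueueNums
            ? defaultWriteQueueNums : clientDefaultTopicQueueNums;
        return Math.max(queueNums, 0);
    }

    // The new topic copies the default permissions minus INHERIT, so it cannot serve as a template.
    static int inheritedPerm(int defaultPerm) {
        return defaultPerm & ~PERM_INHERIT;
    }
}
```

This is also why turning off `autoCreateTopicEnable` works. Resetting TBW102 to `PERM_READ | PERM_WRITE` clears the INHERIT bit, so `isInherited` fails and no topic is created.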

Asynchronous message storage (default)

DefaultMessageStore

```java
@Override
public CompletableFuture<PutMessageResult> asyncPutMessage(MessageExtBrokerInner msg) {
    // Check whether the store is writable
    PutMessageStatus checkStoreStatus = this.checkStoreStatus();
    if (checkStoreStatus != PutMessageStatus.PUT_OK) {
        return CompletableFuture.completedFuture(new PutMessageResult(checkStoreStatus, null));
    }

    // Validate the message
    PutMessageStatus msgCheckStatus = this.checkMessage(msg);
    if (msgCheckStatus == PutMessageStatus.MESSAGE_ILLEGAL) {
        return CompletableFuture.completedFuture(new PutMessageResult(msgCheckStatus, null));
    }

    long beginTime = this.getSystemClock().now();
    // Write the message to the CommitLog
    CompletableFuture<PutMessageResult> putResultFuture = this.commitLog.asyncPutMessage(msg);

    putResultFuture.thenAccept((result) -> {
        long elapsedTime = this.getSystemClock().now() - beginTime;
        if (elapsedTime > 500) {
            log.warn("putMessage not in lock elapsed time(ms)={}, bodyLength={}", elapsedTime, msg.getBody().length);
        }
        this.storeStatsService.setPutMessageEntireTimeMax(elapsedTime);

        if (null == result || !result.isOk()) {
            this.storeStatsService.getPutMessageFailedTimes().incrementAndGet();
        }
    });

    return putResultFuture;
}
```

Checking whether the store is writable

private PutMessageStatus checkStoreStatus() {
    // The message store has been shut down
    if (this.shutdown) {
        log.warn("message store has shutdown, so putMessage is forbidden");
        return PutMessageStatus.SERVICE_NOT_AVAILABLE;
    }

    // A SLAVE broker does not accept writes (the log text is reused from the branch above)
    if (BrokerRole.SLAVE == this.messageStoreConfig.getBrokerRole()) {
        long value = this.printTimes.getAndIncrement();
        if ((value % 50000) == 0) {
            log.warn("message store has shutdown, so putMessage is forbidden");
        }
        return PutMessageStatus.SERVICE_NOT_AVAILABLE;
    }

    // Reject the write if the store is currently not writable
    if (!this.runningFlags.isWriteable()) {
        long value = this.printTimes.getAndIncrement();
        if ((value % 50000) == 0) {
            log.warn("message store has shutdown, so putMessage is forbidden");
        }
        return PutMessageStatus.SERVICE_NOT_AVAILABLE;
    } else {
        this.printTimes.set(0);
    }

    // The OS page cache is busy
    if (this.isOSPageCacheBusy()) {
        return PutMessageStatus.OS_PAGECACHE_BUSY;
    }
    return PutMessageStatus.PUT_OK;
}

Validating the message

private PutMessageStatus checkMessage(MessageExtBrokerInner msg) {
    // Reject the message if the topic is longer than Byte.MAX_VALUE (127) characters
    if (msg.getTopic().length() > Byte.MAX_VALUE) {
        log.warn("putMessage message topic length too long " + msg.getTopic().length());
        return PutMessageStatus.MESSAGE_ILLEGAL;
    }

    // Reject the message if the properties string is longer than Short.MAX_VALUE (32767) characters
    if (msg.getPropertiesString() != null && msg.getPropertiesString().length() > Short.MAX_VALUE) {
        log.warn("putMessage message properties length too long " + msg.getPropertiesString().length());
        return PutMessageStatus.MESSAGE_ILLEGAL;
    }
    return PutMessageStatus.PUT_OK;
}
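The two limits come from the storage format. doAppend later writes the topic length as a single byte and the properties length as a short. The following is a minimal JDK-only sketch of the same checks, not the RocketMQ class itself. Note that checkMessage compares character counts, while doAppend re-checks the properties length in UTF-8 bytes.

```java
// Minimal sketch of the two length checks in checkMessage (JDK only, not RocketMQ code).
class MessageLimitSketch {
    // The topic length is stored in one byte, so anything above Byte.MAX_VALUE (127) is rejected.
    static boolean topicTooLong(String topic) {
        return topic.length() > Byte.MAX_VALUE;
    }

    // The properties length is stored in a short, so the limit is Short.MAX_VALUE (32767).
    static boolean propertiesTooLong(String properties) {
        return properties != null && properties.length() > Short.MAX_VALUE;
    }
}
```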

CommitLog

Writing the message to the CommitLog

public CompletableFuture<PutMessageResult> asyncPutMessage(final MessageExtBrokerInner msg) {
    // Set the storage time
    msg.setStoreTimestamp(System.currentTimeMillis());
    // Set the message body BODY CRC (consider the most appropriate setting
    // on the client)
    msg.setBodyCRC(UtilAll.crc32(msg.getBody()));
    // Back to Results
    AppendMessageResult result = null;

    StoreStatsService storeStatsService = this.defaultMessageStore.getStoreStatsService();

    String topic = msg.getTopic();
    int queueId = msg.getQueueId();

    final int tranType = MessageSysFlag.getTransactionValue(msg.getSysFlag());
    if (tranType == MessageSysFlag.TRANSACTION_NOT_TYPE
        || tranType == MessageSysFlag.TRANSACTION_COMMIT_TYPE) {
        // Delay Delivery: the message has a delay level greater than 0
        if (msg.getDelayTimeLevel() > 0) {
            if (msg.getDelayTimeLevel() > this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel()) {
                msg.setDelayTimeLevel(this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel());
            }

            topic = TopicValidator.RMQ_SYS_SCHEDULE_TOPIC;
            queueId = ScheduleMessageService.delayLevel2QueueId(msg.getDelayTimeLevel());

            // Backup real topic, queueId
            MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_TOPIC, msg.getTopic());
            MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_QUEUE_ID, String.valueOf(msg.getQueueId()));
            msg.setPropertiesString(MessageDecoder.messageProperties2String(msg.getProperties()));

            msg.setTopic(topic);
            msg.setQueueId(queueId);
        }
    }

    long elapsedTimeInLock = 0;
    MappedFile unlockMappedFile = null;
    // Get the file that was written last
    MappedFile mappedFile = this.mappedFileQueue.getLastMappedFile();

    putMessageLock.lock(); //spin or ReentrantLock ,depending on store config
    try {
        long beginLockTimestamp = this.defaultMessageStore.getSystemClock().now();
        this.beginTimeInLock = beginLockTimestamp;

        // Here settings are stored timestamp, in order to ensure an orderly
        // global: the store time is reset under the lock so it is globally ordered
        msg.setStoreTimestamp(beginLockTimestamp);

        // No file yet, or the last file is full
        if (null == mappedFile || mappedFile.isFull()) {
            // Create a new file. startOffset 0 only matters when the queue is empty;
            // otherwise the new file starts right after the last one
            mappedFile = this.mappedFileQueue.getLastMappedFile(0); // Mark: NewFile may be cause noise
        }
        // Creation failed, e.g. because the disk is full
        if (null == mappedFile) {
            log.error("create mapped file1 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());
            beginTimeInLock = 0;
            return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, null));
        }

        // Append the message to the MappedFile
        result = mappedFile.appendMessage(msg, this.appendMessageCallback);
        switch (result.getStatus()) {
            case PUT_OK:
                break;
            case END_OF_FILE:
                unlockMappedFile = mappedFile;
                // Create a new file, re-write the message
                mappedFile = this.mappedFileQueue.getLastMappedFile(0);
                if (null == mappedFile) {
                    // XXX: warn and notify me
                    log.error("create mapped file2 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());
                    beginTimeInLock = 0;
                    return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, result));
                }
                result = mappedFile.appendMessage(msg, this.appendMessageCallback);
                break;
            case MESSAGE_SIZE_EXCEEDED:
            case PROPERTIES_SIZE_EXCEEDED:
                beginTimeInLock = 0;
                return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.MESSAGE_ILLEGAL, result));
            case UNKNOWN_ERROR:
                beginTimeInLock = 0;
                return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result));
            default:
                beginTimeInLock = 0;
                return CompletableFuture.completedFuture(new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result));
        }

        elapsedTimeInLock = this.defaultMessageStore.getSystemClock().now() - beginLockTimestamp;
        beginTimeInLock = 0;
    } finally {
        putMessageLock.unlock();
    }

    if (elapsedTimeInLock > 500) {
        log.warn("[NOTIFYME]putMessage in lock cost time(ms)={}, bodyLength={} AppendMessageResult={}", elapsedTimeInLock, msg.getBody().length, result);
    }

    if (null != unlockMappedFile && this.defaultMessageStore.getMessageStoreConfig().isWarmMapedFileEnable()) {
        this.defaultMessageStore.unlockMappedFile(unlockMappedFile);
    }

    PutMessageResult putMessageResult = new PutMessageResult(PutMessageStatus.PUT_OK, result);

    // Statistics
    storeStatsService.getSinglePutMessageTopicTimesTotal(msg.getTopic()).incrementAndGet();
    storeStatsService.getSinglePutMessageTopicSizeTotal(topic).addAndGet(result.getWroteBytes());

    // Submit the flush request and the HA replication request, then merge their results
    CompletableFuture<PutMessageStatus> flushResultFuture = submitFlushRequest(result, putMessageResult, msg);
    CompletableFuture<PutMessageStatus> replicaResultFuture = submitReplicaRequest(result, putMessageResult, msg);
    return flushResultFuture.thenCombine(replicaResultFuture, (flushStatus, replicaStatus) -> {
        if (flushStatus != PutMessageStatus.PUT_OK) {
            putMessageResult.setPutMessageStatus(PutMessageStatus.FLUSH_DISK_TIMEOUT);
        }
        if (replicaStatus != PutMessageStatus.PUT_OK) {
            putMessageResult.setPutMessageStatus(replicaStatus);
        }
        return putMessageResult;
    });
}
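The end of asyncPutMessage merges two independent futures with thenCombine. The sketch below reproduces only that merging rule. A simplified Status enum stands in for PutMessageStatus, which is an assumption made for illustration. A flush failure becomes FLUSH_DISK_TIMEOUT. A replication failure is applied second, so it overrides the flush failure.

```java
import java.util.concurrent.CompletableFuture;

// Sketch of the result-merging rule at the end of CommitLog.asyncPutMessage.
// Status is a simplified stand-in for PutMessageStatus.
class CombineSketch {
    enum Status { PUT_OK, FLUSH_DISK_TIMEOUT, FLUSH_SLAVE_TIMEOUT }

    static CompletableFuture<Status> combine(CompletableFuture<Status> flush,
                                             CompletableFuture<Status> replica) {
        // thenCombine runs the function once both futures have completed
        return flush.thenCombine(replica, (flushStatus, replicaStatus) -> {
            Status result = Status.PUT_OK;
            if (flushStatus != Status.PUT_OK) {
                result = Status.FLUSH_DISK_TIMEOUT;
            }
            // Applied last, so a replication failure wins over a flush failure
            if (replicaStatus != Status.PUT_OK) {
                result = replicaStatus;
            }
            return result;
        });
    }
}
```

Neither future blocks the thread that appended the message. The producer's response is completed only when both the flush and the replication report back.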

MappedFileQueue

Getting the last file

public MappedFile getLastMappedFile() {
    MappedFile mappedFileLast = null;

    while (!this.mappedFiles.isEmpty()) {
        try {
            // Take the file at the tail of the list
            mappedFileLast = this.mappedFiles.get(this.mappedFiles.size() - 1);
            break;
        } catch (IndexOutOfBoundsException e) {
            // The list shrank between size() and get(); try again
            //continue;
        } catch (Exception e) {
            log.error("getLastMappedFile has exception.", e);
            break;
        }
    }

    return mappedFileLast;
}

Creating a file

public MappedFile getLastMappedFile(final long startOffset) {
    return getLastMappedFile(startOffset, true);
}

public MappedFile getLastMappedFile(final long startOffset, boolean needCreate) {
    long createOffset = -1;
    MappedFile mappedFileLast = getLastMappedFile();

    // The start offset of a new file is always a multiple of mappedFileSize
    if (mappedFileLast == null) {
        createOffset = startOffset - (startOffset % this.mappedFileSize);
    }

    if (mappedFileLast != null && mappedFileLast.isFull()) {
        createOffset = mappedFileLast.getFileFromOffset() + this.mappedFileSize;
    }

    if (createOffset != -1 && needCreate) {
        // The file name is the file's start offset
        String nextFilePath = this.storePath + File.separator + UtilAll.offset2FileName(createOffset);
        String nextNextFilePath = this.storePath + File.separator
            + UtilAll.offset2FileName(createOffset + this.mappedFileSize);
        MappedFile mappedFile = null;

        if (this.allocateMappedFileService != null) {
            // Submit allocation requests for this file and the next one to AllocateMappedFileService
            mappedFile = this.allocateMappedFileService.putRequestAndReturnMappedFile(nextFilePath,
                nextNextFilePath, this.mappedFileSize);
        } else {
            try {
                mappedFile = new MappedFile(nextFilePath, this.mappedFileSize);
            } catch (IOException e) {
                log.error("create mappedFile exception", e);
            }
        }

        if (mappedFile != null) {
            // If the mappedFiles list is still empty,
            if (this.mappedFiles.isEmpty()) {
                // mark this file as the first one created in this MappedFileQueue
                mappedFile.setFirstCreateInQueue(true);
            }
            // Add it to the mappedFiles list
            this.mappedFiles.add(mappedFile);
        }

        return mappedFile;
    }

    return mappedFileLast;
}
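The offset rules above can be checked with a small sketch. This is a hypothetical helper, not the MappedFileQueue API. A negative lastFromOffset stands for "no file yet". The 20-digit zero-padded name is assumed to match what UtilAll.offset2FileName produces, so with 1 GB files the second CommitLog file would be named 00000000001073741824.

```java
// Hypothetical sketch of how getLastMappedFile(startOffset) chooses the next file's start offset.
// lastFromOffset < 0 means "no file yet". The name format is assumed to mirror UtilAll.offset2FileName.
class FileOffsetSketch {
    static long nextCreateOffset(long startOffset, long lastFromOffset, boolean lastIsFull, int fileSize) {
        if (lastFromOffset < 0) {
            // No file yet: align startOffset down to a multiple of the file size
            return startOffset - (startOffset % fileSize);
        }
        if (lastIsFull) {
            // The next file begins exactly where the last one ends
            return lastFromOffset + fileSize;
        }
        return -1; // the last file still has room, so nothing is created
    }

    // Zero-padded to 20 digits so that file names sort in offset order
    static String offset2FileName(long offset) {
        return String.format("%020d", offset);
    }
}
```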

Appending the message

MappedFile

public AppendMessageResult appendMessage(final MessageExtBrokerInner msg, final AppendMessageCallback cb) {
    return appendMessagesInner(msg, cb);
}

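Pay attention to this expression in appendMessagesInner: writeBuffer != null ? writeBuffer.slice() : this.mappedByteBuffer.slice();

It chooses between two buffers:

writeBuffer: an off-heap (direct) ByteBuffer taken from the transient store pool. If it is not null, data is first written into this buffer and later committed to the underlying file of the MappedFile.

Note: writeBuffer is non-null only when transientStorePoolEnable is true.

mappedByteBuffer: the memory-mapped buffer of the physical file.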

// Append the message to the MappedFile
public AppendMessageResult appendMessagesInner(final MessageExt messageExt, final AppendMessageCallback cb) {
    assert messageExt != null;
    assert cb != null;

    // Current write position in the file
    int currentPos = this.wrotePosition.get();

    // Only write while the write position is still inside the file
    if (currentPos < this.fileSize) {
        // slice() creates a view that shares memory with the MappedFile's buffer but has its own position
        ByteBuffer byteBuffer = writeBuffer != null ? writeBuffer.slice() : this.mappedByteBuffer.slice();
        // Move the view to the current write position
        byteBuffer.position(currentPos);
        AppendMessageResult result;
        if (messageExt instanceof MessageExtBrokerInner) {
            // Serialize and write a single message
            result = cb.doAppend(this.getFileFromOffset(), byteBuffer, this.fileSize - currentPos, (MessageExtBrokerInner) messageExt);
        } else if (messageExt instanceof MessageExtBatch) {
            result = cb.doAppend(this.getFileFromOffset(), byteBuffer, this.fileSize - currentPos, (MessageExtBatch) messageExt);
        } else {
            return new AppendMessageResult(AppendMessageStatus.UNKNOWN_ERROR);
        }
        // Advance the write pointer of this file
        this.wrotePosition.addAndGet(result.getWroteBytes());
        // Record the store timestamp of the last write
        this.storeTimestamp = result.getStoreTimestamp();
        return result;
    }
    // The file is already full: log and return UNKNOWN_ERROR (no exception is thrown)
    log.error("MappedFile.appendMessage return null, wrotePosition: {} fileSize: {}", currentPos, this.fileSize);
    return new AppendMessageResult(AppendMessageStatus.UNKNOWN_ERROR);
}
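The slice() behavior can be seen with plain JDK buffers. This sketch is not RocketMQ code. It writes through a slice positioned at a hypothetical wrotePosition. The bytes land in the shared buffer, while the shared buffer's own position does not move. That is why appendMessagesInner can position its view freely. The shared buffer is assumed to sit at position 0, since slice() starts at the current position.

```java
import java.nio.ByteBuffer;

// JDK-only illustration of slice(): same memory, independent position and limit.
class SliceSketch {
    // Writes one byte at wrotePosition through a slice and returns the shared buffer.
    static ByteBuffer writeThroughSlice(ByteBuffer shared, int wrotePosition, byte value) {
        ByteBuffer view = shared.slice(); // the view starts at shared's current position (assumed 0)
        view.position(wrotePosition);      // moves only the view's position
        view.put(value);                   // the write is visible through shared as well
        return shared;
    }
}
```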

CommitLog.DefaultAppendMessageCallback

public AppendMessageResult doAppend(final long fileFromOffset, final ByteBuffer byteBuffer, final int maxBlank,
    final MessageExtBrokerInner msgInner) {
    // STORETIMESTAMP + STOREHOSTADDRESS + OFFSET <br>

    // PHY OFFSET: the message's global physical offset in the CommitLog
    // (start offset of this file + current position inside it)
    long wroteOffset = fileFromOffset + byteBuffer.position();

    int sysflag = msgInner.getSysFlag();

    int bornHostLength = (sysflag & MessageSysFlag.BORNHOST_V6_FLAG) == 0 ? 4 + 4 : 16 + 4;
    int storeHostLength = (sysflag & MessageSysFlag.STOREHOSTADDRESS_V6_FLAG) == 0 ? 4 + 4 : 16 + 4;
    ByteBuffer bornHostHolder = ByteBuffer.allocate(bornHostLength);
    ByteBuffer storeHostHolder = ByteBuffer.allocate(storeHostLength);

    // Reset the holder buffer before use
    this.resetByteBuffer(storeHostHolder, storeHostLength);
    String msgId;
    // Globally unique message ID (IPv4): 4-byte IP + 4-byte port + 8-byte physical offset
    if ((sysflag & MessageSysFlag.STOREHOSTADDRESS_V6_FLAG) == 0) {
        msgId = MessageDecoder.createMessageId(this.msgIdMemory, msgInner.getStoreHostBytes(storeHostHolder), wroteOffset);
    } else {
        msgId = MessageDecoder.createMessageId(this.msgIdV6Memory, msgInner.getStoreHostBytes(storeHostHolder), wroteOffset);
    }

    // Record ConsumeQueue information
    keyBuilder.setLength(0);
    keyBuilder.append(msgInner.getTopic());
    keyBuilder.append('-');
    keyBuilder.append(msgInner.getQueueId());
    // The key has the form topicName-queueId
    String key = keyBuilder.toString();
    // Look up the next logical offset of that consume queue
    Long queueOffset = CommitLog.this.topicQueueTable.get(key);
    if (null == queueOffset) {
        queueOffset = 0L;
        // topicQueueTable holds the next offset to write for every consume queue
        CommitLog.this.topicQueueTable.put(key, queueOffset);
    }

    // Transaction messages that require special handling
    final int tranType = MessageSysFlag.getTransactionValue(msgInner.getSysFlag());
    switch (tranType) {
        // Prepared and Rollback message is not consumed, will not enter the
        // consumer queue
        case MessageSysFlag.TRANSACTION_PREPARED_TYPE:
        case MessageSysFlag.TRANSACTION_ROLLBACK_TYPE:
            queueOffset = 0L;
            break;
        case MessageSysFlag.TRANSACTION_NOT_TYPE:
        case MessageSysFlag.TRANSACTION_COMMIT_TYPE:
        default:
            break;
    }

    /**
     * Serialize message
     */
    final byte[] propertiesData =
        msgInner.getPropertiesString() == null ? null : msgInner.getPropertiesString().getBytes(MessageDecoder.CHARSET_UTF8);

    final int propertiesLength = propertiesData == null ? 0 : propertiesData.length;

    if (propertiesLength > Short.MAX_VALUE) {
        log.warn("putMessage message properties length too long. length={}", propertiesData.length);
        return new AppendMessageResult(AppendMessageStatus.PROPERTIES_SIZE_EXCEEDED);
    }

    final byte[] topicData = msgInner.getTopic().getBytes(MessageDecoder.CHARSET_UTF8);
    final int topicLength = topicData.length;

    final int bodyLength = msgInner.getBody() == null ? 0 : msgInner.getBody().length;

    // Total length, from the body, topic and properties lengths plus the fixed storage layout
    final int msgLen = calMsgLength(msgInner.getSysFlag(), bodyLength, topicLength, propertiesLength);

    // Exceeds the maximum message size
    if (msgLen > this.maxMessageSize) {
        CommitLog.log.warn("message size exceeded, msg total size: " + msgLen + ", msg body size: " + bodyLength
            + ", maxMessageSize: " + this.maxMessageSize);
        return new AppendMessageResult(AppendMessageStatus.MESSAGE_SIZE_EXCEEDED);
    }

    // Determines whether there is sufficient free space.
    // If msgLen + END_FILE_MIN_BLANK_LENGTH exceeds the free space left in this CommitLog file,
    // the file is closed with a blank marker and the broker stores the message in a new file.
    if ((msgLen + END_FILE_MIN_BLANK_LENGTH) > maxBlank) {
        this.resetByteBuffer(this.msgStoreItemMemory, maxBlank);
        // Every CommitLog file keeps at least 8 free bytes at its end:
        // the first 4 bytes store the remaining space,
        // the next 4 bytes store the magic code CommitLog.BLANK_MAGIC_CODE.
        // 1 TOTALSIZE
        this.msgStoreItemMemory.putInt(maxBlank);
        // 2 MAGICCODE
        this.msgStoreItemMemory.putInt(CommitLog.BLANK_MAGIC_CODE);
        // 3 The remaining space may be any value
        // Here the length of the specially set maxBlank
        final long beginTimeMills = CommitLog.this.defaultMessageStore.now();
        byteBuffer.put(this.msgStoreItemMemory.array(), 0, maxBlank);
        // Return END_OF_FILE so that the caller creates a new file and retries
        return new AppendMessageResult(AppendMessageStatus.END_OF_FILE, wroteOffset, maxBlank, msgId, msgInner.getStoreTimestamp(),
            queueOffset, CommitLog.this.defaultMessageStore.now() - beginTimeMills);
    }

    // Initialization of storage space
    this.resetByteBuffer(msgStoreItemMemory, msgLen);
    // 1 TOTALSIZE
    this.msgStoreItemMemory.putInt(msgLen);
    // 2 MAGICCODE
    this.msgStoreItemMemory.putInt(CommitLog.MESSAGE_MAGIC_CODE);
    // 3 BODYCRC
    this.msgStoreItemMemory.putInt(msgInner.getBodyCRC());
    // 4 QUEUEID
    this.msgStoreItemMemory.putInt(msgInner.getQueueId());
    // 5 FLAG
    this.msgStoreItemMemory.putInt(msgInner.getFlag());
    // 6 QUEUEOFFSET
    this.msgStoreItemMemory.putLong(queueOffset);
    // 7 PHYSICALOFFSET
    this.msgStoreItemMemory.putLong(fileFromOffset + byteBuffer.position());
    // 8 SYSFLAG
    this.msgStoreItemMemory.putInt(msgInner.getSysFlag());
    // 9 BORNTIMESTAMP
    this.msgStoreItemMemory.putLong(msgInner.getBornTimestamp());
    // 10 BORNHOST
    this.resetByteBuffer(bornHostHolder, bornHostLength);
    this.msgStoreItemMemory.put(msgInner.getBornHostBytes(bornHostHolder));
    // 11 STORETIMESTAMP
    this.msgStoreItemMemory.putLong(msgInner.getStoreTimestamp());
    // 12 STOREHOSTADDRESS
    this.resetByteBuffer(storeHostHolder, storeHostLength);
    this.msgStoreItemMemory.put(msgInner.getStoreHostBytes(storeHostHolder));
    // 13 RECONSUMETIMES
    this.msgStoreItemMemory.putInt(msgInner.getReconsumeTimes());
    // 14 Prepared Transaction Offset
    this.msgStoreItemMemory.putLong(msgInner.getPreparedTransactionOffset());
    // 15 BODY
    this.msgStoreItemMemory.putInt(bodyLength);
    if (bodyLength > 0)
        this.msgStoreItemMemory.put(msgInner.getBody());
    // 16 TOPIC
    this.msgStoreItemMemory.put((byte) topicLength);
    this.msgStoreItemMemory.put(topicData);
    // 17 PROPERTIES
    this.msgStoreItemMemory.putShort((short) propertiesLength);
    if (propertiesLength > 0)
        this.msgStoreItemMemory.put(propertiesData);

    final long beginTimeMills = CommitLog.this.defaultMessageStore.now();
    // Write messages to the queue buffer
    byteBuffer.put(this.msgStoreItemMemory.array(), 0, msgLen);

    AppendMessageResult result = new AppendMessageResult(AppendMessageStatus.PUT_OK, wroteOffset, msgLen, msgId,
        msgInner.getStoreTimestamp(), queueOffset, CommitLog.this.defaultMessageStore.now() - beginTimeMills);

    switch (tranType) {
        case MessageSysFlag.TRANSACTION_PREPARED_TYPE:
        case MessageSysFlag.TRANSACTION_ROLLBACK_TYPE:
            break;
        case MessageSysFlag.TRANSACTION_NOT_TYPE:
        case MessageSysFlag.TRANSACTION_COMMIT_TYPE:
            // The next update ConsumeQueue information:
            // advance the logical offset of this consume queue
            CommitLog.this.topicQueueTable.put(key, ++queueOffset);
            break;
        default:
            break;
    }
    return result;
}
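The IPv4 message ID described in doAppend can be reproduced with a few lines of JDK code. The ID is 16 bytes (IP, port, physical offset) rendered as 32 hex characters. This is an illustration under assumptions, not MessageDecoder.createMessageId. The upper-case hex encoding is assumed to match UtilAll.bytes2string.

```java
import java.nio.ByteBuffer;

// Illustration of the IPv4 msgId layout: 4-byte IP + 4-byte port + 8-byte physical offset,
// hex-encoded in upper case (assumed to match UtilAll.bytes2string).
class MsgIdSketch {
    static String createMessageId(byte[] ipv4, int port, long wroteOffset) {
        ByteBuffer buf = ByteBuffer.allocate(16);
        buf.put(ipv4, 0, 4);     // store host IP
        buf.putInt(port);        // store host port
        buf.putLong(wroteOffset); // physical offset in the CommitLog
        StringBuilder sb = new StringBuilder(32);
        for (byte b : buf.array()) {
            sb.append(String.format("%02X", b & 0xFF));
        }
        return sb.toString();
    }
}
```

Because the physical offset is embedded in the ID, a broker can locate a message directly from its msgId without consulting any index.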

Calculating the message length

protected static int calMsgLength(int sysFlag, int bodyLength, int topicLength, int propertiesLength) {
    int bornhostLength = (sysFlag & MessageSysFlag.BORNHOST_V6_FLAG) == 0 ? 8 : 20;
    int storehostAddressLength = (sysFlag & MessageSysFlag.STOREHOSTADDRESS_V6_FLAG) == 0 ? 8 : 20;
    final int msgLen = 4 //TOTALSIZE: total length of this entry
        + 4 //MAGICCODE: fixed value 0xdaa320a7
        + 4 //BODYCRC: CRC of the message body
        + 4 //QUEUEID: consume queue ID
        + 4 //FLAG: message flag, not interpreted by RocketMQ, left to the application
        + 8 //QUEUEOFFSET: offset of the message in its consume queue
        + 8 //PHYSICALOFFSET: offset of the message in the CommitLog
        + 4 //SYSFLAG: system flags, e.g. compressed or transactional
        + 8 //BORNTIMESTAMP: time the producer called the send API
        + bornhostLength //BORNHOST: producer IP and port
        + 8 //STORETIMESTAMP: store timestamp
        + storehostAddressLength //STOREHOSTADDRESS: broker IP and port
        + 4 //RECONSUMETIMES: number of retries
        + 8 //Prepared Transaction Offset: physical offset of the transaction message
        + 4 + (bodyLength > 0 ? bodyLength : 0) //BODY: 4-byte length + body
        + 1 + topicLength //TOPIC: 1-byte length + topic
        + 2 + (propertiesLength > 0 ? propertiesLength : 0) //PROPERTIES: 2-byte length + properties
        + 0;
    return msgLen;
}
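A quick worked check of the formula: with IPv4 born and store hosts, the fixed overhead is 91 bytes, and it rises to 115 bytes when both addresses are IPv6. The sketch below recomputes the same sum. To keep it self-contained, the IPv6 choice is passed as two booleans instead of sysFlag bits.

```java
// Recomputation of calMsgLength. IPv6 flags are passed as booleans instead of sysFlag bits.
class MsgLengthSketch {
    static int calMsgLength(boolean bornV6, boolean storeV6, int bodyLength, int topicLength, int propertiesLength) {
        int bornhostLength = bornV6 ? 20 : 8;
        int storehostAddressLength = storeV6 ? 20 : 8;
        return 4 + 4 + 4 + 4 + 4          // TOTALSIZE, MAGICCODE, BODYCRC, QUEUEID, FLAG
            + 8 + 8                       // QUEUEOFFSET, PHYSICALOFFSET
            + 4 + 8                       // SYSFLAG, BORNTIMESTAMP
            + bornhostLength              // BORNHOST
            + 8                           // STORETIMESTAMP
            + storehostAddressLength      // STOREHOSTADDRESS
            + 4 + 8                       // RECONSUMETIMES, Prepared Transaction Offset
            + 4 + Math.max(bodyLength, 0) // BODY length field + body
            + 1 + topicLength             // TOPIC length field + topic
            + 2 + Math.max(propertiesLength, 0); // PROPERTIES length field + properties
    }
}
```

For example, a 100-byte body on the 9-byte topic "TopicTest" with no properties occupies 91 + 100 + 9 = 200 bytes in the CommitLog.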

Synchronous message storage

/**
 * Store a message synchronously
 */
@Override
public PutMessageResult putMessage(MessageExtBrokerInner msg) {
    // Check whether the store accepts writes
    PutMessageStatus checkStoreStatus = this.checkStoreStatus();
    if (checkStoreStatus != PutMessageStatus.PUT_OK) {
        return new PutMessageResult(checkStoreStatus, null);
    }

    // Validate the topic and properties lengths
    PutMessageStatus msgCheckStatus = this.checkMessage(msg);
    if (msgCheckStatus == PutMessageStatus.MESSAGE_ILLEGAL) {
        return new PutMessageResult(msgCheckStatus, null);
    }

    long beginTime = this.getSystemClock().now();
    // Store the message
    PutMessageResult result = this.commitLog.putMessage(msg);
    long elapsedTime = this.getSystemClock().now() - beginTime;
    if (elapsedTime > 500) {
        log.warn("not in lock elapsed time(ms)={}, bodyLength={}", elapsedTime, msg.getBody().length);
    }
    this.storeStatsService.setPutMessageEntireTimeMax(elapsedTime);

    if (null == result || !result.isOk()) {
        this.storeStatsService.getPutMessageFailedTimes().incrementAndGet();
    }

    return result;
}

Storing the message

public PutMessageResult putMessage(final MessageExtBrokerInner msg) {
    // Set the storage time
    msg.setStoreTimestamp(System.currentTimeMillis());
    // Set the message body BODY CRC (consider the most appropriate setting
    // on the client)
    msg.setBodyCRC(UtilAll.crc32(msg.getBody()));
    // Back to Results
    AppendMessageResult result = null;

    StoreStatsService storeStatsService = this.defaultMessageStore.getStoreStatsService();

    String topic = msg.getTopic();
    int queueId = msg.getQueueId();

    final int tranType = MessageSysFlag.getTransactionValue(msg.getSysFlag());
    if (tranType == MessageSysFlag.TRANSACTION_NOT_TYPE
        || tranType == MessageSysFlag.TRANSACTION_COMMIT_TYPE) {
        // Delay Delivery: the message has a delay level greater than 0
        if (msg.getDelayTimeLevel() > 0) {
            if (msg.getDelayTimeLevel() > this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel()) {
                msg.setDelayTimeLevel(this.defaultMessageStore.getScheduleMessageService().getMaxDelayLevel());
            }

            topic = TopicValidator.RMQ_SYS_SCHEDULE_TOPIC;
            queueId = ScheduleMessageService.delayLevel2QueueId(msg.getDelayTimeLevel());

            // Backup real topic, queueId
            MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_TOPIC, msg.getTopic());
            MessageAccessor.putProperty(msg, MessageConst.PROPERTY_REAL_QUEUE_ID, String.valueOf(msg.getQueueId()));
            msg.setPropertiesString(MessageDecoder.messageProperties2String(msg.getProperties()));

            // Replace the original topic and queue with the schedule topic and its queue ID;
            // this is used by the retry mechanism and by scheduled (delayed) messages
            msg.setTopic(topic);
            msg.setQueueId(queueId);
        }
    }

    InetSocketAddress bornSocketAddress = (InetSocketAddress) msg.getBornHost();
    if (bornSocketAddress.getAddress() instanceof Inet6Address) {
        msg.setBornHostV6Flag();
    }

    InetSocketAddress storeSocketAddress = (InetSocketAddress) msg.getStoreHost();
    if (storeSocketAddress.getAddress() instanceof Inet6Address) {
        msg.setStoreHostAddressV6Flag();
    }

    long elapsedTimeInLock = 0;

    MappedFile unlockMappedFile = null;
    // Get the CommitLog file currently being written. mappedFileQueue stands for the
    // ${ROCKET_HOME}/store/commitlog directory and each MappedFile for one file in it.
    // A file is named after its start offset, i.e. the physical offset of its first message.
    MappedFile mappedFile = this.mappedFileQueue.getLastMappedFile();

    // Writes to the CommitLog are serialized under this lock, which keeps them ordered and safe.
    // The lock type depends on the store config
    putMessageLock.lock(); //spin or ReentrantLock ,depending on store config
    try {
        long beginLockTimestamp = this.defaultMessageStore.getSystemClock().now();
        this.beginTimeInLock = beginLockTimestamp;

        // Here settings are stored timestamp, in order to ensure an orderly
        // global: the store time is reset under the lock so it is globally ordered
        msg.setStoreTimestamp(beginLockTimestamp);

        // No file yet, or the last file is full: create a new CommitLog file.
        // startOffset 0 only matters when the queue is empty; otherwise the new file follows the last one
        if (null == mappedFile || mappedFile.isFull()) {
            mappedFile = this.mappedFileQueue.getLastMappedFile(0); // Mark: NewFile may be cause noise
        }
        // Creation failed, e.g. because the disk is full
        if (null == mappedFile) {
            log.error("create mapped file1 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());
            beginTimeInLock = 0;
            return new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, null);
        }

        result = mappedFile.appendMessage(msg, this.appendMessageCallback);
        switch (result.getStatus()) {
            case PUT_OK:
                break;
            case END_OF_FILE:
                unlockMappedFile = mappedFile;
                // Create a new file, re-write the message
                mappedFile = this.mappedFileQueue.getLastMappedFile(0);
                if (null == mappedFile) {
                    // XXX: warn and notify me
                    log.error("create mapped file2 error, topic: " + msg.getTopic() + " clientAddr: " + msg.getBornHostString());
                    beginTimeInLock = 0;
                    return new PutMessageResult(PutMessageStatus.CREATE_MAPEDFILE_FAILED, result);
                }
                result = mappedFile.appendMessage(msg, this.appendMessageCallback);
                break;
            case MESSAGE_SIZE_EXCEEDED:
            case PROPERTIES_SIZE_EXCEEDED:
                beginTimeInLock = 0;
                return new PutMessageResult(PutMessageStatus.MESSAGE_ILLEGAL, result);
            case UNKNOWN_ERROR:
                beginTimeInLock = 0;
                return new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result);
            default:
                beginTimeInLock = 0;
                return new PutMessageResult(PutMessageStatus.UNKNOWN_ERROR, result);
        }

        elapsedTimeInLock = this.defaultMessageStore.getSystemClock().now() - beginLockTimestamp;
        beginTimeInLock = 0;
    } finally {
        putMessageLock.unlock();
    }

    if (elapsedTimeInLock > 500) {
        log.warn("[NOTIFYME]putMessage in lock cost time(ms)={}, bodyLength={} AppendMessageResult={}", elapsedTimeInLock, msg.getBody().length, result);
    }

    if (null != unlockMappedFile && this.defaultMessageStore.getMessageStoreConfig().isWarmMapedFileEnable()) {
        this.defaultMessageStore.unlockMappedFile(unlockMappedFile);
    }

    PutMessageResult putMessageResult = new PutMessageResult(PutMessageStatus.PUT_OK, result);

    // Statistics
    storeStatsService.getSinglePutMessageTopicTimesTotal(msg.getTopic()).incrementAndGet();
    storeStatsService.getSinglePutMessageTopicSizeTotal(topic).addAndGet(result.getWroteBytes());

    // Persist the in-memory data to disk, synchronously or asynchronously depending on the flush type
    handleDiskFlush(result, putMessageResult, msg);
    // HA: master-slave replication
    handleHA(result, putMessageResult, msg);

    return putMessageResult;
}
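The delay-level rewrite at the top of putMessage can be summarized in a self-contained sketch. This is a hypothetical model, not RocketMQ code. The string values "SCHEDULE_TOPIC_XXXX", "REAL_TOPIC" and "REAL_QID" are written from memory of TopicValidator.RMQ_SYS_SCHEDULE_TOPIC and MessageConst and should be treated as assumptions. The mapping queueId = level - 1 is assumed to match ScheduleMessageService.delayLevel2QueueId. maxDelayLevel is passed in; the default delay-level table has 18 levels, as far as we know. In the real code this rewrite only applies to non-transactional and commit messages.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of the delay-level rewrite in CommitLog.putMessage.
// Topic and property names are assumptions, not imported RocketMQ constants.
class DelayRewriteSketch {
    static final String SCHEDULE_TOPIC = "SCHEDULE_TOPIC_XXXX";

    static final class Msg {
        String topic;
        int queueId;
        int delayLevel;
        final Map<String, String> properties = new HashMap<>();
    }

    static void rewrite(Msg msg, int maxDelayLevel) {
        if (msg.delayLevel <= 0) {
            return; // not a delayed message, nothing to do
        }
        if (msg.delayLevel > maxDelayLevel) {
            msg.delayLevel = maxDelayLevel; // clamp to the highest configured level
        }
        // Back up the real destination so it can be restored when the delay expires
        msg.properties.put("REAL_TOPIC", msg.topic);
        msg.properties.put("REAL_QID", String.valueOf(msg.queueId));
        msg.topic = SCHEDULE_TOPIC;
        msg.queueId = msg.delayLevel - 1; // one schedule queue per delay level (assumed mapping)
    }
}
```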

CommitLog flushing

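The flush mode is set with flushDiskType in the broker configuration file. The possible values are ASYNC_FLUSH (asynchronous flush) and SYNC_FLUSH (synchronous flush). The default is asynchronous flush.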

Index files are not flushed on a timer. Instead, each time an index file is updated, the previous changes are flushed to disk.

CommitLog

/**
 * Flush to disk
 */
public void handleDiskFlush(AppendMessageResult result, PutMessageResult putMessageResult, MessageExt messageExt) {
    // Synchronization flush: is the flush type SYNC_FLUSH?
    if (FlushDiskType.SYNC_FLUSH == this.defaultMessageStore.getMessageStoreConfig().getFlushDiskType()) {
        final GroupCommitService service = (GroupCommitService) this.flushCommitLogService;
        if (messageExt.isWaitStoreMsgOK()) {
            // Build a GroupCommitRequest covering everything up to the end of this message
            GroupCommitRequest request = new GroupCommitRequest(result.getWroteOffset() + result.getWroteBytes());
            // Hand it to the GroupCommitService
            service.putRequest(request);
            CompletableFuture<PutMessageStatus> flushOkFuture = request.future();
            PutMessageStatus flushStatus = null;
            try {
                // Wait for the flush to finish; on timeout a flush error is reported
                flushStatus = flushOkFuture.get(this.defaultMessageStore.getMessageStoreConfig().getSyncFlushTimeout(),
                    TimeUnit.MILLISECONDS);
            } catch (InterruptedException | ExecutionException | TimeoutException e) {
                //flushOK=false;
            }
            if (flushStatus != PutMessageStatus.PUT_OK) {
                log.error("do groupcommit, wait for flush failed, topic: " + messageExt.getTopic() + " tags: " + messageExt.getTags()
                    + " client address: " + messageExt.getBornHostString());
                putMessageResult.setPutMessageStatus(PutMessageStatus.FLUSH_DISK_TIMEOUT);
            }
        } else {
            // The producer does not wait for the flush: just wake the service thread if it is waiting
            service.wakeup();
        }
    }
    // Asynchronous flush
    else {
        /*
         * If transientStorePoolEnable is true, RocketMQ allocates a separate off-heap buffer
         * of the same size as the target physical file (commitlog). The buffer is memory-locked
         * so that it is never swapped out to virtual memory. Messages are first appended to the
         * off-heap buffer, then committed to the memory mapped onto the physical file, and
         * finally flushed to disk.
         * If transientStorePoolEnable is false, messages are appended directly to the memory
         * mapped onto the physical file and then flushed to disk.
         */
        if (!this.defaultMessageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {
            flushCommitLogService.wakeup();
        } else {
            commitLogService.wakeup();
        }
    }
}
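The producer-side wait in the synchronous branch can be isolated in a small sketch. The producer blocks on the request's future for at most syncFlushTimeout milliseconds, and any failure becomes a flush timeout. Status is a simplified stand-in for PutMessageStatus. Unlike the original, which swallows all three exceptions, this sketch restores the interrupt flag.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Sketch of the bounded wait in handleDiskFlush. Status stands in for PutMessageStatus.
class SyncFlushWaitSketch {
    enum Status { PUT_OK, FLUSH_DISK_TIMEOUT }

    static Status await(CompletableFuture<Status> flushOkFuture, long syncFlushTimeoutMs) {
        Status flushStatus = null;
        try {
            flushStatus = flushOkFuture.get(syncFlushTimeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt visible to callers
        } catch (ExecutionException | TimeoutException e) {
            // flushStatus stays null, which is reported as a timeout below
        }
        return flushStatus == Status.PUT_OK ? Status.PUT_OK : Status.FLUSH_DISK_TIMEOUT;
    }
}
```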

Synchronous flush

Submitting a flush request

CommitLog.GroupCommitService

public synchronized void putRequest(final GroupCommitRequest request) {
    synchronized (this.requestsWrite) {
        // Producer threads add their sync flush requests to the GroupCommitService write list
        this.requestsWrite.add(request);
    }
    // Wake the GroupCommitService thread if it is waiting
    this.wakeup();
}

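The producer thread then waits for the GroupCommitService thread to process the request, for at most syncFlushTimeout (5 s by default).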

GroupCommitService

public void run() {
    CommitLog.log.info(this.getServiceName() + " service started");

    while (!this.isStopped()) {
        try {
            // Wait up to 10 ms or until woken up; when the wait ends, onWaitEnd() swaps the request lists
            this.waitForRunning(10);
            // Process one batch of sync flush requests
            this.doCommit();
        } catch (Exception e) {
            CommitLog.log.warn(this.getServiceName() + " service has exception. ", e);
        }
    }

    // Under normal circumstances shutdown, wait for the arrival of the
    // request, and then flush
    try {
        Thread.sleep(10);
    } catch (InterruptedException e) {
        CommitLog.log.warn("GroupCommitService Exception, ", e);
    }

    synchronized (this) {
        // Swap the request lists to avoid lock contention
        this.swapRequests();
    }

    // Process the remaining batch of requests
    this.doCommit();

    CommitLog.log.info(this.getServiceName() + " service end");
}

Executing the flush requests

GroupCommitService

private void doCommit() {
    synchronized (this.requestsRead) {
        if (!this.requestsRead.isEmpty()) {
            // Go through the requests
            for (GroupCommitRequest req : this.requestsRead) {
                // There may be a message in the next file, so a maximum of
                // two times the flush
                boolean flushOK = false;
                for (int i = 0; i < 2 && !flushOK; i++) {
                    // Has the flushed position reached the offset this request needs?
                    flushOK = CommitLog.this.mappedFileQueue.getFlushedWhere() >= req.getNextOffset();

                    if (!flushOK) {
                        // Flush
                        CommitLog.this.mappedFileQueue.flush(0);
                    }
                }

                // Wake the producer thread and report the flush result
                req.wakeupCustomer(flushOK ? PutMessageStatus.PUT_OK : PutMessageStatus.FLUSH_DISK_TIMEOUT);
            }

            long storeTimestamp = CommitLog.this.mappedFileQueue.getStoreTimestamp();
            if (storeTimestamp > 0) {
                // Update physicMsgTimestamp in the StoreCheckpoint. The checkpoint itself is not
                // flushed here; that happens when the consume queue files are flushed
                CommitLog.this.defaultMessageStore.getStoreCheckpoint().setPhysicMsgTimestamp(storeTimestamp);
            }

            // Clear the processed requests
            this.requestsRead.clear();
        } else {
            // Because of individual messages is set to not sync flush, it
            // will come to this process: such messages only wake the service
            // without queuing a request, so flush anyway
            CommitLog.this.mappedFileQueue.flush(0);
        }
    }
}

MappedFileQueue

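public boolean flush(final int flushLeastPages) {
    boolean result = true;
    // Find the MappedFile that contains the current flush position
    MappedFile mappedFile = this.findMappedFileByOffset(this.flushedWhere, this.flushedWhere == 0);
    if (mappedFile != null) {
        // Store timestamp of the last message written into this file
        long tmpTimeStamp = mappedFile.getStoreTimestamp();
        // Flush the in-memory data to disk
        int offset = mappedFile.flush(flushLeastPages);
        // New global flush position
        long where = mappedFile.getFileFromOffset() + offset;
        // true means the flush position did not move
        result = where == this.flushedWhere;
        // Record the new flush position
        this.flushedWhere = where;
        if (0 == flushLeastPages) {
            // Record the store timestamp of the last message. Note: this is the time the message was
            // written into the FileChannel or the mapped ByteBuffer, not the time it reached disk
            this.storeTimestamp = tmpTimeStamp;
        }
    }

    return result;
}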

Flushing in-memory data to disk

MappedFile

public int flush(final int flushLeastPages) {
    if (this.isAbleToFlush(flushLeastPages)) {
        if (this.hold()) {
            // Current readable position: wrotePosition, or committedPosition when the
            // off-heap writeBuffer (transient store pool) is in use
            int value = getReadPosition();

            try {
                //We only append data to fileChannel or mappedByteBuffer, never both.
                // With writeBuffer, the data has already been committed to the FileChannel,
                // so forcing the channel is enough
                if (writeBuffer != null || this.fileChannel.position() != 0) {
                    // Force pending changes to disk; false means the file metadata need not be forced too
                    this.fileChannel.force(false);
                } else {
                    // No writeBuffer: force the memory-mapped buffer directly
                    this.mappedByteBuffer.force();
                }
            } catch (Throwable e) {
                log.error("Error occurred when force data to disk.", e);
            }

            // Record the new flushed position
            this.flushedPosition.set(value);
            this.release();
        } else {
            log.warn("in flush, hold failed, flush offset = " + this.flushedPosition.get());
            this.flushedPosition.set(getReadPosition());
        }
    }
    // Return the latest flushed position
    return this.getFlushedPosition();
}
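MappedFile.flush only does work when isAbleToFlush allows it, and that method is not listed in this article. The sketch below is written from our reading of the 4.7 source and should be checked against it. The 4 KB page size is assumed to match MappedFile.OS_PAGE_SIZE. A full file is always flushed. With flushLeastPages > 0, a flush happens only once that many whole pages of new data have accumulated. With 0, any new data is flushed.

```java
// Sketch of the "least pages" rule guarding MappedFile.flush (isAbleToFlush), reconstructed
// from our reading of the source. The page size is assumed to equal MappedFile.OS_PAGE_SIZE.
class FlushCheckSketch {
    static final int OS_PAGE_SIZE = 1024 * 4;

    static boolean isAbleToFlush(int flushedPosition, int readPosition, boolean isFull, int flushLeastPages) {
        if (isFull) {
            return true; // a full file is always flushed
        }
        if (flushLeastPages > 0) {
            // Count whole pages written since the last flush
            return (readPosition / OS_PAGE_SIZE) - (flushedPosition / OS_PAGE_SIZE) >= flushLeastPages;
        }
        // flushLeastPages == 0: flush whenever anything new has been written
        return readPosition > flushedPosition;
    }
}
```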

Waking the producer thread and reporting the flush result

public void wakeupCustomer(final PutMessageStatus putMessageStatus) {
    this.flushOKFuture.complete(putMessageStatus);
}

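Swapping the request lists

This avoids lock contention between the thread that consumes sync flush requests and the producer threads that submit them.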

private void swapRequests() {
    // The two lists swap roles after every round, then consumption continues
    List<GroupCommitRequest> tmp = this.requestsWrite;
    this.requestsWrite = this.requestsRead;
    this.requestsRead = tmp;
}
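The double-buffer idea behind GroupCommitService can be modeled in one small class. This is a simplified, hypothetical sketch, not the RocketMQ implementation. Producers append the offset they need flushed to requestsWrite. The flush thread swaps the two lists under the same lock and then drains requestsRead without holding it. A single "flush" that jumps to the current write position stands in for mappedFileQueue.flush(0).

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of GroupCommitService's two request lists (hypothetical, single class).
class GroupCommitSketch {
    private List<Long> requestsWrite = new ArrayList<>();
    private List<Long> requestsRead = new ArrayList<>();
    private long flushedWhere = 0;

    // Producer side: record the offset (nextOffset) that must be on disk.
    synchronized void putRequest(long nextOffset) {
        requestsWrite.add(nextOffset);
    }

    private synchronized void swapRequests() {
        List<Long> tmp = requestsWrite;
        requestsWrite = requestsRead;
        requestsRead = tmp;
    }

    // Flush-thread side: swap, then answer every request in the batch without holding the lock.
    List<Boolean> runOnce(long writtenWhere) {
        swapRequests();
        List<Boolean> results = new ArrayList<>();
        for (long nextOffset : requestsRead) {
            if (flushedWhere < nextOffset) {
                flushedWhere = Math.max(flushedWhere, writtenWhere); // stand-in for a real flush
            }
            results.add(flushedWhere >= nextOffset);
        }
        requestsRead.clear();
        return results;
    }
}
```

One flush serves every request in the batch, which is where the "group commit" name comes from.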

Asynchronous flush

If transientStorePoolEnable is false, messages are appended directly to the memory mapped onto the physical file and then flushed to disk.

How flushCommitLogService works

CommitLog.FlushRealTimeService

public void run() {
    CommitLog.log.info(this.getServiceName() + " service started");

    while (!this.isStopped()) {
        // Default false: wait with waitForRunning; true: wait with Thread.sleep
        boolean flushCommitLogTimed = CommitLog.this.defaultMessageStore.getMessageStoreConfig().isFlushCommitLogTimed();

        // Interval between runs of FlushRealTimeService (default 500 ms)
        int interval = CommitLog.this.defaultMessageStore.getMessageStoreConfig().getFlushIntervalCommitLog();
        // Minimum number of pages per flush; with fewer dirty pages the round is skipped (default 4)
        int flushPhysicQueueLeastPages = CommitLog.this.defaultMessageStore.getMessageStoreConfig().getFlushCommitLogLeastPages();

        // Maximum interval between two real flushes, default 10 s
        int flushPhysicQueueThoroughInterval =
            CommitLog.this.defaultMessageStore.getMessageStoreConfig().getFlushCommitLogThoroughInterval();

        boolean printFlushProgress = false;

        // Print flush progress
        long currentTimeMillis = System.currentTimeMillis();
        /*
         * If more than flushPhysicQueueThoroughInterval has passed since the last forced flush,
         * this round ignores flushPhysicQueueLeastPages, i.e. it flushes even if fewer pages are dirty.
         */
        if (currentTimeMillis >= (this.lastFlushTimestamp + flushPhysicQueueThoroughInterval)) {
            this.lastFlushTimestamp = currentTimeMillis;
            flushPhysicQueueLeastPages = 0;
            printFlushProgress = (printTimes++ % 10) == 0;
        }

        try {
            // Wait for the configured interval before each flush
            if (flushCommitLogTimed) {
                Thread.sleep(interval);
            } else {
                this.waitForRunning(interval);
            }

            if (printFlushProgress) {
                this.printFlushProgress();
            }

            long begin = System.currentTimeMillis();
            // Flush the in-memory data to disk
            CommitLog.this.mappedFileQueue.flush(flushPhysicQueueLeastPages);
            long storeTimestamp = CommitLog.this.mappedFileQueue.getStoreTimestamp();
            if (storeTimestamp > 0) {
                // Update the commitlog timestamp in the store checkpoint
                CommitLog.this.defaultMessageStore.getStoreCheckpoint().setPhysicMsgTimestamp(storeTimestamp);
            }
            long past = System.currentTimeMillis() - begin;
            if (past > 500) {
                log.info("Flush data to disk costs {} ms", past);
            }
        } catch (Throwable e) {
            CommitLog.log.warn(this.getServiceName() + " service has exception. ", e);
            this.printFlushProgress();
        }
    }

    // Normal shutdown, to ensure that all the flush before exit
    boolean result = false;
    for (int i = 0; i < RETRY_TIMES_OVER && !result; i++) {
        result = CommitLog.this.mappedFileQueue.flush(0);
        CommitLog.log.info(this.getServiceName() + " service shutdown, retry " + (i + 1) + " times " + (result ? "OK" : "Not OK"));
    }

    this.printFlushProgress();

    CommitLog.log.info(this.getServiceName() + " service end");
}

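The "thorough interval" rule used by FlushRealTimeService can be captured in a few lines. CommitRealTimeService uses the same rule with its own settings, and it also resets its timestamp whenever a commit makes progress. The sketch below is a simplified, hypothetical helper: once the thorough interval has elapsed since the last forced round, the page threshold drops to 0, so even a small amount of data is written. As in the service, the timestamp starts at 0, so the very first round is always forced.

```java
// Hypothetical helper modeling the thorough-interval override in FlushRealTimeService.
class ThoroughIntervalSketch {
    private long lastFlushTimestamp = 0;

    int leastPagesForThisRound(long nowMillis, int configuredLeastPages, int thoroughIntervalMs) {
        if (nowMillis >= lastFlushTimestamp + thoroughIntervalMs) {
            lastFlushTimestamp = nowMillis;
            return 0; // force a flush regardless of how little data is dirty
        }
        return configuredLeastPages;
    }
}
```

With the defaults mentioned in the code comments (4 pages, 10 s), a lightly loaded broker therefore still persists its tail data at least every 10 seconds.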
Flushing in-memory data to disk

This step calls the same MappedFile.flush(int) method already listed in the synchronous flush section above.

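Committing via commitLogService

If transientStorePoolEnable is true, RocketMQ allocates a separate off-heap buffer of the same size as the target physical file (commitlog). The buffer is memory-locked so that it is never swapped out to virtual memory. Messages are first appended to this off-heap buffer, then committed to the memory mapped onto the physical file, and finally flushed to disk.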

CommitLog.CommitRealTimeService

public void run() {
    CommitLog.log.info(this.getServiceName() + " service started");
    while (!this.isStopped()) {
        // Interval of CommitRealTimeService, default 200 ms: how often newly appended
        // writeBuffer content is committed to the file
        int interval = CommitLog.this.defaultMessageStore.getMessageStoreConfig().getCommitIntervalCommitLog();

        // Minimum number of pages per commit; smaller amounts are skipped this round (default 4)
        int commitDataLeastPages = CommitLog.this.defaultMessageStore.getMessageStoreConfig().getCommitCommitLogLeastPages();

        // Maximum interval between two real commits, default 200 ms
        int commitDataThoroughInterval =
            CommitLog.this.defaultMessageStore.getMessageStoreConfig().getCommitCommitLogThoroughInterval();

        long begin = System.currentTimeMillis();
        // If more than commitDataThoroughInterval has passed since the last commit,
        if (begin >= (this.lastCommitTimestamp + commitDataThoroughInterval)) {
            // this round ignores commitCommitLogLeastPages,
            // i.e. it commits even if fewer pages are pending
            this.lastCommitTimestamp = begin;
            commitDataLeastPages = 0;
        }

        try {
            // Commit pending data into the memory-mapped area of the physical file
            boolean result = CommitLog.this.mappedFileQueue.commit(commitDataLeastPages);
            long end = System.currentTimeMillis();
            // false is not a failure: it means the commit pointer moved, i.e. new data was committed
            if (!result) {
                this.lastCommitTimestamp = end; // result = false means some data committed.
                //now wake up flush thread.
                flushCommitLogService.wakeup();
            }

            if (end - begin > 500) {
                log.info("Commit data to file costs {} ms", end - begin);
            }
            // Wait for the interval (200 ms by default) before the next commit
            this.waitForRunning(interval);
        } catch (Throwable e) {
            CommitLog.log.error(this.getServiceName() + " service has exception. ", e);
        }
    }

    boolean result = false;
    for (int i = 0; i < RETRY_TIMES_OVER && !result; i++) {
        result = CommitLog.this.mappedFileQueue.commit(0);
        CommitLog.log.info(this.getServiceName() + " service shutdown, retry " + (i + 1) + " times " + (result ? "OK" : "Not OK"));
    }
    CommitLog.log.info(this.getServiceName() + " service end");
}

MappedFileQueue

    public boolean commit(final int commitLeastPages) {
        boolean result = true;
        // Find the MappedFile that contains the current commit offset (committedWhere)
        MappedFile mappedFile = this.findMappedFileByOffset(this.committedWhere, this.committedWhere == 0);
        if (mappedFile != null) {
            // Commit this file; returns the new committed position inside the file
            int offset = mappedFile.commit(commitLeastPages);
            long where = mappedFile.getFileFromOffset() + offset;
            // true only if nothing new was committed
            result = where == this.committedWhere;
            // Record the new global commit offset
            this.committedWhere = where;
        }
        return result;
    }
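The inverted return value explains why the caller treats `false` as "data was committed". The following is a minimal sketch of just that bookkeeping. `CommitPointer` is a hypothetical class, and `committedInFile` stands for whatever `MappedFile.commit` returned.

```java
/** Hypothetical toy version of MappedFileQueue.commit()'s pointer bookkeeping. */
final class CommitPointer {
    private long committedWhere;

    /** Returns true only when the global commit offset did not move. */
    boolean update(long fileFromOffset, int committedInFile) {
        long where = fileFromOffset + committedInFile; // absolute commitlog offset
        boolean nothingNew = where == committedWhere;
        committedWhere = where;
        return nothingNew;
    }

    long committedWhere() {
        return committedWhere;
    }
}
```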

Finding the MappedFile by message offset

MappedFileQueue

    public MappedFile findMappedFileByOffset(final long offset, final boolean returnFirstOnNotFound) {
        try {
            MappedFile firstMappedFile = this.getFirstMappedFile();
            MappedFile lastMappedFile = this.getLastMappedFile();
            if (firstMappedFile != null && lastMappedFile != null) {
                // Offset lies outside [start of first file, end of last file): out of range
                if (offset < firstMappedFile.getFileFromOffset() || offset >= lastMappedFile.getFileFromOffset() + this.mappedFileSize) {
                    LOG_ERROR.warn("Offset not matched. Request offset: {}, firstOffset: {}, lastOffset: {}, mappedFileSize: {}, mappedFiles count: {}",
                        offset,
                        firstMappedFile.getFileFromOffset(),
                        lastMappedFile.getFileFromOffset() + this.mappedFileSize,
                        this.mappedFileSize,
                        this.mappedFiles.size());
                } else {
                    // RocketMQ deletes expired files periodically, so the first remaining file
                    // does not necessarily start at offset 0, and offset / mappedFileSize alone
                    // is not a list index. Subtract the first file's ordinal
                    // (its start offset / file size) to get the index into mappedFiles.
                    int index = (int) ((offset / this.mappedFileSize) - (firstMappedFile.getFileFromOffset() / this.mappedFileSize));
                    MappedFile targetFile = null;
                    try {
                        // Get the mapped file
                        targetFile = this.mappedFiles.get(index);
                    } catch (Exception ignored) {
                    }
                    // Double-check that offset really falls inside this file
                    if (targetFile != null && offset >= targetFile.getFileFromOffset()
                        && offset < targetFile.getFileFromOffset() + this.mappedFileSize) {
                        return targetFile;
                    }
                    // Fall back to a linear scan over all files
                    for (MappedFile tmpMappedFile : this.mappedFiles) {
                        if (offset >= tmpMappedFile.getFileFromOffset()
                            && offset < tmpMappedFile.getFileFromOffset() + this.mappedFileSize) {
                            return tmpMappedFile;
                        }
                    }
                }
                // If requested, return the first file when nothing matched
                if (returnFirstOnNotFound) {
                    return firstMappedFile;
                }
            }
        } catch (Exception e) {
            log.error("findMappedFileByOffset Exception", e);
        }
        return null;
    }
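The index arithmetic is easiest to see with numbers. Assume 1 GiB files and that the first two files have already been deleted, so the first remaining file starts at 2 GiB. An offset 100 bytes into the fourth file then maps to list index 1, not 3. `MappedFileIndex` is a hypothetical helper that restates only that line.

```java
/** Hypothetical helper restating the index computation in findMappedFileByOffset. */
final class MappedFileIndex {
    static int indexOf(long offset, long firstFileFromOffset, long mappedFileSize) {
        // global file ordinal of offset minus ordinal of the first file still on disk
        return (int) ((offset / mappedFileSize) - (firstFileFromOffset / mappedFileSize));
    }
}
```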

Performing the commit

MappedFile

    public int commit(final int commitLeastPages) {
        /*
         * If writeBuffer is null (transientStorePoolEnable is off), just return wrotePosition:
         * there is nothing to commit, because commit only applies to the off-heap writeBuffer.
         */
        if (writeBuffer == null) {
            //no need to commit data to file channel, so just regard wrotePosition as committedPosition.
            return this.wrotePosition.get();
        }
        // Check whether enough pages are pending to justify a commit
        if (this.isAbleToCommit(commitLeastPages)) {
            // Take a reference
            if (this.hold()) {
                // The actual commit
                commit0(commitLeastPages);
                // Release the reference
                this.release();
            } else {
                log.warn("in commit, hold failed, commit offset = " + this.committedPosition.get());
            }
        }
        // All dirty data has been committed to FileChannel.
        // The file is full and completely committed
        if (writeBuffer != null && this.transientStorePool != null && this.fileSize == this.committedPosition.get()) {
            // Return the off-heap buffer to the TransientStorePool
            this.transientStorePool.returnBuffer(writeBuffer);
            // Drop the reference
            this.writeBuffer = null;
        }
        // Return the latest committed position
        return this.committedPosition.get();
    }
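`isAbleToCommit` itself is not shown above. The following is a simplified restatement of the page check it performs, with assumptions stated plainly. It assumes 4 KiB OS pages, and `CommitCheck` and its parameter names are hypothetical. A full file always commits. With a positive threshold, only whole pages are counted. With a threshold of 0, any pending byte triggers a commit.

```java
/** Hypothetical restatement of the "enough pages to commit?" check, assuming 4 KiB pages. */
final class CommitCheck {
    static final int OS_PAGE_SIZE = 4096;

    static boolean isAbleToCommit(int wrotePos, int committedPos, int fileSize, int commitLeastPages) {
        if (wrotePos == fileSize) {
            return true; // file is full: always commit the remainder
        }
        if (commitLeastPages > 0) {
            // number of page boundaries written since the last commit
            return (wrotePos / OS_PAGE_SIZE) - (committedPos / OS_PAGE_SIZE) >= commitLeastPages;
        }
        return wrotePos > committedPos; // threshold 0: commit any pending byte
    }
}
```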

Updating the consume queue and index files in real time

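Both the consume queue files and the message-property index files are built from the CommitLog. Once a producer's message is stored in the CommitLog, ConsumeQueue and IndexFile must be updated promptly. Otherwise the message cannot be consumed in time, and looking messages up by property will also lag considerably.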

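When the Broker starts, it launches the ReputMessageService thread, which forwards CommitLog update events in near real time. The corresponding handlers then use the forwarded messages to update the ConsumeQueue and IndexFile files.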
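The forwarding is a fan-out: every parsed commitlog record is handed to each registered builder. The sketch below shows only that shape. All types in it (`ReputFanOut`, `Dispatch`, `Dispatcher`) are hypothetical stand-ins, not RocketMQ's own classes.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, stripped-down model of fanning one commitlog record out to several builders. */
final class ReputFanOut {
    /** Minimal stand-in for the parsed message metadata. */
    static final class Dispatch {
        final String topic;
        final int queueId;
        final long commitLogOffset;
        final String keys;

        Dispatch(String topic, int queueId, long commitLogOffset, String keys) {
            this.topic = topic;
            this.queueId = queueId;
            this.commitLogOffset = commitLogOffset;
            this.keys = keys;
        }
    }

    /** One builder, e.g. a consume-queue builder or an index builder. */
    interface Dispatcher {
        void dispatch(Dispatch request);
    }

    private final List<Dispatcher> dispatchers = new ArrayList<>();

    void register(Dispatcher d) {
        dispatchers.add(d);
    }

    /** Called once per message read from the commitlog; every builder sees every message. */
    void doDispatch(Dispatch request) {
        for (Dispatcher d : dispatchers) {
            d.dispatch(request);
        }
    }
}
```

Keeping the builders behind one interface lets the reput thread read the commitlog once and update several derived structures from the same parse.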

DefaultMessageStore

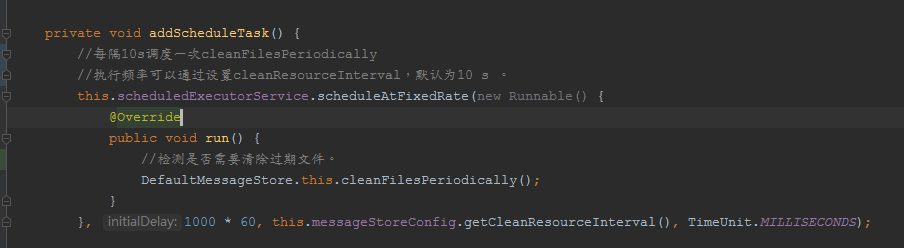

    public void start() throws Exception {
        // Acquire the lock on ${ROCKETMQ_HOME}/store/lock
        lock = lockFile.getChannel().tryLock(0, 1, false);
        // null (another process holds the lock), shared, or invalid: another broker already uses this store
        if (lock == null || lock.isShared() || !lock.isValid()) {
            throw new RuntimeException("Lock failed,MQ already started");
        }
        // Write "lock" into the file and force it to disk
        lockFile.getChannel().write(ByteBuffer.wrap("lock".getBytes()));
        lockFile.getChannel().force(true);
        {
            /**
             * 1. Make sure the fast-forward messages to be truncated during the recovering according to the max physical offset of the commitlog;
             * 2. DLedger committedPos may be missing, so the maxPhysicalPosInLogicQueue maybe bigger that maxOffset returned by DLedgerCommitLog, just let it go;
             * 3. Calculate the reput offset according to the consume queue;
             * 4. Make sure the fall-behind messages to be dispatched before starting the commitlog, especially when the broker role are automatically changed.
             */
            // Start from the commitlog's minimum offset
            long maxPhysicalPosInLogicQueue = commitLog.getMinOffset();
            // Take the largest commitlog offset recorded by any ConsumeQueue
            for (ConcurrentMap<Integer, ConsumeQueue> maps : this.consumeQueueTable.values()) {
                // ConsumeQueue is built after the commitlog, so it may lag behind the commitlog
                for (ConsumeQueue logic : maps.values()) {
                    if (logic.getMaxPhysicOffset() > maxPhysicalPosInLogicQueue) {
                        maxPhysicalPosInLogicQueue = logic.getMaxPhysicOffset();
                    }
                }
            }
            // getMinOffset() returns -1 when there is no commitlog file yet: start from 0
            if (maxPhysicalPosInLogicQueue < 0) {
                maxPhysicalPosInLogicQueue = 0;
            }
            // Never dispatch from before the commitlog's minimum offset
            if (maxPhysicalPosInLogicQueue < this.commitLog.getMinOffset()) {
                maxPhysicalPosInLogicQueue = this.commitLog.getMinOffset();
                /**
                 * This happens in following conditions:
                 * 1. If someone removes all the consumequeue files or the disk get damaged.
                 * 2. Launch a new broker, and copy the commitlog from other brokers.
                 *
                 * All the conditions has the same in common that the maxPhysicalPosInLogicQueue should be 0.
                 * If the maxPhysicalPosInLogicQueue is gt 0, there maybe something wrong.
                 */
                log.warn("[TooSmallCqOffset] maxPhysicalPosInLogicQueue={} clMinOffset={}", maxPhysicalPosInLogicQueue, this.commitLog.getMinOffset());
            }
            log.info("[SetReputOffset] maxPhysicalPosInLogicQueue={} clMinOffset={} clMaxOffset={} clConfirmedOffset={}",
                maxPhysicalPosInLogicQueue, this.commitLog.getMinOffset(), this.commitLog.getMaxOffset(), this.commitLog.getConfirmOffset());
            // maxPhysicalPosInLogicQueue: the physical offset from which ReputMessageService starts forwarding messages to ConsumeQueue and IndexFile
            this.reputMessageService.setReputFromOffset(maxPhysicalPosInLogicQueue);
            // Start the thread that forwards messages to ConsumeQueue and IndexFile
            this.reputMessageService.start();
            /**
             * 1. Finish dispatching the messages fall behind, then to start other services.
             * 2. DLedger committedPos may be missing, so here just require dispatchBehindBytes <= 0
             */
            while (true) {
                if (dispatchBehindBytes() <= 0) {
                    break;
                }
                Thread.sleep(1000);
                log.info("Try to finish doing reput the messages fall behind during the starting, reputOffset={} maxOffset={} behind={}", this.reputMessageService.getReputFromOffset(), this.getMaxPhyOffset(), this.dispatchBehindBytes());
            }
            this.recoverTopicQueueTable();
        }
        if (!messageStoreConfig.isEnableDLegerCommitLog()) {
            this.haService.start();
            this.handleScheduleMessageService(messageStoreConfig.getBrokerRole());
        }
        this.flushConsumeQueueService.start();
        this.commitLog.start();
        this.storeStatsService.start();
        // Create the abort file checked by isTempFileExist() on the next startup
        this.createTempFile();
        this.addScheduleTask();
        this.shutdown = false;
    }
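The choice of the reput start offset can be restated as a pure function. `ReputStart` is a hypothetical helper, and the consume-queue maxima are passed in as a plain array instead of being read from `consumeQueueTable`. Because this sketch starts the scan from the commitlog's minimum offset, its result never falls below that offset. The extra check in `start()` re-reads `getMinOffset()` as a safeguard.

```java
/** Hypothetical helper restating how start() chooses reputFromOffset. */
final class ReputStart {
    static long reputFromOffset(long commitLogMinOffset, long[] consumeQueueMaxPhysicOffsets) {
        long pos = commitLogMinOffset; // -1 when there is no commitlog file yet
        for (long cqMax : consumeQueueMaxPhysicOffsets) {
            if (cqMax > pos) {
                pos = cqMax; // furthest commitlog offset any consume queue already covers
            }
        }
        if (pos < 0) {
            pos = 0; // empty store: start from the very beginning
        }
        return pos;
    }
}
```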

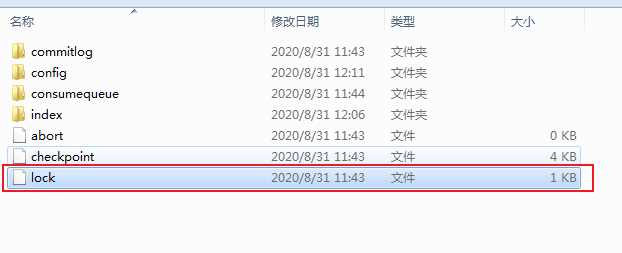

The `lock` above is this file in the store directory.
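The single-instance guard relies only on standard `java.nio` file locking: an exclusive, non-blocking lock on the first byte of the file. The following is a minimal sketch in that style. `StoreLock` is a hypothetical class. Within one JVM, a second overlapping `tryLock` throws `OverlappingFileLockException`, which the sketch treats as "already locked".

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.channels.FileLock;
import java.nio.channels.OverlappingFileLockException;

/** Hypothetical single-instance guard using an exclusive lock on byte 0 of a lock file. */
final class StoreLock implements AutoCloseable {
    private final RandomAccessFile file;
    private FileLock lock;

    StoreLock(File lockFile) {
        RandomAccessFile raf;
        try {
            raf = new RandomAccessFile(lockFile, "rw"); // creates the file if missing
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        this.file = raf;
    }

    /** Returns true if this instance now owns the lock. */
    boolean tryAcquire() {
        try {
            FileLock l = file.getChannel().tryLock(0, 1, false); // exclusive, non-blocking
            if (l == null) {
                return false;                                    // held by another process
            }
            if (l.isShared() || !l.isValid()) {                  // same checks as start()
                l.release();
                return false;
            }
            lock = l;
            return true;
        } catch (OverlappingFileLockException e) {
            return false;                                        // already held inside this JVM
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    @Override
    public void close() {
        try {
            if (lock != null) {
                lock.release();
            }
            file.close();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```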

DefaultMessageStore.ReputMessageService

    @Override
    public void run() {
        DefaultMessageStore.log.info(this.getServiceName() + " service started");
        // Loop until the thread is stopped
        while (!this.isStopped()) {
            try {
                // Sleep 1 ms between rounds
                Thread.sleep(1);
                // Try to forward new commitlog messages to the consume queues and index files
                this.doReput();
            } catch (Exception e) {
                DefaultMessageStore.log.warn(this.getServiceName() + " service has exception. ", e);
            }
        }
        DefaultMessageStore.log.info(this.getServiceName() + " service end");
    }
    private void doReput() {
        // reputFromOffset is behind the commitlog's minimum offset: dispatching fell so far
        // behind that those commitlog files have already expired
        if (this.reputFromOffset < DefaultMessageStore.this.commitLog.getMinOffset()) {
            log.warn("The reputFromOffset={} is smaller than minPyOffset={}, this usually indicate that the dispatch behind too much and the commitlog has expired.",
                this.reputFromOffset, DefaultMessageStore.this.commitLog.getMinOffset());
            this.reputFromOffset = DefaultMessageStore.this.commitLog.getMinOffset();
        }
        for (boolean doNext = true; this.isCommitLogAvailable() && doNext; ) {
            // With duplicationEnable on, only dispatch up to the confirmed offset:
            // stop once reputFromOffset reaches getConfirmOffset()
            if (DefaultMessageStore.this.getMessageStoreConfig().isDuplicationEnable()
                && this.reputFromOffset >= DefaultMessageStore.this.getConfirmOffset()) {
                break;
            }
            // All valid commitlog data starting at reputFromOffset
            SelectMappedBufferResult result = DefaultMessageStore.this.commitLog.getData(reputFromOffset);
            if (result != null) {
                try {
                    // Absolute physical offset (file start + position) where the returned data begins
                    this.reputFromOffset = result.getStartOffset();
                    for (int readSize = 0; readSize < result.getSize() && doNext; ) {
                        // Read one message at a time from the returned ByteBuffer and
                        // deserialize it into a DispatchRequest describing that message
                        DispatchRequest dispatchRequest =
                            DefaultMessageStore.this.commitLog.checkMessageAndReturnSize(result.getByteBuffer(), false, false);
                        // bufferSize is -1 by default, so getMsgSize() is used
                        int size = dispatchRequest.getBufferSize() == -1 ? dispatchRequest.getMsgSize() : dispatchRequest.getBufferSize();
                        if (dispatchRequest.isSuccess()) {
                            if (size > 0) {
                                // Calls CommitLogDispatcherBuildConsumeQueue (builds the consume queue)
                                // and CommitLogDispatcherBuildIndex (builds the index file)
                                DefaultMessageStore.this.doDispatch(dispatchRequest);
                                // On a non-slave broker with long polling enabled, notify waiting pull requests
                                if (BrokerRole.SLAVE != DefaultMessageStore.this.getMessageStoreConfig().getBrokerRole()
                                    && DefaultMessageStore.this.brokerConfig.isLongPollingEnable()) {
                                    DefaultMessageStore.this.messageArrivingListener.arriving(dispatchRequest.getTopic(),
                                        dispatchRequest.getQueueId(), dispatchRequest.getConsumeQueueOffset() + 1,
                                        dispatchRequest.getTagsCode(), dispatchRequest.getStoreTimestamp(),
                                        dispatchRequest.getBitMap(), dispatchRequest.getPropertiesMap());
                                }
                                // Move to the next message
                                this.reputFromOffset += size;
                                readSize += size;
                                if (DefaultMessageStore.this.getMessageStoreConfig().getBrokerRole() == BrokerRole.SLAVE) {
                                    DefaultMessageStore.this.storeStatsService
                                        .getSinglePutMessageTopicTimesTotal(dispatchRequest.getTopic()).incrementAndGet();
                                    DefaultMessageStore.this.storeStatsService
                                        .getSinglePutMessageTopicSizeTotal(dispatchRequest.getTopic())
                                        .addAndGet(dispatchRequest.getMsgSize());
                                }
                            } else if (size == 0) {
                                // End of this file reached: jump to the start offset of the next file
                                this.reputFromOffset = DefaultMessageStore.this.commitLog.rollNextFile(this.reputFromOffset);
                                // End the inner loop
                                readSize = result.getSize();
                            }
                        } else if (!dispatchRequest.isSuccess()) {
                            // Could not parse a complete message
                            if (size > 0) {
                                log.error("[BUG]read total count not equals msg total size. reputFromOffset={}", reputFromOffset);
                                // Skip the incomplete message and log it as a bug
                                this.reputFromOffset += size;
                            } else {
                                // No data: stop processing further messages
                                doNext = false;
                                // If user open the dledger pattern or the broker is master node,
                                // it will not ignore the exception and fix the reputFromOffset variable
                                if (DefaultMessageStore.this.getMessageStoreConfig().isEnableDLegerCommitLog() ||
                                    DefaultMessageStore.this.brokerConfig.getBrokerId() == MixAll.MASTER_ID) {
                                    log.error("[BUG]dispatch message to consume queue error, COMMITLOG OFFSET: {}",
                                        this.reputFromOffset);
                                    this.reputFromOffset += result.getSize() - readSize;
                                }
                            }
                        }
                    }
                } finally {
                    result.release();
                }
            } else {
                doNext = false;
            }
        }
    }
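The offset bookkeeping in `doReput()` can be simulated without any storage. A positive size means a message was dispatched. A size of 0 means the blank tail of a file, so the offset jumps to the next file. A negative size stands for "no complete message" and stops the round. `ReputOffsetSim` is hypothetical, and its `rollNextFile` formula (next file boundary after `offset`) is an assumption about what `CommitLog.rollNextFile` computes.

```java
/** Hypothetical simulation of how doReput() advances reputFromOffset. */
final class ReputOffsetSim {
    /** Assumed behaviour of rollNextFile: start offset of the file after the one containing offset. */
    static long rollNextFile(long offset, long mappedFileSize) {
        return offset + mappedFileSize - offset % mappedFileSize;
    }

    static long advance(long reputFromOffset, int[] sizes, long mappedFileSize) {
        long offset = reputFromOffset;
        for (int size : sizes) {
            if (size > 0) {
                offset += size;                               // one message dispatched
            } else if (size == 0) {
                offset = rollNextFile(offset, mappedFileSize); // blank tail: jump to next file
            } else {
                break;                                        // no complete message: stop this round
            }
        }
        return offset;
    }
}
```

For example, with 1024-byte files, records of 100 and 200 bytes followed by an end-of-file marker and a 50-byte record leave the offset at 1024 + 50 = 1074.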

Getting the minimum offset of the current commitlog directory

CommitLog

    public long getMinOffset() {
        // Get the first file
        MappedFile mappedFile = this.mappedFileQueue.getFirstMappedFile();
        if (mappedFile != null) {
            // The first file is available
            if (mappedFile.isAvailable()) {
                // Return its start offset
                return mappedFile.getFileFromOffset();
            } else {
                // Otherwise return the start offset of the next file
                return this.rollNextFile(mappedFile.getFileFromOffset());
            }
        }
        return -1;
    }
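The three outcomes of `getMinOffset()` can be stated compactly as follows: -1 when there is no file, the first file's start when it is available, and otherwise the start of the following file. The sketch is hypothetical (`CommitLogMinOffset`, with a nullable `Long` modelling "no file"). It also assumes that `rollNextFile` returns the next file boundary.

```java
/** Hypothetical restatement of CommitLog.getMinOffset()'s three outcomes. */
final class CommitLogMinOffset {
    /** firstFileFromOffset == null models "no commitlog file yet". */
    static long minOffset(Long firstFileFromOffset, boolean firstFileAvailable, long mappedFileSize) {
        if (firstFileFromOffset == null) {
            return -1;
        }
        if (firstFileAvailable) {
            return firstFileFromOffset;
        }
        long offset = firstFileFromOffset;
        // assumed rollNextFile arithmetic: jump to the start of the next file
        return offset + mappedFileSize - offset % mappedFileSize;
    }
}
```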

Returning all valid commitlog data starting at reputFromOffset

    public SelectMappedBufferResult getData(final long offset) {
        return this.getData(offset, offset == 0);
    }
    public SelectMappedBufferResult getData(final long offset, final boolean returnFirstOnNotFound) {
        // Size of a single commitlog file, 1 GB by default
        int mappedFileSize = this.defaultMessageStore.getMessageStoreConfig().getMappedFileSizeCommitLog();
        // Find the MappedFile that contains offset
        MappedFile mappedFile = this.mappedFileQueue.findMappedFileByOffset(offset, returnFirstOnNotFound);
        if (mappedFile != null) {
            // Position of offset inside that file
            int pos = (int) (offset % mappedFileSize);
            // Select the data from pos up to the current readable limit
            SelectMappedBufferResult result = mappedFile.selectMappedBuffer(pos);
            return result;
        }
        return null;
    }
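A global commitlog offset therefore splits into "which file" and "where inside it". `OffsetSplit` is a hypothetical helper. The 20-digit zero-padded file name reflects the commitlog naming convention (the file name is its start offset), which is stated here as background and not taken from the code above.

```java
/** Hypothetical helper splitting a global commitlog offset into file start and in-file position. */
final class OffsetSplit {
    static long fileFromOffset(long offset, int mappedFileSize) {
        return offset - offset % mappedFileSize; // start offset of the containing file
    }

    static int positionInFile(long offset, int mappedFileSize) {
        return (int) (offset % mappedFileSize);  // same expression as pos in getData
    }

    /** Commitlog files are named after their start offset, zero-padded to 20 digits. */
    static String fileName(long fileFromOffset) {
        return String.format("%020d", fileFromOffset);
    }
}
```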

Finding the MappedFile by message offset

MappedFileQueue