Python Object Detection: Training Data Preprocessing and Loading Code

Data preprocessing

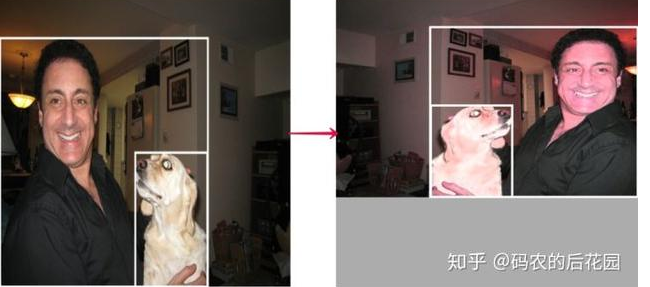

```python
import random

import cv2
import numpy as np

# NOTE: clip_box, rotate_im, get_corners, rotate_box and get_enclosing_box are
# helper functions that this post does not show. They must be importable from
# wherever they are defined in your project.
# All transforms modify `bboxes` in place and expect a float array.


def letterbox_image(img, inp_dim):
    '''Resize an image with unchanged aspect ratio, using padding.

    Parameters
    ----------
    img : numpy.ndarray
        Image
    inp_dim : int
        Side length of the (square) resized image

    Returns
    -------
    numpy.ndarray
        Resized image
    '''
    inp_dim = (inp_dim, inp_dim)
    img_w, img_h = img.shape[1], img.shape[0]
    w, h = inp_dim
    new_w = int(img_w * min(w / img_w, h / img_h))
    new_h = int(img_h * min(w / img_w, h / img_h))
    # Resize with scale = target_size / (longer side), then pad the empty area.
    resized_image = cv2.resize(img, (new_w, new_h))

    canvas = np.full((inp_dim[1], inp_dim[0], 3), 0)
    canvas[(h - new_h) // 2:(h - new_h) // 2 + new_h,
           (w - new_w) // 2:(w - new_w) // 2 + new_w, :] = resized_image
    return canvas


class Resize(object):
    """Resize the image in accordance with the `image_letter_box` function in darknet.

    The aspect ratio is maintained. The longer side is resized to the input
    size of the network, while the remaining space on the shorter side is
    filled with black. **This should be the last transform.**

    Parameters
    ----------
    inp_dim : int
        Size to which the image will be resized.

    Returns
    -------
    numpy.ndarray
        Resized image in the numpy format of shape `HxWxC`
    numpy.ndarray
        Resized bounding box coordinates of the format `n x 4`, where n is the
        number of bounding boxes and 4 represents `x1,y1,x2,y2` of the box
    """

    def __init__(self, inp_dim):
        self.inp_dim = inp_dim

    def __call__(self, img, bboxes):
        w, h = img.shape[1], img.shape[0]
        # Resize with scale = target_size / (longer side), then pad the empty area.
        img = letterbox_image(img, self.inp_dim)

        scale = min(self.inp_dim / h, self.inp_dim / w)
        bboxes[:, :4] *= scale

        new_w = scale * w
        new_h = scale * h
        inp_dim = self.inp_dim

        del_h = (inp_dim - new_h) / 2
        del_w = (inp_dim - new_w) / 2
        add_matrix = np.array([[del_w, del_h, del_w, del_h]]).astype(int)
        bboxes[:, :4] += add_matrix  # shift the boxes by the padding offsets

        img = img.astype(np.uint8)
        return img, bboxes


class RandomHorizontalFlip(object):
    """Randomly flip the image horizontally with probability *p*.

    Parameters
    ----------
    p : float
        The probability with which the image is flipped

    Returns
    -------
    numpy.ndarray
        Flipped image in the numpy format of shape `HxWxC`
    numpy.ndarray
        Transformed bounding box coordinates of the format `n x 4`, where n is
        the number of bounding boxes and 4 represents `x1,y1,x2,y2` of the box
    """

    def __init__(self, p=0.5):
        self.p = p

    def __call__(self, img, bboxes):
        img_center = np.array(img.shape[:2])[::-1] / 2  # image center as (x, y)
        img_center = np.hstack((img_center, img_center))
        if random.random() < self.p:
            img = img[:, ::-1, :]  # flip the image horizontally
            # Mirror the x coordinates of each box (x1, y1, x2, y2).
            bboxes[:, [0, 2]] += 2 * (img_center[[0, 2]] - bboxes[:, [0, 2]])
            box_w = abs(bboxes[:, 0] - bboxes[:, 2])
            # After mirroring, x1 > x2; swap them back so that x1 < x2.
            bboxes[:, 0] -= box_w
            bboxes[:, 2] += box_w
        return img, bboxes


class RandomScale(object):
    """Randomly scale an image.

    Bounding boxes with less than 25% of their area remaining in the
    transformed image are dropped. The resolution is maintained, and the
    remaining area, if any, is filled with black.

    Parameters
    ----------
    scale : float or tuple(float)
        If **float**, the image is scaled by a factor drawn randomly from the
        range (1 - `scale`, 1 + `scale`). If **tuple**, the `scale` is drawn
        randomly from the range specified by the tuple.

    Returns
    -------
    numpy.ndarray
        Scaled image in the numpy format of shape `HxWxC`
    numpy.ndarray
        Transformed bounding box coordinates of the format `n x 4`, where n is
        the number of bounding boxes and 4 represents `x1,y1,x2,y2` of the box
    """

    def __init__(self, scale=0.2, diff=False):
        self.scale = scale
        if type(self.scale) == tuple:
            assert len(self.scale) == 2, "Invalid range"
            assert self.scale[0] > -1, "Scale factor can't be less than -1"
            assert self.scale[1] > -1, "Scale factor can't be less than -1"
        else:
            assert self.scale > 0, "Please input a positive float"
            self.scale = (max(-1, -self.scale), self.scale)
        self.diff = diff

    def __call__(self, img, bboxes):
        # Choose a random factor to scale by.
        img_shape = img.shape
        if self.diff:
            scale_x = random.uniform(*self.scale)
            scale_y = random.uniform(*self.scale)
        else:
            scale_x = random.uniform(*self.scale)
            scale_y = scale_x

        resize_scale_x = 1 + scale_x
        resize_scale_y = 1 + scale_y

        # Scale the image with cv2.resize and scale the boxes by the same factor(s).
        img = cv2.resize(img, None, fx=resize_scale_x, fy=resize_scale_y)
        bboxes[:, :4] *= [resize_scale_x, resize_scale_y, resize_scale_x, resize_scale_y]

        canvas = np.zeros(img_shape, dtype=np.uint8)  # same size as the original image
        y_lim = int(min(resize_scale_y, 1) * img_shape[0])
        x_lim = int(min(resize_scale_x, 1) * img_shape[1])
        # If the image grew, keep only the top-left part; if it shrank, pad with black.
        canvas[:y_lim, :x_lim, :] = img[:y_lim, :x_lim, :]
        img = canvas

        # Clip boxes to the image and drop those whose remaining area is below the threshold.
        bboxes = clip_box(bboxes, [0, 0, 1 + img_shape[1], img_shape[0]], 0.25)
        return img, bboxes


class RandomTranslate(object):
    """Randomly translate the image.

    Bounding boxes with less than 25% of their area remaining in the
    transformed image are dropped. The resolution is maintained, and the
    remaining area, if any, is filled with black.

    Parameters
    ----------
    translate : float or tuple(float)
        If **float**, the image is translated by a fraction of its size drawn
        randomly from the range (-`translate`, `translate`). If **tuple**,
        `translate` is drawn randomly from the range specified by the tuple.

    Returns
    -------
    numpy.ndarray
        Translated image in the numpy format of shape `HxWxC`
    numpy.ndarray
        Transformed bounding box coordinates of the format `n x 4`, where n is
        the number of bounding boxes and 4 represents `x1,y1,x2,y2` of the box
    """

    def __init__(self, translate=0.2, diff=False):
        self.translate = translate
        if type(self.translate) == tuple:
            assert len(self.translate) == 2, "Invalid range"
            # The original used `>  0 & ... < 1`, which fails on floats because
            # `&` binds tighter than the comparisons.
            assert 0 < self.translate[0] < 1
            assert 0 < self.translate[1] < 1
        else:
            assert 0 < self.translate < 1  # must lie in (0, 1)
            self.translate = (-self.translate, self.translate)
        self.diff = diff

    def __call__(self, img, bboxes):
        img_shape = img.shape

        # Fraction of each image dimension to translate by.
        translate_factor_x = random.uniform(*self.translate)
        translate_factor_y = random.uniform(*self.translate)
        if not self.diff:
            translate_factor_y = translate_factor_x

        canvas = np.zeros(img_shape).astype(np.uint8)

        corner_x = int(translate_factor_x * img.shape[1])
        corner_y = int(translate_factor_y * img.shape[0])

        # Move the origin to the top-left corner of the translated image,
        # clamping at the image borders.
        orig_box_cords = [max(0, corner_y), max(corner_x, 0),
                          min(img_shape[0], corner_y + img.shape[0]),
                          min(img_shape[1], corner_x + img.shape[1])]
        mask = img[max(-corner_y, 0):min(img.shape[0], -corner_y + img_shape[0]),
                   max(-corner_x, 0):min(img.shape[1], -corner_x + img_shape[1]), :]
        canvas[orig_box_cords[0]:orig_box_cords[2],
               orig_box_cords[1]:orig_box_cords[3], :] = mask
        img = canvas

        bboxes[:, :4] += [corner_x, corner_y, corner_x, corner_y]  # translate the boxes
        bboxes = clip_box(bboxes, [0, 0, img_shape[1], img_shape[0]], 0.25)
        return img, bboxes


class RandomRotate(object):
    """Randomly rotate an image.

    Bounding boxes with less than 25% of their area remaining in the
    transformed image are dropped. The resolution is maintained, and the
    remaining area, if any, is filled with black.

    Parameters
    ----------
    angle : float or tuple(float)
        If **float**, the image is rotated by an angle drawn randomly from the
        range (-`angle`, `angle`). If **tuple**, the `angle` is drawn randomly
        from the range specified by the tuple.

    Returns
    -------
    numpy.ndarray
        Rotated image in the numpy format of shape `HxWxC`
    numpy.ndarray
        Transformed bounding box coordinates of the format `n x 4`, where n is
        the number of bounding boxes and 4 represents `x1,y1,x2,y2` of the box
    """

    def __init__(self, angle=10):
        self.angle = angle
        if type(self.angle) == tuple:
            assert len(self.angle) == 2, "Invalid range"
        else:
            self.angle = (-self.angle, self.angle)

    def __call__(self, img, bboxes):
        angle = random.uniform(*self.angle)
        w, h = img.shape[1], img.shape[0]
        cx, cy = w // 2, h // 2

        # The rotated image is enlarged so that no content is lost: compute the
        # affine matrix first, then warp the whole image.
        img = rotate_im(img, angle)

        corners = get_corners(bboxes)  # the four corner points of each box
        corners = np.hstack((corners, bboxes[:, 4:]))
        # Apply the same affine matrix to the box corners.
        corners[:, :8] = rotate_box(corners[:, :8], angle, cx, cy, h, w)
        # Find the tightest axis-aligned rectangle containing each tilted box.
        new_bbox = get_enclosing_box(corners)

        scale_factor_x = img.shape[1] / w
        scale_factor_y = img.shape[0] / h
        img = cv2.resize(img, (w, h))  # shrink the enlarged image back to the original size
        new_bbox[:, :4] /= [scale_factor_x, scale_factor_y, scale_factor_x, scale_factor_y]

        bboxes = new_bbox
        bboxes = clip_box(bboxes, [0, 0, w, h], 0.25)
        return img, bboxes


class RandomShear(object):
    """Randomly shear an image in the horizontal direction.

    The resolution is maintained, and the remaining area, if any, is filled
    with black.

    Parameters
    ----------
    shear_factor : float or tuple(float)
        If **float**, the image is sheared horizontally by a factor drawn
        randomly from the range (-`shear_factor`, `shear_factor`). If
        **tuple**, the `shear_factor` is drawn randomly from the range
        specified by the tuple.

    Returns
    -------
    numpy.ndarray
        Sheared image in the numpy format of shape `HxWxC`
    numpy.ndarray
        Transformed bounding box coordinates of the format `n x 4`, where n is
        the number of bounding boxes and 4 represents `x1,y1,x2,y2` of the box
    """

    def __init__(self, shear_factor=0.2):
        self.shear_factor = shear_factor
        if type(self.shear_factor) == tuple:
            assert len(self.shear_factor) == 2, "Invalid range for shear factor"
        else:
            self.shear_factor = (-self.shear_factor, self.shear_factor)

    def __call__(self, img, bboxes):
        shear_factor = random.uniform(*self.shear_factor)
        w, h = img.shape[1], img.shape[0]

        # The original called an undefined `HorizontalFlip()`;
        # RandomHorizontalFlip(p=1) always flips and stands in for it here.
        if shear_factor < 0:
            # A neat trick to avoid ... (flip, shear with a positive factor, flip back)
            img, bboxes = RandomHorizontalFlip(p=1)(img, bboxes)

        M = np.array([[1, abs(shear_factor), 0], [0, 1, 0]])
        nW = img.shape[1] + abs(shear_factor * img.shape[0])

        bboxes[:, [0, 2]] += (bboxes[:, [1, 3]] * abs(shear_factor)).astype(int)
        img = cv2.warpAffine(img, M, (int(nW), img.shape[0]))  # horizontal shear only

        if shear_factor < 0:
            img, bboxes = RandomHorizontalFlip(p=1)(img, bboxes)

        img = cv2.resize(img, (w, h))
        scale_factor_x = nW / w
        bboxes[:, :4] /= [scale_factor_x, 1, scale_factor_x, 1]
        return img, bboxes
```
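Several of the transforms above call `clip_box(bboxes, clip_region, alpha)`, which this post does not define. The following is only a sketch of what such a helper might look like, assuming boxes are rows of `x1,y1,x2,y2` (plus optional extra columns) and that `alpha` is the minimum fraction of a box's original area that must survive clipping. The name `clip_box_sketch` and its exact behaviour are assumptions, not the original implementation.

```python
import numpy as np


def clip_box_sketch(bboxes, clip_region, alpha):
    """Clip boxes to clip_region and drop boxes that lose too much area.

    Hypothetical stand-in for the undefined `clip_box` helper.
    bboxes: array of shape (n, 4+) with x1, y1, x2, y2 in the first columns.
    clip_region: [x_min, y_min, x_max, y_max].
    alpha: keep a box only if clipped_area >= alpha * original_area.
    """
    bboxes = np.asarray(bboxes, dtype=np.float64)
    x_min, y_min, x_max, y_max = clip_region

    area_before = (bboxes[:, 2] - bboxes[:, 0]) * (bboxes[:, 3] - bboxes[:, 1])

    clipped = bboxes.copy()
    clipped[:, 0] = np.clip(bboxes[:, 0], x_min, x_max)
    clipped[:, 1] = np.clip(bboxes[:, 1], y_min, y_max)
    clipped[:, 2] = np.clip(bboxes[:, 2], x_min, x_max)
    clipped[:, 3] = np.clip(bboxes[:, 3], y_min, y_max)

    area_after = (clipped[:, 2] - clipped[:, 0]) * (clipped[:, 3] - clipped[:, 1])

    # Keep boxes that still have area and retain at least `alpha` of it.
    keep = (area_after > 0) & (area_after >= alpha * area_before)
    return clipped[keep]


boxes = np.array([
    [10, 10, 50, 50, 1],     # fully inside: kept unchanged
    [-20, 10, 20, 50, 2],    # half outside on the left: kept, x1 clipped to 0
    [90, 90, 130, 130, 0],   # only 1/16 of its area inside: dropped
], dtype=np.float64)
kept = clip_box_sketch(boxes, [0, 0, 100, 100], 0.25)
print(kept)
```

Returning a new array rather than editing in place keeps the sketch easy to test; the transforms above reassign the result (`bboxes = clip_box(...)`), so either convention would fit them.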

Data loading

1. Parsing images

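```python
import cv2
import numpy as np

# Assumed example values: the post uses scale_size and crop_size without defining them.
scale_size = 256
crop_size = 224


def parse_data(data):
    """Load an image, resize it, center-crop it, randomly flip it, and scale it to [-1, 1]."""
    img = np.array(cv2.imread(data))
    h, w, c = img.shape
    assert c == 3

    img = cv2.resize(img, (scale_size, scale_size))
    img = img.astype(np.float32)

    # Center crop from scale_size down to crop_size.
    shift = (scale_size - crop_size) // 2
    img = img[shift: shift + crop_size, shift: shift + crop_size, :]

    # Flip the image horizontally with probability 0.5.
    if np.random.random() < 0.5:
        img = cv2.flip(img, 1)

    img = (img - np.array(127.5)) / 127.5
    return img


def parse_data_without_augmentation(data):
    """Load an image, resize it to crop_size, and scale it to [-1, 1]."""
    img = np.array(cv2.imread(data))
    h, w, c = img.shape
    assert c == 3

    img = cv2.resize(img, (crop_size, crop_size))
    img = img.astype(np.float32)
    img = (img - np.array(127.5)) / 127.5
    return img
```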
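The center-crop and `(img - 127.5) / 127.5` steps can be checked on a synthetic array, without reading a file or using OpenCV. This is a minimal sketch; the helper names `center_crop`, `normalize` and `denormalize`, and the sizes 256 and 224, are illustrative assumptions rather than part of the original code.

```python
import numpy as np


def center_crop(img, crop_size):
    """Cut a crop_size x crop_size window from the middle of an HxWxC image."""
    h, w = img.shape[:2]
    top = (h - crop_size) // 2
    left = (w - crop_size) // 2
    return img[top:top + crop_size, left:left + crop_size, :]


def normalize(img):
    """Map pixel values from [0, 255] to [-1, 1]."""
    return (img.astype(np.float32) - 127.5) / 127.5


def denormalize(x):
    """Inverse of normalize: map [-1, 1] back to uint8 pixel values."""
    return np.clip(np.rint(x * 127.5 + 127.5), 0, 255).astype(np.uint8)


img = np.random.randint(0, 256, size=(256, 256, 3), dtype=np.uint8)
patch = center_crop(img, 224)
x = normalize(patch)
print(patch.shape, x.dtype, float(x.min()), float(x.max()))
```

Keeping an explicit `denormalize` around is handy when visualizing network inputs or outputs that live in the [-1, 1] range.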

2. Multi-process loading

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# @Time : 2019/3/10 11:15
# @Author : Whu_DSP
# @File : dped_dataloader.py

import multiprocessing as mtp
import os

import cv2
import numpy as np


def parse_data(filename):
    # The original used scipy.misc.imread, which has been removed from SciPy.
    # cv2.imread returns BGR, so convert to RGB to keep the same channel order.
    I = cv2.cvtColor(cv2.imread(filename), cv2.COLOR_BGR2RGB)
    I = np.float16(I) / 255
    return I


class Dataloader:

    def __init__(self, dped_dir, type_phone, batch_size, is_training, im_shape):
        self.works = mtp.Pool(10)
        self.dped_dir = dped_dir
        self.phone_type = type_phone
        self.batch_size = batch_size
        self.is_training = is_training
        self.im_shape = im_shape

        self.image_list, self.dslr_list = self._get_data_file_list()
        self.num_images = len(self.image_list)
        self._cur = 0
        self._perm = None
        self._shuffle_index()  # init order

    def _get_data_file_list(self):
        if self.is_training:
            directory_phone = os.path.join(self.dped_dir, str(self.phone_type), 'training_data', str(self.phone_type))
            directory_dslr = os.path.join(self.dped_dir, str(self.phone_type), 'training_data', 'canon')
        else:
            directory_phone = os.path.join(self.dped_dir, str(self.phone_type), 'test_data', 'patches', str(self.phone_type))
            directory_dslr = os.path.join(self.dped_dir, str(self.phone_type), 'test_data', 'patches', 'canon')

        # os.listdir gives no ordering guarantee; sort both lists so that the
        # phone and DSLR files stay paired by name.
        image_list = [os.path.join(directory_phone, name) for name in sorted(os.listdir(directory_phone))]
        dslr_list = [os.path.join(directory_dslr, name) for name in sorted(os.listdir(directory_dslr))]
        return image_list, dslr_list

    def _shuffle_index(self):
        '''Randomly permute the training order.'''
        self._perm = np.random.permutation(np.arange(self.num_images))
        self._cur = 0

    def _get_next_minbatch_index(self):
        """Return the indices for the next minibatch."""
        if self._cur + self.batch_size > self.num_images:
            self._shuffle_index()
        next_index = self._perm[self._cur:self._cur + self.batch_size]
        self._cur += self.batch_size
        return next_index

    def get_minibatch(self, minibatch_db):
        """Return minibatch data for train/test."""
        # The original had identical branches for training and testing.
        jobs = self.works.map(parse_data, minibatch_db)
        images_data = np.zeros([self.batch_size, self.im_shape[0], self.im_shape[1], 3])
        for index, job in enumerate(jobs):
            images_data[index, :, :, :] = job
        return images_data

    def next_batch(self):
        """Get the next batch of images and labels."""
        db_index = self._get_next_minbatch_index()
        minibatch_db = [self.image_list[i] for i in db_index]
        minibatch_db_t = [self.dslr_list[i] for i in db_index]
        images_data = self.get_minibatch(minibatch_db)
        dslr_data = self.get_minibatch(minibatch_db_t)
        return images_data, dslr_data


if __name__ == "__main__":
    data_dir = "F:\\ranjiewen\\TF_EnhanceDPED\\data\\dped"
    train_loader = Dataloader(data_dir, "iphone", 32, True, [100, 100])
    test_loader = Dataloader(data_dir, "iphone", 32, False, [100, 100])

    for i in range(10):
        image_batch, label_batch = train_loader.next_batch()
        print(image_batch.shape, label_batch.shape)
        print("-------------------------------------------")
        image_batch, label_batch = test_loader.next_batch()
        print(image_batch.shape, label_batch.shape)
```
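The batching logic of the loader (a shuffled permutation, a cursor, and a reshuffle when fewer than `batch_size` indices remain) can be isolated from file I/O and multiprocessing. The sketch below reproduces that logic only; the class name `IndexSampler` and the `seed` parameter are hypothetical additions for illustration.

```python
import numpy as np


class IndexSampler:
    """Yield fixed-size batches of indices from a reshuffled permutation."""

    def __init__(self, num_items, batch_size, seed=None):
        if batch_size > num_items:
            raise ValueError("batch_size must not exceed num_items")
        self.num_items = num_items
        self.batch_size = batch_size
        self._rng = np.random.default_rng(seed)
        self._shuffle()

    def _shuffle(self):
        # Start a new pass over the data in a fresh random order.
        self._perm = self._rng.permutation(self.num_items)
        self._cur = 0

    def next_indices(self):
        # If a full batch no longer fits, reshuffle and start over.
        if self._cur + self.batch_size > self.num_items:
            self._shuffle()
        idx = self._perm[self._cur:self._cur + self.batch_size]
        self._cur += self.batch_size
        return idx


sampler = IndexSampler(num_items=10, batch_size=4, seed=0)
first = sampler.next_indices()
second = sampler.next_indices()
third = sampler.next_indices()  # triggers a reshuffle: only 2 indices were left
print(first, second, third)
```

One consequence of this design is that the leftover tail of each pass (2 of 10 items here) is skipped rather than padded, so every batch has exactly `batch_size` entries.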

Other augmentation methods

- Scale the image and distort its width and height

- Flip the image

- Distort the image in color space

```python
from PIL import Image, ImageDraw
import numpy as np
from matplotlib.colors import rgb_to_hsv, hsv_to_rgb

"""
Data augmentation makes the training images more varied, and it is an
important way to improve the robustness of an object detector. Changing the
brightness, distorting the image and so on produces more diverse images;
training on them makes the network more robust and reduces the influence of
irrelevant factors on recognition.
"""


def rand(a=0, b=1):
    # Return a random number in [a, b).
    return np.random.rand() * (b - a) + a


def get_random_data(annotation_line, input_shape, random=True, max_boxes=20,
                    jitter=.5, hue=.1, sat=1.5, val=1.5, proc_img=True):
    """Random preprocessing for real-time data augmentation.

    :param annotation_line: one line of the dataset file: the image path
        followed by its boxes as comma-separated integers
    :param input_shape: input size of the YOLO network, e.g. 416*416
    :param random: unused in this function
    :param max_boxes: maximum number of boxes returned per image
    :param jitter: controls the width/height distortion; jitter=.5 draws the
        distortion factors from 0.5 to 1.5
    :param hue: range of the hue (H) shift in HSV space, here .1
    :param sat: range of the saturation (S) scaling in HSV space, here 1.5
    :param val: range of the value (V) scaling in HSV space, here 1.5
    :param proc_img: unused in this function
    :return: (image_data, box_data)
    """
    line = annotation_line.split()
    image = Image.open(line[0])
    iw, ih = image.size  # size of the original image
    h, w = input_shape  # size of the model input
    # Split out the target boxes listed on this line.
    box = np.array([np.array(list(map(int, box.split(',')))) for box in line[1:]])

    # Scale the image and distort its width and height.
    # The distorted image may be larger than 416*416; pasting it onto the gray
    # canvas below brings it back to 416*416.
    # new_ar is the distorted aspect ratio; with jitter=0 the ratio w/h is unchanged.
    new_ar = w / h * rand(1 - jitter, 1 + jitter) / rand(1 - jitter, 1 + jitter)
    # scale controls the overall zoom, drawn from 0.25 to 2, so the image may
    # grow or shrink. rand(.25, 1) would always shrink the image and add gray
    # borders, which helps train small-object detection; rand(1, 2) would
    # always enlarge it.
    scale = rand(.25, 2)
    if new_ar < 1:
        nh = int(scale * h)
        nw = int(nh * new_ar)
    else:
        nw = int(scale * w)
        nh = int(nw / new_ar)
    image = image.resize((nw, nh), Image.BICUBIC)

    # Paste onto a gray canvas so that the result is always w x h = 416 x 416.
    dx = int(rand(0, w - nw))
    dy = int(rand(0, h - nh))
    new_image = Image.new('RGB', (w, h), (128, 128, 128))  # (128, 128, 128) is gray
    new_image.paste(image, (dx, dy))
    image = new_image

    # Flip the image left-right with probability 0.5.
    flip = rand() < .5
    if flip:
        image = image.transpose(Image.FLIP_LEFT_RIGHT)

    # Color distortion in HSV space: hue (H), saturation (S) and value (V)
    # are each perturbed by a random amount.
    hue = rand(-hue, hue)
    sat = rand(1, sat) if rand() < .5 else 1 / rand(1, sat)
    val = rand(1, val) if rand() < .5 else 1 / rand(1, val)
    x = rgb_to_hsv(np.array(image) / 255.)  # convert RGB to HSV before distorting
    x[..., 0] += hue
    x[..., 0][x[..., 0] > 1] -= 1
    x[..., 0][x[..., 0] < 0] += 1
    x[..., 1] *= sat
    x[..., 2] *= val
    x[x > 1] = 1
    x[x < 0] = 0
    image_data = hsv_to_rgb(x)  # numpy array, 0 to 1

    # Adjust the boxes to match the distorted image.
    box_data = np.zeros((max_boxes, 5))
    if len(box) > 0:
        np.random.shuffle(box)
        # Apply the resize and the paste offset.
        box[:, [0, 2]] = box[:, [0, 2]] * nw / iw + dx
        box[:, [1, 3]] = box[:, [1, 3]] * nh / ih + dy
        # Apply the flip.
        if flip:
            box[:, [0, 2]] = w - box[:, [2, 0]]
        # Clamp boxes that now extend outside the image.
        box[:, 0:2][box[:, 0:2] < 0] = 0
        box[:, 2][box[:, 2] > w] = w
        box[:, 3][box[:, 3] > h] = h
        box_w = box[:, 2] - box[:, 0]
        box_h = box[:, 3] - box[:, 1]
        box = box[np.logical_and(box_w > 1, box_h > 1)]  # discard invalid boxes
        if len(box) > max_boxes:
            box = box[:max_boxes]
        box_data[:len(box)] = box

    return image_data, box_data


def normal_(annotation_line, input_shape):
    """Load the original image and its boxes without augmentation.

    :param annotation_line: the selected line of the dataset file
    :param input_shape: input size (unused here)
    :return: (image, box)
    """
    line = annotation_line.split()  # split on whitespace
    image = Image.open(line[0])  # the image for this line
    # Every target box on this image.
    box = np.array([np.array(list(map(int, box.split(',')))) for box in line[1:]])
    return image, box


if __name__ == "__main__":
    with open("2007_train.txt") as f:
        lines = f.readlines()
    a = np.random.randint(0, len(lines))
    line = lines[a]  # augment the image on line a of the dataset

    # Show the original image with its boxes.
    image_data, box_data = normal_(line, [416, 416])
    img = image_data
    for j in range(len(box_data)):
        thickness = 3
        left, top, right, bottom = box_data[j][0:4]
        draw = ImageDraw.Draw(img)
        for i in range(thickness):
            draw.rectangle([left + i, top + i, right - i, bottom - i], outline=(255, 255, 255))
    img.show()

    # Show the image after augmentation.
    image_data, box_data = get_random_data(line, [416, 416])
    print(box_data)
    img = Image.fromarray((image_data * 255).astype(np.uint8))
    for j in range(len(box_data)):
        thickness = 3
        left, top, right, bottom = box_data[j][0:4]
        draw = ImageDraw.Draw(img)  # create the drawing object
        for i in range(thickness):
            draw.rectangle([left + i, top + i, right - i, bottom - i], outline=(255, 255, 255))
    img.show()
```
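The box bookkeeping in `get_random_data` (resize, paste offset, optional flip, clamping, and discarding degenerate boxes) is easiest to verify with fixed numbers instead of random ones. The sketch below pulls that arithmetic into a standalone function; the name `adjust_boxes` and the sample values are assumptions chosen for illustration.

```python
import numpy as np


def adjust_boxes(boxes, iw, ih, nw, nh, dx, dy, w, h, flip):
    """Map x1,y1,x2,y2 boxes from an iw x ih image onto a w x h canvas.

    The image is assumed to have been resized to nw x nh, pasted at (dx, dy),
    and optionally flipped left-right, as in get_random_data.
    """
    boxes = np.asarray(boxes, dtype=np.float64).copy()

    # Resize, then shift by the paste offset.
    boxes[:, [0, 2]] = boxes[:, [0, 2]] * nw / iw + dx
    boxes[:, [1, 3]] = boxes[:, [1, 3]] * nh / ih + dy

    # A left-right flip mirrors x and swaps the roles of x1 and x2.
    if flip:
        boxes[:, [0, 2]] = w - boxes[:, [2, 0]]

    # Clamp to the canvas.
    boxes[:, 0:2] = np.maximum(boxes[:, 0:2], 0)
    boxes[:, 2] = np.minimum(boxes[:, 2], w)
    boxes[:, 3] = np.minimum(boxes[:, 3], h)

    # Discard boxes that collapsed to (almost) nothing.
    box_w = boxes[:, 2] - boxes[:, 0]
    box_h = boxes[:, 3] - boxes[:, 1]
    return boxes[(box_w > 1) & (box_h > 1)]


# An 800x600 image halved to 400x300 and pasted at (8, 58) on a 416x416 canvas.
src = [[100, 50, 300, 250, 0]]
plain = adjust_boxes(src, 800, 600, 400, 300, 8, 58, 416, 416, flip=False)
flipped = adjust_boxes(src, 800, 600, 400, 300, 8, 58, 416, 416, flip=True)
print(plain)    # x: 100*0.5+8=58, 300*0.5+8=158; y: 50*0.5+58=83, 250*0.5+58=183
print(flipped)  # x1 = 416-158 = 258, x2 = 416-58 = 358
```

Unlike the original, which writes into an integer array and so truncates coordinates, this sketch works in floating point; that difference is a deliberate simplification.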
