ELK log collection: Filebeat, Logstash, Elasticsearch

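Table of contents

- 1, Data collection engine: Logstash (listens on a port to receive data and forwards it to the destination)

- 2, Data source: Filebeat (sends log data to the listening port)

- 3, Data destination: Elasticsearch (indexes the received data)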

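1, Data collection engine: Logstash (listens on a port to receive data and forwards it to the destination)

- a, Start the receiving port

- b, Pipeline job

- c, Forward the received data to the Elasticsearch store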

1, Test Logstash:

    cmd > cd logstash-7.12.0
    cmd > logstash -e 'input { stdin { } } output { stdout {} }'

Type some text into this terminal and press Enter, then check that it gets printed back.

2, filebeat --> logstash --> console stdout

Use Logstash to collect the data sent by Filebeat.

2.1, Configure and start Logstash:

    logstash -f first-pipeline.conf --config.reload.automatic
    # append --config.test_and_exit to only validate the configuration and exit

first-pipeline.conf:

    input {
        beats {
            port => "5044"
        }
    }
    # The filter part of this file is commented out to indicate that it is
    # optional.
    # filter {
    #
    # }
    output {
        stdout { codec => rubydebug }
    }

3, The startup log looks like this:

    [2021-04-02T15:53:52,668][INFO ][logstash.javapipeline ][main] Pipeline Java execution initialization time {"seconds"=>0.51}
    [2021-04-02T15:53:52,684][INFO ][logstash.inputs.beats ][main] Starting input listener {:address=>"0.0.0.0:5044"}
    [2021-04-02T15:53:52,699][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}
    [2021-04-02T15:53:52,790][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
    [2021-04-02T15:53:52,807][INFO ][org.logstash.beats.Server][main][d8a9d29cbbead0a1f85f978a5c1bdc16ef566a6df07141bc471f85a33ec1ddce] Starting server on port: 5044
    [2021-04-02T15:53:52,966][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
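Before starting Filebeat, it can help to confirm that the beats input really accepts connections on port 5044 (the "Starting server on port: 5044" line in the log). The following is a minimal Python sketch assuming Logstash runs on localhost; is_port_open is a hypothetical helper, and a successful TCP connection only shows that something is listening on the port, not that it speaks the Beats protocol.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    This only proves that *something* is listening; it does not speak
    the Beats protocol.
    """
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # refused connections, timeouts and DNS failures are all OSError subclasses
        return False


if __name__ == "__main__":
    # 5044 is the port configured in the beats input of first-pipeline.conf
    print("beats input reachable:", is_port_open("localhost", 5044))
```

Run while Logstash is stopped it should report False, and once the pipeline is running it should report True.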

2, Data source: Filebeat (sends log data to the listening port)

Configure and start Filebeat: https://www.elastic.co/guide/en/beats/filebeat/7.12/filebeat-installation-configuration.html

1, Excerpt from D:/download/elk-stack/filebeat-7.11.2-windows-x86_64/README.md:

To get started with Filebeat, you need to set up Elasticsearch on your localhost first. After that, start Filebeat with:

    ./filebeat -c filebeat.yml -e

This will start Filebeat and send the data to your Elasticsearch instance. To load the dashboards for Filebeat into Kibana, run:

    ./filebeat setup -e

Relevant command-line flags:

    -d, --d string   Enable certain debug selectors
    -e, --e          Log to stderr and disable syslog/file output

2, filebeat.yml configuration:

    filebeat.config.modules:
      path: ${path.config}/modules.d/*.yml
      reload.enabled: true
      reload.period: 10s

    filebeat.inputs:
    - type: log
      close_inactive: 6h
      close_renamed: true
      paths:
        - D:\download\elk-stack\logstash-7.11.2\logstash-tutorial-dataset
        #- "/var/log/apache2/*"

    output.logstash:
      hosts: ["localhost:5044"]

3, After stopping Filebeat, delete its registry (the record of how far each file has already been read) so that the whole file is read again from the beginning, then restart it:

    rm -r data/registry
    ./filebeat -e -c filebeat.yml -d "publish"

Sample log lines (D:\download\elk-stack\logstash-7.11.2\logstash-tutorial-dataset):

    200.49.190.100 - - [04/Jan/2015:05:17:37 +0000] "GET /blog/tags/web HTTP/1.1" 200 44019 "-" "QS304 Profile/MIDP-2.0 Configuration/CLDC-1.1"
    86.1.76.62 - - [04/Jan/2015:05:30:37 +0000] "GET /style2.css HTTP/1.1" 200 4877 "http://www.semicomplete.com/projects/xdotool/" "Mozilla/5.0 (X11; Linux x86_64; rv:24.0) Gecko/20140205 Firefox/24.0 Iceweasel/24.3.0"
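Deleting the registry makes Filebeat start over because Filebeat remembers, per file, how far it has already read and resumes from that offset. The sketch below is a deliberately simplified Python model of that idea, not Filebeat's real registry format: the JSON state file and read_new_lines are hypothetical, and real Filebeat additionally tracks file identity, rotation and delivery acknowledgements.

```python
import json
import os


def read_new_lines(log_path: str, state_path: str) -> list[str]:
    """Return the complete lines appended to log_path since the last call.

    The byte offset reached so far is stored in a small JSON file
    (state_path), a toy stand-in for Filebeat's registry.
    """
    offsets = {}
    if os.path.exists(state_path):
        with open(state_path, "r", encoding="utf-8") as f:
            offsets = json.load(f)

    start = offsets.get(log_path, 0)  # no saved state -> read from the beginning
    with open(log_path, "rb") as f:
        f.seek(start)
        data = f.read()

    # Only consume complete lines; a partially written last line is left for later.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8").splitlines()

    offsets[log_path] = start + end
    with open(state_path, "w", encoding="utf-8") as f:
        json.dump(offsets, f)
    return lines
```

Deleting state_path here plays the role of deleting the registry directory: the next call starts again from offset 0 and returns every complete line in the file.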

3, Data destination: Elasticsearch (indexes the received data)


1, filebeat --> logstash --> elasticsearch

    logstash -f first-pipeline.conf --config.reload.automatic
    # append --config.test_and_exit to only validate the configuration and exit

first-pipeline.conf:

    input {
        beats {
            port => "5044"
        }
    }
    filter {
        grok {
            match => { "message" => "%{COMBINEDAPACHELOG}" }
        }
        geoip {
            source => "clientip"
        }
    }
    output {
        elasticsearch {
            hosts => [ "localhost:9200" ]
            user => "elastic"
            password => "123456"
        }
    }

1.1, Modify the Logstash config: add filter { grok { match => { "message" => "%{COMBINEDAPACHELOG}" } } }

Test grok expressions in the local Kibana (Dev Tools --> Grok Debugger): https://www.elastic.co/guide/en/kibana/7.12/xpack-grokdebugger.html
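To get a feel for what %{COMBINEDAPACHELOG} extracts from the sample lines, here is a Python regular expression that approximates it. This is a simplified sketch, not the exact grok definition (the real pattern is composed of many named sub-patterns, and details such as quote handling differ); the field names mirror those visible in the Logstash output (clientip, httpversion, ...). The Grok Debugger remains the authoritative check.

```python
import re

# Simplified stand-in for grok's %{COMBINEDAPACHELOG}; not its exact definition.
COMBINED_RE = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?:(?P<verb>\S+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?|-)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_combined(line: str) -> dict:
    """Parse one Apache combined-format line into a dict of named fields.

    Returns an empty dict when the line does not match (grok would instead
    tag such an event with _grokparsefailure).
    """
    m = COMBINED_RE.match(line)
    if not m:
        return {}
    # Drop optional groups that did not participate (e.g. verb for a "-" request)
    return {k: v for k, v in m.groupdict().items() if v is not None}


sample = ('86.1.76.62 - - [04/Jan/2015:05:30:37 +0000] "GET /style2.css HTTP/1.1" '
          '200 4877 "http://www.semicomplete.com/projects/xdotool/" '
          '"Mozilla/5.0 (X11; Linux x86_64; rv:24.0) Gecko/20140205 Firefox/24.0 Iceweasel/24.3.0"')
fields = parse_combined(sample)
print(fields["clientip"], fields["verb"], fields["response"], fields["httpversion"])
# → 86.1.76.62 GET 200 1.1
```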

Add another record: append a log line to the end of D:\download\elk-stack\logstash-7.11.2\logstash-tutorial-dataset and check whether the terminal running Logstash picks it up.
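Appending a line by hand works, but a small script makes the test easy to repeat. The Python sketch below builds one line in the same Apache combined format as the dataset and appends it; format_combined, append_sample_line and the request values are hypothetical. The line is newline-terminated, because Filebeat's log input waits for a trailing newline before treating a line as complete.

```python
from datetime import datetime, timezone


def format_combined(ip: str, when: datetime, request: str, status: int,
                    size: int, referrer: str = "-", agent: str = "-") -> str:
    """Build one Apache combined-log line in the sample dataset's format."""
    # e.g. 04/Jan/2015:05:30:37 +0000 (%b assumes the default C/English locale)
    ts = when.strftime("%d/%b/%Y:%H:%M:%S %z")
    return f'{ip} - - [{ts}] "{request}" {status} {size} "{referrer}" "{agent}"'


def append_sample_line(path: str, line: str) -> None:
    """Append one newline-terminated line without newline translation."""
    with open(path, "a", encoding="utf-8", newline="\n") as f:
        f.write(line + "\n")


# Example (hypothetical values; the path is the dataset file used in this article):
# line = format_combined("86.1.76.62", datetime.now(timezone.utc),
#                        "GET /style2.css HTTP/1.1", 200, 4877)
# append_sample_line(r"D:\download\elk-stack\logstash-7.11.2\logstash-tutorial-dataset", line)
```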

    …
    "clientip" => "86.1.76.62",
    "input" => {
        "type" => "log"
    },

1.2, Modify the Logstash config: add filter { grok { match => { "message" => "%{COMBINEDAPACHELOG}" } } geoip { source => "clientip" } }

The geoip filter resolves the geographic location of the client IP:

    …
    "clientip" => "86.1.76.62",
    "input" => {
        "type" => "log"
    },
    "httpversion" => "1.1",
    "geoip" => {
        "city_name" => "Burnley",
        "timezone" => "Europe/London",
        "longitude" => -2.2342,
        "country_code3" => "GB",
        "region_code" => "LAN",

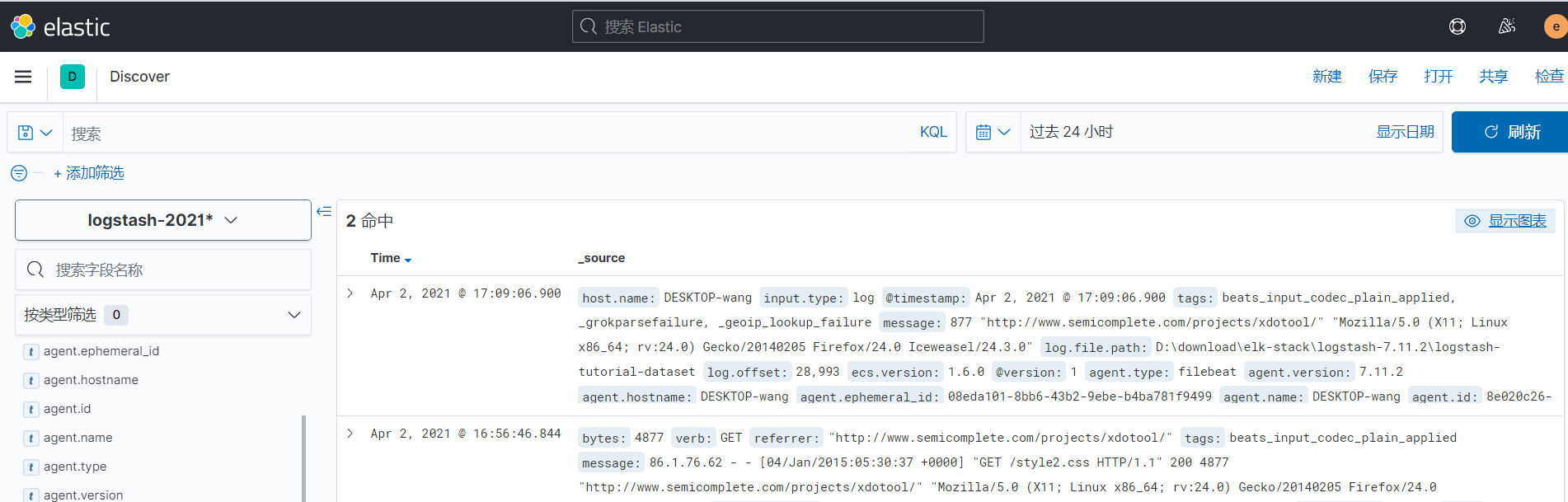

2, Check the console output: Logstash installed its index template and created the index logstash-2021.04.02-000001 in Elasticsearch (Installing elasticsearch template to _template/logstash, Creating rollover alias <logstash-{now/d}-000001>):

    [2021-04-02T16:49:02,269][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
    [2021-04-02T16:49:02,347][INFO ][logstash.outputs.elasticsearch][main] Attempting to install template {:manage_template=>{"index_patterns"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s", "number_of_shards"=>1, "index.lifecycle.name"=>"logstash-policy", "index.lifecycle.rollover_alias"=>"logstash"}, "mappings"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}
    [2021-04-02T16:49:02,375][INFO ][logstash.outputs.elasticsearch][main] Installing elasticsearch template to _template/logstash
    [2021-04-02T16:49:02,519][INFO ][logstash.outputs.elasticsearch][main] Creating rollover alias <logstash-{now/d}-000001>

3, Check the data in Elasticsearch:

    curl -u elastic:123456 "http://localhost:9200/_cat/indices?pretty&v=true"
    curl -u elastic:123456 -XGET 'localhost:9200/logstash-2021.04.02-000001/_search?pretty&q=response:200'
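The two curl checks can also be reproduced with Python's standard library, for example in a monitoring script. This is a sketch under assumptions: it targets the local cluster with the elastic/123456 credentials used above, build_request and fetch are hypothetical helpers, and the search uses Elasticsearch's URI search syntax field:value (here q=response:200). Building the request is kept separate from sending it so it can be checked without a running cluster.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_request(base_url: str, path: str, params: dict,
                  user: str, password: str) -> urllib.request.Request:
    """Build a GET request with HTTP Basic auth, like curl -u user:password."""
    url = f"{base_url.rstrip('/')}/{path.lstrip('/')}"
    query = urllib.parse.urlencode(params)
    if query:
        url += "?" + query
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


def fetch(req: urllib.request.Request, timeout: float = 10.0):
    """Send the request and return the body, parsed as JSON when possible."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = resp.read().decode("utf-8")
    try:
        return json.loads(body)
    except json.JSONDecodeError:
        # _cat APIs answer in plain text unless format=json is requested
        return body


# Example usage against a running cluster (not executed here):
# base = "http://localhost:9200"
# print(fetch(build_request(base, "_cat/indices", {"v": "true"}, "elastic", "123456")))
# result = fetch(build_request(base, "logstash-2021.04.02-000001/_search",
#                              {"q": "response:200"}, "elastic", "123456"))
# print(result["hits"]["total"])
```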

- Create a Kibana index pattern and view the data
