[Java Source Code Analysis] Hashtable Source Code Analysis

Class definition

    public class Hashtable<K,V> extends Dictionary<K,V> implements Map<K,V>, Cloneable, java.io.Serializable {}

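Although Hashtable and HashMap are very similar, note that their parent classes are different: Hashtable extends Dictionary, while HashMap extends AbstractMap. Both implement the Map interface.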
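A quick way to confirm the different parent classes on whatever JDK you run is to ask each class for its direct superclass via reflection. This is a minimal sketch; the class name HierarchyDemo is hypothetical.

```java
import java.util.HashMap;
import java.util.Hashtable;
import java.util.Map;

class HierarchyDemo {
    // Direct superclass of Hashtable (expected: java.util.Dictionary)
    static Class<?> hashtableParent() {
        return Hashtable.class.getSuperclass();
    }

    // Direct superclass of HashMap (expected: java.util.AbstractMap)
    static Class<?> hashMapParent() {
        return HashMap.class.getSuperclass();
    }

    // Both classes still implement the Map interface
    static boolean bothAreMaps() {
        return Map.class.isAssignableFrom(Hashtable.class)
                && Map.class.isAssignableFrom(HashMap.class);
    }

    public static void main(String[] args) {
        System.out.println(hashtableParent()); // class java.util.Dictionary
        System.out.println(hashMapParent());   // class java.util.AbstractMap
        System.out.println(bothAreMaps());     // true
    }
}
```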

- Like HashMap, Hashtable is a collection that maps keys to values. Neither keys nor values may be null, and any class used as a key must implement hashCode() and equals().

- Two parameters affect Hashtable's performance: the capacity and the load factor. This is the same as HashMap, and the default load factor is also 0.75.

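- The initial capacity trades space utilization against resizing cost. If it is very small, the table may be resized many times later (each resize allocates a new array, rehashes and relinks every entry, so it is relatively expensive); if it is very large, some memory is wasted. So, as with HashMap, it is best to estimate a reasonable initial size up front.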

- 迭代器创建并迭代的过程中同样是不允许修改HashTable结构的(比如增加或者删除数据),否则出现fail-fast,同时fail-fast虽然抛出了

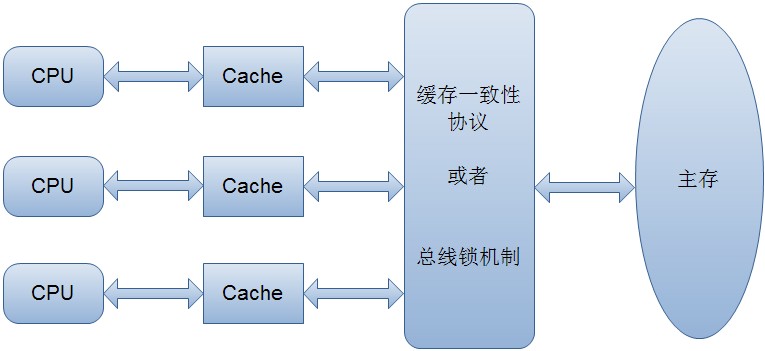

ConcurrentModificationException但是它并不能保证线程安全性 - HashTable是线程安全的如果程序本身不是多线程环境下运行,那么建议使用HashMap,如果是高并发环境下,建议使用

java.util.concurrent.ConcurrentHashMap,只有在一般并发环境下建议使用HashTable
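The null rule and the fail-fast behavior described in the list can be observed directly. The sketch below (class and method names are hypothetical) tries a null key and a null value, and then adds a new key to a Hashtable while iterating over its keySet(). Because fail-fast detection is best-effort, treat the second part as a demonstration rather than a guarantee to build on.

```java
import java.util.ConcurrentModificationException;
import java.util.HashMap;
import java.util.Hashtable;
import java.util.Iterator;
import java.util.Map;

class NullAndFailFastDemo {
    // True if map.put(key, value) throws NullPointerException
    static boolean rejectsPut(Map<String, String> map, String key, String value) {
        try {
            map.put(key, value);
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    // Adds a new key while iterating over keySet(); true if the iterator noticed
    static boolean failsFast() {
        Map<String, Integer> table = new Hashtable<>();
        table.put("a", 1);
        table.put("b", 2);
        try {
            Iterator<String> it = table.keySet().iterator();
            while (it.hasNext()) {
                it.next();
                table.put("c", 3); // structural change made outside the iterator
            }
            return false;
        } catch (ConcurrentModificationException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(rejectsPut(new Hashtable<>(), null, "v")); // true: null key
        System.out.println(rejectsPut(new Hashtable<>(), "k", null)); // true: null value
        System.out.println(rejectsPut(new HashMap<>(), null, null));  // false: HashMap accepts both
        System.out.println(failsFast());                              // true
    }
}
```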

Important member fields

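    private transient Entry<K,V>[] table; // backing array; each slot holds a singly linked chain
    private transient int count;          // number of entries actually stored
    private int threshold;                // resize threshold (capacity * loadFactor)
    private float loadFactor;             // load factor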

Constructors

    public Hashtable() {
        this(11, 0.75f);
    }

    public Hashtable(int initialCapacity) {
        this(initialCapacity, 0.75f);
    }

    public Hashtable(int initialCapacity, float loadFactor) {
        if (initialCapacity < 0)
            throw new IllegalArgumentException("Illegal Capacity: "+
                                               initialCapacity);
        if (loadFactor <= 0 || Float.isNaN(loadFactor))
            throw new IllegalArgumentException("Illegal Load: "+loadFactor);

        if (initialCapacity==0)
            initialCapacity = 1;
        this.loadFactor = loadFactor;
        table = new Entry[initialCapacity];
        threshold = (int)Math.min(initialCapacity * loadFactor, MAX_ARRAY_SIZE + 1);
        useAltHashing = sun.misc.VM.isBooted() &&
                (initialCapacity >= Holder.ALTERNATIVE_HASHING_THRESHOLD);
    }

    public Hashtable(Map<? extends K, ? extends V> t) {
        this(Math.max(2*t.size(), 11), 0.75f);
        putAll(t);
    }

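The constructors mirror HashMap's. The main difference is the default capacity: 11 for Hashtable versus 16 for HashMap. Note also that a requested capacity of 0 is bumped to 1, and that the copy constructor starts with a capacity of max(2 * t.size(), 11).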
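To make the numbers concrete, here is a small sketch that repeats the constructor arithmetic outside the class. CapacityMath and its methods are hypothetical names; MAX_ARRAY_SIZE is assumed to be Integer.MAX_VALUE - 8, the value the JDK's Hashtable uses.

```java
class CapacityMath {
    // Assumed to match the constant used by the JDK source quoted above
    static final int MAX_ARRAY_SIZE = Integer.MAX_VALUE - 8;

    // threshold = (int) min(capacity * loadFactor, MAX_ARRAY_SIZE + 1), as in the constructor
    static int threshold(int initialCapacity, float loadFactor) {
        if (initialCapacity == 0) initialCapacity = 1; // the constructor bumps 0 to 1
        return (int) Math.min(initialCapacity * loadFactor, MAX_ARRAY_SIZE + 1);
    }

    // Initial capacity chosen by Hashtable(Map t): max(2 * t.size(), 11)
    static int capacityForCopy(int sourceSize) {
        return Math.max(2 * sourceSize, 11);
    }

    public static void main(String[] args) {
        System.out.println(threshold(11, 0.75f)); // 8: the 9th new key triggers a rehash
        System.out.println(capacityForCopy(3));   // 11
        System.out.println(capacityForCopy(100)); // 200
    }
}
```

With the defaults, 11 * 0.75 = 8.25 truncates to 8, and because put() checks count >= threshold before inserting, the ninth distinct key is what causes the first resize.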

The hash function

    transient final int hashSeed = sun.misc.Hashing.randomHashSeed(this);

    private int hash(Object k) {
        if (useAltHashing) {
            if (k.getClass() == String.class) {
                return sun.misc.Hashing.stringHash32((String) k);
            } else {
                int h = hashSeed ^ k.hashCode();

                // This function ensures that hashCodes that differ only by
                // constant multiples at each bit position have a bounded
                // number of collisions (approximately 8 at default load factor).
                h ^= (h >>> 20) ^ (h >>> 12);
                return h ^ (h >>> 7) ^ (h >>> 4);
            }
        } else {
            return k.hashCode();
        }
    }

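The hash computation resembles HashMap's in JDK 7, with one important difference: in the default mode (useAltHashing is false) Hashtable simply returns k.hashCode(), and the supplemental bit mixing is applied only when alternative hashing is enabled, whereas JDK 7's HashMap always applies it. Hashtable can get away with this because it takes the bucket index with % rather than with a bit mask. Note also that hash() calls k.hashCode() directly, so a null key fails here with NullPointerException.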
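The sketch below contrasts the two ways of turning a hash into a bucket index. HashMixing and its methods are hypothetical names; mix() copies the supplemental mixing lines from the quoted hash() with hashSeed assumed to be 0, and maskIndex() stands in for HashMap's power-of-two indexing.

```java
class HashMixing {
    // Supplemental mixing from the quoted hash() (alternative-hashing branch,
    // hashSeed assumed to be 0 for illustration)
    static int mix(int h) {
        h ^= (h >>> 20) ^ (h >>> 12);
        return h ^ (h >>> 7) ^ (h >>> 4);
    }

    // Hashtable-style bucket index: clear the sign bit, then take the remainder
    static int moduloIndex(int hash, int length) {
        return (hash & 0x7FFFFFFF) % length;
    }

    // HashMap-style bucket index (power-of-two length): keep only the low bits
    static int maskIndex(int hash, int length) {
        return hash & (length - 1);
    }

    public static void main(String[] args) {
        int a = 0x10000, b = 0x20000; // differ only above the low 4 bits
        System.out.println(maskIndex(a, 16) + " " + maskIndex(b, 16));           // 0 0
        System.out.println(maskIndex(mix(a), 16) + " " + maskIndex(mix(b), 16)); // 1 2
        System.out.println(moduloIndex(a, 11) + " " + moduloIndex(b, 11));       // 9 7
    }
}
```

Without mixing, a bit mask ignores the high bits entirely, so both hashes collide in bucket 0; the remainder modulo 11 already depends on all bits, which is why Hashtable's default path can skip the mixing step.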

Checking whether a value exists

    public synchronized boolean contains(Object value) {
        if (value == null) {
            throw new NullPointerException();
        }

        Entry tab[] = table;
        for (int i = tab.length ; i-- > 0 ;) {
            for (Entry<K,V> e = tab[i] ; e != null ; e = e.next) {
                if (e.value.equals(value)) {
                    return true;
                }
            }
        }
        return false;
    }

    public boolean containsValue(Object value) {
        return contains(value);
    }

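As soon as value is null, a NullPointerException is thrown, which shows directly that Hashtable does not allow null values. Also, although the method is named contains(), it does exactly what containsValue() does: containsValue() simply delegates to it. Because values are not indexed, the check is a linear scan over every bucket and every chain.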
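A short check of the points above; ContainsDemo is a hypothetical name. It shows that contains() tests values (not keys), that it agrees with containsValue(), and that a null argument throws rather than returning false.

```java
import java.util.Hashtable;

class ContainsDemo {
    static Hashtable<String, Integer> sample() {
        Hashtable<String, Integer> t = new Hashtable<>();
        t.put("one", 1);
        t.put("two", 2);
        return t;
    }

    // contains(null) throws instead of returning false
    static boolean containsNullThrows() {
        try {
            sample().contains(null);
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        Hashtable<String, Integer> t = sample();
        System.out.println(t.contains(2));        // true: 2 is a value
        System.out.println(t.containsValue(2));   // true: same check
        System.out.println(t.contains("one"));    // false: contains() looks at values, not keys
        System.out.println(t.containsKey("one")); // true
        System.out.println(containsNullThrows()); // true
    }
}
```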

Checking whether a key exists

    public synchronized boolean containsKey(Object key) {
        Entry tab[] = table;
        int hash = hash(key);
        int index = (hash & 0x7FFFFFFF) % tab.length;
        for (Entry<K,V> e = tab[index] ; e != null ; e = e.next) {
            if ((e.hash == hash) && e.key.equals(key)) {
                return true;
            }
        }
        return false;
    }

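This returns true if and only if the given key is present, but note that "present" is decided by the key's equals() method (after the stored hash matches). This is consistent with the requirement stated at the beginning that keys stored in a Hashtable implement hashCode() and equals(). The purpose of (hash & 0x7FFFFFFF) is to make the hash non-negative: 0x7FFFFFFF has its highest (32nd) bit set to 0 and all other bits set to 1, so the AND clears the sign bit. This matters because Java's % returns a negative result for a negative dividend, which would be an invalid array index.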
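Here is a small sketch of why the mask is used instead of the more obvious Math.abs(). SignMaskDemo and its methods are hypothetical names; maskedIndex() repeats the index expression from containsKey().

```java
class SignMaskDemo {
    // Hashtable's approach: clearing bit 31 always yields a value in [0, Integer.MAX_VALUE]
    static int maskedIndex(int hash, int length) {
        return (hash & 0x7FFFFFFF) % length;
    }

    // A tempting alternative that breaks: Math.abs(Integer.MIN_VALUE) is still negative
    static int absIndex(int hash, int length) {
        return Math.abs(hash) % length;
    }

    public static void main(String[] args) {
        System.out.println(-1 % 11);                            // -1: Java's % keeps the sign
        System.out.println(maskedIndex(-1, 11));                // 1
        System.out.println(maskedIndex(Integer.MIN_VALUE, 11)); // 0
        System.out.println(absIndex(Integer.MIN_VALUE, 11));    // -2: an invalid array index
    }
}
```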

Looking up a value by key

    public synchronized V get(Object key) {
        Entry tab[] = table;
        int hash = hash(key);
        int index = (hash & 0x7FFFFFFF) % tab.length;
        for (Entry<K,V> e = tab[index] ; e != null ; e = e.next) {
            if ((e.hash == hash) && e.key.equals(key)) {
                return e.value;
            }
        }
        return null;
    }

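This works the same way as in HashMap (before JDK 8 introduced tree bins), because the underlying structure is the same: an array in which each slot holds a singly linked list. If the key has a mapping in the Hashtable, the value is returned; otherwise get() returns null.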
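Because get() matches on hash and then equals(), a key class that keeps Object's identity-based equals() cannot be found with a different but "equal-looking" instance. The two key classes below are hypothetical, written only to show the difference.

```java
import java.util.Hashtable;

class KeyEqualityDemo {
    // Hypothetical key that does NOT override equals/hashCode (identity semantics)
    static final class RawKey {
        final String id;
        RawKey(String id) { this.id = id; }
    }

    // Hypothetical key with value semantics: equal ids mean equal keys
    static final class ValueKey {
        final String id;
        ValueKey(String id) { this.id = id; }

        @Override public boolean equals(Object o) {
            return o instanceof ValueKey && ((ValueKey) o).id.equals(id);
        }

        @Override public int hashCode() { return id.hashCode(); }
    }

    static Integer lookupRaw() {
        Hashtable<RawKey, Integer> t = new Hashtable<>();
        t.put(new RawKey("a"), 1);
        return t.get(new RawKey("a")); // different instance: identity equals() fails
    }

    static Integer lookupValue() {
        Hashtable<ValueKey, Integer> t = new Hashtable<>();
        t.put(new ValueKey("a"), 1);
        return t.get(new ValueKey("a")); // equal id: found
    }

    public static void main(String[] args) {
        System.out.println(lookupRaw());   // null
        System.out.println(lookupValue()); // 1
    }
}
```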

Resizing

    protected void rehash() {
        int oldCapacity = table.length;
        Entry<K,V>[] oldMap = table;

        // overflow-conscious code
        int newCapacity = (oldCapacity << 1) + 1;
        if (newCapacity - MAX_ARRAY_SIZE > 0) {
            if (oldCapacity == MAX_ARRAY_SIZE)
                // Keep running with MAX_ARRAY_SIZE buckets
                return;
            newCapacity = MAX_ARRAY_SIZE;
        }
        Entry<K,V>[] newMap = new Entry[newCapacity];

        modCount++;
        threshold = (int)Math.min(newCapacity * loadFactor, MAX_ARRAY_SIZE + 1);
        boolean currentAltHashing = useAltHashing;
        useAltHashing = sun.misc.VM.isBooted() &&
                (newCapacity >= Holder.ALTERNATIVE_HASHING_THRESHOLD);
        boolean rehash = currentAltHashing ^ useAltHashing;

        table = newMap;

        for (int i = oldCapacity ; i-- > 0 ;) {
            for (Entry<K,V> old = oldMap[i] ; old != null ; ) {
                Entry<K,V> e = old;
                old = old.next;

                if (rehash) {
                    e.hash = hash(e.key);
                }
                int index = (e.hash & 0x7FFFFFFF) % newCapacity;
                e.next = newMap[index];
                newMap[index] = e;
            }
        }
    }

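When the number of stored entries reaches the threshold, i.e. the capacity (the length of table) times the load factor, the table is resized: put() checks count >= threshold before adding a new entry. Resizing is automatic but relatively expensive. The new capacity is twice the old capacity plus one, capped at MAX_ARRAY_SIZE. A comparison with HashMap makes this easy to understand: HashMap's capacity is always a power of two (16 by default), so it simply doubles. Hashtable starts at the odd number 11; doubling gives an even number, so 1 is added. Hashtable does not actually require an odd capacity (you may pass an even initial capacity), but since 2n + 1 is always odd, every capacity after the first resize is odd. Also note that the loop inserts each moved entry at the head of its new bucket, so the order of entries within a chain can change.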
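The growth sequence is easy to tabulate by repeating the capacity step of rehash() in isolation. GrowthDemo is a hypothetical name, and MAX_ARRAY_SIZE is assumed to be Integer.MAX_VALUE - 8 as in the JDK; the overflow-conscious comparison is kept exactly as in the quoted code.

```java
import java.util.Arrays;

class GrowthDemo {
    static final int MAX_ARRAY_SIZE = Integer.MAX_VALUE - 8;

    // Mirrors the capacity step of the quoted rehash(): 2n + 1, capped at MAX_ARRAY_SIZE
    static int grow(int oldCapacity) {
        int newCapacity = (oldCapacity << 1) + 1;
        if (newCapacity - MAX_ARRAY_SIZE > 0) { // also true when the shift overflowed
            if (oldCapacity == MAX_ARRAY_SIZE) {
                return oldCapacity; // already at the cap: keep the current size
            }
            newCapacity = MAX_ARRAY_SIZE;
        }
        return newCapacity;
    }

    // The first n capacities, starting from the given initial capacity
    static int[] capacities(int initial, int n) {
        int[] out = new int[n];
        out[0] = initial;
        for (int i = 1; i < n; i++) {
            out[i] = grow(out[i - 1]);
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(capacities(11, 5))); // [11, 23, 47, 95, 191]
        System.out.println(Arrays.toString(capacities(10, 3))); // [10, 21, 43]
    }
}
```

Starting from an even capacity such as 10 shows the point made above: only the initial value can be even.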

Adding a key-value pair

    public synchronized V put(K key, V value) {
        // Make sure the value is not null
        if (value == null) {
            throw new NullPointerException();
        }

        // Makes sure the key is not already in the hashtable.
        Entry tab[] = table;
        int hash = hash(key);
        int index = (hash & 0x7FFFFFFF) % tab.length;
        for (Entry<K,V> e = tab[index] ; e != null ; e = e.next) {
            if ((e.hash == hash) && e.key.equals(key)) {
                V old = e.value;
                e.value = value;
                return old;
            }
        }

        modCount++;
        if (count >= threshold) {
            // Rehash the table if the threshold is exceeded
            rehash();

            tab = table;
            hash = hash(key);
            index = (hash & 0x7FFFFFFF) % tab.length;
        }

        // Creates the new entry.
        Entry<K,V> e = tab[index];
        tab[index] = new Entry<>(hash, key, value, e);
        count++;
        return null;
    }

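Note that neither the key nor the value may be null: a null value is rejected explicitly, and a null key fails with NullPointerException when hash(key) calls key.hashCode(). If the given key already exists, its value is replaced and the old value is returned. Otherwise the new pair is added at the head of its bucket and null is returned; if, at that point, the number of entries has already reached the threshold, the table is rehashed first and the bucket index is recomputed against the new table.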
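To see the put/rehash interplay in isolation, here is a deliberately simplified re-implementation. MiniTable is a hypothetical class, not the JDK's: it follows the quoted logic (replace on match, otherwise rehash when count >= threshold, then head insertion) but has no synchronization, no modCount, no alternative hashing and a fixed 0.75 load factor.

```java
import java.util.Objects;

// Hypothetical, simplified sketch of the quoted put()/rehash() logic
class MiniTable<K, V> {
    private static final class Entry<K, V> {
        final int hash;
        final K key;
        V value;
        Entry<K, V> next;

        Entry(int hash, K key, V value, Entry<K, V> next) {
            this.hash = hash; this.key = key; this.value = value; this.next = next;
        }
    }

    private final float loadFactor = 0.75f;
    private Entry<K, V>[] table;
    private int count;
    private int threshold;

    @SuppressWarnings("unchecked")
    MiniTable(int initialCapacity) {
        table = (Entry<K, V>[]) new Entry[Math.max(initialCapacity, 1)];
        threshold = (int) (table.length * loadFactor);
    }

    V put(K key, V value) {
        Objects.requireNonNull(value);   // null values rejected, as in Hashtable
        int hash = key.hashCode();       // a null key throws NullPointerException here
        int index = (hash & 0x7FFFFFFF) % table.length;
        for (Entry<K, V> e = table[index]; e != null; e = e.next) {
            if (e.hash == hash && e.key.equals(key)) {
                V old = e.value;         // existing key: replace and return old value
                e.value = value;
                return old;
            }
        }
        if (count >= threshold) {        // grow before inserting the new entry
            rehash();
            index = (hash & 0x7FFFFFFF) % table.length;
        }
        table[index] = new Entry<>(hash, key, value, table[index]); // head insertion
        count++;
        return null;
    }

    V get(Object key) {
        int hash = key.hashCode();
        int index = (hash & 0x7FFFFFFF) % table.length;
        for (Entry<K, V> e = table[index]; e != null; e = e.next) {
            if (e.hash == hash && e.key.equals(key)) return e.value;
        }
        return null;
    }

    @SuppressWarnings("unchecked")
    private void rehash() {
        Entry<K, V>[] old = table;
        Entry<K, V>[] fresh = (Entry<K, V>[]) new Entry[(old.length << 1) + 1];
        for (int i = old.length; i-- > 0; ) {
            for (Entry<K, V> e = old[i]; e != null; ) {
                Entry<K, V> next = e.next;   // remember the rest of the old chain
                int index = (e.hash & 0x7FFFFFFF) % fresh.length;
                e.next = fresh[index];       // relink at the head of the new bucket
                fresh[index] = e;
                e = next;
            }
        }
        table = fresh;
        threshold = (int) (fresh.length * loadFactor);
    }

    int capacity() { return table.length; }
    int size() { return count; }
}
```

With an initial capacity of 11 the threshold is 8, so eight distinct keys fit and the ninth grows the table to 23.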

Removing a key-value pair

    public synchronized V remove(Object key) {
        Entry tab[] = table;
        int hash = hash(key);
        int index = (hash & 0x7FFFFFFF) % tab.length;
        for (Entry<K,V> e = tab[index], prev = null ; e != null ; prev = e, e = e.next) {
            if ((e.hash == hash) && e.key.equals(key)) {
                modCount++;
                if (prev != null) {
                    prev.next = e.next;
                } else {
                    tab[index] = e.next;
                }
                count--;
                V oldValue = e.value;
                e.value = null;
                return oldValue;
            }
        }
        return null;
    }

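If an entry for the given key is found, it is removed and its old value is returned; if not, nothing happens and null is returned. The removal itself is ordinary singly-linked-list node deletion: prev tracks the preceding node, so the entry is unlinked with prev.next = e.next, or, when it is the first node of the chain, by pointing the bucket at e.next.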
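The prev/e walk can be tried on its own with a bare chain. ChainRemoval and its Node class are hypothetical stand-ins for Hashtable.Entry; remove() returns the new head so the caller can update the bucket, which is the job tab[index] = e.next does in the quoted code.

```java
class ChainRemoval {
    // Hypothetical node mirroring the next pointer of Hashtable.Entry
    static final class Node {
        final String key;
        Node next;
        Node(String key, Node next) { this.key = key; this.next = next; }
    }

    // Builds a chain whose order matches the arguments
    static Node chain(String... keys) {
        Node head = null;
        for (int i = keys.length - 1; i >= 0; i--) head = new Node(keys[i], head);
        return head;
    }

    // Same prev/e walk as the quoted remove(); returns the (possibly new) head
    static Node remove(Node head, String key) {
        for (Node e = head, prev = null; e != null; prev = e, e = e.next) {
            if (e.key.equals(key)) {
                if (prev != null) {
                    prev.next = e.next; // unlink from the middle or the tail
                    return head;
                }
                return e.next;          // removing the first node: bucket now starts at e.next
            }
        }
        return head;                    // not found: chain unchanged
    }

    static String keys(Node head) {
        StringBuilder sb = new StringBuilder();
        for (Node e = head; e != null; e = e.next) sb.append(e.key);
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(keys(remove(chain("a", "b", "c"), "b"))); // ac
        System.out.println(keys(remove(chain("a", "b", "c"), "a"))); // bc
        System.out.println(keys(remove(chain("a", "b", "c"), "x"))); // abc
    }
}
```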

Clearing

    public synchronized void clear() {
        Entry tab[] = table;
        modCount++;
        for (int index = tab.length; --index >= 0; )
            tab[index] = null;
        count = 0;
    }

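As with HashMap, clearing only sets every slot to null and leaves the rest to the garbage collector. The array itself is kept, so the capacity does not shrink.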

Comparing two Hashtables for equality

    public synchronized boolean equals(Object o) {
        if (o == this)
            return true;

        if (!(o instanceof Map))
            return false;
        Map<K,V> t = (Map<K,V>) o;
        if (t.size() != size())
            return false;

        try {
            Iterator<Map.Entry<K,V>> i = entrySet().iterator();
            while (i.hasNext()) {
                Map.Entry<K,V> e = i.next();
                K key = e.getKey();
                V value = e.getValue();
                if (value == null) {
                    if (!(t.get(key)==null && t.containsKey(key)))
                        return false;
                } else {
                    if (!value.equals(t.get(key)))
                        return false;
                }
            }
        } catch (ClassCastException unused)   {
            return false;
        } catch (NullPointerException unused) {
            return false;
        }

        return true;
    }

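It first compares sizes. If they are equal, it checks every entry in this table's entry set against the other map: true is returned only if each key maps to an equal value there, otherwise false. Because a Hashtable does not store its data in any particular order, the comparison looks up each value with get(key) instead of walking both tables side by side. Since the argument only has to be a Map, a Hashtable can be equal to a HashMap or any other Map holding the same mappings.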
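A short illustration of the cross-implementation equality described above; EqualsDemo and fill() are hypothetical names. The same two mappings are put into a Hashtable, a HashMap and a TreeMap, and equals() compares only the mappings, not the class or the iteration order.

```java
import java.util.HashMap;
import java.util.Hashtable;
import java.util.Map;
import java.util.TreeMap;

class EqualsDemo {
    static Map<String, Integer> fill(Map<String, Integer> m) {
        m.put("x", 1);
        m.put("y", 2);
        return m;
    }

    public static void main(String[] args) {
        Map<String, Integer> table = fill(new Hashtable<>());
        Map<String, Integer> hash = fill(new HashMap<>());
        Map<String, Integer> tree = fill(new TreeMap<>());
        System.out.println(table.equals(hash)); // true: same mappings, different classes
        System.out.println(table.equals(tree)); // true: iteration order does not matter
        hash.put("y", 3);
        System.out.println(table.equals(hash)); // false: same size, but "y" maps to another value
    }
}
```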

Computing the Hashtable's hashCode

    public synchronized int hashCode() {
        /*
         * This code detects the recursion caused by computing the hash code
         * of a self-referential hash table and prevents the stack overflow
         * that would otherwise result.  This allows certain 1.1-era
         * applets with self-referential hash tables to work.  This code
         * abuses the loadFactor field to do double-duty as a hashCode
         * in progress flag, so as not to worsen the space performance.
         * A negative load factor indicates that hash code computation is
         * in progress.
         */
        int h = 0;
        if (count == 0 || loadFactor < 0)
            return h;  // Returns zero

        loadFactor = -loadFactor;  // Mark hashCode computation in progress
        Entry[] tab = table;
        for (Entry<K,V> entry : tab)
            while (entry != null) {
                h += entry.hashCode();
                entry = entry.next;
            }
        loadFactor = -loadFactor;  // Mark hashCode computation complete

        return h;
    }

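The Hashtable's hashCode is computed from its entries: it is the sum of the hashCode() values of all entries (each entry's hashCode XORs its stored hash with the value's hashCode). loadFactor doubles as a flag marking the start and end of the computation: it is negated while the sum is being computed, so if the table contains itself, the nested hashCode() call sees a negative loadFactor and returns 0 instead of recursing until the stack overflows. As for whether h += entry.hashCode() can overflow: it can. The & 0x7FFFFFFF mask seen in containsKey(), get() and the other methods is applied only when computing a bucket index; the stored hash and entry.hashCode() may well be negative, and their sum may exceed the int range. This is harmless, because Java int addition silently wraps around and a hashCode may be any int value, including a negative one.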
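The three claims above (wrap-around is harmless, the result matches the usual Map hashCode, and self-reference does not recurse forever) can be checked with the sketch below. HashCodeDemo and its methods are hypothetical names; the HashMap comparison assumes the default hashing mode, where an entry's stored hash equals key.hashCode().

```java
import java.util.HashMap;
import java.util.Hashtable;
import java.util.Map;

class HashCodeDemo {
    // int addition wraps around silently in Java; it never throws
    static int wrappedSum() {
        return Integer.MAX_VALUE + 1;
    }

    // Same mappings, so the entry-hash sums should agree with HashMap's
    static boolean matchesHashMap() {
        Map<String, Integer> source = new HashMap<>();
        for (int i = 0; i < 100; i++) source.put("key" + i, i * 1_000_003);
        return new Hashtable<>(source).hashCode() == source.hashCode();
    }

    // A table containing itself as a value: the negative-loadFactor guard makes
    // the inner hashCode() return 0, so the result is "self".hashCode() ^ 0
    static int selfReferentialHash() {
        Hashtable<String, Object> t = new Hashtable<>();
        t.put("self", t);
        return t.hashCode();
    }

    public static void main(String[] args) {
        System.out.println(wrappedSum() == Integer.MIN_VALUE);            // true
        System.out.println(matchesHashMap());                             // true
        System.out.println(selfReferentialHash() == "self".hashCode());   // true
    }
}
```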

Serialization and deserialization

    private void writeObject(java.io.ObjectOutputStream s)
            throws IOException {
        Entry<K, V> entryStack = null;

        synchronized (this) {
            // Write out the length, threshold, loadfactor
            s.defaultWriteObject();

            // Write out length, count of elements
            s.writeInt(table.length);
            s.writeInt(count);

            // Stack copies of the entries in the table
            for (int index = 0; index < table.length; index++) {
                Entry<K,V> entry = table[index];

                while (entry != null) {
                    entryStack =
                        new Entry<>(0, entry.key, entry.value, entryStack);
                    entry = entry.next;
                }
            }
        }

        // Write out the key/value objects from the stacked entries
        while (entryStack != null) {
            s.writeObject(entryStack.key);
            s.writeObject(entryStack.value);
            entryStack = entryStack.next;
        }
    }

    private void readObject(java.io.ObjectInputStream s)
         throws IOException, ClassNotFoundException
    {
        // Read in the length, threshold, and loadfactor
        s.defaultReadObject();

        // set hashSeed
        UNSAFE.putIntVolatile(this, HASHSEED_OFFSET,
                sun.misc.Hashing.randomHashSeed(this));

        // Read the original length of the array and number of elements
        int origlength = s.readInt();
        int elements = s.readInt();

        // Compute new size with a bit of room 5% to grow but
        // no larger than the original size.  Make the length
        // odd if it's large enough, this helps distribute the entries.
        // Guard against the length ending up zero, that's not valid.
        int length = (int)(elements * loadFactor) + (elements / 20) + 3;
        if (length > elements && (length & 1) == 0)
            length--;
        if (origlength > 0 && length > origlength)
            length = origlength;

        Entry<K,V>[] table = new Entry[length];
        threshold = (int) Math.min(length * loadFactor, MAX_ARRAY_SIZE + 1);
        count = 0;
        useAltHashing = sun.misc.VM.isBooted() &&
                (length >= Holder.ALTERNATIVE_HASHING_THRESHOLD);

        // Read the number of elements and then all the key/value objects
        for (; elements > 0; elements--) {
            K key = (K)s.readObject();
            V value = (V)s.readObject();
            // synch could be eliminated for performance
            reconstitutionPut(table, key, value);
        }
        this.table = table;
    }

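Note that deserialization does not rebuild a Hashtable identical to the one that was serialized. Instead, it sizes a new array from the actual element count and the load factor; this is the length computation under the comment in readObject(). The length starts from elements × loadFactor, adds roughly 5% of the element count (elements / 20) plus 3 as room to grow, is decremented to an odd number if it is even and larger than elements, and is finally capped so that it never exceeds the original length, since a larger array would serve no purpose. In other words, the 5% headroom is relative to the number of elements, not to the original capacity. The comment also explains why an odd length is preferred: it helps distribute entries more evenly when the index is taken with %. HashMap's power-of-two capacity serves a different purpose: it allows the index to be computed with a cheap bit mask, h & (length - 1), with the supplemental hash mixing compensating for the fact that a mask only looks at the low bits.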
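The sizing rule is easier to judge with numbers. The sketch below repeats the length formula from the quoted JDK 7 readObject() (newer JDKs may compute it differently) and also round-trips a table through Java serialization to show that the contents survive even though the internal array length may change. ReadObjectSizing and its methods are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.util.Hashtable;

class ReadObjectSizing {
    // Mirrors the length computation in the quoted (JDK 7) readObject()
    static int newLength(int origLength, int elements, float loadFactor) {
        int length = (int) (elements * loadFactor) + (elements / 20) + 3;
        if (length > elements && (length & 1) == 0)
            length--;                    // make it odd when it exceeds elements
        if (origLength > 0 && length > origLength)
            length = origLength;         // never larger than the serialized table
        return length;
    }

    @SuppressWarnings("unchecked")
    static Hashtable<String, Integer> roundTrip(Hashtable<String, Integer> t)
            throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(t);
        }
        try (ObjectInputStream in =
                 new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            return (Hashtable<String, Integer>) in.readObject();
        }
    }

    // True if a 100-entry table survives serialization with equal contents
    static boolean roundTripOk() {
        Hashtable<String, Integer> original = new Hashtable<>();
        for (int i = 0; i < 100; i++) original.put("k" + i, i);
        try {
            return roundTrip(original).equals(original);
        } catch (IOException | ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(newLength(191, 100, 0.75f)); // 83
        System.out.println(newLength(11, 5, 0.75f));    // 5
        System.out.println(roundTripOk());              // true
    }
}
```

One consequence worth noticing: with 100 elements and the default load factor, the formula yields 83 buckets and a threshold of (int)(83 * 0.75) = 62, which is below the restored count of 100. Since reconstitutionPut() does not resize, the next put() of a new key will trigger a rehash.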
