Notes on Open-Source Face Recognition Networks

https://github.com/HaoSir/ECCV-2020-Fair-Face-Recognition-challenge_2nd_place_solution-ustc-nelslip-

Spherical Confidence Learning for Face Recognition


Authors | Shen Li, Jianqing Xu, Xiaqing Xu, Pengcheng Shen, Shaoxin Li, Bryan Hooi

Affiliations | National University of Singapore; Tencent Youtu Lab; Aibee

Paper | https://openaccess.thecvf.com/content/CVPR2021/papers/Li_Spherical_Confidence_Learning_for_Face_Recognition_CVPR_2021_paper.pdf

Code | https://github.com/MathsShen/SCF/

Note | CVPR 2021 oral

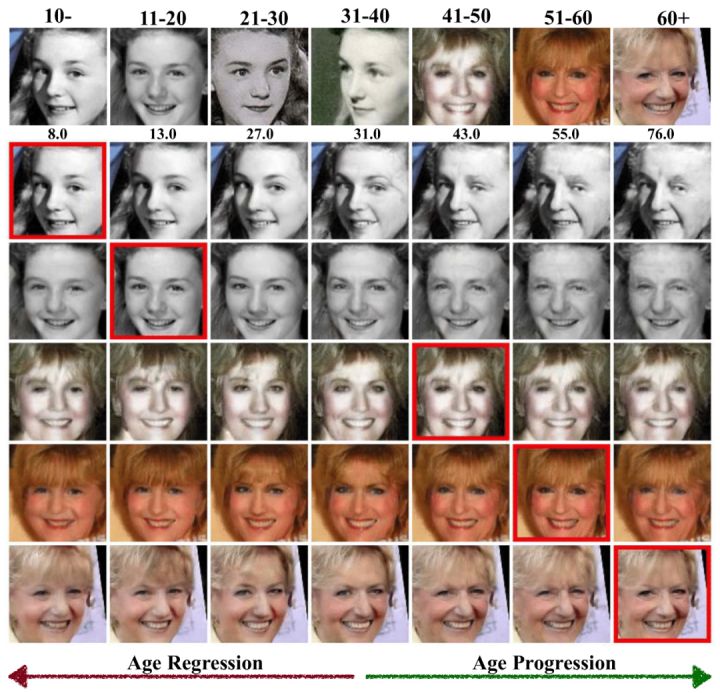

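This paper proposes MTLFace, a multi-task learning framework that jointly performs age-invariant face recognition (AIFR) and face age synthesis (FAS). It introduces two new modules: attention-based feature decomposition (AFD), which splits face features into age-related and identity-related components, and the identity conditional module (ICM), which performs identity-level face age synthesis. Extensive face recognition experiments on cross-age and general benchmark datasets demonstrate the superiority of the proposed method.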

Authors | Zhizhong Huang, Junping Zhang, Hongming Shan

Affiliation | Fudan University

Paper | https://arxiv.org/abs/2103.01520

Code | https://github.com/Hzzone/MTLFace

Note | CVPR 2021 oral
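To make the AFD idea above concrete, here is a minimal sketch of splitting a feature vector into an age part and an identity part with a soft mask. It is an illustration under assumptions, not the MTLFace implementation: the function names `sigmoid` and `decompose_features` are hypothetical, the `attention_scores` are made-up numbers standing in for the output of a learned attention module, and plain Python lists stand in for convolutional feature maps.

```python
import math


def sigmoid(value):
    """Squash a raw attention score into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))


def decompose_features(features, attention_scores):
    """Split each feature into an age part and an identity part with a soft mask.

    Assumption: the mask weights the age part and (1 - mask) weights the
    identity part, so the two parts always sum back to the input feature.
    """
    age_part, identity_part = [], []
    for x, score in zip(features, attention_scores):
        mask = sigmoid(score)
        age_part.append(x * mask)
        identity_part.append(x * (1.0 - mask))
    return age_part, identity_part


features = [0.9, -0.4, 1.5]
attention_scores = [2.0, -1.0, 0.0]  # hypothetical attention outputs
age_part, identity_part = decompose_features(features, attention_scores)
```

Because the two masks sum to one, no information is discarded by the split; in this sketch the identity part would feed the recognition task and the age part would feed age estimation.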

https://blog.csdn.net/moxibingdao/article/details/108722683

    import math

    import torch
    import torch.nn as nn
    from torch.nn import Parameter


    def l2_norm(input, axis=1):
        # Helper the snippet relies on but does not show; assumed to
        # L2-normalize `input` along `axis`.
        norm = torch.norm(input, 2, axis, True)
        return torch.div(input, norm)


    class CurricularFace(nn.Module):
        r"""Implementation of CurricularFace (https://arxiv.org/pdf/2004.00288.pdf).

        Args:
            in_features: size of each input sample
            out_features: size of each output sample
            m: margin
            s: scale of outputs
        """

        def __init__(self, in_features, out_features, m=0.5, s=64.):
            super(CurricularFace, self).__init__()
            self.in_features = in_features
            self.out_features = out_features
            self.m = m
            self.s = s
            self.cos_m = math.cos(m)
            self.sin_m = math.sin(m)
            self.threshold = math.cos(math.pi - m)
            self.mm = math.sin(math.pi - m) * m
            self.kernel = Parameter(torch.Tensor(in_features, out_features))
            # Running average of the target cosines; controls how strongly
            # hard negatives are weighted.
            self.register_buffer('t', torch.zeros(1))
            nn.init.normal_(self.kernel, std=0.01)

        def forward(self, embbedings, label):
            embbedings = l2_norm(embbedings, axis=1)
            kernel_norm = l2_norm(self.kernel, axis=0)
            cos_theta = torch.mm(embbedings, kernel_norm)
            cos_theta = cos_theta.clamp(-1, 1)  # for numerical stability
            with torch.no_grad():
                origin_cos = cos_theta.clone()
            target_logit = cos_theta[torch.arange(0, embbedings.size(0)), label].view(-1, 1)

            sin_theta = torch.sqrt(1.0 - torch.pow(target_logit, 2))
            cos_theta_m = target_logit * self.cos_m - sin_theta * self.sin_m  # cos(target + margin)
            mask = cos_theta > cos_theta_m  # hard examples
            final_target_logit = torch.where(target_logit > self.threshold,
                                             cos_theta_m,
                                             target_logit - self.mm)

            hard_example = cos_theta[mask]
            with torch.no_grad():
                self.t = target_logit.mean() * 0.01 + (1 - 0.01) * self.t
            cos_theta[mask] = hard_example * (self.t + hard_example)
            cos_theta.scatter_(1, label.view(-1, 1).long(), final_target_logit)
            output = cos_theta * self.s
            return output
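The effect of the `t` buffer in the class above is easier to see on a single sample with plain numbers. The following sketch mirrors the arithmetic of `forward` using only the standard library. The function names `curricular_logits` and `update_t` and the example cosines are hypothetical, chosen for illustration; this is a reading aid, not a replacement for the PyTorch module.

```python
import math


def curricular_logits(cosines, target, t, m=0.5, s=64.0):
    """Apply the margin and hard-negative reweighting to one sample's cosines."""
    cos_t = cosines[target]
    sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
    cos_t_m = cos_t * math.cos(m) - sin_t * math.sin(m)  # cos(theta_target + m)
    threshold = math.cos(math.pi - m)
    # Same fallback as the class: used when theta_target + m would pass pi.
    if cos_t > threshold:
        target_logit = cos_t_m
    else:
        target_logit = cos_t - math.sin(math.pi - m) * m
    logits = []
    for j, c in enumerate(cosines):
        if j == target:
            logits.append(s * target_logit)
        elif c > cos_t_m:
            logits.append(s * c * (t + c))  # hard negative, reweighted by t
        else:
            logits.append(s * c)            # easy negative, left unchanged
    return logits


def update_t(t, target_cosines, momentum=0.01):
    """Exponential moving average of the batch's mean target cosine."""
    batch_mean = sum(target_cosines) / len(target_cosines)
    return batch_mean * momentum + (1.0 - momentum) * t


cosines = [0.8, 0.7, 0.1]  # class 0 is the ground truth, class 1 is a hard negative
early = curricular_logits(cosines, target=0, t=0.0)
late = curricular_logits(cosines, target=0, t=0.8)
```

With `t = 0.0` the hard negative's cosine of 0.7 is scaled down to 0.7 × 0.7 = 0.49 before multiplying by `s`. With `t = 0.8` it is scaled up to 0.7 × 1.5 = 1.05. Since `t` tracks the average target cosine, which typically rises as training progresses, hard negatives are de-emphasized early and emphasized later.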
