[Python Crawler] Scraping the Titles and URLs of All Questions in Zhihu Collections and Saving Them to MySQL

Please credit the source when reposting. The code is on GitHub (and may be updated there): https://github.com/qqxx6661/python/

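I am new to Python and this is a practice project. The code has little practical value, since collections can be viewed directly on the website and there is no need to go to this much trouble. It is for learning purposes only.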

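PS: Do not crawl for long periods, or Zhihu may block your IP. The proxy handling in the code has a problem: Zhihu is served over HTTPS, so an ordinary HTTP proxy cannot be used, and in practice the requests still went out from the local IP even with a proxy configured.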
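One detail that may be relevant here: as far as I understand `requests`, an entry in the `proxies` dict is chosen by the scheme of the URL being requested. A minimal sketch of that lookup follows. `pick_proxy` is a hypothetical helper that models the behavior in simplified form (Python 3, standard library only); it is not code from `requests` itself.

```python
from urllib.parse import urlparse


def pick_proxy(url, proxies):
    """Return the proxy entry keyed by the URL's scheme, or None if absent.

    Hypothetical helper: a simplified model of a scheme-keyed proxy lookup.
    """
    scheme = urlparse(url).scheme
    return proxies.get(scheme)


# The proxy mapping used in the script.
proxies = {
    "http": "http://42.159.195.126:80",
    "https": "https://115.225.250.91:8998",
}

check_url = "http://www.baidu.com/"   # URL the script uses to validate the proxy
target_url = "https://www.zhihu.com"  # URL the script actually crawls

proxy_for_check = pick_proxy(check_url, proxies)
proxy_for_target = pick_proxy(target_url, proxies)
```

Under this model, the validation request against `http://www.baidu.com/` only exercises the `"http"` entry, so it can pass while the `"https"` entry, the one that applies to Zhihu, is never tested.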

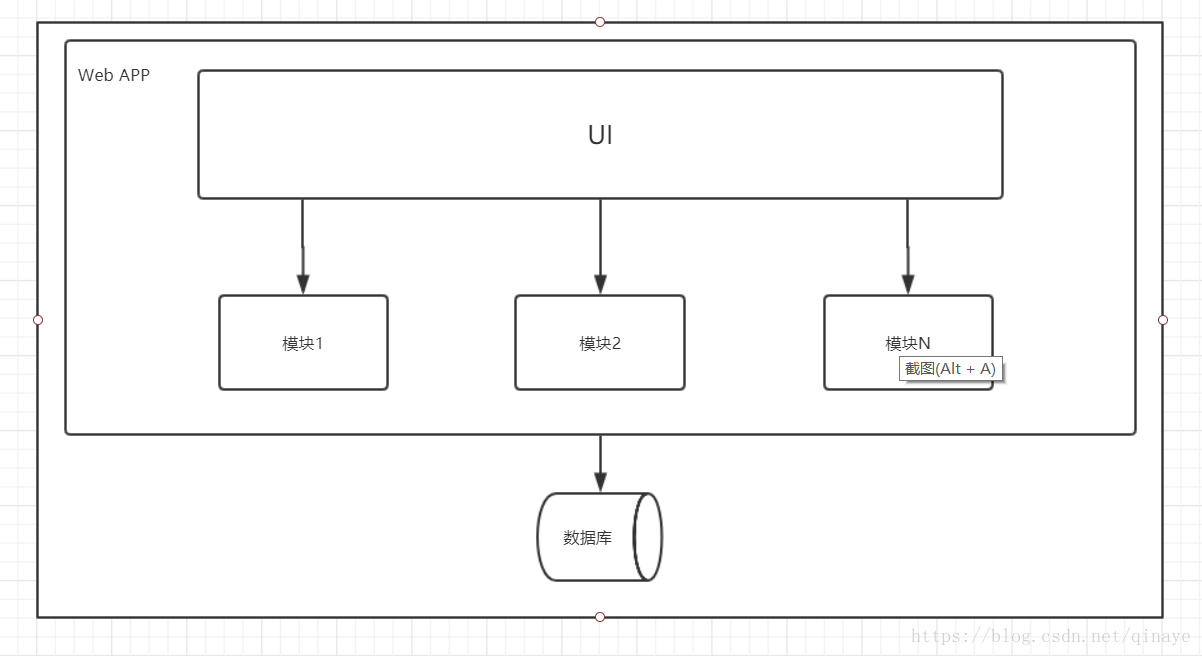

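The program touches on the following (enough to get a basic feel for each):

1. Connecting to a database from Python: mysql-connector

2. Regular expressions with `re`

3. Using requests: proxies, POST, GET, headers, and so on

4. Fetching the captcha

5. Saving and reading files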
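As a small illustration of item 2, the two question patterns from the script can be tried offline. This is a sketch in Python 3; `sample_html` is made-up markup that only assumes the shape those patterns expect (a question link closed by `</a></h2>`), not a copy of a real Zhihu page.

```python
import re

# Made-up sample markup (an assumption, not real Zhihu output).
sample_html = (
    '<h2 class="zm-item-title"><a target="_blank" '
    'href="/question/12345678">How do I learn Python?</a></h2>\n'
    '<h2 class="zm-item-title"><a target="_blank" '
    'href="/question/87654321">What is a web crawler?</a></h2>\n'
)

# The same patterns the script uses for question URLs and titles.
re_col_url = re.compile(r'href="(/question/\d*?)">.+?</a></h2>')
re_col_name = re.compile(r'\d">(.+?)</a></h2>')

# Relative paths are turned into absolute URLs, as in the script.
urls = ['https://www.zhihu.com' + path for path in re_col_url.findall(sample_html)]
names = re_col_name.findall(sample_html)

for name, url in zip(names, urls):
    print(name, url)
```

Both patterns rely on the title link being immediately followed by `</a></h2>`, so they are tightly coupled to the page layout and would need adjusting if the markup changes.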

The screenshot above shows a run. The full code:

    # -*- coding: utf-8 -*-
    # Python 2 script
    from __future__ import unicode_literals
    import requests
    import re
    import time
    from subprocess import Popen
    import mysql.connector


    class Spider:
        def __init__(self):
            print 'Initializing crawler...'
            self.s = requests.session()
            # Proxy settings
            self.proxies = {"http": "http://42.159.195.126:80", "https": "https://115.225.250.91:8998"}
            try:
                r = self.s.get('http://www.baidu.com/', proxies=self.proxies)
            except requests.exceptions.ProxyError, e:
                print e
                print 'Proxy is not working; edit the code to use a different proxy'
                exit()
            print 'Proxy check passed; proxy is usable'
            # Headers for Zhihu
            self.headers = {
                'Accept': '*/*',
                'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',
                'X-Requested-With': 'XMLHttpRequest',
                'Referer': 'https://www.zhihu.com',
                'Accept-Language': 'zh-CN,zh;q=0.8',
                'Accept-Encoding': 'gzip, deflate',
                'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/38.0.2125.122 Safari/537.36 SE 2.X MetaSr 1.0',
                'Host': 'www.zhihu.com'
            }
            # Open Zhihu, read the _xsrf token, and fetch the captcha
            r = self.s.get('https://www.zhihu.com', headers=self.headers, proxies=self.proxies)
            cer = re.compile('name=\"_xsrf\" value=\"(.*)\"', flags=0)
            strlist = cer.findall(r.text)
            _xsrf = strlist[0]
            #print(r.request.headers)
            #print(str(int(time.time() * 1000)))
            Captcha_URL = 'https://www.zhihu.com/captcha.gif?r=' + str(int(time.time() * 1000))
            r = self.s.get(Captcha_URL, headers=self.headers)
            with open('code.gif', 'wb') as f:
                f.write(r.content)
            Popen('code.gif', shell=True)
            # raw_input, because Python 2's input() would eval the typed text
            captcha = raw_input('captcha: ')
            login_data = {  # fill in your account details
                '_xsrf': _xsrf,
                'phone_num': 'YOUR_PHONE_NUMBER',
                'password': 'YOUR_PASSWORD',
                'remember_me': 'true',
                'captcha': captcha
            }
            # Log in to Zhihu
            r = self.s.post('https://www.zhihu.com/login/phone_num', data=login_data, headers=self.headers, proxies=self.proxies)
            print(r.text)

        def get_collections(self):  # Fetch collections
            # Fetch the collections you created
            r = self.s.get('http://www.zhihu.com/collections/mine', headers=self.headers, proxies=self.proxies)
            # Fetch the collections you follow
            #r = self.s.get('https://www.zhihu.com/collections', headers=self.headers, proxies=self.proxies)
            # Extract the lists of collection names and collection URLs
            re_mine_url = re.compile(r'<a href="(/collection/\d+?)"')
            list_mine_url = re.findall(re_mine_url, r.content)
            re_mine_name = re.compile(r'\d"\s>(.*?)</a>')
            list_mine_name = re.findall(re_mine_name, r.content)
            #print 'Collection names:', list_mine_name
            # Turn the relative paths into absolute URLs
            for i in range(len(list_mine_url)):
                list_mine_url[i] = 'https://www.zhihu.com' + list_mine_url[i]
            #print 'Collection URLs:', list_mine_url
            conn = mysql.connector.connect(user='root', password='123456', database='test')
            cursor = conn.cursor()
            for i in range(len(list_mine_url)):
                page = 0
                # One table per collection, named after the collection
                sql = 'create table %s (id integer(10) primary key auto_increment, name varchar(100), address varchar(100))' % list_mine_name[i].decode("UTF-8")
                print sql
                cursor.execute(sql)
                conn.commit()
                while 1:
                    page += 1
                    col_url = list_mine_url[i]
                    col_url = col_url + '?page=' + str(page)
                    print 'Fetching:', col_url
                    r = self.s.get(col_url, headers=self.headers, proxies=self.proxies)
                    re_col_url = re.compile(r'href="(/question/\d*?)">.+?</a></h2>')
                    list_col_url = re.findall(re_col_url, r.content)
                    re_col_name = re.compile(r'\d">(.+?)</a></h2>')
                    list_col_name = re.findall(re_col_name, r.content)
                    if list_col_name:
                        #print 'Question titles:', list_col_name
                        # Turn the relative paths into absolute URLs
                        for j in range(len(list_col_url)):
                            list_col_url[j] = 'https://www.zhihu.com' + list_col_url[j]
                        #print 'Question URLs:', list_col_url
                        for j in range(len(list_col_url)):
                            sql = 'insert into %s (name, address) values ("%s", "%s")' % (list_mine_name[i].decode("UTF-8"), list_col_name[j].decode("UTF-8"), list_col_url[j])
                            cursor.execute(sql)
                            conn.commit()
                    else:
                        # An empty page means the collection is exhausted
                        print 'No more questions in this collection'
                        break
            cursor.close()


    spider = Spider()
    spider.get_collections()
    print 'Crawl finished; check the database'
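The insert statement in the script builds SQL by string formatting, so a question title that contains a double quote would break the statement. A common alternative is to pass the values as query parameters. The sketch below (Python 3) shows the idea with the standard-library `sqlite3` module standing in for MySQL so that it runs without a server. That swap is an assumption made for illustration, and the table name `questions` and the sample rows are invented. With `mysql.connector` the placeholders are written `%s` rather than `?`.

```python
import sqlite3

# In-memory SQLite database as a stand-in for the MySQL 'test' database.
conn = sqlite3.connect(":memory:")
cursor = conn.cursor()
cursor.execute(
    "create table questions ("
    "id integer primary key autoincrement, name text, address text)"
)

# Invented sample rows; note the quote characters inside the titles.
rows = [
    ('Why does "hello" need quotes?', 'https://www.zhihu.com/question/1'),
    ("It's a title with an apostrophe", 'https://www.zhihu.com/question/2'),
]

# Values are passed separately from the SQL text, so quotes need no escaping.
cursor.executemany("insert into questions (name, address) values (?, ?)", rows)
conn.commit()

cursor.execute("select name, address from questions order by id")
saved = cursor.fetchall()
conn.close()
```

Placeholders only cover values. A table name, such as the per-collection table the script creates, cannot be passed this way and would still need to be validated separately.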
