Scrapy Web Crawler Framework in Practice [Using Tencent News as an Example]

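This is an original blog post, intended for technical learning only. Do not copy it to Baidu Wenku or similar platforms without permission.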

Contents

- Introduction

- URLs to crawl

- Framework architecture

- Writing items

- Writing the Spider

- Writing the storage pipelines

- Writing the settings

- Writing the main method

- Results

Introduction

For an introduction to Scrapy and a Douban crawling example, see my two other blog posts:

http://blog.csdn.net/qy20115549/article/details/52528896

http://blog.csdn.net/qy20115549/article/details/52575291

URLs to crawl

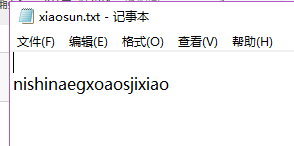

As the figure above shows, there are many URLs to crawl, and they are stored in a txt file. One of them, for example, is //stock.qq.com/a/20160919/007925.htm.

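The spider strips a `['` and `']` wrapper from each line, which suggests the file stores one URL per line in that form. The example link is also protocol-relative, and Scrapy refuses to request a URL that has no scheme. Here is a minimal stdlib-only sketch of loading such a file, assuming that line format. `normalize_url`, `load_urls`, the `http` default and the second sample URL are all hypothetical:

```python
def normalize_url(raw_line, scheme="http"):
    """Turn one line of the URL file into an absolute URL.

    Assumption: lines may look like ['//stock.qq.com/...'] and may be
    protocol-relative (starting with //).
    """
    url = raw_line.strip().replace("['", "").replace("']", "")
    if url.startswith("//"):
        # Scrapy raises an error for URLs without a scheme, so prepend one
        url = scheme + ":" + url
    return url


def load_urls(lines):
    """Normalize every line and skip the ones that end up empty."""
    urls = []
    for line in lines:
        url = normalize_url(line)
        if url:
            urls.append(url)
    return urls


# Hypothetical file contents: a wrapped relative URL, a blank line, a plain URL
sample = [
    "['//stock.qq.com/a/20160919/007925.htm']\n",
    "\n",
    "http://news.qq.com/a/example.htm\n",
]
print(load_urls(sample))
```

In a real spider you would pass an open file object instead of `sample`, for example `load_urls(open("E:\\a.txt"))`.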

Framework architecture

Writing items

To keep things simple, I only crawl each article's title and body text. The items file is as follows:

```python
# -*- coding: utf-8 -*-
__author__ = ' HeFei University of Technology Qian Yang email:1563178220@qq.com'
import scrapy


class News(scrapy.Item):
    content = scrapy.Field()
    title = scrapy.Field()
```
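Both fields are filled by the spider with Scrapy's `.extract()`, which returns a list of strings, not a single string. The sketch below shows one way to collapse those lists before storage. `flatten_news` is a hypothetical helper that is not part of the original project, and a plain dict stands in for a `News` item:

```python
def flatten_news(item):
    """Collapse list-valued 'title' and 'content' fields into single strings.

    `item` is any mapping with list fields, e.g. dict(News(...)).
    Whitespace-only fragments are dropped.
    """
    title_parts = [t.strip() for t in item.get("title", []) if t.strip()]
    paragraphs = [p.strip() for p in item.get("content", []) if p.strip()]
    return {
        "title": " ".join(title_parts),
        # one paragraph per line in the stored body text
        "content": "\n".join(paragraphs),
    }


raw = {"title": ["  Sample headline "], "content": ["First paragraph.", "  ", "Second paragraph."]}
print(flatten_news(raw))
```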

Writing the Spider

```python
# -*- coding: utf-8 -*-
__author__ = ' HeFei University of Technology Qian Yang email:1563178220@qq.com'
import scrapy

from tengxunnews.items import News


def load_start_urls(path="E:\\a.txt"):
    """Read one URL per line, stripping the surrounding ['...'] wrapper."""
    urls = []
    with open(path, "r") as f:
        for line in f:
            line = line.strip().replace("['", "").replace("']", "")
            if line:  # skip blank lines
                print("the url is %s" % line)
                urls.append(line)
    return urls


class Teng(scrapy.Spider):
    name = 'tengxunnews'
    allowed_domains = ["qq.com"]
    # read the URLs to crawl from a text file
    start_urls = load_start_urls()

    def parse(self, response):
        item = News()
        # paragraphs of the article body
        item['content'] = response.xpath('//div[@id="Cnt-Main-Article-QQ"]/p/text()').extract()
        # article headline
        item['title'] = response.xpath('//div[@class="hd"]/h1/text()').extract()
        yield item
```
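This sketch shows what the two XPath expressions in `parse` select. It runs equivalent paths with Python's built-in `xml.etree.ElementTree` on a made-up, well-formed page fragment. It only approximates Scrapy's selectors. ElementTree supports a limited XPath subset and requires well-formed XML. Its `.text` also returns only the text before an element's first child, whereas `text()` in Scrapy returns every direct text node. `SAMPLE_PAGE` and `extract_news` are hypothetical:

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified article markup; real qq.com pages are much larger
# and are not guaranteed to be well-formed XML.
SAMPLE_PAGE = """
<html><body>
  <div class="hd"><h1>Sample headline</h1></div>
  <div id="Cnt-Main-Article-QQ">
    <p>First paragraph.</p>
    <p>Second paragraph.</p>
  </div>
</body></html>
"""


def extract_news(page):
    root = ET.fromstring(page.strip())
    # approximates //div[@class="hd"]/h1/text()
    title = [h1.text for h1 in root.findall(".//div[@class='hd']/h1") if h1.text]
    # approximates //div[@id="Cnt-Main-Article-QQ"]/p/text()
    content = [p.text for p in root.findall(".//div[@id='Cnt-Main-Article-QQ']/p") if p.text]
    return {"title": title, "content": content}


print(extract_news(SAMPLE_PAGE))
```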

Writing the storage pipelines

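```python
# -*- coding: utf-8 -*-
__author__ = ' HeFei University of Technology Qian Yang email:1563178220@qq.com'
import codecs
import json

import MySQLdb
import MySQLdb.cursors
from twisted.enterprise import adbapi


# Store items as JSON, one object per line
class JsonWithEncodingCnblogsPipeline(object):
    def __init__(self):
        # utf-8 rather than gbk: gbk cannot encode every character news text may contain
        self.file = codecs.open('tengxunnews.json', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        line = json.dumps(dict(item), ensure_ascii=False) + "\n"
        self.file.write(line)
        return item

    # Scrapy calls close_spider() on pipelines; spider_closed is a signal, not a pipeline hook
    def close_spider(self, spider):
        self.file.close()


# Store items in a MySQL database
class MySQLStorePipeline(object):
    # database parameters
    def __init__(self, host, db, user, passwd):
        dbargs = dict(host=host, db=db, user=user, passwd=passwd,
                      cursorclass=MySQLdb.cursors.DictCursor,
                      charset='utf8', use_unicode=True)
        self.dbpool = adbapi.ConnectionPool('MySQLdb', **dbargs)

    @classmethod
    def from_crawler(cls, crawler):
        # read the MYSQL_* values defined in settings.py
        s = crawler.settings
        return cls(s.get('MYSQL_HOST'), s.get('MYSQL_DBNAME'),
                   s.get('MYSQL_USER'), s.get('MYSQL_PASSWD'))

    # the default pipeline invoke function
    def process_item(self, item, spider):
        d = self.dbpool.runInteraction(self.insert_into_table, item)
        # log database errors instead of losing them silently
        d.addErrback(lambda failure: spider.logger.error(failure))
        return item

    def close_spider(self, spider):
        self.dbpool.close()

    # the target table must be created beforehand
    def insert_into_table(self, conn, item):
        # store the whole body, not just its first paragraph
        conn.execute('insert into tengxunnews(content, title) values(%s, %s)',
                     ("\n".join(item['content']), "".join(item['title'])))
```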
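Here is a stdlib-only sketch of what the two pipelines do with one item, assuming the item is a plain dict. It swaps MySQL for an in-memory `sqlite3` database so it runs without a server, which is why the placeholders are `?` instead of `%s`. The `CREATE TABLE` column types are assumptions, because the post does not give the table definition. `to_json_line` and `store_items` are hypothetical names:

```python
import json
import sqlite3


def to_json_line(item):
    # same call the JSON pipeline makes; ensure_ascii=False keeps Chinese characters readable
    return json.dumps(dict(item), ensure_ascii=False) + "\n"


def store_items(conn, items):
    # the table has to exist before the first insert, as in the MySQL pipeline
    conn.execute("CREATE TABLE IF NOT EXISTS tengxunnews (content TEXT, title TEXT)")
    # parameterized insert: sqlite3 uses ? where MySQLdb uses %s
    conn.executemany(
        "INSERT INTO tengxunnews (content, title) VALUES (?, ?)",
        [("\n".join(it["content"]), "".join(it["title"])) for it in items],
    )
    conn.commit()


items = [{"title": ["腾讯新闻"], "content": ["第一段。", "第二段。"]}]
print(to_json_line(items[0]), end="")

conn = sqlite3.connect(":memory:")
store_items(conn, items)
print(conn.execute("SELECT title, content FROM tengxunnews").fetchall())
```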

Writing the settings

settings.py holds the project's configuration. Below is the configuration I appended to the end of settings.py.

```python
# USER_AGENT
USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_3) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.54 Safari/536.5'

# start MySQL database configure setting
MYSQL_HOST = 'localhost'
MYSQL_DBNAME = 'test'
MYSQL_USER = 'root'
MYSQL_PASSWD = '11223'  # replace with your own MySQL password
# end of MySQL database configure setting

# items pass through pipelines from lower to higher values; distinct values make the order explicit
ITEM_PIPELINES = {
    'tengxunnews.pipelines.JsonWithEncodingCnblogsPipeline': 300,
    'tengxunnews.pipelines.MySQLStorePipeline': 400,
}
```
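Scrapy sends every item through the enabled pipelines in ascending order of these integer values. The sketch below only mimics that ordering rule in plain Python and does not import Scrapy. `pipeline_order` is a hypothetical helper, and the values 300 and 400 are deliberately distinct so the order is well defined:

```python
ITEM_PIPELINES = {
    'tengxunnews.pipelines.JsonWithEncodingCnblogsPipeline': 300,
    'tengxunnews.pipelines.MySQLStorePipeline': 400,
}


def pipeline_order(pipelines):
    """Return pipeline paths in the order items reach them (lowest value first)."""
    return sorted(pipelines, key=pipelines.get)


for path in pipeline_order(ITEM_PIPELINES):
    print(path)
```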

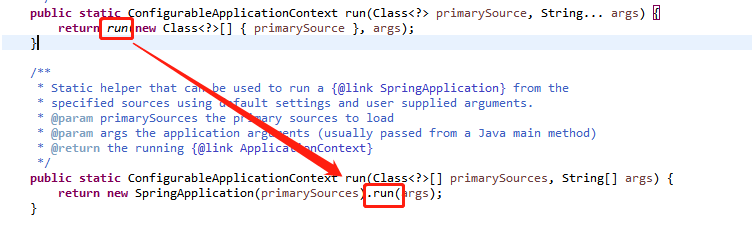

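Writing the main method

```python
__author__ = ' HeFei University of Technology Qian Yang email:1563178220@qq.com'
from scrapy import cmdline

# same as running "scrapy crawl tengxunnews" from the project directory
cmdline.execute("scrapy crawl tengxunnews".split())
```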

Results

For questions, contact: Qian Yang, School of Management, Hefei University of Technology, 1563178220@qq.com
