【docker 17 source code analysis】 Docker image source code analysis

Docker's graph driver manages and maintains [images][Link 1]. It downloads images from the registry and, at runtime, mounts them so that containers can access them.

The graph drivers Docker currently supports are:

- Overlay

- Aufs

- Devicemapper

- Btrfs

- Zfs

- Vfs

Docker镜像概念

- rootfs: 容器进程可见的文件系统、工具、容器文件等

- Union mount:多种文件系统内容合并后的目录,较为常见的有UnionFS、AUFS、OverlayFS等

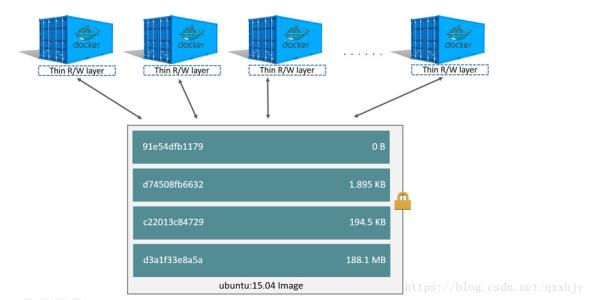

- layer: Docker容器中的每一层只读的image,以及最上层可读写的文件系统,均被称为layer

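Content-addressable images

Docker 1.10 replaced the previous image management scheme with a content-addressable storage strategy. This strategy has at least three benefits:

- Improved security

- No ID collisions

- Guaranteed data integrity

The content-addressable implementation uses two directories: /var/lib/docker/image and /var/lib/docker/overlay; the name of the latter depends on the storage driver in use. The image directory holds the image metadata, addressed by sha256, while the overlay directory holds the actual image data.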

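Copy-on-write strategy

Each container has its own read-write layer. When a file from the image is modified, it is first copied up into the read-write layer and the change is then applied to that copy; the image layer itself is never changed. Deletions are likewise recorded only in the read-write layer (overlayfs uses whiteout files for this) instead of removing anything from the image.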
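The copy-on-write rules above can be modeled in a few lines. The sketch below is a toy in-memory model, not Docker or overlayfs code: `unionFS`, `Append`, `Remove` and the `whiteout` marker are hypothetical names, and a real whiteout is a special file on disk rather than a string value. It shows the three behaviors that matter: reads fall through to the lower layer, writes copy the file up, and deletes only hide the lower copy.

```go
package main

import "fmt"

// whiteout is a marker value meaning "deleted in the upper layer". It is a
// simplified stand-in for overlayfs whiteout files, not Docker's real format.
const whiteout = "\x00whiteout"

// unionFS is a toy model: lower is a read-only image layer and upper is
// the container's read-write layer.
type unionFS struct {
	lower map[string]string
	upper map[string]string
}

// Read checks the upper layer first and falls back to the lower layer.
func (u *unionFS) Read(path string) (string, bool) {
	if v, ok := u.upper[path]; ok {
		if v == whiteout {
			return "", false
		}
		return v, true
	}
	v, ok := u.lower[path]
	return v, ok
}

// Append modifies a file. The first write copies the file up into the upper
// layer; the lower layer is never touched.
func (u *unionFS) Append(path, data string) error {
	cur, ok := u.Read(path)
	if !ok {
		return fmt.Errorf("%s: no such file", path)
	}
	u.upper[path] = cur + data
	return nil
}

// Remove hides the file by recording a whiteout in the upper layer.
func (u *unionFS) Remove(path string) {
	u.upper[path] = whiteout
}

func main() {
	ufs := &unionFS{
		lower: map[string]string{"/etc/os-release": "debian", "/bin/sh": "sh"},
		upper: map[string]string{},
	}
	if err := ufs.Append("/etc/os-release", "-modified"); err != nil {
		panic(err)
	}
	ufs.Remove("/bin/sh")

	v, _ := ufs.Read("/etc/os-release")
	_, shExists := ufs.Read("/bin/sh")
	fmt.Println(v, shExists, ufs.lower["/etc/os-release"])
	// prints: debian-modified false debian
}
```

Because the lower map is shared and never written, many containers could reuse the same image layer while each keeps a private upper map.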

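The content of a Docker image consists of two parts: the files of the image layers, and the image's JSON configuration file. A static image does not contain:

1. The contents of virtual filesystems such as /proc and /sys

2. The container's hosts, hostname and resolv.conf files. These describe the specific runtime environment and, in principle, should not be baked into an image.

3. The container's volume paths. From the container's point of view, these are host paths mounted into the container.

4. Some device files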

Image storage paths

For the overlay2 storage driver, the path is /var/lib/docker/image/overlay2.

1. repositories.json:

Stores image ID information, mainly the mapping from image names to image IDs.
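To make the name-to-ID mapping concrete, here is a small sketch that reads such a file. It assumes the layout is a top-level `Repositories` object mapping each repository name to an object of reference (`name:tag` or `name@digest`) to image ID; that layout is an assumption for illustration, and the IDs in the sample are placeholders, not real digests. `repositoriesFile` and `imageIDs` are hypothetical names.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// repositoriesFile mirrors the assumed layout of repositories.json:
// repository name -> (reference such as "name:tag") -> image ID.
type repositoriesFile struct {
	Repositories map[string]map[string]string `json:"Repositories"`
}

// imageIDs flattens the file into a reference -> image ID map.
func imageIDs(data []byte) (map[string]string, error) {
	var f repositoriesFile
	if err := json.Unmarshal(data, &f); err != nil {
		return nil, err
	}
	out := make(map[string]string)
	for _, refs := range f.Repositories {
		for ref, id := range refs {
			out[ref] = id
		}
	}
	return out, nil
}

func main() {
	// Placeholder IDs, not real image digests.
	sample := []byte(`{"Repositories":{"debian":{"debian:latest":"sha256:aaaa","debian:stretch":"sha256:aaaa"}}}`)
	ids, err := imageIDs(sample)
	if err != nil {
		panic(err)
	}
	refs := make([]string, 0, len(ids))
	for ref := range ids {
		refs = append(refs, ref)
	}
	sort.Strings(refs) // map iteration order is random, so sort for stable output
	for _, ref := range refs {
		fmt.Println(ref, "->", ids[ref])
	}
}
```

Two tags pointing at the same ID is the normal case when one image has several names.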

2. imagedb directory

content/sha256: the configuration of each image. Each file is named by its image ID, which is the sha256 digest of the file's content.

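3. distribution directory

diffid-by-digest: the mapping from digest to diffID. The digest identifies the compressed layer blob in the registry, while the diffID is the sha256 of the uncompressed layer content. Each file is named by a digest and contains the corresponding diffID:

```
# cat 85199fa09ec1510ee053da666286c385d07b8fab9fa61ae73a5b8c454e1b3397
sha256:919f29a58bd0ef96e5ed82bc7da07cafaea5e712acfd4a4b4558e06d57d7c2fc
```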

v2metadata-by-diffid: the mapping from diffID to digest, together with the repositories the layer was seen in:

```
# cat 919f29a58bd0ef96e5ed82bc7da07cafaea5e712acfd4a4b4558e06d57d7c2fc | python -m json.tool
[
    {
        "Digest": "sha256:85199fa09ec1510ee053da666286c385d07b8fab9fa61ae73a5b8c454e1b3397",
        "HMAC": "",
        "SourceRepository": "docker.io/library/buildpack-deps"
    },
    {
        "Digest": "sha256:85199fa09ec1510ee053da666286c385d07b8fab9fa61ae73a5b8c454e1b3397",
        "HMAC": "3ba1c2a458861768fa3153832272d291557d1d08951b29b40b1e47d95a91c09f",
        "SourceRepository": "docker.io/zhangzhonglin/docker-dev"
    },
    {
        "Digest": "sha256:85199fa09ec1510ee053da666286c385d07b8fab9fa61ae73a5b8c454e1b3397",
        "HMAC": "",
        "SourceRepository": "docker.io/zhangzhonglin/docker-dev"
    },
    {
        "Digest": "sha256:85199fa09ec1510ee053da666286c385d07b8fab9fa61ae73a5b8c454e1b3397",
        "HMAC": "",
        "SourceRepository": "docker.io/library/debian"
    }
]
```
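One way to read a v2metadata-by-diffid file is to ask "in which repositories has this layer blob already been seen?", which is the kind of question the daemon can use when pushing. The sketch below decodes the JSON using the field names visible in the output above and collects the distinct source repositories for one digest. `v2Metadata` and `repositoriesFor` are hypothetical names, not Docker's API.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// v2Metadata mirrors one entry of a v2metadata-by-diffid file, using the
// field names visible in the output above.
type v2Metadata struct {
	Digest           string
	HMAC             string
	SourceRepository string
}

// repositoriesFor returns, in file order and without duplicates, the
// repositories in which the given digest has been seen.
func repositoriesFor(data []byte, digest string) ([]string, error) {
	var entries []v2Metadata
	if err := json.Unmarshal(data, &entries); err != nil {
		return nil, err
	}
	seen := make(map[string]bool)
	var repos []string
	for _, e := range entries {
		if e.Digest == digest && !seen[e.SourceRepository] {
			seen[e.SourceRepository] = true
			repos = append(repos, e.SourceRepository)
		}
	}
	return repos, nil
}

func main() {
	const d = "sha256:85199fa09ec1510ee053da666286c385d07b8fab9fa61ae73a5b8c454e1b3397"
	data := []byte(`[
	{"Digest": "` + d + `", "HMAC": "", "SourceRepository": "docker.io/library/buildpack-deps"},
	{"Digest": "` + d + `", "HMAC": "3ba1c2a458861768fa3153832272d291557d1d08951b29b40b1e47d95a91c09f", "SourceRepository": "docker.io/zhangzhonglin/docker-dev"},
	{"Digest": "` + d + `", "HMAC": "", "SourceRepository": "docker.io/zhangzhonglin/docker-dev"},
	{"Digest": "` + d + `", "HMAC": "", "SourceRepository": "docker.io/library/debian"}
]`)
	repos, err := repositoriesFor(data, d)
	if err != nil {
		panic(err)
	}
	fmt.Println(repos)
	// prints: [docker.io/library/buildpack-deps docker.io/zhangzhonglin/docker-dev docker.io/library/debian]
}
```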

4. layerdb directory: layer metadata

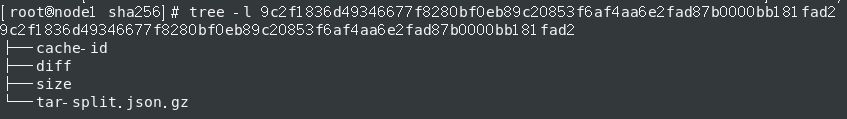

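Each directory is named by the layer's chainID. For the bottom layer, the chainID equals the diffID; centos:7.2.1511, for example, consists of a single layer. A chainID incorporates information from all ancestor layers, which guarantees that the rootfs identified by a chainID is unique. Each directory contains:

- cache-id: a random ID generated for each layer. It is the ID under which the graph driver stores the layer, i.e. the name of the layer's directory under /var/lib/docker/overlay2

- diff: the diffID of the topmost layer, i.e. the layer that this chain adds on top of its parent

- size: the size of the layer, in bytes

- tar-split.json.gz: compressed tar-split metadata for the layer, which allows the layer's original tar archive to be reconstructed exactly from the files on disk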

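The /var/lib/docker/overlay2 directory

It holds the unpacked contents of every image layer and, for each container created from an image, the directory where the image layers are mounted together, plus the corresponding -init directory.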

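Each layer directory under /var/lib/docker/overlay2/ may contain:

- merged: the union of all layers, i.e. the filesystem that processes in the container see

- upper: the upper layer of the overlay mount. In overlayfs the upper layer is the writable one, not a read-only one; with overlay2, the container's UpperDir is its diff directory, as the output below shows

- work: the work directory overlayfs needs for copy-on-write operations

- diff: the layer's own contents; for a container, this is its read-write layer

For example, the GraphDriver section of `docker inspect` for a container:

```json
"GraphDriver": {
    "Data": {
        "LowerDir": "/var/lib/docker/overlay2/713057c5c2f360df298bed473d78999515d9939d77d2e2ecb21afc09ceff3530-init/diff:/var/lib/docker/overlay2/8aff7f83b84a179e0d0685f6898ad6c969e9e5c8160c5118548c61bb296c1001/diff",
        "MergedDir": "/var/lib/docker/overlay2/713057c5c2f360df298bed473d78999515d9939d77d2e2ecb21afc09ceff3530/merged",
        "UpperDir": "/var/lib/docker/overlay2/713057c5c2f360df298bed473d78999515d9939d77d2e2ecb21afc09ceff3530/diff",
        "WorkDir": "/var/lib/docker/overlay2/713057c5c2f360df298bed473d78999515d9939d77d2e2ecb21afc09ceff3530/work"
    },
    "Name": "overlay2"
}
```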
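The four GraphDriver paths map onto the options of an overlay mount. The sketch below builds that option string from the values in the `docker inspect` output; `overlayMountData` is a hypothetical helper. In overlayfs the leftmost lowerdir is the top-most lower layer, which is why the `-init` layer comes first. My understanding is that the real overlay2 driver passes shorter link names (from the overlay2/l directory) instead of full paths to keep the mount data small, so treat this as an illustration of the structure only.

```go
package main

import (
	"fmt"
	"strings"
)

// overlayMountData builds the data string for an overlay mount.
// lowerDirs must be ordered top-most lower layer first.
func overlayMountData(lowerDirs []string, upperDir, workDir string) string {
	return fmt.Sprintf("lowerdir=%s,upperdir=%s,workdir=%s",
		strings.Join(lowerDirs, ":"), upperDir, workDir)
}

func main() {
	root := "/var/lib/docker/overlay2/"
	c := "713057c5c2f360df298bed473d78999515d9939d77d2e2ecb21afc09ceff3530"
	lower := []string{
		root + c + "-init/diff",
		root + "8aff7f83b84a179e0d0685f6898ad6c969e9e5c8160c5118548c61bb296c1001/diff",
	}
	data := overlayMountData(lower, root+c+"/diff", root+c+"/work")
	// Conceptually: mount -t overlay overlay -o <data> <MergedDir>
	fmt.Println(data)
}
```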

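Why digests?

If an image's content changes while its name and tag stay the same, pulling the same name and tag twice can return two different images. To solve this, Docker introduced image digests. An image's digest is the sha256 of its manifest file. When the image content changes, its layers change, and so do the layers' sha256 values; since the manifest lists the sha256 of every layer, the manifest's sha256, i.e. the image digest, changes as well. A digest therefore uniquely identifies one image.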
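The chain of reasoning above (new layer, new layer digest, new manifest bytes, new image digest) can be checked with a toy manifest. The `manifest` struct here is a heavily simplified, hypothetical stand-in that keeps only layer digests; real manifests also carry media types, sizes and a config descriptor. A registry hashes the exact manifest bytes it stores, so re-serializing with `encoding/json` is only illustrative.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"encoding/json"
	"fmt"
)

// manifest is a simplified stand-in for an image manifest: only the
// digests of the layers are kept.
type manifest struct {
	Layers []string `json:"layers"`
}

// digestOf returns "sha256:<hex>" of the manifest's JSON encoding.
func digestOf(m manifest) (string, error) {
	b, err := json.Marshal(m)
	if err != nil {
		return "", err
	}
	sum := sha256.Sum256(b)
	return "sha256:" + hex.EncodeToString(sum[:]), nil
}

func main() {
	before, err := digestOf(manifest{Layers: []string{"sha256:aaaa", "sha256:bbbb"}})
	if err != nil {
		panic(err)
	}
	// Same "tag", but the second layer was rebuilt.
	after, err := digestOf(manifest{Layers: []string{"sha256:aaaa", "sha256:cccc"}})
	if err != nil {
		panic(err)
	}
	fmt.Println(before != after) // prints: true
}
```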

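Initialization and registration

The global variable drivers holds every registered storage driver:

```go
var (
	// All registered drivers
	drivers map[string]InitFunc
)
```

Every storage driver file has an init function that registers the driver in drivers:

```go
func init() {
	graphdriver.Register(driverName, Init)
}
```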
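The registration pattern relies on Go running every package's `init` function before `main`, so each driver adds itself to the shared map just by being compiled in. Below is a self-contained sketch of that pattern. `InitFunc` here is a hypothetical simplification (the real one takes driver options and ID maps and returns a Driver), and the duplicate-name check illustrates the idea rather than quoting Docker's code.

```go
package main

import (
	"fmt"
	"sort"
)

// InitFunc is a simplified, hypothetical stand-in for graphdriver.InitFunc.
type InitFunc func(root string) (string, error)

// drivers holds every registered driver, keyed by name.
var drivers = make(map[string]InitFunc)

// Register adds a driver and refuses duplicate names.
func Register(name string, initFunc InitFunc) error {
	if _, exists := drivers[name]; exists {
		return fmt.Errorf("name already registered %s", name)
	}
	drivers[name] = initFunc
	return nil
}

// init runs automatically before main, just like each driver package's init.
func init() {
	if err := Register("overlay2", func(root string) (string, error) {
		return "overlay2 driver at " + root, nil
	}); err != nil {
		panic(err)
	}
}

func main() {
	names := make([]string, 0, len(drivers))
	for n := range drivers {
		names = append(names, n)
	}
	sort.Strings(names)
	fmt.Println(names) // prints: [overlay2]

	d, err := drivers["overlay2"]("/var/lib/docker/overlay2")
	if err != nil {
		panic(err)
	}
	fmt.Println(d)
}
```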

I. The NewDaemon function

Path: daemon/daemon.go

1.1 Calling NewManager to instantiate the plugin manager

The root path defaults to /var/lib/docker, and the plugin execRoot is /run/docker/plugins:

```go
d.pluginManager, err = plugin.NewManager(plugin.ManagerConfig{
	Root:               filepath.Join(config.Root, "plugins"),
	ExecRoot:           getPluginExecRoot(config.Root),
	Store:              d.PluginStore,
	Executor:           containerdRemote,
	RegistryService:    registryService,
	LiveRestoreEnabled: config.LiveRestoreEnabled,
	LogPluginEvent:     d.LogPluginEvent, // todo: make private
	AuthzMiddleware:    config.AuthzMiddleware,
})
```

1.2 Instantiating layerStore

The layer store is created as follows:

```go
d.layerStore, err = layer.NewStoreFromOptions(layer.StoreOptions{
	StorePath:                 config.Root,
	MetadataStorePathTemplate: filepath.Join(config.Root, "image", "%s", "layerdb"),
	GraphDriver:               driverName,
	GraphDriverOptions:        config.GraphOptions,
	UIDMaps:                   uidMaps,
	GIDMaps:                   gidMaps,
	PluginGetter:              d.PluginStore,
	ExperimentalEnabled:       config.Experimental,
})
```

1.2.1 The NewStoreFromOptions function

It calls New in daemon/graphdriver/driver.go; the store struct implements the Store interface:

```go
// NewStoreFromOptions creates a new Store instance
func NewStoreFromOptions(options StoreOptions) (Store, error) {
	driver, err := graphdriver.New(options.GraphDriver, options.PluginGetter, graphdriver.Options{
		Root:                options.StorePath,
		DriverOptions:       options.GraphDriverOptions,
		UIDMaps:             options.UIDMaps,
		GIDMaps:             options.GIDMaps,
		ExperimentalEnabled: options.ExperimentalEnabled,
	})
	if err != nil {
		return nil, fmt.Errorf("error initializing graphdriver: %v", err)
	}
	logrus.Debugf("Using graph driver %s", driver)

	// Fill the driver name into the layerdb path template.
	fms, err := NewFSMetadataStore(fmt.Sprintf(options.MetadataStorePathTemplate, driver))
	if err != nil {
		return nil, err
	}

	return NewStoreFromGraphDriver(fms, driver)
}
```
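The `fmt.Sprintf(options.MetadataStorePathTemplate, driver)` call works because the `%s` verb uses a value's `String()` method when it implements `fmt.Stringer`, and the overlay2 driver's name is "overlay2". That is how the template becomes the /var/lib/docker/image/overlay2/layerdb path listed earlier. In the sketch, `fakeDriver` and `layerDBPath` are hypothetical names, and a Unix path separator is assumed.

```go
package main

import (
	"fmt"
	"path/filepath"
)

// fakeDriver is a hypothetical stand-in for a graph driver; all that matters
// here is that it implements fmt.Stringer.
type fakeDriver struct{ name string }

func (d fakeDriver) String() string { return d.name }

// layerDBPath expands the template the same way NewStoreFromOptions does:
// %s calls the driver's String method.
func layerDBPath(root string, driver fmt.Stringer) string {
	tmpl := filepath.Join(root, "image", "%s", "layerdb")
	return fmt.Sprintf(tmpl, driver)
}

func main() {
	fmt.Println(layerDBPath("/var/lib/docker", fakeDriver{name: "overlay2"}))
	// prints: /var/lib/docker/image/overlay2/layerdb
}
```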

1.2.1.1 The New function

Path: daemon/graphdriver/driver.go. Let's go through it step by step:

```go
// New creates the driver and initializes it at the specified root.
func New(name string, pg plugingetter.PluginGetter, config Options) (Driver, error) {
	if name != "" {
		logrus.Debugf("[graphdriver] trying provided driver: %s", name) // so the logs show specified driver
		return GetDriver(name, pg, config)
	}

	// (How driversMap, the set of drivers that already have state under
	// config.Root, is computed is omitted from this excerpt.)

	// Walk the priority slice, which lists the storage drivers in order of
	// preference. If a driver exists, getBuiltinDriver calls its initFunc,
	// i.e. the Init function the driver registered; the overlay2 Init is
	// discussed below.
	for _, name := range priority {
		if name == "vfs" {
			// don't use vfs even if there is state present.
			continue
		}
		if _, prior := driversMap[name]; prior {
			// of the state found from prior drivers, check in order of our priority
			// which we would prefer
			driver, err := getBuiltinDriver(name, config.Root, config.DriverOptions, config.UIDMaps, config.GIDMaps)
			if err != nil {
				// There is prior state but the driver failed to initialize:
				// report it instead of silently falling back to another driver.
				return nil, err
			}

			// abort starting when there are other prior configured drivers
			// to ensure the user explicitly selects the driver to load
			if len(driversMap)-1 > 0 {
				var driversSlice []string
				for name := range driversMap {
					driversSlice = append(driversSlice, name)
				}
				return nil, fmt.Errorf("%s contains several valid graphdrivers: %s; Please cleanup or explicitly choose storage driver (-s <DRIVER>)", config.Root, strings.Join(driversSlice, ", "))
			}

			logrus.Infof("[graphdriver] using prior storage driver: %s", name)
			return driver, nil
		}
	}
	// ... (remainder of New omitted)
}
```
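The selection rule in the prior-state loop can be isolated from the rest of the daemon. The sketch below is a hypothetical `pickDriver` that takes the priority order and the set of drivers with existing state, skips vfs, and refuses to choose when more than one driver has state. The priority list in the tests is illustrative, not Docker's actual list, and the sketch simply returns an error where the real function would go on to try other drivers.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// pickDriver sketches the prior-state selection in graphdriver.New:
//   - priority is the preferred order of driver names;
//   - prior holds the drivers that already have state under the root;
//   - vfs is never picked automatically;
//   - if several drivers have state, the user must choose one explicitly.
func pickDriver(priority []string, prior map[string]bool) (string, error) {
	for _, name := range priority {
		if name == "vfs" || !prior[name] {
			continue
		}
		if len(prior) > 1 {
			names := make([]string, 0, len(prior))
			for n := range prior {
				names = append(names, n)
			}
			sort.Strings(names)
			return "", fmt.Errorf("several valid graphdrivers: %s; choose one explicitly with -s", strings.Join(names, ", "))
		}
		return name, nil
	}
	// The real New would now try drivers in priority order; this sketch stops.
	return "", fmt.Errorf("no prior driver state found")
}

func main() {
	priority := []string{"aufs", "overlay2", "devicemapper", "vfs"} // illustrative order
	name, err := pickDriver(priority, map[string]bool{"overlay2": true})
	fmt.Println(name, err) // prints: overlay2 <nil>
}
```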

II. The overlay2 storage driver

2.1 The Init function

Path: daemon/graphdriver/overlay2/overlay.go

```go
// Init returns the a native diff driver for overlay filesystem.
// If overlay filesystem is not supported on the host, graphdriver.ErrNotSupported is returned as error.
// If an overlay filesystem is not supported over an existing filesystem then error graphdriver.ErrIncompatibleFS is returned.
func Init(home string, options []string, uidMaps, gidMaps []idtools.IDMap) (graphdriver.Driver, error) {
	opts, err := parseOptions(options)
	if err != nil {
		return nil, err
	}
	// ... (remainder of Init omitted)
}
```

2.1.1 The supportsOverlay function

It checks whether overlay is supported by reading /proc/filesystems. For example, on my machine:

```
# cat /proc/filesystems
………………
nodev	overlay
	fuseblk
nodev	fuse
nodev	fusectl
```

```go
func supportsOverlay() error {
	// We can try to modprobe overlay first before looking at
	// proc/filesystems for when overlay is supported
	exec.Command("modprobe", "overlay").Run()

	f, err := os.Open("/proc/filesystems")
	if err != nil {
		return err
	}
	defer f.Close()

	s := bufio.NewScanner(f)
	for s.Scan() {
		if s.Text() == "nodev\toverlay" {
			return nil
		}
	}
	logrus.Error("'overlay' not found as a supported filesystem on this host. Please ensure kernel is new enough and has overlay support loaded.")
	return graphdriver.ErrNotSupported
}
```

In Init, `d.naiveDiff = graphdriver.NewNaiveDiffDriver(d, uidMaps, gidMaps)` simply wraps the driver so that it provides four main methods: Diff, Changes, ApplyDiff and DiffSize.

```go
// NewNaiveDiffDriver returns a fully functional driver that wraps the
// given ProtoDriver and adds the capability of the following methods which
// it may or may not support on its own:
//     Diff(id, parent string) (archive.Archive, error)
//     Changes(id, parent string) ([]archive.Change, error)
//     ApplyDiff(id, parent string, diff archive.Reader) (size int64, err error)
//     DiffSize(id, parent string) (size int64, err error)
func NewNaiveDiffDriver(driver ProtoDriver, uidMaps, gidMaps []idtools.IDMap) Driver {
	return &NaiveDiffDriver{ProtoDriver: driver,
		uidMaps: uidMaps,
		gidMaps: gidMaps}
}
```
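The wrapping works through struct embedding: every method of the embedded ProtoDriver is promoted to the wrapper, and the wrapper adds generic methods built only on those primitives. The sketch below shows the pattern with hypothetical, trimmed-down types (`protoDriver`, `naiveDiffDriver`, `overlayLike`). Its `Diff` just reports which directories it would compare; the real generic Diff compares the mounted layer with its parent and produces a tar stream.

```go
package main

import "fmt"

// protoDriver is a hypothetical, trimmed-down stand-in for
// graphdriver.ProtoDriver: the methods a driver implements itself.
type protoDriver interface {
	String() string
	Get(id string) (string, error)
}

// naiveDiffDriver embeds a protoDriver, so its methods are promoted,
// and adds a generic Diff built only on those methods.
type naiveDiffDriver struct {
	protoDriver
}

// Diff only reports which directories it would compare.
func (d *naiveDiffDriver) Diff(id, parent string) (string, error) {
	layerDir, err := d.Get(id)
	if err != nil {
		return "", err
	}
	parentDir, err := d.Get(parent)
	if err != nil {
		return "", err
	}
	return fmt.Sprintf("diff(%s, %s) via %s", layerDir, parentDir, d.String()), nil
}

// overlayLike implements only the proto methods.
type overlayLike struct{}

func (overlayLike) String() string { return "overlay2" }

func (overlayLike) Get(id string) (string, error) {
	return "/var/lib/docker/overlay2/" + id + "/merged", nil
}

func main() {
	d := &naiveDiffDriver{protoDriver: overlayLike{}}
	fmt.Println(d.String()) // promoted from the embedded driver; prints: overlay2
	out, err := d.Diff("child", "parent")
	if err != nil {
		panic(err)
	}
	fmt.Println(out)
}
```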

2.1.2 The NewStoreFromGraphDriver function

```go
// NewStoreFromGraphDriver creates a new Store instance using the provided
// metadata store and graph driver. The metadata store will be used to restore
// the Store.
func NewStoreFromGraphDriver(store MetadataStore, driver graphdriver.Driver) (Store, error) {
	caps := graphdriver.Capabilities{}
	if capDriver, ok := driver.(graphdriver.CapabilityDriver); ok {
		caps = capDriver.Capabilities()
	}
	// ... (remainder omitted)
}
```
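The `driver.(graphdriver.CapabilityDriver)` assertion is Go's optional-interface idiom: drivers that implement the extra interface report their capabilities, and all others get the zero value. Below is a self-contained sketch with hypothetical stand-in types; the `ReproducesExactDiffs` field name is used for illustration and should not be read as a complete description of the real struct.

```go
package main

import "fmt"

// Capabilities, Driver and CapabilityDriver are hypothetical, trimmed-down
// stand-ins for the graphdriver types of the same names.
type Capabilities struct {
	ReproducesExactDiffs bool
}

type Driver interface {
	String() string
}

type CapabilityDriver interface {
	Capabilities() Capabilities
}

// plainDriver implements only Driver.
type plainDriver struct{}

func (plainDriver) String() string { return "plain" }

// capableDriver also implements CapabilityDriver.
type capableDriver struct{}

func (capableDriver) String() string { return "capable" }

func (capableDriver) Capabilities() Capabilities {
	return Capabilities{ReproducesExactDiffs: true}
}

// capsOf returns zero-value capabilities unless the driver opts in through
// the optional interface.
func capsOf(d Driver) Capabilities {
	caps := Capabilities{}
	if capDriver, ok := d.(CapabilityDriver); ok {
		caps = capDriver.Capabilities()
	}
	return caps
}

func main() {
	fmt.Println(capsOf(plainDriver{}).ReproducesExactDiffs)   // prints: false
	fmt.Println(capsOf(capableDriver{}).ReproducesExactDiffs) // prints: true
}
```

The benefit of this design is that new capabilities can be added without forcing every existing driver to implement a new method.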

NewFSStoreBackend creates a filesystem-based store rooted at /var/lib/docker/image/overlay2/imagedb and creates two directories under it, content and metadata, each containing a sha256 subdirectory.

```go
// NewFSStoreBackend returns new filesystem based backend for image.Store
func NewFSStoreBackend(root string) (StoreBackend, error) {
	return newFSStore(root)
}

func newFSStore(root string) (*fs, error) {
	s := &fs{
		root: root,
	}
	if err := os.MkdirAll(filepath.Join(root, contentDirName, string(digest.Canonical)), 0700); err != nil {
		return nil, errors.Wrap(err, "failed to create storage backend")
	}
	if err := os.MkdirAll(filepath.Join(root, metadataDirName, string(digest.Canonical)), 0700); err != nil {
		return nil, errors.Wrap(err, "failed to create storage backend")
	}
	return s, nil
}
```

NewImageStore caches the current images and their layer information:

```go
// NewImageStore returns new store object for given layer store
func NewImageStore(fs StoreBackend, ls LayerGetReleaser) (Store, error) {
	is := &store{
		ls:        ls,
		images:    make(map[ID]*imageMeta),
		fs:        fs,
		digestSet: digestset.NewSet(),
	}

	// load all current images and retain layers
	if err := is.restore(); err != nil {
		return nil, err
	}

	return is, nil
}
```

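To summarize:

In NewDaemon, a whole series of directories and storage backends is defined and created, and the layer structure information is recorded in the image store's images map.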
