Exception in thread "main" java.io.IOException: Trying to load more than 32 hfiles to one family of

The problem:

Command: hbase org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles /user/yz/zhaochao/duotou200/ neibudt_200_cols_hfile20190409_1

Error:

Exception in thread "main" java.io.IOException: Trying to load more than 32 hfiles to one family of

Approach:

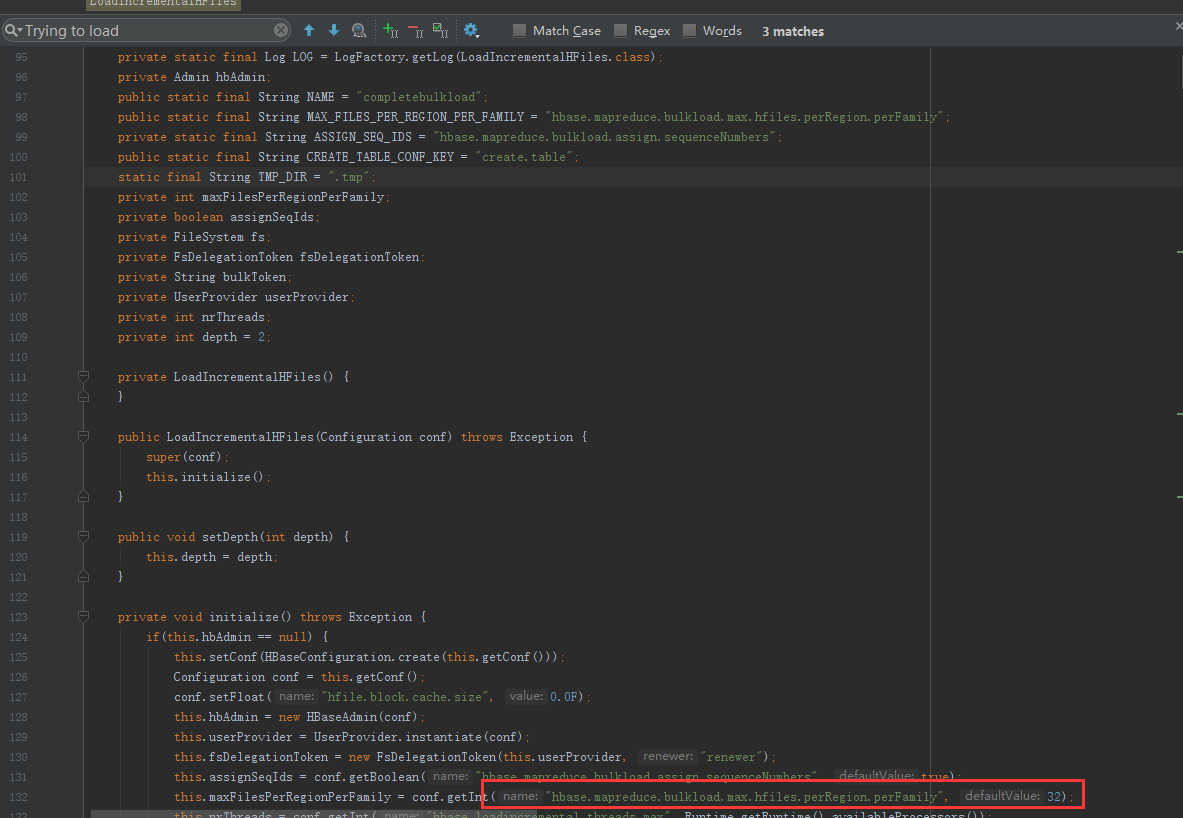

To see where the limit comes from, press Ctrl+N in IntelliJ IDEA, type LoadIncrementalHFiles, and open the source.

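Fix:

Raise the limit. The default is 32 HFiles per region per column family, which is the 32 in the error message: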

-Dhbase.mapreduce.bulkload.max.hfiles.perRegion.perFamily=2000
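For reference, here is a sketch of where the flag goes in the full command. It assumes your HBase version runs LoadIncrementalHFiles through Hadoop's ToolRunner, so generic `-D` options are picked up when they come before the positional arguments. The variable names (`MAX_HFILES`, `HFILE_DIR`, `TABLE`, `CMD`) are only for illustration; the path and table name are the ones from the command above.

```shell
# Hadoop generic options such as -D go right after the class name,
# before the positional arguments (HFile directory, then table name).
MAX_HFILES=2000
HFILE_DIR=/user/yz/zhaochao/duotou200/
TABLE=neibudt_200_cols_hfile20190409_1

CMD="hbase org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles \
-Dhbase.mapreduce.bulkload.max.hfiles.perRegion.perFamily=${MAX_HFILES} \
${HFILE_DIR} ${TABLE}"

echo "$CMD"    # dry run: print the command first
# eval "$CMD"  # uncomment to actually run it on a machine with the HBase client
```

Printing the command first makes it easy to check that the `-D` option did not end up after the table name, where it would be treated as a plain argument.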

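That still wasn't enough: the load fell over once it had processed about 1,500 files.

Second fix:

Keep the number of HFiles down when generating them. In my case they are written by Spark: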

rdd.coalesce(100).saveAsNewAPIHadoopFile(output, classOf[ImmutableBytesWritable],classOf[KeyValue],classOf[HFileOutputFormat2], hbaseConf)
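After regenerating the output, it can help to check how many HFiles actually landed under each column family before running the bulk load again. The helper below is a rough sketch, not part of HBase: `count_hfiles_per_family` is a made-up name, and it assumes the usual `<output>/<family>/<hfile>` layout and the standard `hdfs dfs -ls -R` listing format with the path in the last column. It counts per family only, so it gives an upper bound on the per-region, per-family count that the limit applies to.

```shell
# Count HFiles per column family from an `hdfs dfs -ls -R` listing read on stdin.
# Assumes the layout <output>/<family>/<hfile>; entries whose name starts
# with "_" (e.g. _SUCCESS) are skipped. Directories (lines starting with "d")
# are ignored because only regular files (lines starting with "-") are counted.
count_hfiles_per_family() {
  awk '$1 ~ /^-/ {
         n = split($NF, parts, "/")
         if (parts[n] !~ /^_/) count[parts[n-1]]++
       }
       END { for (f in count) print f, count[f] }' | sort
}

# Usage (needs an HDFS client on the PATH):
# hdfs dfs -ls -R /user/yz/zhaochao/duotou200/ | count_hfiles_per_family
```

If a family still shows far more files than expected, the output may be rolling over into extra HFiles by size, in which case the partition count alone does not cap the file count.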

Done~
