Setting Up a K8s Environment

Lab environment: three CentOS 7.5 machines

172.26.0.10 master
172.26.0.12 node1
172.26.0.14 node2

I. Setting up Kubernetes

1. Install the k8s components on each node

The master host serves as both the etcd node and the Kubernetes master node:

[root@master~]# yum install -y kubernetes etcd flannel ntp

[root@node1~]# yum install -y kubernetes flannel ntp

[root@node2~]# yum install -y kubernetes flannel ntp

Disable the firewall:

[root@master ~]# systemctl stop firewalld && systemctl disable firewalld && systemctl status firewalld

[root@node1 ~]# systemctl stop firewalld && systemctl disable firewalld && systemctl status firewalld

[root@node2 ~]# systemctl stop firewalld && systemctl disable firewalld && systemctl status firewalld

Configure the hostname and the hosts file on every node:

vi /etc/hosts

172.26.0.10 master

172.26.0.12 node1

172.26.0.14 node2
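Editing the hosts file by hand on three machines is easy to get wrong, so the same entries could be added with a small script. The following is a minimal sketch, not part of the original procedure: `add_host_entry` is a hypothetical helper, and the demo writes to a scratch file rather than the real `/etc/hosts`.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Hypothetical helper: append "IP NAME" to a hosts-style file unless
# NAME already appears as a hostname field in that file.
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    if ! awk -v n="$name" '
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demo against a scratch file; on a real node pass /etc/hosts (as root).
hosts_file=$(mktemp)
add_host_entry "$hosts_file" 172.26.0.10 master
add_host_entry "$hosts_file" 172.26.0.12 node1
add_host_entry "$hosts_file" 172.26.0.14 node2
add_host_entry "$hosts_file" 172.26.0.12 node1   # already present, so nothing is added
cat "$hosts_file"
```

Because the helper skips names that are already mapped, running it twice on the same node does not duplicate entries.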

2. Configure etcd

[root@master ~]# vim /etc/etcd/etcd.conf    # edit the existing file; only the following settings need to change

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_CLIENT_URLS="http://localhost:2379,http://172.26.0.10:2379"

ETCD_NAME="etcd"

ETCD_ADVERTISE_CLIENT_URLS="http://172.26.0.10:2379"

Start the service:

[root@master ~]# systemctl start etcd

[root@master ~]# systemctl status etcd

[root@master ~]# systemctl enable etcd

etcd serves client requests on port 2379.

Verify that it is listening:

[root@master~]# netstat -antup | grep 2379

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 15901/etcd

tcp 0 0 172.26.0.10:2379 0.0.0.0:* LISTEN 15901/etcd

Check the etcd cluster member list; there is only one member here:

[root@master~]# etcdctl member list

8e9e05c52164694d: name=etcd peerURLs=http://localhost:2380 clientURLs=http://172.26.0.10:2379 isLeader=true
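When this check is scripted, the leader can be picked out of the member list automatically. The sketch below assumes the v2-style `etcdctl member list` output format shown above; `leader_name` is a hypothetical helper, and the demo feeds it the sample line rather than a live cluster.

```shell
#!/bin/sh
# leader_name: read `etcdctl member list` output (v2 format) on stdin
# and print the name= value of every member marked isLeader=true.
leader_name() {
    awk '/isLeader=true/ {
        for (i = 1; i <= NF; i++)
            if ($i ~ /^name=/) { sub(/^name=/, "", $i); print $i }
    }'
}

# Sample line copied from the output above.
sample='8e9e05c52164694d: name=etcd peerURLs=http://localhost:2380 clientURLs=http://172.26.0.10:2379 isLeader=true'
printf '%s\n' "$sample" | leader_name   # prints: etcd
```

On the master itself the same function would be used as `etcdctl member list | leader_name`.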

- Configure the master server

a. Edit the common Kubernetes config file

[root@master ~]# vim /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://172.26.0.10:8080"

b. Edit the apiserver config file

[root@master ~]# vim /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_ETCD_SERVERS="--etcd-servers=http://172.26.0.10:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

KUBE_ADMISSION_CONTROL="--admission-control=AlwaysAdmit"

KUBE_API_ARGS=""

c. Edit the kube-scheduler config file

[root@master ~]# vim /etc/kubernetes/scheduler

KUBE_SCHEDULER_ARGS="--address=0.0.0.0"

Configure etcd to define the IP range that Docker containers in the cluster will use:

[root@master~]# etcdctl mkdir /k8s/network

[root@master~]# etcdctl get /k8s/network/config
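The `get` above only returns a value once the key has been written, and the step that writes it does not appear in the text. Since `subnet.env` later reports `FLANNEL_NETWORK=10.255.0.0/16`, the key was presumably set to flannel's JSON network config with that range. The sketch below builds that document; `flannel_config` is a hypothetical helper, and the `etcdctl set` line is left as a comment because it assumes the v2 `etcdctl` installed on the master.

```shell
#!/bin/sh
# flannel_config CIDR: print the JSON document flannel reads from
# <FLANNEL_ETCD_PREFIX>/config. Only the "Network" field is set here.
flannel_config() {
    printf '{"Network": "%s"}\n' "$1"
}

# 10.255.0.0/16 is taken from the FLANNEL_NETWORK value shown later.
cfg=$(flannel_config 10.255.0.0/16)
echo "$cfg"

# On the master (assumption: etcdctl v2 syntax), the key would be written with:
#   etcdctl set /k8s/network/config "$cfg"
```

After the key is written, `etcdctl get /k8s/network/config` should echo the same JSON back.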

[root@master ~]# vim /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://172.26.0.10:2379"

FLANNEL_ETCD_PREFIX="/k8s/network"

FLANNEL_OPTIONS="--iface=ens192"    # physical NIC used for flannel traffic

[root@master~]# systemctl restart flanneld

[root@master~]# cat /run/flannel/subnet.env

FLANNEL_NETWORK=10.255.0.0/16

FLANNEL_SUBNET=10.255.62.1/24

FLANNEL_MTU=1472

FLANNEL_IPMASQ=false

[root@master~]# cat /run/flannel/docker

DOCKER_OPT_BIP="--bip=10.255.62.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=true"

DOCKER_OPT_MTU="--mtu=1472"

DOCKER_NETWORK_OPTIONS=" --bip=10.255.62.1/24 --ip-masq=true --mtu=1472"
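The two files above should agree: the `--bip` handed to Docker is the subnet flannel leased for this host. A quick consistency check could look like the sketch below. `bip_matches_subnet` is a hypothetical helper, and the demo uses scratch copies filled with the values shown above rather than the real files under `/run/flannel`.

```shell
#!/bin/sh
# bip_matches_subnet SUBNET_ENV DOCKER_ENV
# Succeeds when DOCKER_OPT_BIP in the docker env file equals
# "--bip=" followed by FLANNEL_SUBNET from subnet.env.
bip_matches_subnet() {
    subnet_env=$1
    docker_env=$2
    (
        # Source both files in a subshell so their variables do not leak.
        . "$subnet_env"
        . "$docker_env"
        [ "$DOCKER_OPT_BIP" = "--bip=$FLANNEL_SUBNET" ]
    )
}

# Scratch copies with the values from the listings above.
tmp=$(mktemp -d)
printf 'FLANNEL_SUBNET=10.255.62.1/24\n' > "$tmp/subnet.env"
printf 'DOCKER_OPT_BIP="--bip=10.255.62.1/24"\n' > "$tmp/docker"

if bip_matches_subnet "$tmp/subnet.env" "$tmp/docker"; then
    echo "docker bridge matches the flannel subnet"
else
    echo "mismatch between docker bridge and flannel subnet"
fi
```

On a real host the arguments would be `/run/flannel/subnet.env` and `/run/flannel/docker`.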

- Start the four services on the master

[root@master ~]# systemctl restart kube-apiserver kube-controller-manager kube-scheduler flanneld

[root@master ~]# systemctl status kube-apiserver kube-controller-manager kube-scheduler flanneld

[root@master ~]# systemctl enable kube-apiserver kube-controller-manager kube-scheduler flanneld

- Configure the minion (worker node) servers

Note: every minion node is configured the same way; node1 is used as the example here.

a. Configure the flanneld service

[root@node1 ~]# vim /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://172.26.0.10:2379"

FLANNEL_ETCD_PREFIX="/k8s/network"

FLANNEL_OPTIONS="--iface=ens192"    # physical NIC used for flannel traffic

[root@node1 ~]# vim /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://172.26.0.10:8080"

[root@node1 ~]# vim /etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_HOSTNAME="--hostname-override=node1"

KUBELET_API_SERVER="--api-servers=http://172.26.0.10:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS=""

b. Start the services on node1

[root@node1 ~]# systemctl restart flanneld kube-proxy kubelet docker

[root@node1 ~]# systemctl enable flanneld kube-proxy kubelet docker

[root@node1 ~]# systemctl status flanneld kube-proxy kubelet docker

Note: repeat the steps above on node2, setting --hostname-override=node2 in /etc/kubernetes/kubelet.
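Among the node settings shown, the only value that names the node itself is the kubelet hostname override, so node1's kubelet file can be adapted for node2 with one substitution. This is a minimal sketch: `set_hostname_override` is a hypothetical helper that filters config text on stdin, and the demo runs on the single line from the listing above.

```shell
#!/bin/sh
# set_hostname_override NAME: rewrite the value of --hostname-override
# in kubelet config text read from stdin, leaving other text unchanged.
set_hostname_override() {
    sed "s/--hostname-override=[^\"]*/--hostname-override=$1/"
}

node1_line='KUBELET_HOSTNAME="--hostname-override=node1"'
printf '%s\n' "$node1_line" | set_hostname_override node2
# prints: KUBELET_HOSTNAME="--hostname-override=node2"
```

For example, `set_hostname_override node2 < kubelet.node1 > kubelet.node2` would produce the file to copy to node2, where both file names are placeholders.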

Verify that the installation succeeded:

[root@master ~]# kubectl get nodes

NAME STATUS AGE

node1 Ready 2y

node2 Ready 2y

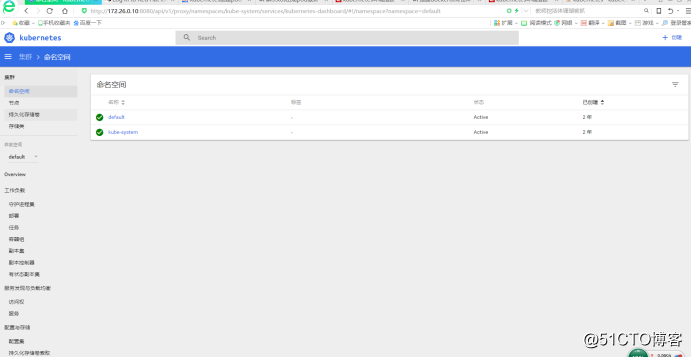
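If this check runs from a script rather than by eye, the STATUS column can be filtered for nodes that are not ready. The sketch below assumes the three-column layout shown above; `not_ready_nodes` is a hypothetical helper, and the sample input is made up to include one failing node.

```shell
#!/bin/sh
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the
# name of every node whose STATUS column is not exactly "Ready".
# The header line is skipped.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Made-up sample: node2 is shown as NotReady for illustration only.
sample='NAME STATUS AGE
node1 Ready 2y
node2 NotReady 2y'
printf '%s\n' "$sample" | not_ready_nodes   # prints: node2
```

On the master this would be `kubectl get nodes | not_ready_nodes`, and empty output means every node reported `Ready`.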

II. Setting up the Kubernetes web management UI

- Create the dashboard-deployment.yaml config file

[root@master~]# vim /etc/kubernetes/dashboard-deployment.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  # Keep the name in sync with image version and
  # gce/coreos/kube-manifests/addons/dashboard counterparts
  name: kubernetes-dashboard-latest
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
        version: latest
        kubernetes.io/cluster-service: "true"
    spec:
      containers:
      - name: kubernetes-dashboard
        image: docker.io/bestwu/kubernetes-dashboard-amd64:v1.6.3
        imagePullPolicy: IfNotPresent
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        ports:
        - containerPort: 9090
        args:
        - --apiserver-host=http://172.26.0.10:8080
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30

2. Create and edit the dashboard-service.yaml file:

[root@master ~]# vim /etc/kubernetes/dashboard-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

- node1 and node2 need to pull two images in advance

[root@node1 ~]# docker search kubernetes-dashboard-amd

[root@node1 ~]# docker pull docker.io/bestwu/kubernetes-dashboard-amd64:v1.6.3

[root@node1 ~]# docker search pod-infrastructure

[root@node1 ~]# docker pull docker.io/xplenty/rhel7-pod-infrastructure

- Start the dashboard deployment and service

[root@master ~]# kubectl create -f /etc/kubernetes/dashboard-deployment.yaml

[root@master ~]# kubectl create -f /etc/kubernetes/dashboard-service.yaml

[root@master ~]# kubectl get deployment --all-namespaces

[root@master ~]# kubectl get svc --all-namespaces

Note: an error encountered during installation

kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

The symlink's target file, /etc/rhsm/ca/redhat-uep.pem, does not exist.

Fix:

wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm

rpm2cpio python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm | cpio -iv --to-stdout ./etc/rhsm/ca/redhat-uep.pem | tee /etc/rhsm/ca/redhat-uep.pem
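Whether the fix took effect can be confirmed by checking that the certificate symlink now resolves. The sketch below is an illustration only: `dangling_symlink` is a hypothetical helper, and the link path is an assumption, since the text above does not say which symlink was broken.

```shell
#!/bin/sh
# dangling_symlink PATH: succeed when PATH is a symlink whose target
# does not exist ([ -e ] follows the link, [ -L ] does not).
dangling_symlink() {
    [ -L "$1" ] && [ ! -e "$1" ]
}

# Assumed location of the symlink; adjust to the path reported on your node.
link=/etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt

if dangling_symlink "$link"; then
    echo "$link points to a missing file"
else
    echo "$link is not a dangling symlink"
fi
```

If the link is still reported as pointing to a missing file after the `rpm2cpio` step, the extraction did not write `/etc/rhsm/ca/redhat-uep.pem`.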

Installation complete.

Reposted from //blog.51cto.com/5535123/2354451
