Building Ceph Distributed Storage

Environment:

| OS | IP address | Hostname (login user) | Roles |
| --- | --- | --- | --- |
| CentOS 7.4 64-bit 1611 | 10.199.100.170 | dlp (yzyu), ceph-client (root) | admin-node, ceph-client |
| CentOS 7.4 64-bit 1611 | 10.199.100.171 | node1 (yzyu), one additional disk | mon-node, osd0-node, mds-node |
| CentOS 7.4 64-bit 1611 | 10.199.100.172 | node2 (yzyu), one additional disk | mon-node, osd1-node |

Configure the base environment

[root@dlp ~]# useradd yzyu

[root@dlp ~]# echo "yzyu" |passwd --stdin yzyu

[root@dlp ~]# cat <<END >>/etc/hosts

10.199.100.170 dlp

10.199.100.171 node1

10.199.100.172 node2

END

[root@dlp ~]# echo "yzyu ALL = (root) NOPASSWD:ALL" >> /etc/sudoers.d/yzyu

[root@dlp ~]# chmod 0440 /etc/sudoers.d/yzyu

[root@node1 ~]# useradd yzyu

[root@node1 ~]# echo "yzyu" |passwd --stdin yzyu

[root@node1 ~]# cat <<END >>/etc/hosts

10.199.100.170 dlp

10.199.100.171 node1

10.199.100.172 node2

END

[root@node1 ~]# echo "yzyu ALL = (root) NOPASSWD:ALL" >> /etc/sudoers.d/yzyu

[root@node1 ~]# chmod 0440 /etc/sudoers.d/yzyu

[root@node2 ~]# useradd yzyu

[root@node2 ~]# echo "yzyu" |passwd --stdin yzyu

[root@node2 ~]# cat <<END >>/etc/hosts

10.199.100.170 dlp

10.199.100.171 node1

10.199.100.172 node2

END

[root@node2 ~]# echo "yzyu ALL = (root) NOPASSWD:ALL" >> /etc/sudoers.d/yzyu

[root@node2 ~]# chmod 0440 /etc/sudoers.d/yzyu
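Because the heredocs above append with `>>`, rerunning them adds duplicate lines to /etc/hosts. The following is a minimal sketch of an idempotent alternative; the function name `add_host_entry` and the `HOSTS_FILE` variable are hypothetical helpers introduced for illustration, and the demo writes to a temporary file rather than to /etc/hosts.

```shell
#!/usr/bin/env bash
# Append "IP NAME" to a hosts-style file only when NAME is not already listed.
# add_host_entry and HOSTS_FILE are illustrative names, not part of the original steps.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # awk exits 0 when NAME appears as a hostname field on a non-comment line.
    if ! awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demo against a temporary file; set HOSTS_FILE=/etc/hosts (as root) on a real node.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"
add_host_entry "$HOSTS_FILE" 10.199.100.170 dlp
add_host_entry "$HOSTS_FILE" 10.199.100.171 node1
add_host_entry "$HOSTS_FILE" 10.199.100.172 node2
add_host_entry "$HOSTS_FILE" 10.199.100.172 node2   # repeated call adds nothing
cat "$HOSTS_FILE"
```

Running the same script on dlp, node1, and node2 keeps the three hosts files identical without hand-copying the heredoc.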

Configure the NTP time service

[root@dlp ~]# yum -y install ntp ntpdate

[root@dlp ~]# sed -i '/^server/s/^/#/g' /etc/ntp.conf

[root@dlp ~]# sed -i '25aserver 127.127.1.0\nfudge 127.127.1.0 stratum 8' /etc/ntp.conf

[root@dlp ~]# systemctl start ntpd

[root@dlp ~]# systemctl enable ntpd

[root@dlp ~]# netstat -lntup

[root@node1 ~]# yum -y install ntpdate

[root@node1 ~]# /usr/sbin/ntpdate 10.199.100.170

[root@node1 ~]# echo "/usr/sbin/ntpdate 10.199.100.170" >>/etc/rc.local

[root@node1 ~]# chmod +x /etc/rc.local

[root@node2 ~]# yum -y install ntpdate

[root@node2 ~]# /usr/sbin/ntpdate 10.199.100.170

[root@node2 ~]# echo "/usr/sbin/ntpdate 10.199.100.170" >>/etc/rc.local

[root@node2 ~]# chmod +x /etc/rc.local
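Ceph monitors are sensitive to clock drift, so it can help to confirm the offset after syncing. The sketch below parses `ntpdate -q` style output and checks the offset against a limit. The helper name `offset_within` is hypothetical, the sample line format is an assumption about what `ntpdate -q` prints, and the 0.05 s limit is assumed to match the default monitor drift tolerance of this Ceph generation.

```shell
#!/usr/bin/env bash
# Read `ntpdate -q`-style text on stdin; succeed when |offset| <= LIMIT seconds.
# Assumed input line: "server 10.199.100.170, stratum 9, offset 0.000123, delay 0.02571"
offset_within() {
    local limit="$1"
    awk -v limit="$limit" '
        /^server / {
            for (i = 1; i < NF; i++) {
                if ($i == "offset") {
                    v = $(i + 1)
                    sub(/,$/, "", v)   # drop the trailing comma
                    off = v + 0        # force numeric comparison
                    seen = 1
                }
            }
        }
        END {
            if (!seen) exit 2          # no offset found in the input
            if (off < 0) off = -off
            exit ((off <= limit + 0) ? 0 : 1)
        }'
}

# Usage on a node (not executed here):
#   /usr/sbin/ntpdate -q 10.199.100.170 | offset_within 0.05 || echo "clock offset too large"
```

Exit status 0 means the offset is within the limit, 1 means it is too large, and 2 means no offset could be parsed.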

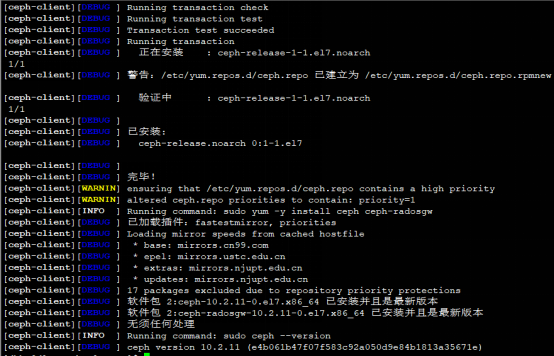

Install Ceph on the dlp, node1, and node2 nodes

[root@dlp ~]# yum -y install yum-utils

[root@dlp ~]# yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/

[root@dlp ~]# yum -y install epel-release --nogpgcheck

[root@dlp ~]# cat <<END >>/etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for \$basearch

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/\$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

END

[root@dlp ~]# ls /etc/yum.repos.d/ ## the default official repos must be present, together with the EPEL repo and the NetEase (163) Ceph repo, for the installation to work

bak CentOS-fasttrack.repo ceph.repo

CentOS-Base.repo CentOS-Media.repo dl.fedoraproject.org_pub_epel_7_x86_64_.repo

CentOS-CR.repo CentOS-Sources.repo epel.repo

CentOS-Debuginfo.repo CentOS-Vault.repo epel-testing.repo

[root@dlp ~]# su - yzyu

[yzyu@dlp ~]$ mkdir ceph-cluster ## create the Ceph working directory

[yzyu@dlp ~]$ cd ceph-cluster

[yzyu@dlp ceph-cluster]$ sudo yum -y install ceph-deploy ## install the Ceph deployment tool

[yzyu@dlp ceph-cluster]$ sudo yum -y install ceph --nogpgcheck ## install Ceph itself

[root@node1 ~]# yum -y install yum-utils

[root@node1 ~]# yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/

[root@node1 ~]# yum -y install epel-release --nogpgcheck

[root@node1 ~]# cat <<END >>/etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for \$basearch

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/\$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

END

[root@node1 ~]# su - yzyu

[yzyu@node1 ~]$ mkdir ceph-cluster

[yzyu@node1 ~]$ cd ceph-cluster

[yzyu@node1 ceph-cluster]$ sudo yum -y install ceph-deploy

[yzyu@node1 ceph-cluster]$ sudo yum -y install ceph --nogpgcheck

[yzyu@node1 ceph-cluster]$ sudo yum -y install deltarpm

[root@node2 ~]# yum -y install yum-utils

[root@node2 ~]# yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/

[root@node2 ~]# yum -y install epel-release --nogpgcheck

[root@node2 ~]# cat <<END >>/etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for \$basearch

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/\$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.163.com/ceph/rpm-jewel/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.163.com/ceph/keys/release.asc

priority=1

END

[root@node2 ~]# su - yzyu

[yzyu@node2 ~]$ mkdir ceph-cluster

[yzyu@node2 ~]$ cd ceph-cluster

[yzyu@node2 ceph-cluster]$ sudo yum -y install ceph-deploy

[yzyu@node2 ceph-cluster]$ sudo yum -y install ceph --nogpgcheck

[yzyu@node2 ceph-cluster]$ sudo yum -y install deltarpm
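The same repo file is typed out on all three hosts, which invites copy errors. As a rough sketch, the file could instead be rendered by one small function; `render_ceph_repo` is a hypothetical helper, and unlike the file above it derives the `gpgkey` URL from the same mirror base as `baseurl` (harmless here because `gpgcheck=0`).

```shell
#!/usr/bin/env bash
# Print a ceph.repo for a given release name and mirror base URL.
# render_ceph_repo is an illustrative helper, not a Ceph or yum command.
render_ceph_repo() {
    local release="$1" mirror="$2" section path
    # Each item is "<section name>:<path under el7/>"; single quotes keep $basearch literal for yum.
    for section in 'Ceph:$basearch' 'Ceph-noarch:noarch' 'ceph-source:SRPMS'; do
        path="${section#*:}"
        cat <<EOF
[${section%%:*}]
name=Ceph packages (${path})
baseurl=${mirror}/rpm-${release}/el7/${path}
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=${mirror}/keys/release.asc
priority=1

EOF
    done
}

# Preview the result; on a real host redirect it with
#   render_ceph_repo jewel http://mirrors.163.com/ceph | sudo tee /etc/yum.repos.d/ceph.repo
render_ceph_repo jewel http://mirrors.163.com/ceph
```

Writing the file with `tee` (overwrite) instead of `>>` also avoids duplicated sections when the step is repeated.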

Manage the storage nodes from the dlp node: install and register the services and node information

[yzyu@dlp ceph-cluster]$ pwd ## the current directory must be the Ceph cluster directory

/home/yzyu/ceph-cluster

[yzyu@dlp ceph-cluster]$ ssh-keygen -t rsa ## the admin node manages the mon nodes remotely, so create a key pair and copy the public key to them

[yzyu@dlp ceph-cluster]$ ssh-copy-id yzyu@dlp

[yzyu@dlp ceph-cluster]$ ssh-copy-id yzyu@node1

[yzyu@dlp ceph-cluster]$ ssh-copy-id yzyu@node2

[yzyu@dlp ceph-cluster]$ ssh-copy-id root@ceph-client

[yzyu@dlp ceph-cluster]$ cat <<END >>/home/yzyu/.ssh/config

Host dlp

Hostname dlp

User yzyu

Host node1

Hostname node1

User yzyu

Host node2

Hostname node2

User yzyu

END

[yzyu@dlp ceph-cluster]$ chmod 644 /home/yzyu/.ssh/config
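The SSH client configuration above repeats one stanza per host. A small sketch of generating it from a host list follows; `render_ssh_config` is a hypothetical helper name, and the output is only printed here rather than written to `~/.ssh/config`.

```shell
#!/usr/bin/env bash
# Print one "Host" stanza per host name, all logging in as the same user.
# render_ssh_config is an illustrative helper, not an OpenSSH tool.
render_ssh_config() {
    local user="$1" host
    shift
    for host in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n' "$host" "$host" "$user"
    done
}

# Preview; on dlp this could be redirected to /home/yzyu/.ssh/config.
render_ssh_config yzyu dlp node1 node2
```

With this file in place, `ssh node1` connects as yzyu without naming the user, which is what ceph-deploy relies on.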

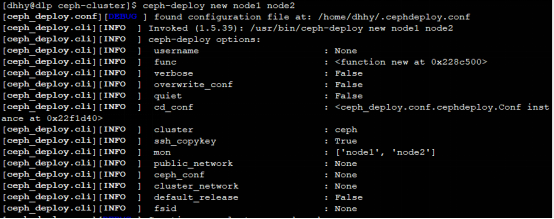

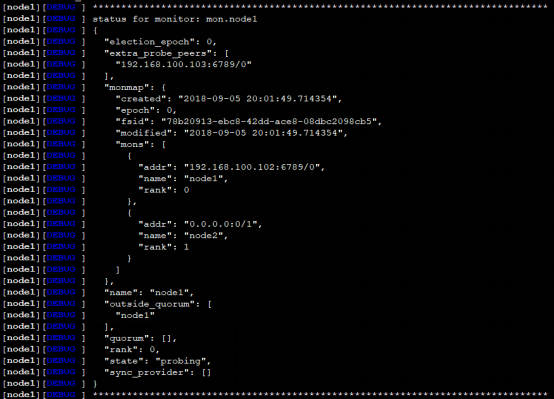

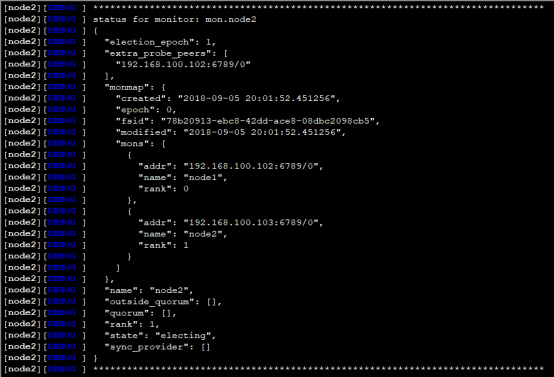

[yzyu@dlp ceph-cluster]$ ceph-deploy new node1 node2 ## initialize the cluster with the monitor nodes

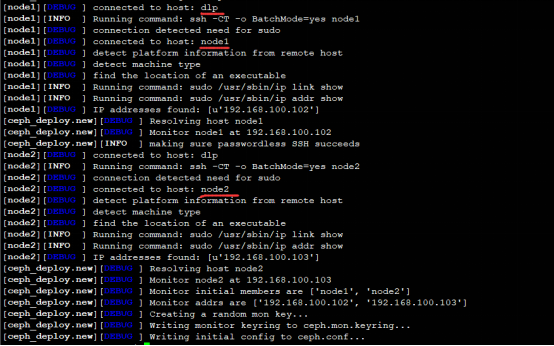

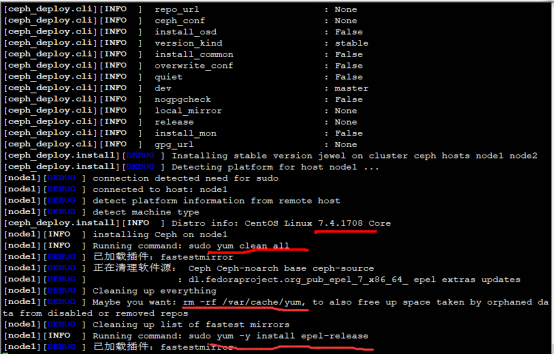

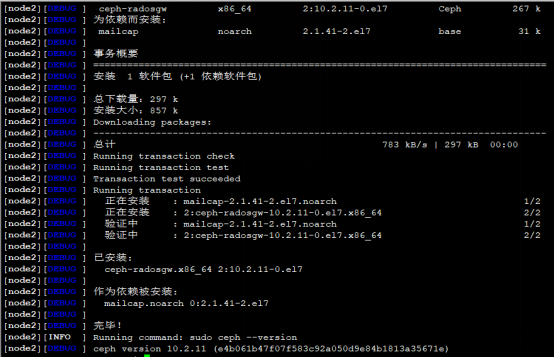

[yzyu@dlp ceph-cluster]$ cat <<END >>/home/yzyu/ceph-cluster/ceph.conf

osd pool default size = 2

END

[yzyu@dlp ceph-cluster]$ ceph-deploy install node1 node2 ## install Ceph

Note: the configuration file on each node lives under /etc/ceph/ and is synchronized automatically from the dlp admin node.

Configure the osd1 storage device on node1:

[yzyu@node1 ~]$ sudo fdisk /dev/sdc...sdc ## partition the disks, converting them to GPT

[yzyu@node1 ~]$ sudo pvcreate /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1 /dev/sdj1 /dev/sdk1 /dev/sdl1 /dev/sdm1 /dev/sdn1 /dev/sdo1 /dev/sdp1 /dev/sdq1 /dev/sdr1 /dev/sds1 /dev/sdt1 /dev/sdu1 /dev/sdv1 /dev/sdw1 /dev/sdx1 /dev/sdy1 /dev/sdz1 ## create the PVs

[yzyu@node1 ~]$ sudo vgcreate vg1 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1 /dev/sdj1 /dev/sdk1 /dev/sdl1 /dev/sdm1 /dev/sdn1 /dev/sdo1 /dev/sdp1 /dev/sdq1 /dev/sdr1 /dev/sds1 /dev/sdt1 /dev/sdu1 /dev/sdv1 /dev/sdw1 /dev/sdx1 /dev/sdy1 /dev/sdz1 ## create the VG

[yzyu@node1 ~]$ sudo lvcreate -L 130T -n lv1 vg1 ## allocate the space

[yzyu@node1 ~]$ mkfs.xfs /dev/vg1/lv1 ## format

[yzyu@node1 ~]$ sudo partx -a /dev/vg1/lv1

[yzyu@node1 ~]$ sudo mkfs -t xfs /dev/vg1/lv1

[yzyu@node1 ~]$ sudo mkdir /var/local/osd1

[yzyu@node1 ~]$ sudo vi /etc/fstab

/dev/vg1/lv1 /var/local/osd1 xfs defaults 0 0

:wq

[yzyu@node1 ~]$ sudo mount -a

[yzyu@node1 ~]$ sudo chmod 777 /var/local/osd1

[yzyu@node1 ~]$ sudo chown ceph:ceph /var/local/osd1/

[yzyu@node1 ~]$ ls -ld /var/local/osd1/

[yzyu@node1 ~]$ df -hT

[yzyu@node1 ~]$ exit

Configure the osd2 storage device on node2:

[yzyu@node2 ~]$ sudo fdisk /dev/sdc...sdc

[yzyu@node2 ~]$ sudo pvcreate /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1 /dev/sdj1 /dev/sdk1 /dev/sdl1 /dev/sdm1 /dev/sdn1 /dev/sdo1 /dev/sdp1 /dev/sdq1 /dev/sdr1 /dev/sds1 /dev/sdt1 /dev/sdu1 /dev/sdv1 /dev/sdw1 /dev/sdx1 /dev/sdy1 /dev/sdz1

[yzyu@node2 ~]$ sudo vgcreate vg2 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1 /dev/sdj1 /dev/sdk1 /dev/sdl1 /dev/sdm1 /dev/sdn1 /dev/sdo1 /dev/sdp1 /dev/sdq1 /dev/sdr1 /dev/sds1 /dev/sdt1 /dev/sdu1 /dev/sdv1 /dev/sdw1 /dev/sdx1 /dev/sdy1 /dev/sdz1

[yzyu@node2 ~]$ sudo lvcreate -L 130T -n lv2 vg2

[yzyu@node2 ~]$ mkfs.xfs /dev/vg2/lv2

[yzyu@node2 ~]$ sudo partx -a /dev/vg2/lv2

[yzyu@node2 ~]$ sudo mkfs -t xfs /dev/vg2/lv2

[yzyu@node2 ~]$ sudo mkdir /var/local/osd2

[yzyu@node2 ~]$ sudo vi /etc/fstab

/dev/vg2/lv2 /var/local/osd2 xfs defaults 0 0

:wq

[yzyu@node2 ~]$ sudo mount -a

[yzyu@node2 ~]$ sudo chmod 777 /var/local/osd2

[yzyu@node2 ~]$ sudo chown ceph:ceph /var/local/osd2/

[yzyu@node2 ~]$ ls -ld /var/local/osd2/

[yzyu@node2 ~]$ df -hT

[yzyu@node2 ~]$ exit
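The `pvcreate` and `vgcreate` commands list 24 partitions by hand. In bash the same list can be produced with brace expansion, as in the sketch below. It is a dry run that only prints the LVM commands; the array name `pv_devices` is an illustrative choice, and it assumes the partitions really are /dev/sdc1 through /dev/sdz1 as listed above.

```shell
#!/usr/bin/env bash
# Build the partition list /dev/sdc1 ... /dev/sdz1 with bash brace expansion.
pv_devices=( /dev/sd{c..z}1 )

echo "${#pv_devices[@]} partitions, first ${pv_devices[0]}, last ${pv_devices[${#pv_devices[@]}-1]}"

# Dry run: print the commands instead of executing them.
# Remove the leading "echo" on a real node once the list looks right.
echo sudo pvcreate "${pv_devices[@]}"
echo sudo vgcreate vg1 "${pv_devices[@]}"
```

Printing first makes it easy to compare the generated list against `lsblk` before any disk is touched.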

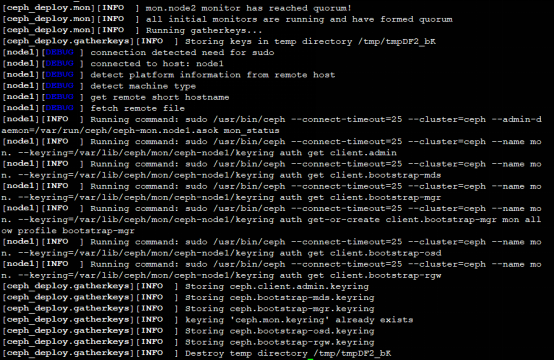

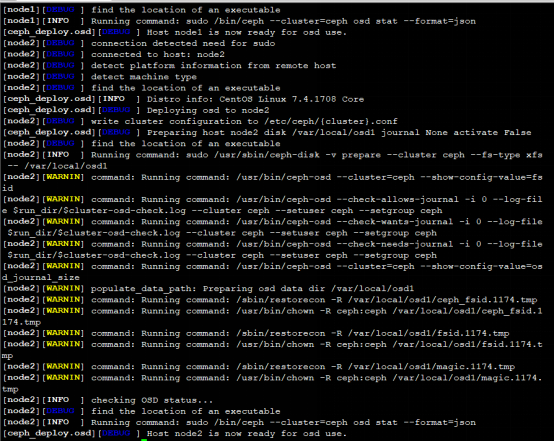

Register the nodes from the dlp admin node:

[yzyu@dlp ceph-cluster]$ ceph-deploy osd prepare node1:/var/local/osd1 node2:/var/local/osd2 ## prepare the OSDs and specify each node's data directory

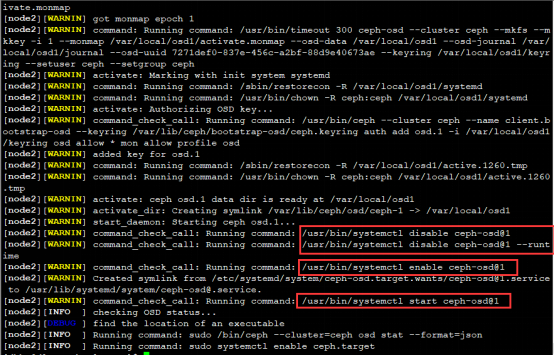

[yzyu@dlp ceph-cluster]$ chmod +r /home/yzyu/ceph-cluster/ceph.client.admin.keyring

[yzyu@dlp ceph-cluster]$ ceph-deploy osd activate node1:/var/local/osd1 node2:/var/local/osd2 ## activate the OSD nodes

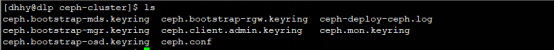

[yzyu@dlp ceph-cluster]$ ceph-deploy admin node1 node2 ## copy the admin keyring to the nodes

[yzyu@dlp ceph-cluster]$ sudo cp /home/yzyu/ceph-cluster/ceph.client.admin.keyring /etc/ceph/

[yzyu@dlp ceph-cluster]$ sudo cp /home/yzyu/ceph-cluster/ceph.conf /etc/ceph/

[yzyu@dlp ceph-cluster]$ ls /etc/ceph/

ceph.client.admin.keyring ceph.conf rbdmap

[yzyu@dlp ceph-cluster]$ ceph quorum_status --format json-pretty ## show detailed quorum information for the Ceph cluster

[yzyu@dlp ceph-cluster]$ ceph health

HEALTH_OK

[yzyu@dlp ceph-cluster]$ ceph -s ## show the Ceph cluster status

cluster 24fb6518-8539-4058-9c8e-d64e43b8f2e2

health HEALTH_OK

monmap e1: 2 mons at {node1=10.199.100.171:6789/0,node2=10.199.100.172:6789/0}

election epoch 6, quorum 0,1 node1,node2

osdmap e10: 2 osds: 2 up, 2 in

flags sortbitwise,require_jewel_osds

pgmap v20: 64 pgs, 1 pools, 0 bytes data, 0 objects

10305 MB used, 30632 MB / 40938 MB avail ## used, available, total capacity

64 active+clean
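For scripted checks, the `osdmap` line of the status report is enough to tell whether every OSD is up and in. The sketch below parses the Jewel-style text format shown above; `osds_all_up` is a hypothetical helper, and newer Ceph releases print the status in a different layout, so the pattern is an assumption tied to this output.

```shell
#!/usr/bin/env bash
# Succeed when a Jewel-style `ceph -s` report on stdin shows all OSDs up and in.
# Expected line shape: "osdmap e10: 2 osds: 2 up, 2 in"
osds_all_up() {
    awk '
        /osdmap/ {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^osds:?$/) total   = $(i - 1) + 0
                if ($i ~ /^up,?$/)   up      = $(i - 1) + 0
                if ($i ~ /^in,?$/)   in_osds = $(i - 1) + 0
            }
            seen = 1
        }
        END { exit ((seen && total > 0 && up == total && in_osds == total) ? 0 : 1) }'
}

# Usage on the admin node (not executed here):
#   ceph -s | osds_all_up && echo "all OSDs up and in"
```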

[yzyu@dlp ceph-cluster]$ ceph osd tree

ID WEIGHT TYPE NAME UP/DOWN REWEIGHT PRIMARY-AFFINITY

-1 0.03897 root default

-2 0.01949 host node1

0 0.01949 osd.0 up 1.00000 1.00000

-3 0.01949 host node2

1 0.01949 osd.1 up 1.00000 1.00000

[yzyu@dlp ceph-cluster]$ ssh yzyu@node1 ## verify the listening ports, configuration file, and disk usage on node1

[yzyu@node1 ~]$ df -hT |grep lv1

/dev/vg1/lv1 xfs 20G 5.1G 15G 26% /var/local/osd1

[yzyu@node1 ~]$ du -sh /var/local/osd1/

5.1G /var/local/osd1/

[yzyu@node1 ~]$ ls /var/local/osd1/

activate.monmap active ceph_fsid current fsid journal keyring magic ready store_version superblock systemd type whoami

[yzyu@node1 ~]$ ls /etc/ceph/

ceph.client.admin.keyring ceph.conf rbdmap tmppVBe_2

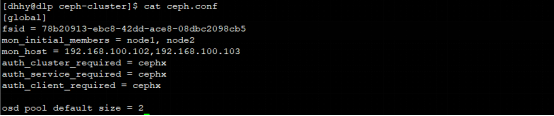

[yzyu@node1 ~]$ cat /etc/ceph/ceph.conf

[global]

fsid = 0fcdfa46-c8b7-43fc-8105-1733bce3bfeb

mon_initial_members = node1, node2

mon_host = 10.199.100.171,10.199.100.172

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

osd pool default size = 2

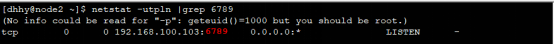

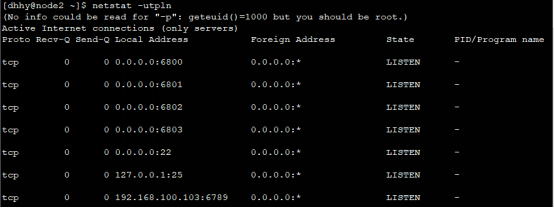

[yzyu@dlp ceph-cluster]$ ssh yzyu@node2 ## verify the listening ports, configuration file, and disk usage on node2

[yzyu@node2 ~]$ df -hT |grep lv2

/dev/vg2/lv2 xfs 20G 5.1G 15G 26% /var/local/osd2

[yzyu@node2 ~]$ du -sh /var/local/osd2/

5.1G /var/local/osd2/

[yzyu@node2 ~]$ ls /var/local/osd2/

activate.monmap active ceph_fsid current fsid journal keyring magic ready store_version superblock systemd type whoami

[yzyu@node2 ~]$ ls /etc/ceph/

ceph.client.admin.keyring ceph.conf rbdmap tmpmB_BTa

[yzyu@node2 ~]$ cat /etc/ceph/ceph.conf

[global]

fsid = 0fcdfa46-c8b7-43fc-8105-1733bce3bfeb

mon_initial_members = node1, node2

mon_host = 10.199.100.171,10.199.100.172

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

osd pool default size = 2

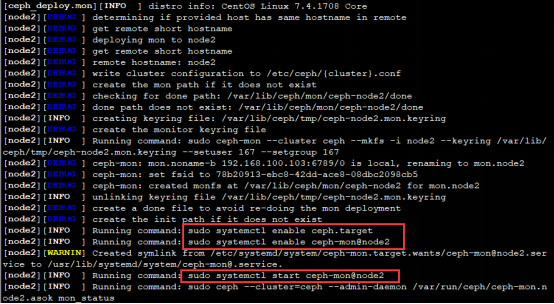

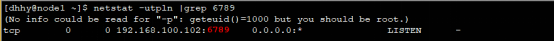

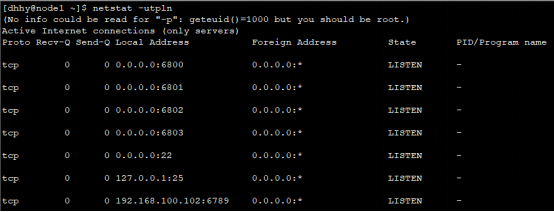

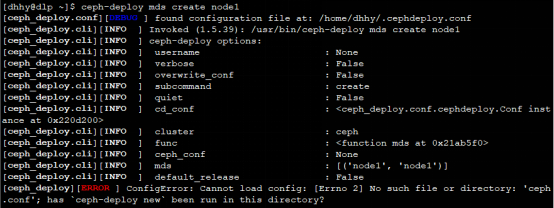

Configure the Ceph MDS (metadata server) daemon

[yzyu@dlp ceph-cluster]$ ceph-deploy mds create node1

[yzyu@dlp ceph-cluster]$ ssh yzyu@node1

[yzyu@node1 ~]$ netstat -utpln |grep 68

(No info could be read for "-p": geteuid()=1000 but you should be root.)

tcp 0 0 0.0.0.0:6800 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:6801 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:6802 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:6803 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:6804 0.0.0.0:* LISTEN -

tcp 0 0 192.168.100.102:6789 0.0.0.0:* LISTEN -

[yzyu@node1 ~]$ exit

Configure the Ceph client

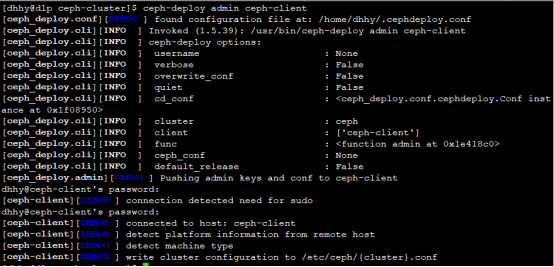

[yzyu@dlp ceph-cluster]$ ceph-deploy install ceph-client ## prompts for a password

[yzyu@dlp ceph-cluster]$ ceph-deploy admin ceph-client

[yzyu@dlp ceph-cluster]$ su -

[root@dlp ~]# chmod +r /etc/ceph/ceph.client.admin.keyring

[root@dlp ~]# exit

[yzyu@dlp ceph-cluster]$ ceph osd pool create cephfs_data 128 ## data pool

pool 'cephfs_data' created

[yzyu@dlp ceph-cluster]$ ceph osd pool create cephfs_metadata 128 ## metadata pool

pool 'cephfs_metadata' created

[yzyu@dlp ceph-cluster]$ ceph fs new cephfs cephfs_metadata cephfs_data ## create the file system (metadata pool first, then data pool)

new fs with metadata pool 2 and data pool 1

[yzyu@dlp ceph-cluster]$ ceph fs ls ## list the file systems

name: cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

[yzyu@dlp ceph-cluster]$ ceph -s

cluster 24fb6518-8539-4058-9c8e-d64e43b8f2e2

health HEALTH_WARN

clock skew detected on mon.node2

too many PGs per OSD (320 > max 300)

Monitor clock skew detected

monmap e1: 2 mons at {node1=10.199.100.171:6789/0,node2=10.199.100.172:6789/0}

election epoch 6, quorum 0,1 node1,node2

fsmap e5: 1/1/1 up {0=node1=up:active}

osdmap e17: 2 osds: 2 up, 2 in

flags sortbitwise,require_jewel_osds

pgmap v54: 320 pgs, 3 pools, 4678 bytes data, 24 objects

10309 MB used, 30628 MB / 40938 MB avail

320 active+clean
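The "too many PGs per OSD (320 > max 300)" warning in the status output follows from simple arithmetic: placement groups across all pools, times the replica count, divided by the number of OSDs. The sketch below reproduces that number; `pgs_per_osd` is a hypothetical helper, the 64 PGs are those of the pool that already existed before the two CephFS pools were created, and the value 32 in the second call is only an illustrative alternative, not a recommendation from the original author.

```shell
#!/usr/bin/env bash
# pgs_per_osd TOTAL_PGS REPLICA_SIZE NUM_OSDS
# Each PG is stored REPLICA_SIZE times, spread over NUM_OSDS OSDs.
pgs_per_osd() {
    echo $(( $1 * $2 / $3 ))
}

# This cluster: 64 + 128 + 128 PGs, "osd pool default size = 2", 2 OSDs.
pgs_per_osd $(( 64 + 128 + 128 )) 2 2

# Illustration: creating both CephFS pools with 32 PGs instead of 128.
pgs_per_osd $(( 64 + 32 + 32 )) 2 2
```

The first call gives the 320 reported by `ceph -s`; the second stays under the 300 threshold, which suggests that a smaller `pg_num` at pool creation time would avoid the warning on a two-OSD test cluster.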

[root@ceph-client ~]# mkdir /mnt/ceph

[root@ceph-client ~]# grep key /etc/ceph/ceph.client.admin.keyring |awk '{print $3}' >>/etc/ceph/admin.secret

[root@ceph-client ~]# cat /etc/ceph/admin.secret

AQCd/x9bsMqKFBAAZRNXpU5QstsPlfe1/FvPtQ==
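The `grep key ... >>` pipeline appends on every run and matches any line containing "key", so the secret file can end up with extra lines. A slightly stricter sketch follows; `extract_key` is a hypothetical helper, and the demo keyring is a made-up sample in the usual keyring layout that reuses the key string printed above.

```shell
#!/usr/bin/env bash
# Print the value of the first "key = ..." line in a Ceph keyring file.
# extract_key is an illustrative helper, not a Ceph command.
extract_key() {
    awk '$1 == "key" && $2 == "=" { print $3; exit }' "$1"
}

# Demo with a sample keyring in a temporary file.
demo_keyring="$(mktemp)"
printf '[client.admin]\n\tkey = AQCd/x9bsMqKFBAAZRNXpU5QstsPlfe1/FvPtQ==\n\tcaps mds = "allow *"\n' > "$demo_keyring"
extract_key "$demo_keyring"

# On the client, overwrite instead of appending and keep the file private:
#   extract_key /etc/ceph/ceph.client.admin.keyring > /etc/ceph/admin.secret
#   chmod 600 /etc/ceph/admin.secret
```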

[root@ceph-client ~]# mount -t ceph 10.199.100.171:6789:/ /mnt/ceph/ -o name=admin,secretfile=/etc/ceph/admin.secret

[root@ceph-client ~]# df -hT |grep ceph

10.199.100.171:6789:/ ceph 40G 11G 30G 26% /mnt/ceph

[root@ceph-client ~]# dd if=/dev/zero of=/mnt/ceph/1.file bs=1G count=1

1+0 records in

1+0 records out

1073741824 bytes (1.1 GB) copied, 14.2938 s, 75.1 MB/s

[root@ceph-client ~]# ls /mnt/ceph/

1.file

[root@ceph-client ~]# df -hT |grep ceph

10.199.100.171:6789:/ ceph 40G 13G 28G 33% /mnt/ceph

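1. If something goes wrong during configuration and you need to recreate the cluster or reinstall Ceph, first purge all data from the Ceph cluster with the following commands:

[yzyu@dlp ceph-cluster]$ ceph-deploy purge node1 node2

[yzyu@dlp ceph-cluster]$ ceph-deploy purgedata node1 node2

[yzyu@dlp ceph-cluster]$ ceph-deploy forgetkeys && rm ceph.*

2. When the dlp node installs Ceph on the nodes and the client, yum may time out. This is usually a network problem; rerunning the install command a few times generally helps;

3. When running ceph-deploy on the dlp node to manage the nodes, the current directory must be /home/yzyu/ceph-cluster/; otherwise ceph-deploy reports that it cannot find ceph.conf;

4. On the OSD nodes, the data directories /var/local/osd*/ must have mode 777 and be owned by user and group ceph;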

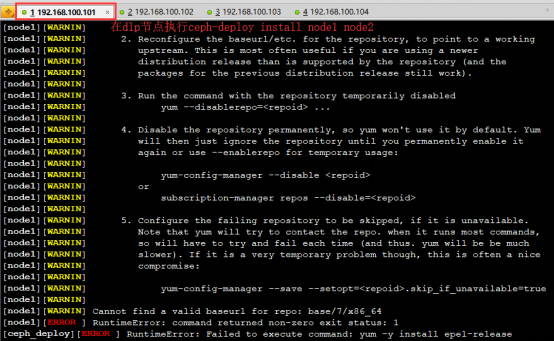

- 在dlp管理节点安装ceph时出现以下问题

解决方法:

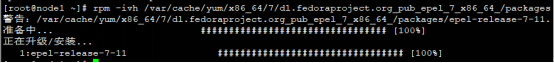

1.重新yum安装node1或者node2的epel-release软件包;

2.如若还无法解决,将软件包下载,使用以下命令进行本地安装;

6.如若在dlp管理节点中对/home/yzyu/ceph-cluster/ceph.conf主配置文件发生变化,那么需要将其主配置文件同步给node节点,命令如下:

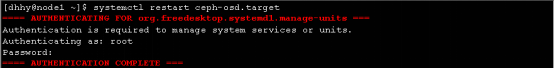

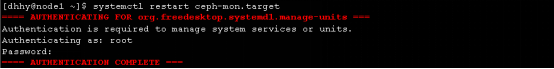

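After the nodes receive the configuration file, restart the daemons:

7. When checking the Ceph cluster status on the dlp admin node, the following appears; it is caused by the clocks being out of sync;

Restart the ntpd time service on the dlp node and synchronize the time on the nodes again, as shown below:

8. When managing the nodes from the dlp admin node, the working directory must be /home/yzyu/ceph-cluster/; otherwise ceph-deploy reports that it cannot find the main configuration file ceph.conf;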
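The commands for items 6 and 7 were only shown in screenshots. The sketch below is one plausible way to carry out those two steps; it is not the original author's exact commands. It assumes ceph-deploy's `config push` subcommand with `--overwrite-conf`, the systemd targets `ceph-mon.target` and `ceph-osd.target` on the nodes, and passwordless SSH and sudo for yzyu. The helper names (`run`, `push_config`, `resync_clocks`) are hypothetical, and by default the script only prints what it would do.

```shell
#!/usr/bin/env bash
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the commands only; set to 0 to execute them

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Item 6: push ceph.conf from the current directory, then restart the daemons on each node.
push_config() {
    local node
    run ceph-deploy --overwrite-conf config push "$@"
    for node in "$@"; do
        run ssh "$node" sudo systemctl restart ceph-mon.target ceph-osd.target
    done
}

# Item 7: restart ntpd on the admin node, then resync each node against it.
resync_clocks() {
    local ntp_server="$1" node
    shift
    run sudo systemctl restart ntpd
    for node in "$@"; do
        run ssh "$node" sudo /usr/sbin/ntpdate "$ntp_server"
    done
}

push_config node1 node2
resync_clocks 10.199.100.170 node1 node2
```

Run it from /home/yzyu/ceph-cluster/ on dlp, review the printed commands, and only then rerun with `DRY_RUN=0`.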

Reposted from //www.cnblogs.com/omgasw/p/11255224.html
