Scraping Baidu Wenku documents with a Python crawler

Using a Python crawler to scrape the text of Baidu Wenku documents

Without further ado, here is the code!

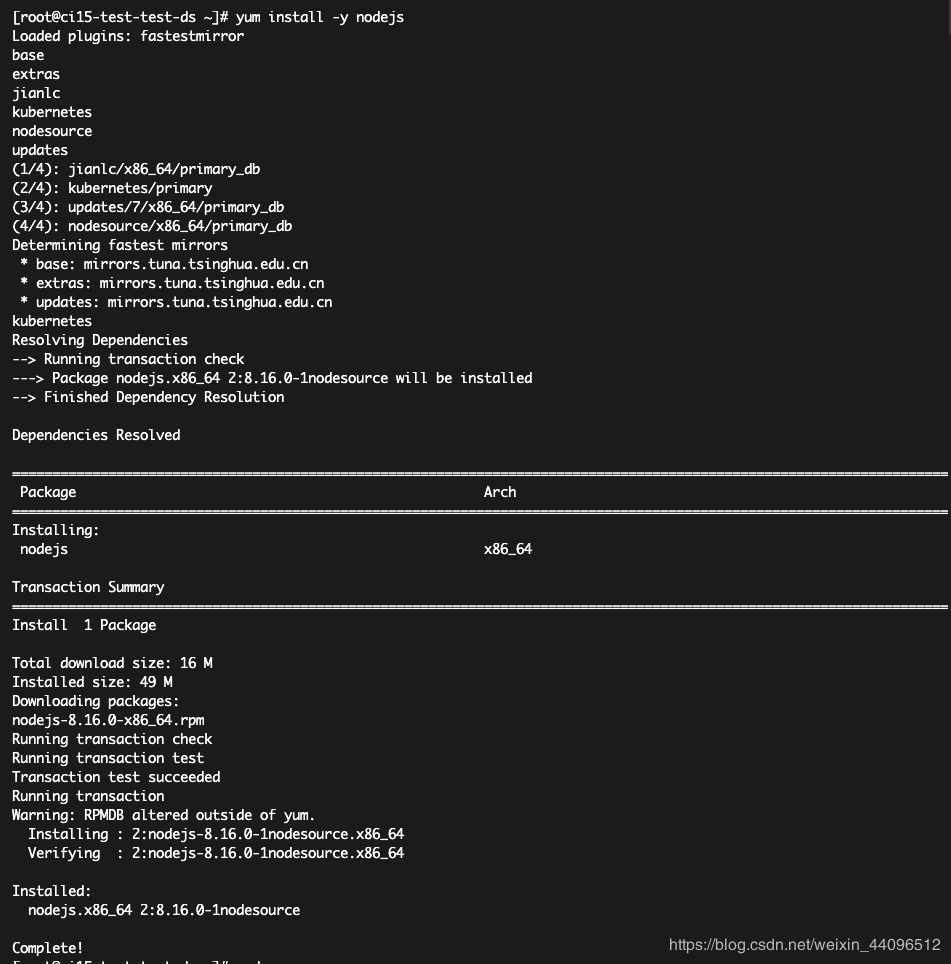

    import re

    import requests

    # Mobile User-Agent, so the request looks like it comes from a phone
    headers = {"User-Agent": "Mozilla/5.0 (Linux; Android 5.0; SM-G900P Build/LRX21T) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Mobile Safari/537.36"}


    def get_num(url):
        response = requests.get(url, headers=headers).text
        # Find the request parameters embedded in the page
        result = re.search(
            r'&md5sum=(.*)&sign=(.*)&rtcs_flag=(.*)&rtcs_ver=(.*?)".*rsign":"(.*?)",',
            response, re.M | re.I)
        reader = {
            "md5sum": result.group(1),
            "sign": result.group(2),
            "rtcs_flag": result.group(3),
            "rtcs_ver": result.group(4),
            "width": 176,
            "type": "org",
            "rsign": result.group(5),
        }
        # Get the tag of every page
        result_page = re.findall(r'merge":"(.*?)".*?"page":(.*?)}', response)
        # URL prefix for the document
        doc_url = "https://wkretype.bdimg.com/retype/merge/" + url[29:-5]
        n = 0
        for i in range(len(result_page)):
            # Fetch at most 10 pages per request; skip i == 0, where the batch is empty
            if i > 0 and i % 10 == 0:  # compare with ==, not "is"
                doc_range = '_'.join([k for k, v in result_page[n:i]])
                reader['pn'] = n + 1
                reader['rn'] = 10
                reader['callback'] = 'sf_edu_wenku_retype_doc_jsonp_%s_10' % (reader.get('pn'))
                reader['range'] = doc_range
                n = i
                get_page(doc_url, reader)
        else:  # runs once after the loop: the remaining pages (10 or fewer)
            doc_range = '_'.join([k for k, v in result_page[n:i + 1]])
            reader['pn'] = n + 1
            reader['rn'] = i - n + 1
            reader['callback'] = 'sf_edu_wenku_retype_doc_jsonp_%s_%s' % (reader.get('pn'), reader.get('rn'))
            reader['range'] = doc_range
            get_page(doc_url, reader)


    def get_page(url, data):
        response = requests.get(url, headers=headers, params=data).text
        # Turn the \uXXXX escape sequences into readable Chinese characters
        response = response.encode('utf-8').decode('unicode_escape')
        response = re.sub(r',"no_blank":true', '', response)  # clean the data
        result = re.findall(r'c":"(.*?)"}', response)  # match the text fragments
        result = '\n'.join(result)
        # Append, so that later batches do not overwrite earlier ones
        with open("C:/Users/86135/Desktop/百度文库.txt", 'a', encoding='utf-8') as f:
            f.write(result + '\n')


    if __name__ == '__main__':
        url = input("Enter the Baidu Wenku document URL: ")
        get_num(url)
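The batching step in `get_num` (at most 10 pages per request, then whatever is left over) can be pulled out into a small pure function that is easy to check without touching the network. This is only a minimal sketch, not the author's code: `batch_pages` and the `tagN` sample values are hypothetical names made up for illustration, and it assumes `result_page` is a list of `(tag, page)` tuples, which is what `re.findall` returns for a pattern with two groups.

```python
def batch_pages(page_tags, batch_size=10):
    """Yield (pn, rn, range_str) for consecutive batches of page tags.

    page_tags is assumed to be a list of (tag, page) tuples.
    """
    for start in range(0, len(page_tags), batch_size):
        chunk = page_tags[start:start + batch_size]
        # pn: 1-based index of the first page in the batch
        # rn: number of pages in the batch
        yield start + 1, len(chunk), "_".join(tag for tag, _ in chunk)


# Hypothetical sample data: 23 made-up tags standing in for real ones
sample = [("tag%d" % i, str(i)) for i in range(1, 24)]
batches = list(batch_pages(sample))
for pn, rn, doc_range in batches:
    print(pn, rn, doc_range)
```

Each yielded tuple maps onto the `pn`, `rn` and `range` entries of the `reader` dictionary, so the loop in `get_num` could fill those in and call `get_page` once per batch.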

The scraped result looks like this:
